More than almost any other market or research segment, genomics is vastly outpacing Moore’s Law.

The continued march of new sequencers and other instruments has created a flood of data, and the development of the DNA analysis software stack has created a tsunami. For some, high performance genomic research can only keep pace with innovation through custom hardware and software, co-designed and tuned for the task.

We have described efforts to build custom ASICs for sequence alignment, as well as the use of reprogrammable hardware for genomics research, but for centers that have defined workloads and are limited by performance constraints (with an eye on energy efficiency), the push is still on to find novel architectures to fit the bill. In most cases, efforts are focused on a single aspect of DNA analysis, such as de novo assembly. Hardware that is tuned (and tunable) to match the needs of multiple genomics workloads (whole genome alignments, homology searches, etc.) is ideal.

With these requirements in mind, a research team at Stanford, led by computing pioneer Bill Dally, has taken aim at both the hardware and software inefficiencies inherent to genomics by creating a new hardware acceleration framework that they say can offer a “125X and 15.6X speedup over the state-of-the-art software counterparts for reference-guided and de novo assembly of third generation (long) sequencing reads, respectively.” The team also reports significant efficiency improvements on pairwise sequence alignments (39,000X more energy efficient than software alone).

“Over 1,300 CPU hours are required to align reads from a 54X coverage of the human genome to a reference and over 15,600 CPU hours to assemble the reads de novo…Today, it is possible to sequence genomes on rack-size, high-throughput machines at nearly 50 human genomes per day, or on portable USB-stick size sequencers that require several days per human genome.”

The Stanford-based hardware accelerated framework for genomic analysis, called Darwin, has several elements that go far beyond creating or configuring custom or reprogrammable hardware. At the heart of the effort is Genome Alignment using Constant Memory Trace-back (GACT), an algorithm focused on long reads, which are more data- and compute-intensive to handle but provide more comprehensive results. GACT uses constant memory for the trace-back step to make the compute-heavy part of the workload more efficient.
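To make the constant-memory idea concrete, here is a minimal Python sketch of tile-based alignment. It is an illustration under stated assumptions, not the Darwin team's implementation: the function names (`align_tile`, `clip`, `tiled_align`), the `tile` and `overlap` parameters, the scoring values, and the left-to-right tile order are all hypothetical choices made for readability. The point it shows is that each dynamic programming matrix is bounded by the tile size, so trace-back memory does not grow with read length.

```python
def align_tile(a, b, match=1, mismatch=-1, gap=-1):
    """Global DP over one tile; returns the trace-back path as a string.

    'M' = diagonal step (match or mismatch), 'D' = consumes a base of `a`
    only, 'I' = consumes a base of `b` only. Scoring values are assumptions.
    """
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(score[i - 1][j - 1] + sub,
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    # Trace back from the bottom-right cell of this tile only.
    ops, i, j = [], len(a), len(b)
    while i > 0 or j > 0:
        sub = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub:
            ops.append("M"); i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            ops.append("D"); i -= 1
        else:
            ops.append("I"); j -= 1
    return "".join(reversed(ops))


def clip(ops, limit):
    """Keep the path prefix until either sequence has consumed `limit` bases."""
    used_a = used_b = 0
    for k, op in enumerate(ops):
        if used_a >= limit or used_b >= limit:
            return ops[:k]
        used_a += op != "I"
        used_b += op != "D"
    return ops


def tiled_align(a, b, tile=8, overlap=2):
    """Align `a` and `b` tile by tile; no matrix exceeds (tile+1) x (tile+1)."""
    assert 0 <= overlap < tile
    i = j = 0
    path = []
    while i < len(a) or j < len(b):
        ops = align_tile(a[i:i + tile], b[j:j + tile])
        is_last = i + tile >= len(a) and j + tile >= len(b)
        if not is_last:
            # Discard the tail of the path so the next tile overlaps this one.
            ops = clip(ops, tile - overlap)
        i += sum(op != "I" for op in ops)
        j += sum(op != "D" for op in ops)
        path.append(ops)
    return "".join(path)
```

For example, `tiled_align("ACGTACGTAC", "ACGTACGTAC", tile=4, overlap=1)` returns ten `M` steps while never allocating more than a 5x5 matrix. The overlap is a design trade-off in this sketch: a larger overlap re-aligns more bases per tile, which costs compute but makes the stitched path less sensitive to where tile boundaries fall.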

The use of this algorithmic approach “has a profound hardware design implication,” the team explains, because “all previous hardware accelerators for genomic sequence alignment have assumed an upper-bound on the length of sequences they align or have left the trace-back step in alignment to software, thus undermining the benefits of hardware acceleration.” Also critical to the effort is D-SOFT, a filtering algorithm that cuts down the search space for dynamic programming and can be tuned for sensitivity.
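The filtering step can be pictured with a short Python sketch. This is a simplified seed-counting filter written for illustration, not D-SOFT itself: it assumes a filter that looks up short exact seeds (k-mers) from the query in a reference index, groups the hits into diagonal bands, and forwards only bands with enough hits to the expensive dynamic programming stage. The names `build_index` and `candidate_bands` and the parameters `k`, `band`, and `min_hits` are hypothetical.

```python
from collections import defaultdict


def build_index(ref, k):
    """Map every k-mer in the reference to the positions where it occurs."""
    index = defaultdict(list)
    for pos in range(len(ref) - k + 1):
        index[ref[pos:pos + k]].append(pos)
    return index


def candidate_bands(ref, query, k=4, band=8, min_hits=3):
    """Return diagonal bands with at least `min_hits` seed hits.

    A hit at reference position r and query position q lies on diagonal
    r - q; diagonals are grouped into bands of width `band`. Raising
    `min_hits` makes the filter stricter; lowering it makes it more
    sensitive at the cost of more candidates for the alignment stage.
    """
    index = build_index(ref, k)
    hits = defaultdict(int)
    for q in range(len(query) - k + 1):
        for r in index.get(query[q:q + k], ()):
            hits[(r - q) // band] += 1
    return sorted(b for b, n in hits.items() if n >= min_hits)


query = "ACGTGCATCGAT"
ref = "T" * 16 + query + "G" * 12
print(candidate_bands(ref, query))  # bands worth aligning in full
```

In this sketch only the surviving bands would be handed to the alignment stage, which is how a filter of this kind shrinks the dynamic programming search space. The threshold plays the role of the sensitivity knob described above.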

To put this in context, keep in mind that long sequence reads improve the quality of genome assembly and can be very useful in personalized medicine because they make it possible to identify variants and mutations. However, this capability comes at a price. The team notes that mean error rates can be as high as 40% in some cases, and while this error can be corrected, doing so takes time, which cuts into the performance and efficiency of the process. The tunable nature of Darwin helps correct for this, and because the algorithms are fit to the hardware, the result is more accuracy, delivered faster and with less power consumption.

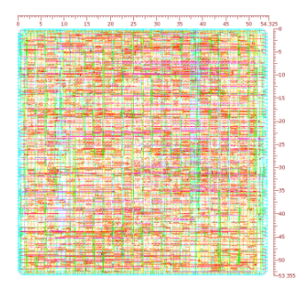

On the hardware side, the team has already fully prototyped the concept on FPGA and performed ASIC synthesis for the GACT framework on a 45nm TSMC process. In that prototyping effort, they found that pairwise sequence alignment saw a 762X jump over software-only approaches and was over 39,000X more energy efficient. “The parameters of D-SOFT can be set to make it very specific even for noisy sequences at high sensitivity and the hardware acceleration of GACT results in 762X speedup over software.”

Although D-SOFT is one of the critical elements behind the tunability required for both accuracy and efficiency, it is also the bottleneck in the hardware/software design, eating up 80% of the overall runtime. The problem is not memory capacity but access patterns, which the team expects it might address by speeding up random memory access with an approach like eDRAM. Removing this barrier would allow the team to scale Darwin’s performance. Unlike in other custom designs, memory capacity is not the constraint here, since it uses only 120 MB for two arrays, which means far more can fit on a single chip.

“Darwin handles and provides high speedup versus hand-optimized software for two distinct applications: reference-guided and de novo assembly of reads, and can work with reads with very different error rates,” the team concludes, noting that Darwin is the “first hardware-accelerated framework to demonstrate speedup in more than one class of applications, and in the future, it can extend to alignment applications even beyond read assembly.”
