When ARM officials and partners several years ago began talking about pushing the low-power chip architecture from our phones and tablets and into the datacenter, the initial target was the emerging field of microservers – small, highly dense and highly efficient systems aimed at the growing number of cloud providers and hyperscale environments where power efficiency was as important as performance.

The thinking was that the low-power ARM architecture found in almost all consumer devices would fit into the energy-conscious parts of the server space that Intel was having trouble reaching with its more power-hungry Xeon processors. It made sense, and there was a lot of excitement about the idea of ARM in the datacenter, particularly after ARM’s release of its 64-bit ARMv8-A architecture, which included such datacenter features as better support for virtualization.

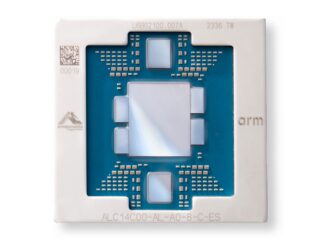

A number of chip makers – including Applied Micro, Calxeda, Cavium, and Marvell – pushed ahead with plans to bring ARM-based systems-on-a-chip (SoCs) to market, and have done so over the years (Applied Micro with its X-Gene chips, Cavium with ThunderX and Marvell with Armada XP). Calxeda came out early with server chips, but ran out of money and disappeared.

Proponents over the past several years also have pushed the idea of ARM chips in general purpose servers, rather than simply in smaller, low-power microservers. Larger players AMD and Qualcomm also have targeted the server, though others, notably Samsung and Broadcom, have backed away from plans. Server makers, including Hewlett Packard Enterprise, Dell, Lenovo, and Cray, have rolled out or tested ARM-based systems.

However, the playing field has changed over the past couple of years. Chip makers have taken longer than originally expected to roll out ARM server SoCs, and during that time Intel has worked hard to drive down the power consumption of its Xeon server processors. In addition, others have begun angling to be the primary alternative silicon provider to Intel in the datacenter (Intel owns more than 99 percent of the server chip space). In particular, IBM, with its OpenPower effort, wants to be the top option for organizations that want a second chip supplier to help drive down costs and accelerate innovation while also protecting them in case something goes awry in the Intel supply chain.

Still, the continued rise of cloud environments, mobile computing, the Internet of Things, and hyperscale datacenters is helping to drive the need for highly efficient processors and servers, which is all keeping ARM in the discussion. That can be seen in recent situations where ARM is being embraced. Fujitsu is moving away from its own Sparc64 architecture and adopting the ARMv8-A architecture for SoCs that will power the next generation of its K supercomputer, currently seventh on the Top500 list of the world’s fastest supercomputers. With the internally developed ARM-based SoCs, Fujitsu officials want to improve the performance-per-watt of the upcoming Post-K supercomputer, an exascale system they say will be 100 times faster than the current machine. In addition, the Mont Blanc Project in Europe is developing with Atos-owned system-maker Bull a prototype exascale supercomputer powered by Cavium’s ARM-based ThunderX2 chips, while Qualcomm is creating a joint venture in China called Huaxintong Semiconductor Technology to develop an ARM-based server chip for the Chinese market.

This demand is continuing to fuel tests comparing the power efficiency and performance of ARM-based systems versus those powered by Intel and its X86 architecture. The results of a recent study were published by the IEEE. In their effort – titled A Feasible Architecture for ARM-Based Microserver Systems Considering Energy Efficiency – researchers Sendren Sheng-Dong Xu, a member of the IEEE, and Teng-Chang Chang set out to test the performance and power efficiency of microservers powered by ARM and Intel chips when running parallel and server workloads. They created what they called an ARM-based server cluster board (SCB) running four 32-bit quad-core chips from Marvell, the Armada XP MV78460 SoC, running at 1.2GHz. The SCB also included both PCIe and Gigabit Ethernet links for communication between the chips and the nodes. The X86 system was based on a single quad-core Core i7 920 chip running at 2.67 GHz. While each core of the ARM chip can run a single instruction thread, the Intel chip can run two threads per core.

“In the era of Industry 4.0 and the Internet of Things (IoT), key issues concerning performance and energy efficiency have to be taken into account for systems design, for example, the applications of embedded systems and sensor networks,” the researchers wrote. “For multicore chip vendors, increasing processor core density is a typical method used to address the above stated issues for processors. . . . To further improve the density within the server case, these chip multicore processors are often packaged as a separate device, external to the host system.”

In their test of scalability, Xu and Chang used all four system nodes to measure scale-up and scale-out performance. For the two case studies running parallel and server workloads, only a single ARM node was used to match the configuration of the x86-based system. In testing the scalability of the SCB, they used the NPB benchmark suite and found that under most workloads – such as the embarrassingly parallel program, or EP – performance scales both within the quad-core chip and across the processors. That said, the Integer Sort performance doesn’t scale well beyond eight cores, because off-chip communications play a large role in the execution time.

When compared with the Intel system running both parallel and server workloads, the ARM-based microserver showed significant energy savings that the study’s authors said greatly offset the slower performance when compared with the X86-based system. With parallel workloads, Xu and Chang used both the NPB and Hadoop benchmarks and found that in a single-core experiment, the ARM system consumed at least 39 percent less energy than the X86 system, though it was 8.6X slower in its average execution speed, according to NPB numbers. In the experiment involving four processor cores, there was a 26 percent energy savings.
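To put the single-core figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes energy is simply average power multiplied by run time, which the article does not spell out, and the function name is ours for illustration, not something from the study. Under that assumption, a system that is 8.6X slower yet uses 39 percent less energy must be drawing only a small fraction of the other system's average power.

```python
def implied_power_ratio(energy_saving: float, slowdown: float) -> float:
    """Average power of the slower system relative to the faster one.

    Assumes energy = average power * run time (our simplification).
    energy_saving: fraction of energy saved, e.g. 0.39 for 39 percent.
    slowdown: how many times longer the slower system runs, e.g. 8.6.
    """
    if slowdown <= 0:
        raise ValueError("slowdown must be positive")
    if not 0.0 <= energy_saving < 1.0:
        raise ValueError("energy_saving must be in [0, 1)")
    # E_arm / E_x86 = (P_arm * T_arm) / (P_x86 * T_x86)
    # so P_arm / P_x86 = (E_arm / E_x86) / (T_arm / T_x86)
    return (1.0 - energy_saving) / slowdown


# Single-core NPB figures quoted above: 39 percent less energy, 8.6X slower.
ratio = implied_power_ratio(0.39, 8.6)
print(f"Implied ARM/X86 average power ratio: {ratio:.3f}")  # about 0.071
```

Because the study reports "at least" 39 percent savings, the roughly 7 percent figure is best read as an upper bound on the implied power ratio, not a measured value.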

“It is important to note that since a large portion of the benchmarks involves floating point operations, the hardware support for floating point operations in the ARM processor should be enabled; otherwise, the ARM system takes too much time to deliver the results; it is about 10X slower using software emulation for the floating point operations,” the authors wrote.

In parallel workloads using Hadoop benchmarks, the systems were tested with the Grep, WordCount, and Sort programs with data sizes ranging from 128 MB to 1,024 MB. Again, there was a tradeoff. Energy savings when running small data sizes ran from 44 percent to 75 percent, but with a four- to nine-times performance slowdown. However, the numbers fell as the data size grew.

“The reason for the degradation in energy saving efficiency is that we used the USB drive (USB 2.0 standard) as the primary means of data storage, and extensive disk I/O operations on the ARM system limit the overall performance,” they wrote. “From our experiments, when the data size is larger than 256 MB, the I/O performance delivered by the ARM system is several orders of magnitude larger than that achieved by the X86 system. For example, the ARM server requires 6.1X longer than the X86 system to transfer 1 GB of data. This problem can be solved by using a faster storage media, such as the SATA II disk drive, which is supported by the Marvell processor.”

The server workloads were tested using the web server lighttpd benchmark and involved the systems running 50 concurrent connections and a total of 100,000 web requests of different data sizes. With smaller web files – such as 512 bytes – the ARM server consumed half of the power that the X86 server did, but 7.2X more time was required to handle the requests. As the data sizes became larger, “the loading was gradually shifted to the network subsystem for transmitting the web files, and hence, the performance gap between both systems narrowed as the impact of CPU computing power on the performance decreased. More specifically, when the data size was larger than 2 KB, the energy benefit of the ARM processor outweighed its performance loss.”
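The small-file result is easier to read with the arithmetic written out. The sketch below assumes "half of the power" means average power draw rather than total energy, which the article leaves ambiguous, and again treats energy as power multiplied by time. The function names are hypothetical. On that reading, halving power while taking 7.2X longer costs more total energy, which is consistent with the authors' remark that the energy benefit only wins out at larger file sizes.

```python
def energy_ratio(power_ratio: float, time_ratio: float) -> float:
    """Total energy of system A relative to B, assuming energy = power * time."""
    return power_ratio * time_ratio


def break_even_slowdown(power_ratio: float) -> float:
    """Slowdown at which the lower-power system uses the same total energy."""
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return 1.0 / power_ratio


# 512-byte web files, as quoted above: half the power, 7.2X the time.
print(f"Energy ratio (ARM/X86): {energy_ratio(0.5, 7.2):.1f}")      # 3.6
print(f"Break-even slowdown:    {break_even_slowdown(0.5):.1f}X")   # 2.0X
```

If the power ratio stayed near one half, the ARM server would need to be no more than about 2X slower to come out ahead on energy. The study's actual crossover at 2 KB depends on measured figures that are not reproduced here.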

More details can be found in the study, but ARM chip makers have some more ammo when pitching their products to cloud providers and other large datacenter operators. That said, the authors noted other work that needs to be done, including building 64-bit servers and comparing the efficiency of those to 32-bit systems as well as using an open source implementation of OpenCL for ARM – such as Pocl – in a 64-bit SCB.

I applaud system assessments generally, the work and applied science in conducting them, however the very different processors relied in this study, Marvell Armada XP MV78460 running at 1.2GHz and i7 920 running at 2.67 GHz, make this exercise, especially in today’s terms, irrelevant.

I see IEEE original and this republication, a competitive detractor, lacking relevant commentary addressing what is ‘state of the art’.

The exercise itself, on resource investment in platform choice, could have been better conceived. I’m sorry to say flawed science poorly administered.

Mike Bruzzone, Camp Marketing

It’s difficult to get excited about any of these savings when the processors are all from the last decade. The Core i7 920 that was mentioned is a DT processor released in 2008. And the ARM designs are old too. I think I was using one of them 3 phones ago.