Last week we discussed the projected momentum for FPGAs in the cloud with Deepak Singh, general manager of container and HPC projects at Amazon Web Services. In the second half of our interview, we delved into the current state of high performance computing on Amazon’s cloud.

While the company tends to offer generalizations rather than specific breakdowns of “typical” workloads for different HPC application types, the conversation revealed a continued emphasis on rolling out new instances that serve both HPC and machine learning, ongoing pressure on ISVs to expand their license models, and further work to make complex workflows run more seamlessly. For a time, mostly a few years ago, AWS teams made a concentrated push to detail HPC use cases: workloads with tight coupling, MPI stacks, and custom hardware configurations. The pace of newly publicized use cases with genuine high performance demands has slowed, but AWS remains confident that it is on a path to keep reeling in the supercomputing set. Of course, not everyone who uses AWS for HPC at scale is willing to talk about it.

As we outlined in the past, the company has been at the fore for HPC end users, adding advanced 10GbE networking, GPU acceleration, diverse CPU offerings for a range of compute-intensive jobs, and now, FPGAs. These hardware investments, coupled with more ISV partnerships for HPC codes (and new use cases to support the viability of those at scale in a cloud environment) have helped AWS keep pace with what Singh says is sustained interest from traditional HPC users—and new users with machine learning needs tied to HPC hardware.

“The big discussion we’ve been having when it comes to HPC is around usability,” Singh explains. “Making sure we have an optimized stack. People in HPC are used to having a particular hardware stack, a certain MPI stack, math libraries, and other specific tooling, but when you look closer, a lot of this is built around the idea that things are static. That there is a fixed-size cluster and a bunch of users with a queue.” To move these users past that static model, the company introduced AWS Batch, which is exactly what it sounds like: a managed way to run batch jobs without getting involved in extensive provisioning or other complexities.

We asked Singh what generalizations could be made about the company’s HPC user base as we kick off 2017. Requirements unsurprisingly vary dramatically, but the sweet spot is users with scale-out workloads. Life sciences workloads come to mind first here, which is fitting, since these were among the first large-scale users AWS could point to when enticing HPC to the cloud. Another area where AWS is seeing larger numbers of new users is engineering. This growth has been driven by use cases showing HPC codes scaling further on AWS than they have even on on-site supercomputers, as was the case with a recent ANSYS scalability run that used over 2,000 cores on AWS. That, in turn, has driven adoption in the many areas where computational fluid dynamics (CFD) looms large in both research and enterprise. Singh adds that the HPC set is also demanding tighter integration of visualization capabilities, both for simulation workloads and for animation, among others.

If there is any large company with keen insight into the next direction for applications at scale, it is certainly AWS. Although the vast majority of workloads running on the Amazon cloud fall into the general purpose category, AWS keeps close tabs on what is emerging. While the addition of an accelerator like the high-end Nvidia Tesla K80 was aimed at high performance computing users (such accelerators are generally found only on top-tier supercomputers), Singh says his team saw overlapping capabilities for the top end of the machine learning user base, a user set that continues to grow. “HPC users fall into two categories,” Singh tells The Next Platform. “There is the user base of researchers, scientists, and engineers, and then another group that develops these applications. For these users, scale is important, tight coupling is important, being able to run MPI, accelerators, and various math libraries all matter. But modern machine learning is something like an HPC workload; we’re looking at both from a usability standpoint. And it’s also why we have invested in strong networking and parallel computing capabilities.”

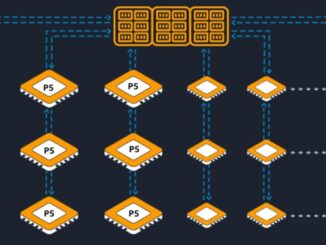

In short, what is good for the relatively small base of supercomputing application customers is also likely to attract a potentially new (and lucrative) group of users in machine and deep learning. Both groups are looking to use HPC hardware via the AWS cloud (FPGAs, GPUs, and compute- or memory-heavy instances). While HPC adoption may be somewhat limited by the number of scientific applications that can run in a public cloud environment (because of licensing, complexity, and so on), the growing machine learning set is limited only by imagination, since so many open machine learning frameworks are primed and ready for the public cloud. Singh pointed to the dual-market benefits of some of their higher-end offerings, including GPUs. “HPC was a driver for us to invest in bringing in a big Tesla GPU but so was significant interest from the machine learning community. The P2 instances are designed to meet the needs of a broad set of users; everything from people running CFD codes to molecular dynamics, to people doing deep learning. It is for engineering, science, and deep learning.”

While many machine learning packages come without license restrictions, licensing is one area where HPC adoption in the cloud might lag behind. Several ISVs have made their code available to the AWS user base, but licensing models (and how to charge when infrastructure is no longer static or priced per core) remain a sticking point for some HPC code makers. On license scalability, Singh says that “progressive ISVs are now offering options for scalability that combine traditional annual license terms with shorter-term scalable licenses. The shorter term licenses may be priced at a premium by ISVs to incentivize customers to purchase longer term, more traditional licenses for high utilization workloads.”

Singh expects that, over time, HPC ISVs across industries will adopt combinations of annual license terms and pay-per-use models that address the scalability needs of end users while also providing ISVs with the predictable revenue they need to improve and support their technical software products.

Software license models aside, Singh says there is still a path to be cleared before users move core workloads to AWS. And while it is difficult to make broad generalizations about a user base as diverse as HPC, Singh made the case that AWS’s investments for machine learning and deep learning can pay off twice, since specialized hardware can be tuned to fit two lucrative niches. We expect this will spur the cloud provider to keep beefing up its offerings for compute at the high end, whether that is defined by scale (core counts), application complexity, or the specialized hardware required.
