The ultimate success of any platform depends on the seamless integration of diverse components into a synergistic whole – well, as much as is possible in the real world – while at the same time being flexible enough to allow for components to be swapped out and replaced by others to suit personal preferences.

Is OpenHPC, the open source software stack aimed at simulation and modeling workloads that was spearheaded by Intel a year ago, going to be the dominant and unifying platform for high performance computing? Will OpenHPC be analogous to the Linux distributions that grew up around the open source Linux operating system kernel, in that it creates a platform and helps drive adoption in the datacenters of the world because it makes HPC easier and uniform?

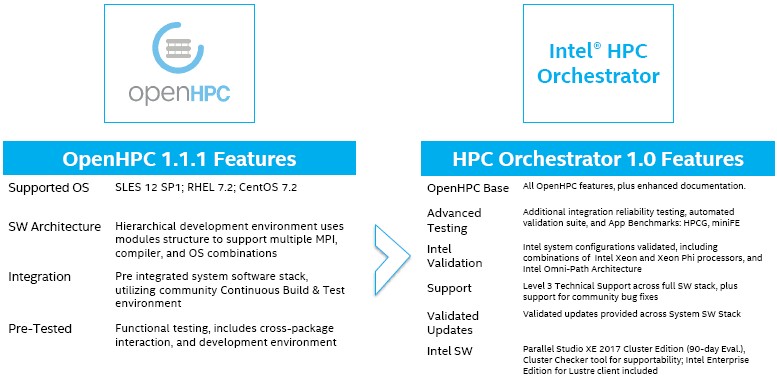

These are good questions, and ones that are not, as Betteridge’s Law of Headlines would suggest, easily answered with an emphatic “No.” OpenHPC is now more mature and better integrated, and in the wake of the SC16 supercomputing conference in Salt Lake City is starting to be shipped as a commercial product by system makers Dell and Fujitsu and, in some special cases, by Intel itself. Others will follow in providing commercial variants of OpenHPC, which Intel commercializes as HPC Orchestrator, and we think that in the fullness of time OpenHPC will be ported to ARM and Power platforms, too. While Hewlett Packard Enterprise did not make any noise about it at SC16 and Intel did not mention it, the HPE Core HPC Software Stack v2.0 was quietly launched at the conference and it is based on OpenHPC with extra goodies tossed in from HPE and special tuning and certification for ProLiant servers and InfiniBand and Omni-Path networks.

And we fully expect OpenHPC to eventually be intermingled with the OpenStack cloud controller, the Open Data Platform variant of the Hadoop data analytics stack, and something that would have been called the OpenAI machine learning framework if that name had not already been picked by Elon Musk of SpaceX and Reid Hoffman of LinkedIn for their AI advocacy group.

In the end, there will only be one platform, and it will have to do everything. Thank heavens for all of us that everything in that platform and running on it keeps changing just enough to keep things really interesting. . . .

Stacking Up Open Source HPC

“This is something that many of our largest customers have asked us to do over the years,” explains Charlie Wuischpard, vice president of the Scalable Data Center Solutions Group at Intel and, significantly, formerly the CEO of supercomputer maker and stack builder Penguin Computing. “We saw that there was a gap for an open source, community based software stack for high performance computing. There are all sorts of variants in the world today in terms of cluster stacks, and they have varying degrees of adoption and various degrees of usability, let’s just say.”

Since the launch of the OpenHPC project, which included supercomputer makers Atos/Bull, Cray, Dell, Fujitsu, Hewlett Packard Enterprise, Lenovo, and SGI (now part of HPE) as well as some of the largest HPC centers in the world, the organization has grown to 29 members and has had over 200,000 downloads of the software stack, which includes everything needed to run the compute portion of an HPC-style distributed system. (You are on your own for file systems and object storage, but we think that will change, too, as elements are added to the stack.)

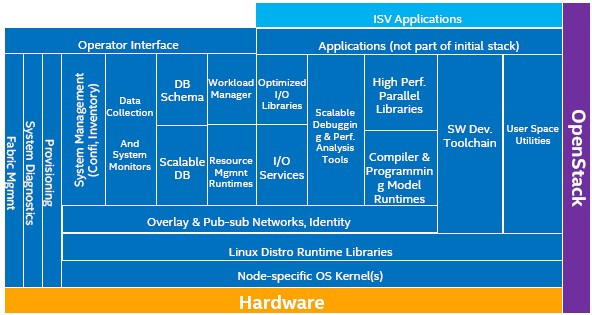

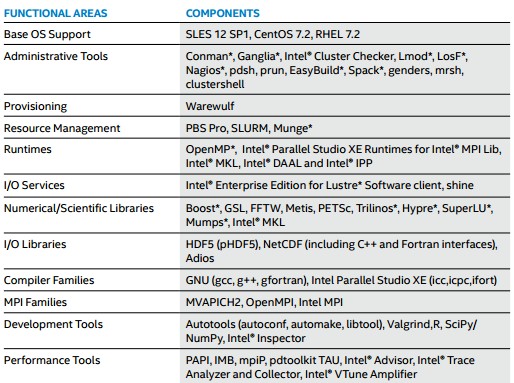

In a sense, Intel and the OpenHPC community are doing the same job that Linus Torvalds, the benevolent dictator of the Linux kernel, does for the heart of the open source operating system. And that is defining what elements go into the software, while letting community members swap out and replace components without breaking the stack. The HPC Orchestrator stack includes a slew of components, starting with officially supported Linux releases and building the HPC platform up from there:

The OpenHPC platform includes various implementations of the Message Passing Interface (MPI) protocol that is at the center of distributed HPC simulations (and which will find its uses in parallel machine learning clusters), as well as various compilers (in this case, those from Intel and the GNU open source compilers), and system management, cluster management, workload scheduling, and application development tools and the math libraries that are key to running simulation and models.

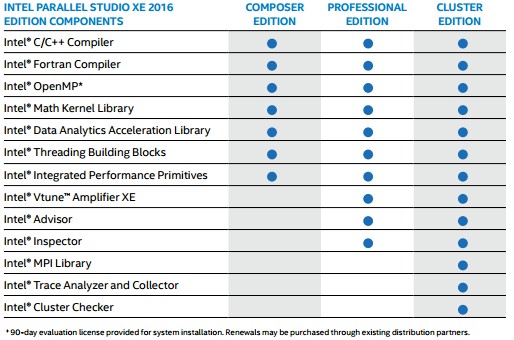

While the OpenHPC 1.2 release was announced at SC16 two weeks ago, the earlier OpenHPC 1.1.1 release is the one that Intel is using to make its commercial-grade HPC Orchestrator version. HPC Orchestrator comes in three flavors, which correspond to the three different levels of the integrated Intel Parallel Studio XE 2016 compiler tools, known as Composer Edition, Professional Edition, and Cluster Edition. Here is how they break down:

The beauty of the OpenHPC approach is that SUSE Linux, Red Hat, or Canonical, the three major Linux distributors, could do their own rev on OpenHPC, providing paid-for tech support on this stack and using the open source GCC compilers. (Thus far, Canonical does not seem to be interested much in porting OpenHPC to its Ubuntu Server.) Anyone else could create a variant of OpenHPC that uses other compilers, schedulers, or workload managers. The stack could be ported to Unix or Windows Server, too, if that seemed useful, but this would be a lot of work for no real gain. HPC embraced Linux nearly two decades ago, and there does not seem to be any turning back. The same holds true for Hadoop and other data analytics platforms and for the evolving machine learning frameworks. They are all running atop Linux.

Wuischpard says that OpenHPC is an “active, busy community now” and it is not just being driven by Intel, which very much wants HPC to run best on its compute and networks and is creating OpenHPC as a means of leveling the playing field while still being able to tune the stack for its own hardware. Intel’s bet is that it can get a dominant share of new HPC this way, and it is a safe bet. IBM would be wise to get OpenHPC running on Power, just as Linux on Power has pulled level with Linux on X86 thanks to Power chip support from Red Hat, SUSE Linux, and Canonical. The ARM community is working to get to parity with OpenHPC, too. (More on that in a moment.)

With OpenHPC, high performance computing centers and their enterprise analogs can go download the software and just use it in its raw state. With HPC Orchestrator, Intel is looking for someone, like one of the server OEMs that has a presence in HPC, to actually distribute the OpenHPC stack on their machines and, significantly, with the Intel management tools and compilers preloaded and tuned for Xeon and Xeon Phi compute and Omni-Path networking.

That integration is worth a lot to Intel, and it is what it gets for its troubles. These HPC Orchestrator distributors – so far Dell and Fujitsu have signed up – do Level 1 and Level 2 tech support on the software stack, with Intel providing the final Level 3 backstopping when something goes wrong deep in the stack. Neither has provided pricing on their HPC Orchestrator support, but Dell hints to The Next Platform that it will be on the order of “hundreds of dollars per node,” not the crazy many thousands of dollars it costs to get a modern Hadoop stack from a commercial distributor.

The Cluster Checker tool that Intel and its partners have been using to validate cluster configurations as part of its Cluster Ready program is included in the HPC Orchestrator stack, by the way, and so is the Intel variant of the Lustre client, which allows for compute nodes on a cluster to push and pull data from external Lustre parallel storage clusters.

“The main point, especially as we look at the world going forward, is that software plays a crucial role in gluing these advanced systems together,” says Wuischpard. “Expect to see more from us around the software stack, not just in terms of delivering systems at these scales for the wider industry, but particularly at the top end of the supercomputing world where specific and unique technical challenges are starting to emerge. But we are not talking about that today.”

Whatever it is, expect to see it emerge first on the “Aurora” supercomputer that Intel is building with Cray for Argonne National Laboratory for delivery in 2018.

The ARM And HPC Hammer

IBM has been mum about whether it plans to support OpenHPC – and with its own cluster scheduling and MPI stack (based on software it acquired through Platform Computing several years ago), we suspect that it will instead build a PowerHPC stack akin to the PowerAI machine learning stack that it just announced at SC16. But, then again, IBM could endorse OpenHPC and plug in the Platform tools and its GPFS file system and certify the whole thing to run on Power.

The ARM community is hoping to make inroads into the HPC community, and having support for Linux that was on par with that of X86 iron was the second step toward that goal. (Having 64-bit ARM server chips that could at least compete a little with Xeons was the first step.)

ARM Holdings, the company that licenses the ARM chip intellectual property, was one of the original members of the OpenHPC community and it is, practically speaking, the main organization that makes OpenHPC truly open. At the SC15 supercomputing conference, ARM announced that it was tuning up the compilers for 64-bit ARMv8 chips to do HPC math, and at ISC16 earlier this summer ARM outlined its work with Fujitsu to bring Scalable Vector Extensions to the ARM architecture in future supercomputing chips.

Darren Cepulis, datacenter architect and server business development manager at ARM Holdings, tells The Next Platform that programmers at the chip designer are working to get OpenHPC running on various ARM processors, namely the ThunderX line from Cavium and the X-Gene line from Applied Micro. The Texas Advanced Computing Center is working with ARM to get OpenHPC working on these ARM chips, and they have taken the latest-greatest OpenHPC 1.2 that was just announced at SC16 and are getting it working with the continuous integration build systems that the OpenHPC community uses, which are hosted at TACC.

But don’t expect ARM Holdings to be a software distributor. (Although you could make a strong argument that it should have OpenHPC and Lustre distros of its own, just as Intel does, if it wants to compete in HPC.)

“This is our first step,” cautions Cepulis. “Open source is not a polished commercial product, and it is not something that can be deployed straight to the end user. You are going to have to go through an OEM, an integrator, or a distro at some level. So for ARM, we are looking at this as a first step. We are collaborating with Red Hat and SUSE Linux, and they may take it and bring it into their own HPC packages within their distributions. And we do partner heavily with Cray and Lenovo and the major OEMs as we try to support various HPC platforms down the road in 2017.”

Dell and Hewlett Packard Enterprise could do ARM support for OpenHPC, and with Fujitsu building the Post-K supercomputer for the Japanese government based on ARM and being an HPC Orchestrator distributor already, it seems likely that Fujitsu will be an early and enthusiastic supporter of OpenHPC on ARM – mainly because it makes the job of creating a software stack on that Post-K machine all that much easier. Cepulis tells The Next Platform that he does not think that either Cavium or Applied Micro will provide OpenHPC support, and this stands to reason. They need to stay focused on chip design and manufacturing.

There is not a lot of work left to do for OpenHPC to run on ARM. Cepulis says that 98 percent of the packages for OpenHPC compile down to run on ARM with the 1.2 release that just came out. But to have a true HPC platform running exclusively on ARM means getting Lustre and/or GPFS working on ARM chips, too. Cepulis says that the Lustre client has worked on ARM for several years, but as for the server side of the parallel file system, the ARM collective is “still trying to figure that out.” An open source file system seems to be in order, and it could turn out that the BeeGFS parallel file system will be anointed as the preferred file system for the commercialized OpenHPC distro for ARM – when, not if, someone steps up to create one. SUSE has already said it will support OpenHPC on ARM, so it would not be a stretch to add BeeGFS and provide some differentiation with Red Hat and try to preserve some of the substantial footprint that SUSE Linux has in the traditional HPC arena.
