Close to a year ago, when more information was becoming available about the Knights Landing processor, Intel released projections for its performance relative to two-socket Haswell machines. As one might imagine, the projected improvements were impressive. Now that there are systems on the ground that can be optimized and benchmarked, we are finally getting a boots-on-the-ground view of the performance bump.

As it turns out, optimization and benchmarking on the “Cori” supercomputer at NERSC show that those figures were right on target. Douglas Doerfler, an engineer in NERSC’s Advanced Technologies Group and one of the co-authors of a new report detailing the optimization steps and comparative results, says the measured performance is consistent with what Intel targeted for Knights Landing (KNL) relative to two-socket Haswell machines. Cori will contain both kinds of node once a pending upgrade is complete, and the teams already have some KNL nodes. “We are seeing anywhere from equal performance to Haswell to 2-2.5X performance improvement.”

If there is one lesson from the full report and our chat with Doerfler, however, it is that getting this performance is not simply a matter of throwing more cores at problems. A few areas are critical for centers looking to implement KNL, and they all take some serious software footwork. As we have described before, one of the most important for nearly all applications on KNL is the availability of high-bandwidth memory. This memory is very fast from a bandwidth perspective but limited in capacity, so users need to manage the memory hierarchy explicitly and also understand how to harness the cache mode built into KNL.
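Using the high-bandwidth memory explicitly (KNL’s “flat” mode, as opposed to cache mode) means deciding which data lives in the small, fast MCDRAM. The sketch below is a hypothetical greedy heuristic, not the approach described in the NERSC report: it ranks arrays by memory traffic per gigabyte and fills MCDRAM first. The array names, sizes and traffic figures are invented for illustration; the 16 GB capacity reflects KNL’s on-package MCDRAM. A real code would act on such a decision through an allocator such as the memkind library’s `hbw_malloc` or a `numactl` binding, not a Python dictionary.

```python
from typing import Dict, List, Tuple


def place_arrays(arrays: List[Tuple[str, float, float]],
                 hbm_capacity_gb: float) -> Dict[str, str]:
    """Greedy placement: arrays with the most traffic per GB go to MCDRAM first.

    arrays: (name, size_gb, traffic_gb) tuples, where traffic_gb is a
    HYPOTHETICAL estimate of data moved to/from the array per time step.
    """
    placement: Dict[str, str] = {}
    free_gb = hbm_capacity_gb
    # Highest traffic density first: most bandwidth benefit per GB of MCDRAM used
    for name, size_gb, traffic_gb in sorted(
            arrays, key=lambda a: a[2] / a[1], reverse=True):
        if size_gb <= free_gb:
            placement[name] = "MCDRAM"
            free_gb -= size_gb
        else:
            placement[name] = "DDR4"  # does not fit in what is left of MCDRAM
    return placement


# Invented example data: (name, size in GB, traffic in GB per step)
example = [("field", 8.0, 400.0), ("coeffs", 2.0, 200.0), ("history", 30.0, 30.0)]
result = place_arrays(example, hbm_capacity_gb=16.0)
print(result)
```

Here the small, heavily used `coeffs` and `field` arrays land in MCDRAM while the large, rarely touched `history` array stays in DDR4. Greedy ordering by density is simple but not optimal in general; cache mode avoids the decision entirely at some cost in peak bandwidth.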

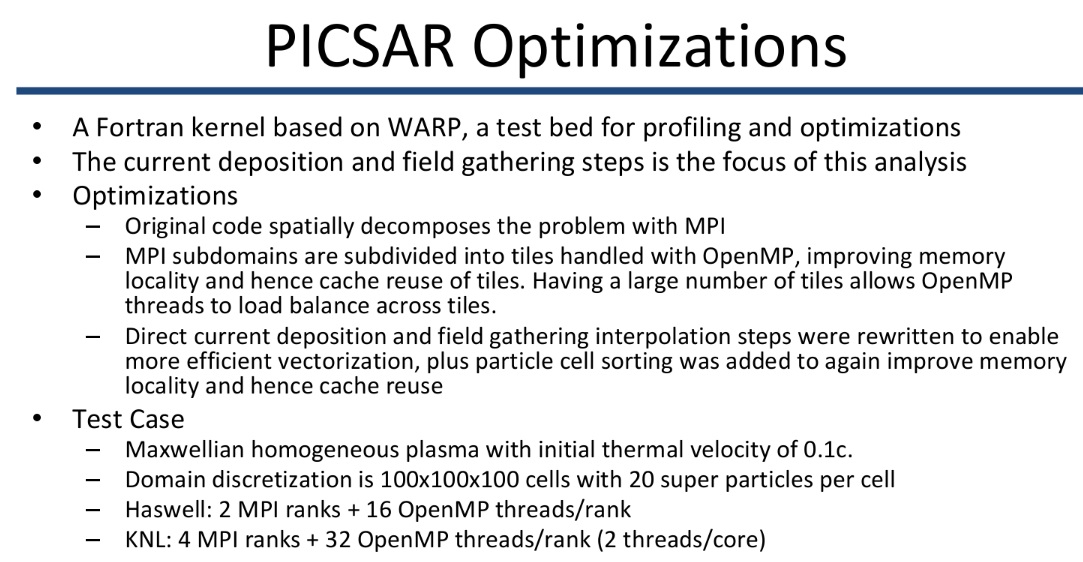

In addition, the vectorization capabilities (two 512-bit vector units per core) are critical to achieving the stated levels of performance, but this presents the commonly cited challenge of the massive software overhauls needed to take advantage of high-performance architectures like KNL. “Frankly, a lot of the national codes, both classified and for open science, are only just now starting to add OpenMP; they’re MPI only and they haven’t worried much about exploiting wide vector units explicitly until now. Some automatically get it, the compiler does the right thing, but with 128- and 256-bit SIMD previously, it was never that important.” Doerfler says it is a huge software burden, but the effort is not wasted since, as Intel promised from the first conversations about KNL, the same optimizations also improve performance on regular Xeons.
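The jump from 128- and 256-bit to 512-bit SIMD is easiest to see as simple arithmetic on vector lanes. The sketch below counts elements per instruction at each width and estimates peak double-precision FLOPs per core per cycle for two 512-bit units. The assumption that every unit issues one fused multiply-add (two FLOPs per lane) every cycle is ours, and it describes an idealized peak rather than anything measured.

```python
DOUBLE_BITS = 64  # IEEE 754 double precision
SINGLE_BITS = 32  # IEEE 754 single precision


def lanes(vector_bits: int, element_bits: int) -> int:
    """Elements processed by one SIMD instruction of the given width."""
    return vector_bits // element_bits


def peak_dp_flops_per_cycle(vector_units: int, vector_bits: int,
                            fma: bool = True) -> int:
    """Idealized peak double-precision FLOPs per core per cycle, ASSUMING each
    unit issues one vector op per cycle (an FMA counts as two FLOPs per lane)."""
    flops_per_lane = 2 if fma else 1
    return vector_units * lanes(vector_bits, DOUBLE_BITS) * flops_per_lane


for width in (128, 256, 512):
    print(f"{width}-bit SIMD: {lanes(width, DOUBLE_BITS)} doubles or "
          f"{lanes(width, SINGLE_BITS)} floats per instruction")

# Two 512-bit vector units per KNL core, as described in the text
print("KNL core peak (DP FLOPs/cycle):", peak_dp_flops_per_cycle(2, 512))
```

A scalar loop uses one of the eight double-precision lanes in each 512-bit register, which is why unvectorized code leaves so much of KNL idle.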

If that isn’t enough of an impending software development and modernization headache for shops considering Knights Landing, consider too that to get the full performance benefit from such an architecture, codes need to exploit far higher levels of fine-grained parallelism. There are many more cores, each less capable, and each supports four hardware threads, which means far more thread-level parallelism to exploit. Without addressing this and the other two areas, Doerfler says, the improvements they have benchmarked at NERSC won’t be possible. It is a lot of work, but as his team’s benchmark and roofline model efforts highlight, the performance boost is quite impressive.
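One rough way to gauge how much more parallelism KNL demands is to count the independent work items a node can have in flight: cores × hardware threads per core × vector lanes per thread. The sketch below assumes, for illustration, a 68-core KNL part and a two-socket Haswell node with 16 cores per socket, two hyperthreads per core and 256-bit AVX2; these counts are assumptions, not figures from the report. The model deliberately ignores instruction-level parallelism and memory effects.

```python
def hardware_threads(cores: int, threads_per_core: int) -> int:
    """Hardware threads the OS can schedule on one node."""
    return cores * threads_per_core


def concurrent_lanes(cores: int, threads_per_core: int, vector_lanes: int) -> int:
    """Independent data elements needed to keep every thread's vector lanes busy."""
    return hardware_threads(cores, threads_per_core) * vector_lanes


# ASSUMED node configurations (illustrative, not taken from the report);
# vector_lanes counts doubles per register (8 for 512-bit, 4 for 256-bit).
knl = concurrent_lanes(cores=68, threads_per_core=4, vector_lanes=8)
haswell = concurrent_lanes(cores=32, threads_per_core=2, vector_lanes=4)

print("KNL threads:", hardware_threads(68, 4))  # 272
print("KNL lanes in flight:", knl)              # 2176
print("Haswell lanes in flight:", haswell)      # 256
print(f"Ratio: {knl / haswell:.1f}x")           # 8.5x
```

Under these assumptions, an MPI-only code running one rank per core would fill only a quarter of KNL’s hardware threads, before vector lanes are even considered.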

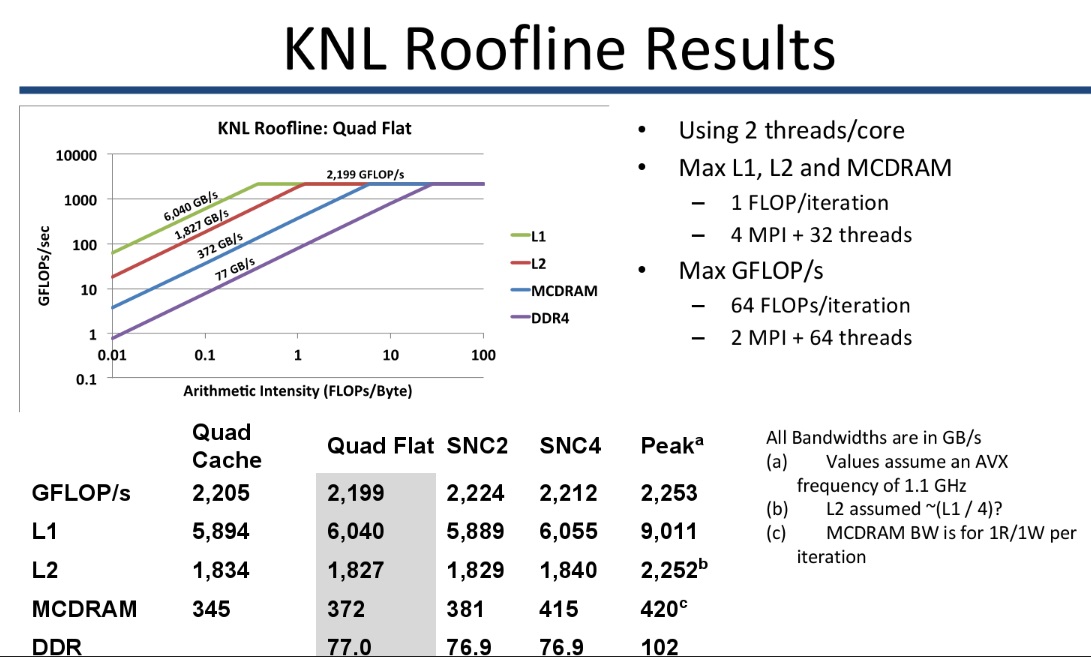

To demonstrate this, the team developed a roofline model for KNL with estimated upper bounds for L1, L2, MCDRAM and DDR4. They measured the performance of a suite of applications (actually proxy kernels) and used the model to determine to what degree each was compute or memory bound. Application developers then looked at a series of optimizations to boost both arithmetic intensity and overall performance, and mapped the results back onto the model to understand the real benefit of all the hard optimization work.
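The roofline model bounds attainable performance by the lesser of the compute peak and the product of arithmetic intensity (AI, in FLOPs per byte) and the bandwidth of the memory level feeding the kernel: attainable = min(peak, AI × bandwidth). The minimal sketch below implements that formula. The peak and bandwidth values are round placeholders, not the ceilings NERSC estimated for KNL, and only two of the four memory levels are shown; L1 and L2 ceilings would be added to the same dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Roofline:
    peak_gflops: float  # compute ceiling, GFLOP/s
    bandwidth_gbs: Dict[str, float] = field(default_factory=dict)  # level -> GB/s

    def attainable(self, ai: float, level: str) -> float:
        """Upper bound on GFLOP/s for a kernel with arithmetic intensity `ai`
        (FLOPs/byte) whose data streams from memory level `level`."""
        return min(self.peak_gflops, ai * self.bandwidth_gbs[level])

    def ridge_point(self, level: str) -> float:
        """Arithmetic intensity where the bandwidth slope meets the compute peak."""
        return self.peak_gflops / self.bandwidth_gbs[level]

    def bound(self, ai: float, level: str) -> str:
        """Which ceiling limits a kernel at this intensity and memory level."""
        return "memory" if ai < self.ridge_point(level) else "compute"


# PLACEHOLDER ceilings for illustration only (not measured KNL values)
knl = Roofline(peak_gflops=2000.0, bandwidth_gbs={"MCDRAM": 400.0, "DDR4": 100.0})

for ai in (0.5, 5.0, 50.0):
    for level in ("MCDRAM", "DDR4"):
        print(f"AI={ai:5.1f} {level:6s}: <= {knl.attainable(ai, level):7.1f} GFLOP/s "
              f"({knl.bound(ai, level)} bound)")
```

The ridge points show why the high-bandwidth memory matters: with these placeholder numbers a kernel needs 20 FLOPs/byte to escape the DDR4 slope but only 5 FLOPs/byte when fed from MCDRAM.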

To put this work in terms of actual codes, see the example below. The original code (red square) has low arithmetic intensity, yet it is not memory bound either, since it sits below the roofline (blue line). As the optimization efforts demonstrate, increasing its arithmetic intensity improved performance, and further vectorization steps gained more still.
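Placing a code on the roofline takes two numbers: its arithmetic intensity and its achieved performance. The sketch below computes both for a hypothetical STREAM-style triad `a[i] = b[i] + s * c[i]` in double precision, counting 2 FLOPs per element against 24 bytes of traffic (two 8-byte loads and one 8-byte store, ignoring write-allocate traffic). The achieved GFLOP/s and the ceilings are made-up inputs. A fraction well below 1.0 corresponds to a code sitting under the roofline, not yet limited by bandwidth.

```python
def arithmetic_intensity(flops: float, bytes_moved: float) -> float:
    """FLOPs performed per byte moved between memory and the core."""
    return flops / bytes_moved


def roofline_fraction(achieved_gflops: float, ai: float,
                      peak_gflops: float, bandwidth_gbs: float) -> float:
    """Achieved performance as a fraction of the roofline ceiling at this AI."""
    ceiling = min(peak_gflops, ai * bandwidth_gbs)
    return achieved_gflops / ceiling


# Triad a[i] = b[i] + s * c[i] on doubles: 1 multiply + 1 add per element,
# 8-byte loads of b[i] and c[i] plus an 8-byte store of a[i].
triad_ai = arithmetic_intensity(flops=2, bytes_moved=3 * 8)

# HYPOTHETICAL measurement and ceilings, for illustration only
frac = roofline_fraction(achieved_gflops=10.0, ai=triad_ai,
                         peak_gflops=2000.0, bandwidth_gbs=400.0)
print(f"AI = {triad_ai:.3f} FLOPs/byte, {frac:.0%} of the roofline")
```

In roofline terms, vectorization tends to move a point up toward its ceiling, while improving data reuse moves it to the right, which can raise the ceiling itself.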

Of this effort, the authors say that all of the applications they evaluated substantially improved their overall performance on both Haswell and KNL, often by increasing arithmetic intensity and improving memory bandwidth utilization. “Having a visual representation of the performance ceiling helps guide application experts to appropriately focus their optimization efforts.”

Once again, the full paper highlighting the various codes and extensive optimization details can be found here.
