With the general availability of the “Knights Landing” Xeon Phi many core processors from Intel last month, some of the largest supercomputing labs on the planet are getting their first taste of what the future style of high performance computing could look like for the rest of us.

We are not suggesting that the Xeon Phi processor will be the only compute engine that will be deployed to run traditional simulation and modeling applications as well as data analytics, graph processing, and deep learning algorithms. But we are suggesting that this style of compute engine – it is more than a processor since it includes high bandwidth memory and fabric interconnect adapters on a single package – is what the future looks like. And that goes for Knights family processors and co-processors as well as the “Pascal” and “Volta” accelerators made by Nvidia, the Sparc64-XIfx and ARM chips that will be used in the Post-K system in Japan made by Fujitsu, the Matrix2000 DSP accelerator being created by China for one of its pre-exascale systems, or the CPU-GPU hybrids based on its “Zen” Opterons that AMD is cooking up for supercomputing systems in the United States and, with licensing partners, in China.

During the recent ISC16 supercomputing conference in Frankfurt, Germany, Intel gathered up the executives in charge of some of the largest supercomputing facilities on the planet who are – not coincidentally, but absolutely intentionally – also early adopters of the Knights Landing Xeon Phi and, in some cases, the Omni-Path interconnect that is a kicker to Intel’s True Scale InfiniBand networking. Intel’s plan, as it has stated for the past several years, is to create a standard HPC environment, which it calls the Scalable System Framework, that is suitable for all kinds of workloads, not just simulation and modeling, and that will not just scale up but also scale down and be useful for organizations smaller than the largest national labs, like the ones that sat in on a panel discussion at the ISC16 conference.

First Of A Kind

“It is an important distinction that these are first of a kind, not one of a kind, systems,” explained Charles Wuischpard, general manager of the HPC Platform Group within Intel’s Data Center Group, when talk turned to the trickle-down effect that many HPC vendors have always hoped for but could often not deliver because they lacked volume economics. “This is part of the whole strategy here. It is ironic that we normally do this in reverse. We usually implement a small machine and scale up, but many times now we are implementing a massive machine first and then the technology waterfalls down. A lot of these design ideas are meant to be deployed at all scales.”

For this waterfall effect to happen, Intel has to win a lot of big deals away from other architectures, and thus far, it seems to have done a pretty good job. As Intel revealed back in June, the company expects to ship more than 100,000 Xeon Phi units into the HPC market this year, and we think it will also be able to sell many into hyperscalers with scale issues on graph analytics and deep learning workloads, too. And the supercomputing centers that are at the front of the Knights Landing line have to have good experiences with porting their code and getting reasonable performance out of them.

We also think that Intel may have to pick up the pace for the Knights roadmap, and there are indications that this might happen. With supercomputing centers replacing their capability-class systems every four or five years, there is a slight impedance mismatch with the Knights family getting updated every three years or so. Something akin to the traditional two-year Moore’s Law cadence is probably more appropriate, if Intel can swing it.
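To put a rough number on that mismatch, here is a minimal Python sketch. It assumes, purely for illustration, that a new chip generation launches exactly every `chip_cadence` years starting at year zero, that a center buys its first machine in year zero, and that it always buys the newest chip available. The function names are ours, not anything from Intel or the labs.

```python
def newest_chip_age(purchase_year, chip_cadence):
    """Years since the latest chip generation launched, at purchase time.

    Assumes generations launch at years 0, cadence, 2 * cadence, and so on.
    """
    return purchase_year % chip_cadence


def ages_over_refreshes(refresh_interval, chip_cadence, refreshes=4):
    """Age of the newest available chip at each of several system refreshes."""
    return [
        newest_chip_age(n * refresh_interval, chip_cadence)
        for n in range(refreshes)
    ]


if __name__ == "__main__":
    for refresh in (4, 5):          # system replacement interval, in years
        for cadence in (3, 2):      # chip update interval, in years
            ages = ages_over_refreshes(refresh, cadence)
            print(f"refresh every {refresh}y, chips every {cadence}y: "
                  f"chip age at purchase = {ages}, worst case = {max(ages)}y")
```

Under these simplified assumptions, a three-year chip cadence leaves a center buying silicon that is up to two years old, while a two-year cadence caps that at one year. Real procurements are lumpier than this, so treat it as a back-of-the-envelope model only.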

Initially, quite a few of the supercomputing centers that are getting Knights Landing Xeon Phi nodes are mixing them with Xeon E5 nodes, and this is helping smooth out the cadence and providing other benefits.

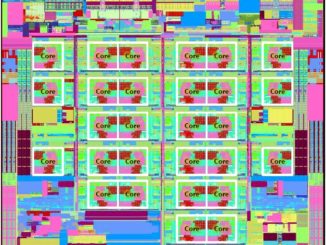

Jim Lujan, HPC program manager at Los Alamos National Laboratory and project director for the “Trinity” system, had an interesting take on this idea. The Trinity system is part of the compute infrastructure for managing the nuclear stockpile for the US government under the control of the Department of Energy. The Trinity system has over 9,000 two-socket server nodes based on Intel’s “Haswell” Xeon E5 v3 processors and is already delivering cycles to the classified users of the machine. Recently, over 2,000 of the Knights Landing nodes, using standalone versions of the chip, have been added to Trinity and by the end of July Los Alamos will have over 9,900 Knights Landing nodes.

“Trinity was going to be an entirely Knights Landing-based system,” explained Lujan. “Unfortunately, due to the timing of some deliveries and constraints by our federal program management, we had to deliver cycles to the community differently than what we had originally anticipated. This situation encouraged us into this ‘mixed mode’ approach with Haswell Xeons and Knights Landing Xeon Phis. Ultimately, I think this is going to work out to our advantage because it allows our user community to jump on board with a newer Xeon processor that they could very easily get their codes up on and running smoothly to get better performance and better characteristics and time to solution. And then when we introduce the Xeon Phi, this gives them more time to adapt their codes for high bandwidth memory as well as looking at the multicore to many core shift to try to do more threading. It gives them a platform for now to transition without having to be thrown on to the new machine and have to do a transition right off the bat.”

Given how many of the initial Knights Landing systems are hybrid Xeon-Xeon Phi machines, we asked Lujan specifically if eventually it would be desirable to just pick one architecture. “Ultimately, it is going to give us a path and users want to leverage both, but in the end, it makes it that much easier to go to a strictly Xeon Phi-based system,” he said. He added that, at the moment, both kinds of nodes on Trinity use the same operating system and the same programming environment, which allows for targeted compilations for Xeon or Xeon Phi chips. Managing the complexity of the Trinity cluster is trivial, he said, because there is a common interconnect and it all comes down to job scheduling and resource management tools.

Michael Levine, founder and co-scientific director at the Pittsburgh Supercomputing Center, which has a hybrid system called “Bridges” that will mix Xeon and Xeon Phi processors on an Omni-Path interconnect, said that there is another reason to go hybrid.

“We are talking about processors, but there is another degree of freedom as well,” Levine said. “We know we have a requirement for a wide range of memory sizes, and at any given time, wanting tens of terabytes of physical memory will depend on what sort of nodes are available. It is not always the choice of processor that drives the acquisition.”

But ultimately, over the long haul, there are those who believe exascale class machines will have a single, rather than a hybrid, processor architecture. (That single architecture could be based on Power chips from IBM with Tesla GPU accelerators from Nvidia, as is being built by these two companies as an alternative to Knights Landing for the DOE with its “Summit” and “Sierra” systems to be installed at Oak Ridge National Laboratory and Lawrence Livermore National Laboratory.)

Argonne National Laboratory, like many of the DOE labs, has tapped Intel and Cray to build successors to its BlueGene massively parallel systems, which IBM quietly discontinued several years ago, leaving the way open for Intel and its Knights Landing compute engines. (Why IBM didn’t just put high bandwidth memory on the PowerPC A series chips and keep this business is a bit of a mystery, but clearly Big Blue had a different plan with OpenPower and a hybrid approach to compute engines mixing CPUs, GPUs, and other types of specialized processing.) Argonne is putting in a system based on Knights Landing, code-named “Theta” and built by Cray, that will replace its BlueGene/Q machine and that will deliver around 9 petaflops of performance. In 2018, Argonne will fire up the 200 petaflops “Aurora” system, which will have approximately 50,000 nodes of the future “Knights Hill” Xeon Phi chips as well as big chunks of 3D XPoint memory and a storage system based on flash to keep them all fed.

“I think of it as a way of buffering, and I think Intel’s timing is in flux at the moment as they spin up a comfortable cadence for Xeon Phi,” said Rick Stevens, associate laboratory director for computing, environment, and life sciences at Argonne when asked about the cadence issue. “We are certainly thinking that Xeon Phi is going to be at a fast enough cadence to upgrade every cycle. Our design point is not a heterogeneous environment and part of that is as we are aiming towards exascale, it may be possible at some point for there to be heterogeneity in the node, but right now heterogeneity across the system with different node types adds complexity that we are not interested in. And we are trying to get the users to understand that if they want to compute at exascale and beyond, they really have to get into the mindset that how are you going to support billion-way or ten-billion-way concurrency, and if you have to think too much about having a handful of latency-sensitive processors and a lot of throughput processors, that is really not the right mindset. You have to start by assuming that you are highly threaded and highly distributed. You have to hit yourself every time you cannot do one-billion-way concurrency.”
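Some quick arithmetic shows what billion-way concurrency means for a single node. The Python sketch below uses the roughly 50,000 nodes cited for Aurora; the cores, threads, and vector lanes in the second half are hypothetical round numbers chosen for illustration, not the specifications of any announced chip.

```python
import math

AURORA_NODES = 50_000  # approximate node count cited for Aurora


def per_node_concurrency(total_concurrency, nodes):
    """Independent work items each node must keep in flight."""
    return math.ceil(total_concurrency / nodes)


def node_parallelism(cores, threads_per_core, vector_lanes):
    """Hardware parallelism of one node: cores x threads x vector lanes."""
    return cores * threads_per_core * vector_lanes


if __name__ == "__main__":
    for target in (10**9, 10**10):
        needed = per_node_concurrency(target, AURORA_NODES)
        print(f"{target:,}-way across {AURORA_NODES:,} nodes "
              f"needs {needed:,}-way per node")

    # Hypothetical node: 64 cores, 4 threads per core, 8 vector lanes.
    print("hypothetical node parallelism:", node_parallelism(64, 4, 8))
```

With these numbers, each node has to sustain 20,000-way concurrency for the billion-way case and 200,000-way for the ten-billion-way case, far more than the 2,048 hardware lanes of the hypothetical node. That gap is presumably why Stevens wants users to start from the assumption that their codes are highly threaded and highly distributed.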

Wuischpard confirmed that Intel was thinking about changing up the Knights roadmap, but was not terribly specific, as you might expect.

“While what Rick has said is true, we are busy looking at how we modify the cadence of deliveries so there is not this long wait,” Wuischpard said. “I won’t go into any more details, but there is an advantage to increasing the frequency of technology drops.”

Shaking Down The Initial Knights Landing Systems

Bringing any new technology to market always requires close collaboration between initial users, chip makers, and system builders, and this is certainly the case with Knights Landing Xeon Phi processors and Omni-Path interconnects.

Lujan from Los Alamos explains the process: “Because for many of us, these are first of a kind systems, not one of a kind, we really have to work closely with development and engineering organizations because we are seeing things either at scale or in such small frequency that without having access to people working directly with the technology makes it very, very difficult for us to get problem resolution done quickly.”

The early access that the national labs have, combined with their large scale, is a key shakedown that benefits the entire IT industry for those areas where compute engines like the Xeon Phi are appropriate. “We are introducing new technology, hardware or software, and while it may seem to work well conceptually in a laboratory, when you get large aggregations together, it starts to present new issues,” said Lujan. “Having direct access into Intel’s Knights Landing development as well as Cray’s engineering development means we are able to bring much faster resolution to these problems.”

Stevens at Argonne said that there were issues with the Theta system, and without being specific about what they were in this case, he talked generally about the kinds of issues that crop up. For instance, there can be issues in the software stack that create I/O bottlenecks at large scale that were not evident at a smaller scale. Once in a while, there are hardware problems, not usually having to do with chip design flaws but often failures with connectors or bad solder. The big problem, Stevens said, is trying to figure out what might go wrong.

“It is actually very difficult to predict what kinds of problems you are going to have,” Stevens said. “It is a bit like difficulties in aviation. Every single problem that you encounter you try to fix and then have a regression test so you don’t ever have that problem again. You always see some new problem, and that is why it is exciting. People that have to bring machines up – I used to do it when I was a kid, but I am too old now because you have to do too many all-nighters – it is kind of exciting because you don’t know what kinds of problems you are going to see. But I think that the industry as a whole is getting better. Midrange clusters up to a few thousand nodes are routinely brought up. I think that it is when you push the frontiers of scalability, particularly on a new serial number zero system, are ones where everybody has been planning for it to work. But until you actually make it work, you don’t know. Our community is really good at solving these problems and with the DOE labs, we all share information. The teams help debug each other’s systems, which we can do because we don’t get one-off systems, but two or three systems of the same type.”

Stevens said that Argonne has been running benchmarks and test codes on Knights Landing “for quite a long time” and that the lab has a pretty good understanding of how the codes will perform. “It is going to be a very easy move for people moving off of BlueGene/Q onto the Theta platform and we are very much looking forward to having a complete X86 stack that we can run both data analytics and machine learning workloads as well as traditional scientific applications ranging from materials science to genomics to climate modeling to cosmology and so on.”

The Texas Advanced Computing Center was a flagship user of the prior generation “Knights Corner” accelerators from Intel, and with the 18 petaflops Stampede 2.0 system that was unveiled ahead of the ISC16 event, TACC will be upgrading to Knights Landing in phases. In the meantime, TACC has fired up 512 nodes with its initial Knights Landing processors.

“First time compilation was actually pretty easy for us,” explained Dan Stanzione, executive director at TACC. “You can mess with flags a little bit, but there was nothing that we spent more than a couple of days tuning. I think there is a difference between the performance we saw on Day One and the performance we are going to see six to nine months from now when we have had a chance to dig in and tune. We will continue to get performance gains.”

“We also had a very smooth experience getting running, but it was a little bit of a breakneck pace to get done by the Top 500 list,” Stanzione continued. “We submitted our numbers three days after the initial racks came in. So obviously the software came up pretty quickly to make that happen. We finished all of our benchmarking and we are putting users on it.”

The issue that Stampede faces – the diversity of applications and users – is one that many HPC centers are dealing with, but it is perhaps a bit more extreme at TACC. The Stampede 1.0 system had about 10,000 direct users and many tens of thousands of indirect users coming in over the web, and it ran 7.5 million jobs over the past four years.

“We know we have 30 or 40 codes that use 50 percent of the cycles, and about 4,000 different codes use the other 50 percent of the cycles,” Stanzione said. “So it is a really broad mix. The thing that we figured out that would enable the most is that this is a scale of code counts that we simply cannot touch each one and fix them, and we need a platform where we can use them as broadly as we can, and the thing that seems to lift the most boats is memory bandwidth. So we are really excited about the MCDRAM in particular on the Knights Landing and we know that even for the codes that we can’t get into and tune them by hand that extra memory bandwidth will be great.”
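The figures Stanzione cites make the tuning problem easy to quantify. The short Python sketch below assumes, as a simplification of ours, that cycles are spread evenly among the codes within each group; the real distribution is certainly more skewed.

```python
def share_per_code(cycle_share_percent, code_count):
    """Average percentage of all machine cycles consumed by one code."""
    return cycle_share_percent / code_count


# The 30 to 40 heavy hitters that consume half of the cycles.
top_low = share_per_code(50, 40)    # 1.25 percent each if there are 40
top_high = share_per_code(50, 30)   # about 1.67 percent each if there are 30

# The roughly 4,000 codes that share the other half.
tail = share_per_code(50, 4000)     # 0.0125 percent each

if __name__ == "__main__":
    print(f"top codes: {top_low:.2f}% to {top_high:.2f}% of cycles each")
    print(f"tail codes: {tail:.4f}% of cycles each")
    print(f"a top code is worth {top_low / tail:.0f}x to "
          f"{top_high / tail:.0f}x a tail code")
```

On those averages, hand-tuning one top code pays back on the order of a hundred times more cycles than hand-tuning one code in the long tail. That is consistent with TACC's bet on a hardware feature like memory bandwidth that helps the tail without anyone touching the code.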

While the consensus of the members of the labs that took part in Intel’s launch event at ISC16 was that moving code to the new machines was fairly easy and straightforward, Jack Deslippe, acting group leader at the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory, which is building out its “Cori” hybrid Xeon-Xeon Phi system, cautioned against making this all seem too easy. NERSC has over 6,000 users running approximately 600 different applications, and Cori, which already has a bunch of Xeon E5 v3 nodes, will eventually have over 9,000 Knights Landing nodes.

“We have spent the better part of the last two years preparing our applications for the Cori system, and we have learned a lot about what it takes to port our applications from traditional CPU architectures to the energy-efficient, many core architecture,” said Deslippe. “We have a lot of early success cases, and I think a lot of our applications are projected to do really well and we have developed a strategy to make sure that all 600 make the transition.”

One of the good things about Knights Landing, explained Deslippe, is that it is self-hosted, so you don’t necessarily have to use special directives in the code because you are not offloading from a CPU. There are directives that you can use to selectively place data in the high bandwidth memory, but there is a run mode that does not require this.

“If there is a challenge,” Deslippe continued, “while there are a lot of apps that are well-written and previously using SSE or AVX instructions, given the updated locality of using MCDRAM there are a good number of other apps out there that take a significant amount of effort to use the features of the Knights Landing processor. It is not something that you can just move all of your apps wholesale over without spending some time on the optimization process.”

At PSC, the initial phase of the “Bridges” system built by Hewlett Packard Enterprise has Xeon processors linked by Omni-Path interconnects, and the center is looking to add Xeon Phi processors to the machine at some point in the future.

“The performance has been good,” Levine said of the Omni-Path interconnect. “Our system is still in the process of being accepted by the National Science Foundation, nevertheless it has been in production for months and Lustre has worked and there is particular support for Lustre in OPA and that has worked. We are just beginning its tuning, but it is working well enough that we don’t really need to.”

As far as Knights Landing is concerned, Levine said that PSC was “optimistic” and that PSC has users with deep learning and data analytics workloads and expects Knights Landing to be useful in both of those cases as well as for HPC jobs.
