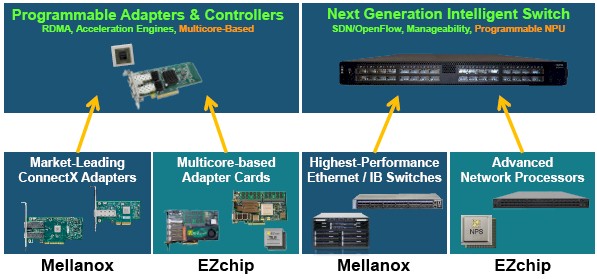

One of the things that high-end network adapter and switch maker Mellanox Technologies got through its $811 million acquisition of network processing chip maker EZchip last September was a team that was well versed in massively parallel processor chip design, and one that could make Mellanox a potential player in the server chip space.

But not necessarily in the way you might be thinking about it.

The reason is that in July 2014, EZchip paid $130 million to acquire upstart multicore chip maker Tilera. EZchip wanted to expand beyond its networking chip business, as Applied Micro, Cavium, and Broadcom have all done with ARM-based server chips. Tilera had created its own minimalist core and on-chip mesh architecture, and was taking on the hegemony of Intel in the datacenter and, at the same time, the other players mentioned above (and others) in networking. Mellanox got the techies and the assets from both EZchip and Tilera through its own deal. The combined companies had been working on a chip called the Tile-Mx100, which would pack a hundred of ARM’s Cortex-A53 cores on a die and use the fifth generation of the SkyMesh interconnect to link them together.

Mellanox, however, has other plans for this technology than to take on Intel and its ARM rivals directly in core compute. It does intend to steal as many CPU cycles as possible from the compute complex by getting as much network processing as possible done on adapters and switches, where it believes this work naturally belongs.

“As soon as the teams started talking in October last year after the Mellanox deal was announced, it was decided pretty quickly to put the Tile-Mx100 project on hold because Mellanox has other designs for the technology,” Bob Doud, the long-time marketing director at Tilera and then EZchip, tells The Next Platform.

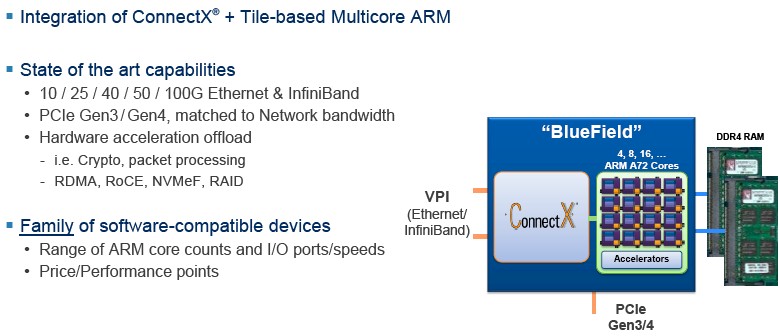

One use of the technology embodied in the Tile-Mx100 is going to be a series of programmable network adapters that Mellanox is developing under the code-name “BlueField,” which are set to come to market next year and which have been in development for the past five months. Mellanox lifted the veil a bit on BlueField, and this provides some clues on how the company plans to put more low-power, high-throughput compute capability inside its adapters. This, Mellanox believes, will provide it continued differentiation from its main rivals in Ethernet adapters and switching, which it pegs as Intel, Broadcom, and QLogic. But there are, of course, other rivals that Mellanox is up against.

EZchip had its own RISC architecture, called ARC, that it has deployed in network processing units, or NPUs, which do deep packet inspection and other manipulations of data as it is whipping around the network. These chips are really designed for the network control plane and for devices that scale up to 400 Gb/sec and 800 Gb/sec bandwidth. EZchip acquired Tilera not only to get its hands on the SkyMesh on-chip interconnect, but also to get expertise in ARM cores and multicore chip design as it sought to shift to a more standard instruction set to make it easier for developers to program functions into network devices and to cram a lot more cores on its chips. (Tilera was making a similar shift to ARM cores with the Tile-Mx from its proprietary RISC instruction set used with the TilePro chips it first shipped in 2009, which had 36 or 64 cores on a die, and the Tile-Gx, which shipped in 2012 and had 9, 16, 36, or 72 cores on a die.)

In any event, the EZchip NPUs are the market share leader in the network devices they compete in, and in the latest NPS generation, these specialized devices can run C code and a Linux environment just like other processors and do not, like prior NPUs, have to be programmed in assembly language at a much lower level. Tilera arguably had the most elegant multicore design out there for many years and was shifting to ARM, so the combination of the two companies made good sense to better compete with Broadcom, Applied Micro, Cavium, and Intel, among others. The Tile-Mx100 was aimed at both the data plane and control plane processing of various kinds of networking devices like load balancers, firewalls, voice and video encoding, security and encryption, and so on where they run at 10 Gb/sec to 200 Gb/sec speeds.

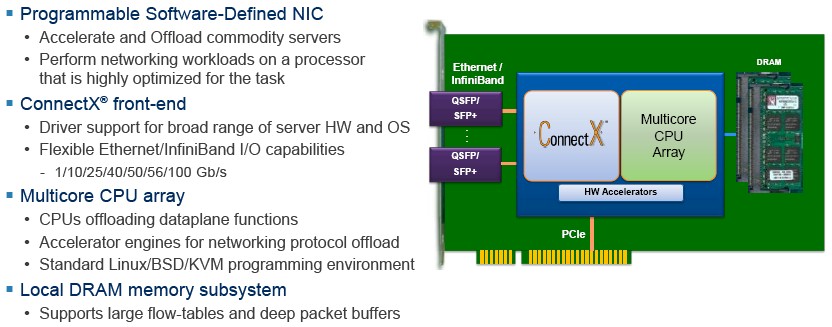

With Mellanox having the market share lead with 40 Gb/sec server adapters and wanting to maintain that lead with 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec adapters, marrying the ConnectX line, which has a modicum of programmability in its firmware, with massively multicore ARM chips seemed like the next logical step in offloading yet more functionality onto Mellanox server adapter cards and, we think, someday onto Mellanox switches.

Without being specific, Doud confirms that we can expect Mellanox to be innovating “on both fronts.”

Having programmable adapter cards is not new for Mellanox. The Innova line of ConnectX-4 Lx EN adapter cards, launched last November at the SC15 supercomputing conference, has Xilinx Kintex FPGAs embedded on the cards for offloading the IPsec security protocols from CPUs to the adapters. This adapter has a single port that supports 10 Gb/sec or 40 Gb/sec Ethernet, and allows other functions to be programmed into the FPGA. It is safe to say that using a massively multicore chip that can run Linux and compiled C code is going to make a programmable adapter much more approachable for IT shops compared to hacking an FPGA.

“You have to think of this as a continuum,” says Doud, focusing in on the adapter part of the network. “On the one side, we have ConnectX, which is remarkably sophisticated in the kinds of functions it can do. We don’t advertise this, but deep inside of ConnectX, there are programmable engines running firmware, so it is not just a bunch of gate logic and it is somewhat adaptable. Customers don’t write the code for that – we do. We launched the Innova cards, and that effort was started before the EZchip acquisition and that was one way Mellanox was pursuing in getting more programmability into a card, and there are some good reasons to have an FPGA product. There is some religion out there and some people just like FPGAs, and by putting it on the ConnectX card you get a lot of benefits such as driver support from the host. Mellanox is not going to part with the FPGA approach, but I think the multicore approach with BlueField is a bigger initiative for us and we think has a lot of promise.”

If you look at the specs for the Tile-Mx100 from last year, the chip was being pitched as a motor for deep packet inspection for networks as well as doing Layer 2 and Layer 3 virtual switching, pattern matching in data streams, and encryption and data compression algorithm acceleration. The idea is to offload these functions from servers, freeing up CPU cycles to do other work, such as supporting a larger number of virtual machines (and someday, containers). The Tile-Mx100 had enough oomph to run other C programs, too, that might otherwise end up on server CPUs.

Mellanox is not providing the feeds and speeds of the BlueField adapter cards and their many-cored ARM processor, but Doud confirms that it makes use of the SkyMesh 2D mesh interconnect and is using Cortex-A53 cores, which are the so-called “little” ARM cores versus the bigger Cortex-A72 cores that are being deployed in some server processors. (Doud says that a Cortex-A72 core is about 2.5X the performance of a Cortex-A53, so a 16-core A72 is about the same performance on parallel jobs that like threads as a 40-core Cortex-A53.)
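Doud's rule of thumb is easy to turn into arithmetic. The sketch below is only an illustration of that one ratio: it assumes perfectly parallel, thread-friendly work and ignores clock speed, cache, and memory effects, and the function names are ours rather than anything from Mellanox.

```python
# Rough per-core ratio quoted by Doud: one Cortex-A72 is about 2.5X a Cortex-A53.
A72_TO_A53_RATIO = 2.5


def equivalent_a53_cores(a72_cores, ratio=A72_TO_A53_RATIO):
    """Number of A53 cores that roughly match `a72_cores` on parallel work.

    ASSUMPTION: throughput scales linearly with core count.
    """
    return a72_cores * ratio


def equivalent_a72_cores(a53_cores, ratio=A72_TO_A53_RATIO):
    """Number of A72 cores that roughly match `a53_cores` on parallel work."""
    return a53_cores / ratio


if __name__ == "__main__":
    # The example from the text: a 16-core A72 versus a 40-core A53.
    print(equivalent_a53_cores(16))
    # The same ratio applied to a 100-core A53 part such as the Tile-Mx100.
    print(equivalent_a72_cores(100))
```

By the same ratio, the 100 little cores of the Tile-Mx100 would be in the neighborhood of 40 big cores on work that threads well.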

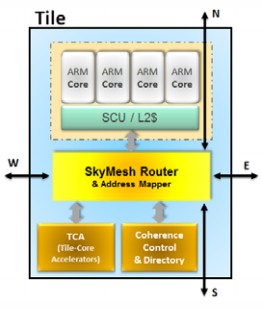

The architecture for BlueField will likely be very similar to the Tile-Mx100, which starts with a basic tile of compute and interconnect as all Tilera chips did, thus:

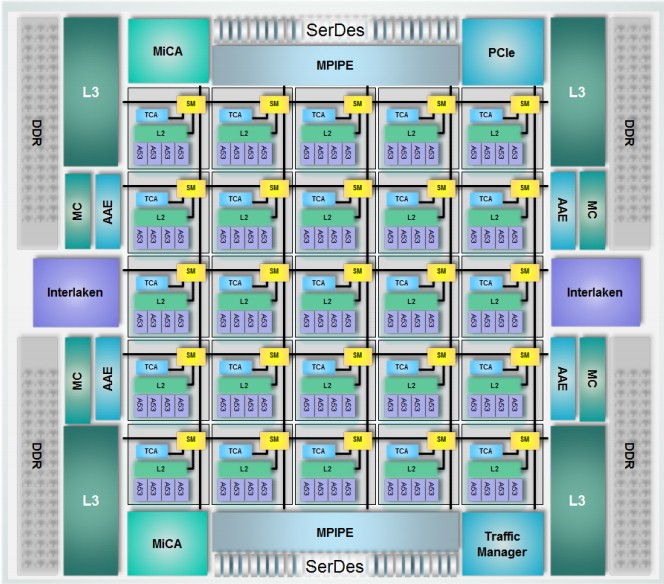

Each tile in the Tile-Mx100 has four Cortex-A53 cores, each with 64 KB of L1 data and instruction caches and a 128-bit NEON SIMD floating point acceleration unit, plus an L2 cache shared across those four cores. The SkyMesh interconnect is fully cache coherent and implements symmetric multiprocessing across all of the cores on the Tile-Mx die. With 100 cores fired up, the Tile-Mx design has 40 MB of on-chip cache, including an L3 cache. Here is what the block diagram for a 100-core Tile-Mx chip looks like:

The Tile-Mx100 chip puts 25 of these tiles on a die and with the 2D mesh interconnect links them to each other and to the four DDR4 memory controllers on the die. The SkyMesh interconnect has 25 Tb/sec of aggregate bandwidth across the components on the chip.
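To make the tile math concrete, here is a small sketch. The tile and core counts come from the description above; the 5 x 5 grid layout and the dimension-ordered (hop-by-hop) routing are our assumptions for illustration only, since the actual floorplan and SkyMesh routing scheme are not described here.

```python
from itertools import product

CORES_PER_TILE = 4   # from the Tile-Mx100 description
TILES = 25           # from the Tile-Mx100 description
GRID_SIDE = 5        # ASSUMPTION: the 25 tiles sit on a square 5 x 5 grid


def total_cores(tiles=TILES, cores_per_tile=CORES_PER_TILE):
    """Core count for the whole die."""
    return tiles * cores_per_tile


def mesh_hops(src, dst):
    """Hops between two tiles given as (row, col) coordinates.

    ASSUMPTION: traffic moves one tile at a time along rows and columns,
    so the hop count is the Manhattan distance.
    """
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


def average_hops(side=GRID_SIDE):
    """Mean hop count over all ordered pairs of distinct tiles."""
    tiles = list(product(range(side), repeat=2))
    pairs = [(a, b) for a in tiles for b in tiles if a != b]
    return sum(mesh_hops(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print("cores on die:", total_cores())
    print("corner-to-corner hops:", mesh_hops((0, 0), (GRID_SIDE - 1, GRID_SIDE - 1)))
    print("average hops:", round(average_hops(), 2))
```

Under these assumptions the longest path across the mesh is eight hops, which gives a feel for why a 2D mesh scales more gracefully than a shared bus or ring as core counts climb.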

It was always the plan of EZchip to offer the Tile-Mx100 as a processor for programmable adapter cards that supported anywhere from 10 Gb/sec to 100 Gb/sec ports and that embedded virtual switches, packet classification and filtering, deep packet inspection, and various encryption and compression algorithm offloads. So, by acquiring EZchip, Mellanox not only removed an engine that could have been used by its competitors in the adapter and switch space, but also got an engine for its own ConnectX adapters and Spectrum and Switch-IB switch lines that is more programmable than what it currently has. The Tile-Mx processor could also be paired with SAS and SATA controllers to make a software-defined storage array with various network functions built in, but Mellanox is not talking about this use case.

The Tile-Mx100 was expected to start sampling in the second half of 2016 prior to the Mellanox acquisition, and Doud says that the BlueField chips will sample in the first quarter of 2017.

One big change with the BlueField chips is that Mellanox switched from the little Cortex-A53 cores to the big Cortex-A72 cores. And while Mellanox is not being precise, the following slide suggests it will have BlueField chips with 4, 8, 16 and possibly more cores and says flat out that it will have a range of core counts, of I/O ports and speeds, and price/performance points. Our guess is that each tile will have two Cortex-A72 cores instead of four Cortex-A53 cores as with the Tile-Mx100 design, but we are guessing; it could be one core per tile, but probably will not be four cores per tile.

The SkyMesh interconnect can scale to over 200 cores with full cache coherency, so if Mellanox was so inclined, it could drop a very powerful parallel processor – something with real compute performance – into the field of battle in the datacenter. But again – don’t get ahead of yourself – this is not the plan.

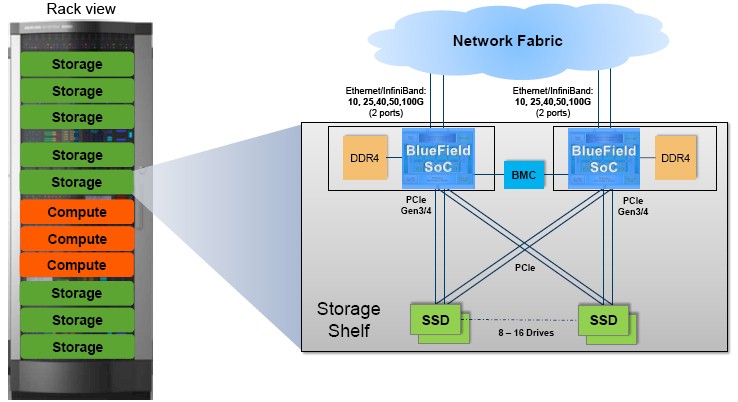

What is the plan is to put BlueField chips in network adapters for programmable offload and to embed the many-cored ARM chips into disaggregated storage arrays, particularly those that are using NVM-Express to link flash drives to servers. Doud says that a single NVM-Express drive will need around 20 Gb/sec of bandwidth all by itself, so having a very high bandwidth link between servers and storage and running over a fabric to make clustered storage (using the NVM-Express over Fabrics protocol) is a key aspect of future storage. Here is what a BlueField-based scale-out storage system based on NVM-Express might look like:

In this scenario, you will notice there are not any Xeon processors in the storage shelves, although they are obviously connected to compute nodes by the network fabric and, if Mellanox has its way, with its ConnectX adapters and Spectrum Ethernet or Switch-IB InfiniBand switches. The ConnectX cards enable RDMA or RoCE direct memory access between the storage servers and the compute servers, and also accelerate NVM-Express over Fabrics protocols when the storage fans out as well as erasure coding offload for RAID data protection. The programmable BlueField cores in the storage shelves will handle some RAID offload (including rebuilding failed drives) as well as converting from NVM-Express over Fabrics to SAS protocols and encryption, de-duplication, and compression of data stored on flash.
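Doud's figure of around 20 Gb/sec per NVM-Express drive makes it easy to see why fat pipes matter for these shelves. The sketch below is back-of-the-envelope only: it ignores protocol overhead and assumes every drive runs flat out at once, and the 24-drive shelf is a hypothetical example, not a Mellanox configuration.

```python
import math

DRIVE_GBPS = 20  # Doud's approximate per-drive bandwidth figure


def drives_per_port(port_gbps, drive_gbps=DRIVE_GBPS):
    """Whole drives that one network port can feed at full drive speed."""
    return port_gbps // drive_gbps


def ports_needed(num_drives, port_gbps, drive_gbps=DRIVE_GBPS):
    """Ports required so that `num_drives` can all run at full speed."""
    return math.ceil(num_drives * drive_gbps / port_gbps)


if __name__ == "__main__":
    # Port speeds mentioned in the article for current and coming adapters.
    for port in (25, 40, 50, 100):
        print(f"{port} Gb/sec port feeds {drives_per_port(port)} drive(s)")
    # Hypothetical 24-drive flash shelf on 100 Gb/sec ports.
    print("ports for a 24-drive shelf:", ports_needed(24, 100))
```

Even a 100 Gb/sec port is saturated by a handful of drives on these assumptions, which is the argument for pushing protocol handling and data reduction into the shelf itself.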

The obvious application of BlueField processors will be on an accelerated line of ConnectX adapter cards, and this is indeed not only the plan but very likely the first product that will use the chips. The network adapters are a baby server in their own right, complete with compute and DRAM:

These so-called intelligent adapters are similar to the SmartNICs that Microsoft has created using FPGAs in its Open Cloud Server systems, the accelerated cards that Amazon Web Services has created using ARM chips designed by Annapurna Labs, which it acquired in early 2015, or the Agilio adapters made by Netronome.

What you may not realize is that the ConnectX-4 ASIC has a PCI-Express switch, called eSwitch, already built into it, and it can be programmed to run Open vSwitch. So customers can offload the control plane from the X86 servers in the cluster where they were running to the ARM cores and move the data plane control with the Open vSwitch to the ConnectX chip. That frees up X86 cycles to do compute jobs, and means that Mellanox can charge a premium for its adapters or drive market share – and maybe both if the competitive pressures are not too high. We can expect Intel to marry its own Xeon D chips to its adapter cards if this idea takes off for Mellanox. That we know for sure.

Maybe the new chips will find their way into next-generation distributed storage arrays and shelves.