Computing for neuroscience, which has aided in our understanding of the structure and function of the brain, has been around for decades already. More recently, however, there has been neuroscience for computing, or the use of computational principles of the brain for generic data processing. For each of these neuroscience-driven areas there is a key limitation—scalability.

This is not just scalability in the sense of software or hardware systems. On the application side, it also means limits on deploying computational tools efficiently at large enough sizes and time scales to yield far greater insight. Adding more compute can push research forward, but only up to a point, and adding more neurons to a network makes the problem itself unwieldy. So what is next for neuroscience when it comes to scaling its biggest research question of all: how the brain processes information in real time, even as computers are poised to outpace our brains in raw capability?

Karlheinz Meier is one of the co-developers of the Human Brain Project, which aims to develop better computational tools to understand the brain. He agrees that while there is clear value in extending computational power into the exascale and beyond, neuroscience might have better approaches to its core problem: simulating brain activity at multiple scales and, eventually, as close to real time as possible.

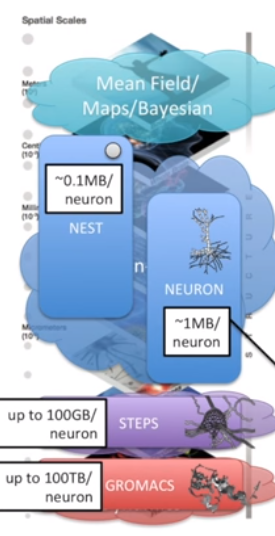

As one might imagine, the tools and challenges of neuroscience simulation closely parallel those in other areas of supercomputing. Scalability and parallelization of complex codes, power consumption limits, and working with systems that balance I/O and compute capability are just a few. At the core, however, Meier says that solving the problem of strong scaling is a major challenge, and one that might be addressed by looking to other kinds of devices, including the handful of neuromorphic efforts from IBM, the SpiNNaker project, and, in particular, BrainScaleS.

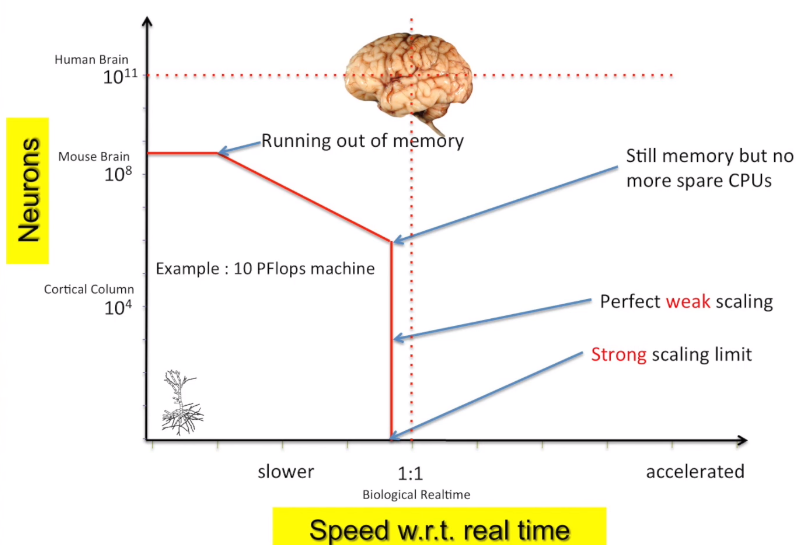

Tackling large-scale challenges with weak scaling (at its simplest, throwing more compute at the problem so the network and its capability can grow) works to a degree. But as neuroscientists in the Human Brain Project and elsewhere want to move closer to real-time brain activity using far more detailed cellular-level data, breaking a problem of fixed size into more components to be computed in parallel (strong scaling) becomes the more efficient route. Even so, simulating a human brain in real time remains only a distant possibility with current computational approaches.

“It’s easy to draw a straight line and say we need 100 petabytes of memory and an exaflop machine to simulate the human brain on the cellular level, but there is a problem with this approach,” Meier says. “Strong scaling is hard and it is the limitation for speed when it comes to neural networks.”
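The gap between the two regimes can be made concrete with two textbook models that the article itself does not cite: Amdahl's law for strong scaling (fixed problem size) and Gustafson's law for weak scaling (problem size grows with the machine). In the minimal sketch below, `serial_fraction` is a hypothetical stand-in for any work that does not shrink as processors are added, such as per-timestep synchronization in a network simulation; the 1% value is illustrative, not a measured figure from the talk.

```python
def amdahl_speedup(serial_fraction: float, n_procs: int) -> float:
    """Strong scaling: a fixed-size problem is split across n_procs processors.

    The serial part never shrinks, so speedup is capped at 1 / serial_fraction.
    """
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n_procs)


def gustafson_speedup(serial_fraction: float, n_procs: int) -> float:
    """Weak scaling: the problem grows with n_procs, so the parallel part grows too."""
    return serial_fraction + (1.0 - serial_fraction) * n_procs


if __name__ == "__main__":
    s = 0.01  # hypothetical: 1% of the work cannot be parallelized
    for n in (1_000, 65_000):
        print(f"{n:>6} procs  strong: {amdahl_speedup(s, n):8.1f}x  "
              f"weak: {gustafson_speedup(s, n):10.1f}x")
```

With that assumed 1% serial fraction, strong-scaling speedup can never exceed 100x no matter how many processors are added, while the weak-scaling figure keeps climbing. That asymmetry is one way to see why more hardware makes a simulation bigger far more easily than it makes it faster.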

Pointing to the chart below, Meier says that the intersection where the real brain is depicted is not attainable in the near future because of the sheer number of cells, at least not in real time. “What they do in supercomputing is use weak scaling as much as possible. The example here is for a 10 petaflop machine using detailed cellular models with 1 MB per cell. To an extent, as long as there are enough processors, the simulation time is slower than real time, of course. But if this is true weak scaling, there shouldn’t be a difference in time as the problem size increases.” At some point, Meier says, the processors run out and the simulation gets slower and slower; then memory runs out as well, which spells the end of the game. With current approaches, that point is reached at the scale of a full mouse brain simulation.
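The curve Meier describes (flat run time while processors remain, rising run time once they run out, and a hard stop when memory runs out) can be mimicked with a toy model. This is a hypothetical sketch, not the model behind the chart: the machine size, cells per processor, and memory per processor are made-up parameters, and only the 1 MB-per-cell figure comes from the talk. The helper `cells_that_fit` also shows that the 100-petabyte estimate quoted earlier is consistent with that figure: at 1 MB per cell, 100 PB holds about 10^11 cells, the same order as the roughly 86 billion neurons in a human brain.

```python
import math
from typing import Optional

BYTES_PER_CELL = 1_000_000  # 1 MB per detailed cell model, as quoted in the talk


def cells_that_fit(total_memory_bytes: int, bytes_per_cell: int = BYTES_PER_CELL) -> int:
    """Upper bound on how many cell models fit in the machine's memory."""
    return total_memory_bytes // bytes_per_cell


def weak_scaling_time(n_cells: int, n_procs: int, cells_per_proc: int,
                      mem_per_proc_bytes: int, t_base: float = 1.0) -> Optional[float]:
    """Toy run time (relative to t_base) for simulating n_cells on a hypothetical machine.

    Returns None once the model no longer fits in total memory.
    """
    if n_cells > cells_that_fit(n_procs * mem_per_proc_bytes):
        return None  # memory exhausted: "the end of the game"
    if math.ceil(n_cells / cells_per_proc) <= n_procs:
        return t_base  # ideal weak scaling: add processors, time stays flat
    # Processors exhausted: each one carries more cells, so time grows linearly
    return t_base * n_cells / (n_procs * cells_per_proc)


if __name__ == "__main__":
    print(f"cells in 100 PB at 1 MB each: {cells_that_fit(100 * 10**15):.2e}")
    # Hypothetical machine: 1,000 processors, 100 cells each, 1 GB per processor
    for n in (50_000, 100_000, 200_000, 1_000_000, 2_000_000):
        print(n, weak_scaling_time(n, 1_000, 100, 10**9))
```

For this made-up machine, the relative time stays at 1.0 up to 100,000 cells, doubles at 200,000, and the function returns `None` at 2,000,000 cells because the model no longer fits in memory.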

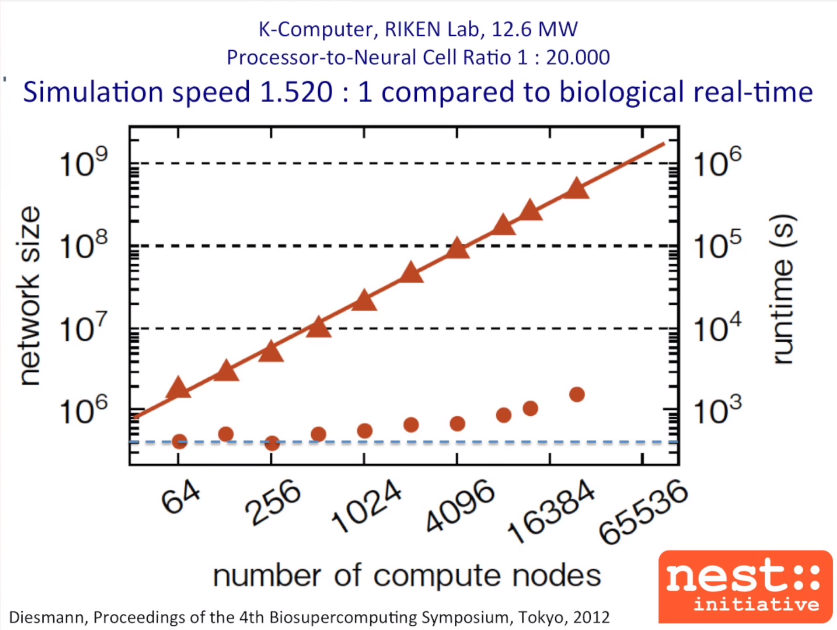

To drive home the point about the need to improve strong scaling in neuroscience, Meier described an effort on the K Computer at RIKEN in Japan, where researchers simulated 20,000 neurons per processor using a weak scaling approach. He showed that it did work, but because the run time was not independent of the problem size (the rule for true weak scaling), it would eventually peter out.
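The criterion Meier applies (run time independent of problem size) is commonly quantified as weak-scaling efficiency: the baseline run time divided by the run time at a larger scale, with the work per processor (such as the 20,000 neurons per processor in the K Computer runs) held fixed. In this minimal sketch, the timings are invented purely for illustration, and the 5% tolerance for calling a run "near-ideal" is an arbitrary assumption.

```python
from typing import List, Tuple


def weak_scaling_report(timings: List[Tuple[int, float]],
                        tolerance: float = 0.05) -> List[Tuple[int, float, bool]]:
    """Compute weak-scaling efficiency for runs with fixed work per processor.

    timings: (n_procs, wall_seconds) pairs; the first entry is the baseline.
    Returns (n_procs, efficiency, is_near_ideal) for each run.
    """
    _, base_time = timings[0]
    report = []
    for n_procs, seconds in timings:
        efficiency = base_time / seconds  # 1.0 means run time did not grow at all
        report.append((n_procs, efficiency, abs(1.0 - efficiency) <= tolerance))
    return report


if __name__ == "__main__":
    # Hypothetical timings, not data from the K Computer
    runs = [(1_024, 10.0), (8_192, 10.2), (65_536, 14.0)]
    for n_procs, eff, ok in weak_scaling_report(runs):
        print(f"{n_procs:>6} procs  efficiency {eff:.2f}  near-ideal: {ok}")
```

A falling efficiency as processors are added is exactly the "time depends on problem size" symptom that signals weak scaling is running out of road.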

While the results were not necessarily bad, Meier estimates that the K Computer, simulating 1 billion very simple neurons on 65,000 processors, would cover only about 1% of the size of a human brain. Such a simulation would draw 13 megawatts of power (our brains consume around 20 watts for the equivalent task) and would run 1,500 times slower than our own brains. Using the equation Energy = Power × Time, he reasons that simulating the human brain on one of today's most powerful supercomputers is 10 billion times less energy efficient than the brain itself.
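The Energy = Power × Time reasoning can be checked as a rough order-of-magnitude calculation. In the minimal sketch below, `energy_ratio` is a hypothetical helper and the inputs are the figures quoted above. Plugged in directly, they give a ratio of roughly 10^9 for the 1%-scale run alone; the 10-billion figure cited in the talk presumably rests on further assumptions that the article does not spell out, so treat this only as a sanity check on the scale of the gap.

```python
def energy_ratio(machine_power_w: float, brain_power_w: float, slowdown: float) -> float:
    """How many times more energy the machine spends than the brain for the same
    stretch of biological time, using E = P * t.

    The machine runs `slowdown` times longer, so its time factor is `slowdown`
    while the brain's is 1.
    """
    machine_energy = machine_power_w * slowdown  # P * t for the supercomputer
    brain_energy = brain_power_w * 1.0           # P * t for the brain
    return machine_energy / brain_energy


if __name__ == "__main__":
    # Figures quoted in the article: 13 MW, 20 W, 1,500x slower
    ratio = energy_ratio(13e6, 20.0, 1_500)
    print(f"energy ratio for the 1%-scale run: {ratio:.3g}")
```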

Computationally speaking, “learning is much more costly than the actual application and learning is the key for applications,” Meier says. “The only way to ever make use of artificial neural circuits derived from biology is to give them function through learning via closed-loop interaction with data.”

In his talk from the Exascale Application and Software Conference, Meier details how neuromorphic devices (as well as their various implementations by IBM and others) can get around the problems of weak scaling (and the challenges of strong scaling) to make the process of brain simulation in near real-time possible, if not more efficient.
