Storage giant EMC, soon to be part of the Dell Technologies conglomerate, declared that this would be the year of all flash for the company when it launched its DSSD D5 arrays back in February. It was not kidding, and as a surprise at this week's EMC World 2016 conference, the company gave a sneak peek at a future all-flash version of its Isilon storage arrays, which are also aimed at high performance jobs but which are designed to scale capacity well beyond that of the DSSD.

The DSSD D5 is an impressive beast, packing 100 TB of usable flash capacity into a 5U enclosure and delivering an aggregate of 10 million I/O operations per second across that flash with an average latency of 100 microseconds. As we have pointed out before, DSSD is about scale-in performance, adding flash to a system to eliminate the use of other kinds of storage to radically speed up an application with a relatively small dataset. (In fact, this is how Apple and Facebook first widely adopted PCI-Express flash cards to accelerate their databases.)

The forthcoming Isilon Nitro arrays won’t be as fast as the DSSDs, and that is so by design because Isilon is a scale-out NAS product and the whole point is to have capacity scale with reasonably fast performance across that scale. The thing to remember is that not all simulation and modeling, media processing, and similar HPC-style workloads work well with a parallel file system like Lustre or GPFS and they are really designed to work on NAS arrays with a regular file system. Or rather, something like Isilon’s OneFS file system that looks normal (meaning like NFS) but is a radically different beast under the covers.

Thus, the Isilon Nitro all flash arrays will pack a lot more capacity than the DSSDs and what EMC claims will be higher IOPS and lower latency compared to other petabyte-scale, blade architecture all flash arrays on the market. Chad Sakac, president of the VCE converged platform division at EMC, was intentionally vague for humorous effect about which vendor he was comparing to, but this comment seems to be aimed at the Pure Storage FlashBlades launched in March, which scale to 3.2 PB of capacity across 30 blades with various kinds of compute and flash storage in two 4U enclosures.

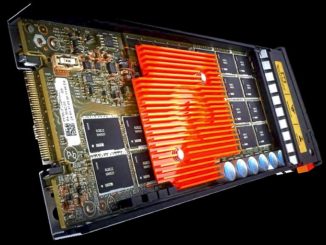

Like the FlashBlades, the EMC Isilon Nitro arrays are based on a bladed architecture that will mix compute and storage on a single element. EMC is purposefully holding back on the details for the Nitro architecture, but is offering a few tidbits of data to whet the appetite. The Nitro arrays will use 3.2 TB and 15 TB SSDs, allowing for 192 TB and 900 TB, respectively, to be housed in a 4U rack enclosure with 60 drives. EMC will be using SSDs from Samsung that are based on 3D NAND flash memory and using NVM-Express links to hook them into the array, which like other products is really a server.

Sakac said that the OneFS file system has been re-engineered to deal with all flash hardware, rather than the disk-based hardware Isilon relied on up until now, and would deliver 250,000 IOPS per node and 15 GB/sec of throughput. The latency on data retrievals for OneFS on disk arrays is somewhere on the order of 5 milliseconds to 10 milliseconds, and will be on the order of ten times faster – 500 microseconds to 1 millisecond – with the all flash Nitro array.

As for scale, Nitro is apparently going a lot further than the Pure Storage FlashBlade, which only scales to two enclosures at the moment. The Nitro array has eight 40 Gb/sec Ethernet interfaces to link to the outside world and another eight 40 Gb/sec ports to lash multiple nodes together in a cluster. The Nitro array will support multiple protocols, including SMB 3.X, NFS v3 and v4, HDFS, and object interfaces, and have features that are on disk-based Isilon arrays, such as snapshots, SyncIQ, and Cloud Pools. EMC talked about a scale-out version of the Nitro all flash array with 400 nodes with 1.5 TB/sec of aggregate throughput and 100 PB of capacity, but those latter two metrics don’t jibe with the single node performance. At 15 GB/sec per node, the throughput should be 6 TB/sec for 400 nodes, and using the 3.2 TB SSDs, that is 76.8 PB of capacity. Go figure.
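The mismatch is easy to check with back-of-the-envelope arithmetic. The sketch below uses only the per-node figures quoted above (60 drives, 15 GB/sec, 400 nodes) and decimal units; the function names are ours, and linear scaling across nodes is an assumption rather than something EMC has stated.

```python
# Per-node figures quoted for the Isilon Nitro preview (decimal units).
DRIVES_PER_NODE = 60
NODE_THROUGHPUT_GB_S = 15
MAX_NODES = 400


def cluster_totals(ssd_gb, nodes=MAX_NODES):
    """Return (aggregate throughput in TB/sec, raw capacity in PB).

    Assumes throughput and capacity scale linearly with node count.
    """
    throughput_tb_s = nodes * NODE_THROUGHPUT_GB_S / 1_000
    capacity_pb = nodes * DRIVES_PER_NODE * ssd_gb / 1_000_000
    return throughput_tb_s, capacity_pb


def nodes_for_throughput(target_tb_s):
    """Node count needed to reach a target aggregate throughput."""
    return target_tb_s * 1_000 / NODE_THROUGHPUT_GB_S


for label, ssd_gb in (("3.2 TB", 3_200), ("15 TB", 15_000)):
    tput, cap = cluster_totals(ssd_gb)
    print(f"{label} SSDs x {MAX_NODES} nodes: {tput:.1f} TB/sec, {cap:.1f} PB raw")

print(f"Nodes implied by 1.5 TB/sec: {nodes_for_throughput(1.5):.0f}")
```

On these assumptions, the quoted 1.5 TB/sec corresponds to 100 nodes rather than 400, and the quoted 100 PB falls between the 400-node totals for the two SSD sizes.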

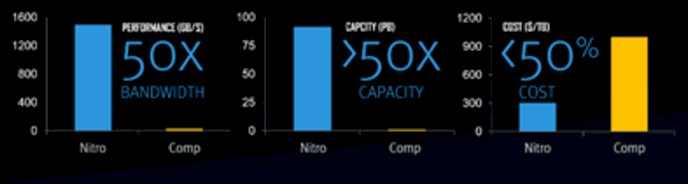

Here is the vague performance comparison that EMC is making, pitting Nitro against another blade all flash array:

This comparison is a little strange, but let’s pick it apart. Pure Storage, if this is indeed the competition that EMC is referring to, offers the FlashBlade in two versions: one with an 8 TB blade and one with a 52 TB blade. Assume EMC picked the skinnier blade to make its comparison. The FlashBlade then has 132 TB of raw capacity and 268 TB of usable capacity. The raw capacity difference is 45.5 percent in favor of Nitro versus FlashBlade for a single 4U unit. FlashBlade can have two enclosures now, which yields 264 TB raw compared to 76.8 PB for a Nitro with 400 nodes (if that is indeed the top-end scalability for Nitro). This is a factor of roughly 291X more capacity in a single image. If you compare the fatter configurations from both vendors, two enclosures using 52 TB blades from Pure Storage get 1.58 PB raw and 3.22 PB usable for the FlashBlade, and 400 nodes using 15 TB SSDs get you 360 PB raw for Nitro. That is still a 227X capacity difference. Not 50X.

Now, Pure Storage told The Next Platform back in March that it was going to have a new fabric module that allows for ten FlashBlade enclosures to be lashed together, which would yield 1.32 PB raw and 2.68 PB usable with data reduction algorithms using the 8 TB blades, and 7.92 PB raw and 16.1 PB usable for the 52 TB blades. The fattest 400-node Isilon Nitro array is 45X as capacious using raw storage as a ten-enclosure FlashBlade, which emphasizes the scale for sure that OneFS can bring. But the density per 4U node is 900 TB for the fat Nitro compared to 792 TB for the fat FlashBlade, or only 14 percent fatter.
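For readers who want to rerun the capacity comparisons, here is a minimal sketch using the raw per-enclosure figures cited above (192 TB and 900 TB for Nitro, 132 TB and 792 TB for FlashBlade). The "skinny" and "fat" labels and the helper names are ours, and the 400-node Nitro ceiling is the figure EMC floated, not a certified limit.

```python
# Raw TB per 4U enclosure, as cited in the article.
NITRO_NODE_TB = {"skinny": 192, "fat": 900}
FLASHBLADE_ENCLOSURE_TB = {"skinny": 132, "fat": 792}
NITRO_MAX_NODES = 400  # figure EMC talked about, not a certified limit


def raw_ratio(size, flashblade_enclosures, nitro_nodes=NITRO_MAX_NODES):
    """Nitro raw capacity divided by FlashBlade raw capacity."""
    nitro_tb = NITRO_NODE_TB[size] * nitro_nodes
    flashblade_tb = FLASHBLADE_ENCLOSURE_TB[size] * flashblade_enclosures
    return nitro_tb / flashblade_tb


def percent_more(a, b):
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100


print(f"Single 4U, skinny: {percent_more(192, 132):.1f} percent more raw")
print(f"Fat, 400 nodes vs 2 enclosures: {raw_ratio('fat', 2):.0f}X")
print(f"Fat, 400 nodes vs 10 enclosures: {raw_ratio('fat', 10):.0f}X")
print(f"Single 4U, fat: {percent_more(900, 792):.0f} percent more raw")
```

None of the ratios lands on 50X, which is the point: the multiple depends on which blade size and which cluster ceiling you pick.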

As for the assertion that Nitro offers 50X the bandwidth, both machines are offering 15 GB/sec of throughput per 4U node, so it comes down to the scalability difference of 10 nodes versus 400 nodes. That should be 40X across the maximum-sized clusters that Pure Storage and EMC can deliver.

And for cost, EMC is saying Nitro will cost around $300 per TB, compared to what looks like around $1,000 per TB for this unnamed competition. This is consistent with the $1 per raw GB figure that Pure Storage gave us back in March for its fattest blades, and if so, EMC is being aggressive on price at 30 cents per raw GB. (You can’t count on data reduction using deduplication and compression for many HPC and media workloads. So it is not wise to price based on these factors.)
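The unit conversion behind those price figures is simple enough to sketch. The per-TB prices are the ones cited above; the per-enclosure total is our own illustrative multiplication of raw capacity by price, not a list price from either vendor, and the function names are hypothetical.

```python
def dollars_per_gb(dollars_per_tb):
    """Convert $/TB to $/GB using decimal units (1 TB = 1,000 GB)."""
    return dollars_per_tb / 1_000


def enclosure_media_cost(raw_tb, dollars_per_tb):
    """Illustrative raw-capacity cost for one enclosure; not a quoted price."""
    return raw_tb * dollars_per_tb


nitro, rival = dollars_per_gb(300), dollars_per_gb(1_000)
print(f"Nitro ${nitro:.2f}/GB vs competitor ${rival:.2f}/GB ({rival / nitro:.1f}X)")
print(f"900 TB at $300/TB: ${enclosure_media_cost(900, 300):,}")
```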

This battle has not even started yet between EMC and Pure Storage, and the SolidFire unit of NetApp will enter the fray, too, perhaps. We shall see. Pure Storage did not set a shipment date for its FlashBlades, but hopes to have them selling in volume by the end of the year, while EMC is only saying that the Isilon Nitro arrays will come sometime in 2017. That is plenty of time for others to react with products they have in development.

Those scalability limits that EMC is talking about are pretty impressive, by the way. At the moment, the Isilon architecture can scale far, but has only been certified to 50 PB using 144 nodes based on disk drives. Incidentally, the X410 nodes have 36 drive bays with a 3.5-inch form factor that can use flash SSDs ranging in capacity from 400 GB to 1.6 TB each; disks spin at 7.2K RPM and come in capacities ranging from 1 TB to 4 TB. With a mix of disk and flash, the Isilon X410s deliver 1.2 GB/sec of sustained throughput per node, which means the all flash Nitro has 12.5X the bandwidth of the hybrid Isilon array. So with 2.8X the node scalability in a cluster and all that extra bandwidth, plus heaven only knows how many more IOPS, these are perhaps more meaningful numbers for Isilon customers to consider. If we had a price for Isilon hybrid arrays, that would be interesting to look at, too.
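Here is a rough sketch of that generational comparison, using the per-node throughput and node counts cited above. The combined cluster-level multiple is our own extrapolation and assumes throughput scales linearly with node count, which neither EMC nor this article claims.

```python
NITRO = {"gb_per_sec": 15.0, "max_nodes": 400}
X410 = {"gb_per_sec": 1.2, "max_nodes": 144}


def ratios(new, old):
    """Return (per-node bandwidth ratio, node-count ratio, cluster ratio)."""
    per_node = new["gb_per_sec"] / old["gb_per_sec"]
    nodes = new["max_nodes"] / old["max_nodes"]
    # Assumption: aggregate throughput scales linearly with node count.
    cluster = per_node * nodes
    return per_node, nodes, cluster


per_node, nodes, cluster = ratios(NITRO, X410)
print(f"Per-node bandwidth: {per_node:.1f}X")
print(f"Node scalability:   {nodes:.1f}X")
print(f"Cluster bandwidth (linear-scaling assumption): {cluster:.1f}X")
```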

latency of 100 milliseconds

You mean microseconds.

Correct! Thanks for the catch

Several years ago IBM sold a SAS drive product named “RDX”.

*That* was explosive.

HA!

“$1 per raw GB figure that Pure Storage” → Should be $1 per usable GB