Servers are still monolithic pieces of machinery and the kind of disaggregated and composable infrastructure that will eventually be the norm in the datacenter is still many years into the future. And that means organizations have to time their upgrade cycles for their clusters in a manner that is mindful of processor launches from their vendors.

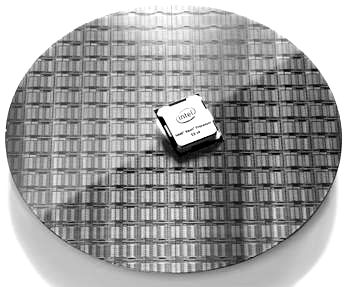

With Intel dominating the server these days, its Xeon processor release dates are arguably the dominant component of the overall server cycle. But even taking this into account, there is a steady beat of demand for more computing in the datacenters of the world that keeps servers flying off manufacturing lines and into glass houses at a reasonably steady pace, and this is what really drives the Xeon business. The Moore’s Law improvements that Intel delivers help make the upgrades more attractive, but the demand for more compute was going to be there no matter what it does – or doesn’t – do.

This momentum is a source of frustration for those who want to break into the datacenter through the server, such as the handful of ARM server chip makers, or those like IBM and its OpenPower partners, who want to revitalize an alternative to the Xeon. But it is surely a comfort to Intel and its server partners, particularly when it comes to a “tick” processor shrink, as is the case with the “Broadwell” Xeon E5 v4 processors that launched a month ago. Broadwell does not have a huge number of architectural features that make it a “must have now” chip, as the “Nehalem” Xeon chips from 2009 were and as the “Skylake” Xeon chips due next year could be. But the increase in performance and bang for the buck that comes with the Broadwells keeps the Intel platform on track, and the compatibility with prior “Haswell” Xeon E5 v3 processor sockets means it is a relative snap for server makers and their customers to qualify the new chips on existing systems.

With Dell and Hewlett Packard Enterprise being the two top shippers of Xeon systems in the world, we reached out to them to get a sense of how they expected the Broadwell chips to be adopted by customers and what kind of value proposition they were pitching to customers. (We have presented Intel’s own math on the Broadwell platforms already, which you can see here.)

Depending on the workload, a two-socket Broadwell system will deliver somewhere between 7 percent and 64 percent more oomph on the wide array of benchmark tests that the chip maker has used to characterize the performance of the chips compared to their Haswell predecessors, with the average being around 27 percent. (This is a SKU for SKU comparison, with most of that performance coming from the increase in cores.)

The View From Round Rock

Brian Payne, executive director of server solutions at Dell, tells The Next Platform that pricing for its 13th generation PowerEdge servers will depend on the processor selected and the memory capacity and speed, and that the company expects “to see a little pop” on the pricing, with maybe a 10 percent increase in the memory price for 2.4 GHz sticks on a gigabyte to gigabyte basis compared to slower sticks. “We will see a slight premium for a couple of quarters and then it will go away,” says Payne. At the system level, the premium that will come with a Broadwell system will depend on how much of this faster memory is put in the system, and the overall system price increase could be “pretty small if not negligible,” perhaps in the range of middle single digits.

So, for instance, if the average Broadwell machine yields 25 percent more performance thanks to more compute and faster memory, that is a bargain with only a 5 percent price hike. The point is, customers do not have to pay 25 percent more to get 25 percent more performance.
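To put a rough number on that bargain, here is a minimal sketch of the arithmetic. It assumes the illustrative 25 percent performance gain and 5 percent price hike from the example above; the function name is ours and hypothetical, not anything from Dell or Intel.

```python
def relative_cost_per_performance(perf_gain: float, price_increase: float) -> float:
    """Cost per unit of performance of the new system relative to the old one.

    A value of 1.0 means unchanged; below 1.0 means each unit of
    performance is cheaper than before.
    """
    return (1.0 + price_increase) / (1.0 + perf_gain)


# Illustrative inputs taken from the example in the text, not measured data.
ratio = relative_cost_per_performance(perf_gain=0.25, price_increase=0.05)
bang_for_buck_gain = 1.0 / ratio - 1.0

print(f"Relative cost per unit of performance: {ratio:.2f}")
print(f"Improvement in bang for the buck: {bang_for_buck_gain * 100:.0f} percent")
```

Under those assumptions, each unit of performance costs about 84 percent of what it did on the older machine, which works out to roughly 19 percent more performance per dollar.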

While it is possible to actually upgrade processors and memory in existing Haswell-based systems, Payne says that most IT shops do not actually do this – with the exception of the high frequency trading platforms in use at financial services companies, who are always looking for an edge and who are willing to pay for the hassle because the extra performance nets them out more money. Some HPC centers also occasionally do a literal processor upgrade inside of existing systems, too. But generally, what organizations do is add new capacity for existing or new workloads and then cascade older iron down to jobs that are less dependent on performance until the boxes are so long in the tooth they are sent to the crusher. Payne says that the obvious targets for Broadwell system upgrades are machines using “Sandy Bridge” and “Ivy Bridge” Xeons, but adds that there is still a portion of the PowerEdge base that is using “Nehalem” and “Westmere” Xeons and these are an even easier sell for a swap out.

Not all of the PowerEdge 13G machines will be getting a Broadwell option at the same time. Dell is rolling out the Broadwells into the PowerEdge R630, R730, and R730xd rack-mounted servers right now, and is also refreshing the PowerEdge M630 blade for the M1000 blade server chassis and the FC630 module for the FX2 modular system. The hyperscale-inspired PowerEdge C6320 will get the Broadwell Xeon now as well, and so will the PowerEdge C4130, which packs two Xeons and four Tesla GPU accelerators from Nvidia into a 1U chassis.

Perhaps the most interesting machine from Dell is a variant of the PowerEdge R730 rack-mounted system that has a pair of overclocked Xeon E5-2689 v4 processors that is aimed at high frequency trading. These E5-2689 v4 processors have ten cores that run at a 3.1 GHz base clock speed and that Dell is running at 3.7 GHz within a 165 watt thermal envelope. The neat thing about the way Dell has put this chip into the PowerEdge R730 server is that it can run it just using air cooling, and Payne says that he expects others will have to use water cooling in some fashion in their machines. By the way, on the standard Broadwell Xeon lineup, the frequency optimized parts come in at 3.5 GHz for a four-core, 3.4 GHz for a six-core, and 3.2 GHz for an eight-core, all within a 135 watt power budget. So you would expect something around a 3 GHz clock speed for a ten-core in the same 135 watt envelope, but Intel doesn’t sell such a thing as a standard part. There is a Xeon E5-2640 v4 that runs at 2.4 GHz with ten cores and the same 25 MB of L3 cache that the overclocked Xeon E5-2689 v4 has.
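The “around 3 GHz” guess for a hypothetical ten-core part can be sanity-checked with a simple straight-line fit through the three frequency-optimized parts quoted above. This is only a sketch: the linear trend is our assumption, not how Intel bins its chips, and the helper name is hypothetical.

```python
# (cores, base clock in GHz) for the 135 watt frequency-optimized parts in the text
points = [(4, 3.5), (6, 3.4), (8, 3.2)]


def fit_line(pts):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(points)

# Extrapolate the trend to ten cores (assumes clocks keep falling linearly).
ten_core_estimate = slope * 10 + intercept

print(f"Clock change per added core: {slope:.3f} GHz")
print(f"Estimated ten-core base clock at 135 W: {ten_core_estimate:.2f} GHz")
```

The fit loses about 0.075 GHz per added core and lands just under 3.1 GHz for ten cores, which is in line with the rough estimate in the text and with the 3.1 GHz base clock of the E5-2689 v4.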

Houston, We Have A Memory Problem

Over at HPE, the ProLiant Gen9 machines that launched in September 2014 with the Haswell Xeons are being updated with the Broadwell Xeons, and Tim Peters, general manager of ProLiant rack servers, server software, and core enterprise solutions at the company, tells us that the entry DL100 series, the workhorse DL360 and DL380 machines, the ML350 tower, and the Apollo density-optimized systems were all ready to use the new Broadwell chips by the end of April. (The Cloudline hyperscale machines made in conjunction with Foxconn are a separate product on their own cycles and are not under Peters’ purview.) Other ProLiants not mentioned above will see the Broadwells in time.

While HPE is obviously always game to use more CPU performance as a way to sell systems, the company is focused as much on memory in the ProLiant systems with the Broadwell rollout. The new 2.4 GHz DDR4 memory modules that are supported with the Broadwell Xeons help keep the extra cores in the processors well fed, and HPE has a new load reduced DIMM memory stick that weighs in at 128 GB of capacity that on memory-sensitive benchmarks has delivered a 23 percent improvement in performance over skinnier sticks in the Haswell generation of machines. With up to a dozen memory slots on a two-socket Xeon server, that means the memory capacity on a machine is now doubled up to 1.5 TB. Not that we expect many companies to go that far.

HPE has been hinting about persistent memory for the ProLiants for a while, too, and it turns out that the company is reselling 8 GB NVDIMM memory modules created by Micron Technology as its first persistent memory. (3D XPoint and other technologies will no doubt follow as they evolve.)

We told you about the Micron NVDIMMs back in December, which are special DRAM modules with flash on them for data persistence and supercapacitors to provide the power to flush data from the memory to the flash in the event that a server loses power. Micron figures that a certain percentage of the server workloads out there could use persistent storage to boost performance or simplify their programming model – somewhere on the order of maybe 10 percent of the base, or somewhere between hundreds of thousands and over 1 million units a year.

With the Broadwell Xeon launch, HPE created special drivers for Microsoft’s Windows Server 2012 R2 for the Micron NVDIMMs and also did BIOS tweaks for ProLiant machines that let the firmware see server failures coming and dump the contents of memory to the flash transparently without having to tweak the applications. On early tests running SQL Server database and Exchange Server collaboration software, HPE is seeing a 2X performance improvement on database and 63 percent on email using NVDIMMs compared to using flash-based SSDs hanging off the PCI-Express bus; latencies for the database transactions improve by a factor of 81X. And this is without optimizing the application code. Peters elaborates on how this works:

“The number of software instructions it takes to make one write for block storage to a hard drive with a database is 25,000, with five data copies. But the bottleneck is down in the storage media. When you take storage-class persistent memory and put it on the memory bus, now you are at nanosecond speeds instead of millisecond speeds, and what becomes the bottleneck? It is the software. If you take those applications where you are already getting a significant improvement and then you optimize the code to take only 3 instructions to make a byte-addressable write to DRAM-speed memory, you can see 4X improvements in performance. That is substantive.”

Windows Server 2016 and SQL Server 2016 will have native support for persistent storage (presumably both NVDIMM and 3D XPoint, and perhaps others) and the Linux community is no doubt working on adding this, too. Peters says that the way HPE sees it, the Micron NVDIMMs are focused more on performance, while 3D XPoint will be focused more on capacity. (We think both types could end up in machines, depending on the workloads, alongside both DRAM and flash-based SSDs.)

These ProLiant NVDIMM memory modules will start shipping later this month in ProLiant DL360 and DL380 machines, HPE’s most popular two-socket rack servers. Only HPE machines that have the Broadwell Xeons will be certified to support the NVDIMM features, which is not a technical necessity but rather a marketing one. HPE is not requiring the supercapacitors to use NVDIMMs, but is rather hooking into the in-node battery backup that it has for these ProLiant machines. To use the NVDIMMs, you have to have at least one memory stick of regular DDR4 DRAM in the system, and on machines with 24 memory slots, the NVDIMM count tops out at 16 slots, for a total capacity of 128 GB. Micron will soon be doubling up the capacity on the NVDIMMs and has 32 GB versions in the works, which could mean 512 GB of flash-backed DRAM per machine. Again, the capacity is not the point, but the persistence at main memory latency is.
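For those keeping score on the capacity math, here is a small sketch of how the per-machine NVDIMM totals fall out of the 16-slot ceiling. The 16 GB module size is our reading of “doubling up” from today’s 8 GB parts, and the names in the code are hypothetical.

```python
# Maximum NVDIMM slots on a 24-slot machine, per the figures in the text.
NVDIMM_SLOTS = 16


def nvdimm_capacity_gb(module_gb: int, slots: int = NVDIMM_SLOTS) -> int:
    """Total persistent memory per machine for a given NVDIMM module size."""
    return module_gb * slots


# 8 GB ships now; 16 GB is assumed from "doubling up"; 32 GB is in the works.
capacities = {size: nvdimm_capacity_gb(size) for size in (8, 16, 32)}

for size, total in capacities.items():
    print(f"{size} GB modules x {NVDIMM_SLOTS} slots = {total} GB per machine")
```

That gives 128 GB with the current modules and 512 GB with the 32 GB versions, matching the figures above, with the remaining eight slots left for regular DDR4 DRAM.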

As far as we know, HPE has not launched a Broadwell Xeon system aimed at high frequency trading, but the company has launched an Apollo r2600 system based on Intel’s Haswell Xeon E5-1680 v3 processors that is overclocked to boost its performance. These chips have eight cores that have a base frequency of 3.2 GHz and a maximum turbo frequency of 3.8 GHz, but HPE engineers are overclocking them up to as high as 4.6 GHz. On Monte Carlo financial simulations, applications saw a boost of between 17 percent and 26 percent using the overclocked chips, and Black-Scholes derivative pricing applications saw a boost of between 11 percent and 28 percent. The Apollo r2600 has four XL170 server nodes, each of which has a single processor plus 32 GB of memory (on four sticks) and a single 500 GB 7.2K RPM SATA drive. The four-sled enclosure has two 1,400 watt power supplies.
