If component suppliers want to win deals at hyperscalers and cloud builders, they have to be proactive. They can’t just sit around and wait for the OEMs and ODMs to pick their stuff like a popularity contest. They have to engineer products with great performance and then do what it takes on price, power, and packaging to win deals.

This is why memory maker Micron Technology is ramping up its efforts to get its DRAM and flash products into the systems that these companies buy, and why it is also creating a set of “architected solutions” focused on storage that are created in its new Austin, Texas lab in conjunction with partners such as Supermicro and Nexenta. Micron needs such partnerships if it wants to compete against the default, knee-jerk purchase of Intel flash drives inside Xeon systems that often have Intel motherboards as well as processors and chipsets and – someday soon – 3D XPoint memory and storage.

While Nexenta has products aimed at midrange companies and the edge of the network, Supermicro has its share of hyperscale and cloud customers (its biggest being IBM’s SoftLayer cloud) and Micron is keen on using the combined technical prowess and marketing push of the two companies to get tuned-up storage servers for different kinds of workloads into datacenters at a much larger scale.

Micron is hosting a big shindig in that Austin center today, with all the company’s top brass on hand to roll out its first line of flash devices supporting the NVM-Express protocol. NVM-Express is a rejiggered means of linking flash devices directly to the processor complex over the PCI-Express bus, bypassing media controllers and drivers that were really designed for rotating rather than nonvolatile media, and thereby providing much lower latency for all kinds of applications.

Flash For The Masses, Two Ways

Like most component vendors these days, Micron has to customize its product line to meet the distinct needs of the enterprise and hyperscale customers that it is chasing, and the new NVM-Express flash cards and drives that the company is launching today are no exception. The new 9100 series is aimed at enterprise customers who want to use flash as a cache between compute and spinning rust storage within a system, or as a fast storage pool in a storage hierarchy supporting many systems.

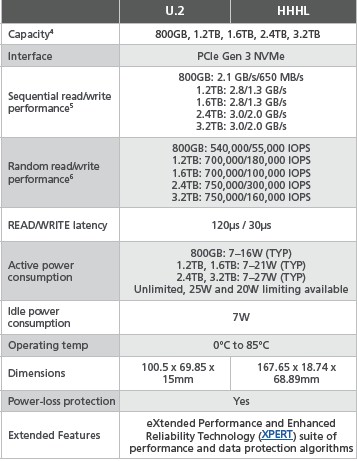

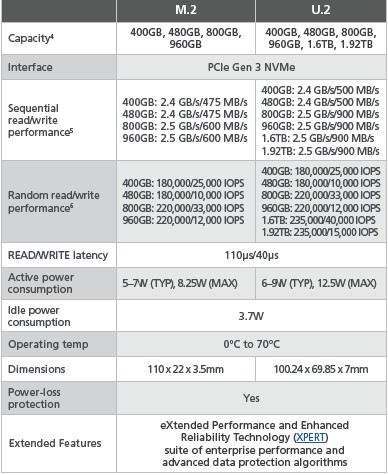

“The 9100s are important for us because we are filling out our storage portfolio and these are our first NVMe drives,” Eric Endebrock, vice president of storage solutions marketing at Micron, tells The Next Platform. The 9100s come in two flavors, a 2.5-inch U.2 form factor that slides into drive bays in servers and a half-height, half-length PCI-Express card, and here are the feeds and speeds of the units:

The capacity and performance of the 9100 series are the same regardless of form factor, and these devices have an idle power draw of 7 watts. But when they are fired up and working hard, they can pull as much as 21 watts to 27 watts (depending on the capacity), and that is not necessarily low enough for the typical hyperscaler or cloud builder. (More on that in a moment.) The 9100 series is based on Micron’s own flash chips, of course, and comes in capacities ranging from 800 GB up to 3.2 TB. It is offered in read-centric versions, called Pro, and mixed-use versions, called Max, that at the high end deliver about the same I/O operations per second as a disk-based storage array using two dozen 15K RPM disk drives.

Micron is only sampling the 9100 series now and will ship them in volume later this year, and pricing will be available at that time. It is going to have to be pretty low to compete with Intel and SanDisk, who are both storming the datacenter in search of revenues and profits and who are also making their own partnerships to ensure that their flash is the one that gets in the glass house door rather than those from Micron or anyone else.

While the hyperscalers were among the first companies to adopt flash memory in volume, mostly to accelerate their databases and more recently to add a caching layer to their distributed file systems, they are no longer interested in the PCI-Express flash card form factor. Instead, they want smaller form factor 2.5-inch flash units and M.2 memory sticks, which plug directly into servers and are often used for local operating systems as well as local caching. We presume that NVM-Express, which is just making its way into the enterprise, is already widespread among the hyperscalers, who adopted it early. Facebook has talked about its “Lightning” all-flash array recently and donated it to the Open Compute Project, and we presume the other hyperscalers have their own variants on the theme.

We wonder why there is not an M.2 array being developed; perhaps it is because the tooling for 2.5-inch drives in storage servers is already done. What hyperscalers seem to want to do is stack up skinnier 2.5-inch U.2 flash drives and then double stuff them into their existing machines.

“Even with 2.5-inch drives, we have been asked to provide a lower Z height,” says Endebrock. “The average U.2 drive is 15 millimeters tall, but the 7100 series drives are 7 millimeters tall. There are not many platforms to do that stacking yet, but that is what some of these companies want to do. Whether they do it as M.2s or ultradense, they are looking to fit more into less space.” The other issue, says Endebrock, is the power consumption of a PCI-Express slot, which as we pointed out above can be as high as 27 watts for a PCI-Express flash card. By contrast, these U.2 and M.2 hyperscale flash devices top out at 12.5 watts, with an idle power consumption of 3.7 watts.

Here is how the 7100 flash devices from Micron stack up:

As you can see, the capacity of these devices ranges from 400 GB to 1.92 TB, roughly half the capacity of the PCI-Express cards in the 9100 series that are aimed at enterprises. So the power advantage per bit stored is not as large as the gap in peak wattage might suggest. But it is still significant, and hyperscalers and cloud builders will definitely switch technologies for a 10 percent or 20 percent advantage in price/performance per watt. The 7100 series has a 110 microsecond read latency, a bit faster than the 120 microsecond read latency of the 9100 series, but at 40 microseconds for writes it is a little slower than the 9100 series, which comes in at 30 microseconds.
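To make the per-bit argument concrete, here is a back-of-envelope sketch in Python. It assumes, since the figures above do not break power out per SKU, that the peak wattage of each line (27 watts for the 9100, 12.5 watts for the 7100) pairs with its largest capacity (3.2 TB and 1.92 TB respectively); the `FlashLine` type is a hypothetical helper for illustration.

```python
# Back-of-envelope watts-per-terabyte comparison of Micron's 9100
# (enterprise) and 7100 (hyperscale) NVMe lines.
# ASSUMPTION: peak and idle wattage pair with the largest capacity in
# each line; per-SKU power figures are not given.
from dataclasses import dataclass


@dataclass(frozen=True)
class FlashLine:
    """Hypothetical helper describing one product line."""
    name: str
    max_capacity_tb: float  # largest capacity in the line, in TB
    idle_watts: float
    peak_watts: float

    def peak_watts_per_tb(self) -> float:
        return self.peak_watts / self.max_capacity_tb

    def idle_watts_per_tb(self) -> float:
        return self.idle_watts / self.max_capacity_tb


series_9100 = FlashLine("9100", max_capacity_tb=3.2, idle_watts=7.0, peak_watts=27.0)
series_7100 = FlashLine("7100", max_capacity_tb=1.92, idle_watts=3.7, peak_watts=12.5)

for line in (series_9100, series_7100):
    print(f"{line.name}: {line.peak_watts_per_tb():.2f} W/TB peak, "
          f"{line.idle_watts_per_tb():.2f} W/TB idle")

# Compare the raw peak-wattage gap with the gap per terabyte stored.
raw_ratio = series_9100.peak_watts / series_7100.peak_watts
per_tb_ratio = series_9100.peak_watts_per_tb() / series_7100.peak_watts_per_tb()
print(f"peak watts ratio: {raw_ratio:.2f}x, peak W/TB ratio: {per_tb_ratio:.2f}x")
```

Under that assumption, the roughly 2.2X gap in peak wattage shrinks to about 1.3X on a watts-per-terabyte basis, which is why the advantage per bit is smaller than the headline numbers suggest.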

The 7100 series are also sampling now and available later this year in volume, and we presume that the cost per GB of these devices at the street level is also lower given the targeted customer and the number of devices they tend to buy. (At list prices, the cost per GB could be about the same on the 7100 and the 9100.)

These components will be the foundation of Micron’s push into the datacenter, but the company is also working with partners to get its DRAM and NVDIMM products into machines. (We took a look at Micron’s NVDIMM persistent memory back in December.) The only key parts of a server or storage server that Micron does not sell are CPUs and disks, but three out of five ain’t bad. (We would add Hybrid Memory Cube to the list, too, but that is a special kind of DRAM that is not yet widely available in a server.)

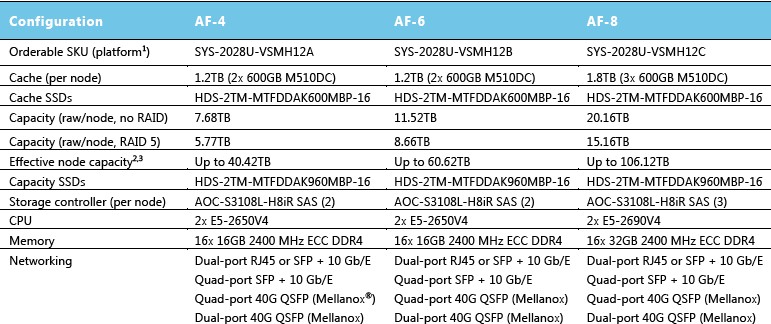

To help ensure that its products end up in modern storage, Micron is working with whitebox server maker Supermicro to cook up certified and tested configurations of all-flash storage servers running VMware’s VSAN 6.2 hyperconverged storage and Red Hat’s Ceph object and block storage. In both of these cases, the arrays use Micron’s M510DC enterprise-class 2.5-inch SATA flash drives, which were announced last year.

Supermicro and Micron have cooked up three different configurations with the VSAN 6.2 stack, which we detailed back in February, as below:

These configurations assume that the storage is configured with RAID 5 data protection and that the VSAN software can attain a 7:1 data reduction and drive the cost of the overall setup down to 44 cents per GB. With an effective capacity of 106.1 TB, that comes to $46,700, and that is less than half the price that VMware was saying it could get, all in including hardware and software, at $1 per GB when VSAN 6.2 was launched.
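The cost figure is simple multiplication, sketched below in Python as a sanity check. The only assumption is decimal units (1 TB = 1,000 GB), which is how storage capacity is usually quoted; the constant and function names are illustrative.

```python
# Sanity-check the VSAN 6.2 cost figures quoted above.
# ASSUMPTION: decimal units, 1 TB = 1,000 GB.

EFFECTIVE_TB = 106.1              # effective capacity after 7:1 data reduction
COST_PER_GB = 0.44                # dollars per effective GB (Micron/Supermicro)
VMWARE_LAUNCH_COST_PER_GB = 1.00  # VMware's all-in figure at VSAN 6.2 launch


def total_cost(effective_tb: float, dollars_per_gb: float) -> float:
    """Total dollars for a given effective capacity at a per-GB price."""
    return effective_tb * 1_000 * dollars_per_gb


total = total_cost(EFFECTIVE_TB, COST_PER_GB)
fraction_of_launch = COST_PER_GB / VMWARE_LAUNCH_COST_PER_GB

print(f"total cost: ${total:,.0f}")
print(f"fraction of VMware launch price: {fraction_of_launch:.0%}")
```

The product comes to about $46,684, which rounds to the $46,700 cited, and 44 cents is 44 percent of the $1 per GB figure, hence "less than half the price."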

The Supermicro servers that are used as the basis of this VSAN platform have a total of 24 drive bays. In the top-end AF-8 machine, three 600 GB M510DC drives form the cache layer and 21 M510DC drives with 960 GB capacity are used for storage. The AF-8 configuration has three drive sets, each with one cache drive and seven capacity drives. These systems are configured with the just-announced “Broadwell” Xeon E5 v4 processors from Intel.
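The AF-8 layout can be checked the same way. This Python sketch models one server as three identical drive sets and confirms that they fill the 24-bay chassis; the `DriveSet` type is a hypothetical helper, and the capacity total is raw flash per server, before RAID 5 protection and data reduction are applied.

```python
# Model the AF-8 drive layout: three drive sets, each with one cache drive
# and seven capacity drives, in a 24-bay Supermicro chassis.
# DriveSet is a hypothetical helper for illustration only.
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveSet:
    cache_drives: int
    cache_drive_gb: int
    capacity_drives: int
    capacity_drive_gb: int

    @property
    def bays(self) -> int:
        return self.cache_drives + self.capacity_drives


AF8_SET = DriveSet(cache_drives=1, cache_drive_gb=600,
                   capacity_drives=7, capacity_drive_gb=960)
SETS_PER_SERVER = 3
CHASSIS_BAYS = 24

bays_used = SETS_PER_SERVER * AF8_SET.bays
cache_gb = SETS_PER_SERVER * AF8_SET.cache_drives * AF8_SET.cache_drive_gb
# Raw capacity tier per server, before RAID 5 and 7:1 data reduction.
raw_capacity_gb = SETS_PER_SERVER * AF8_SET.capacity_drives * AF8_SET.capacity_drive_gb

if bays_used > CHASSIS_BAYS:
    raise ValueError("drive sets do not fit in the chassis")
print(f"bays used: {bays_used}/{CHASSIS_BAYS}, "
      f"cache: {cache_gb} GB, raw capacity: {raw_capacity_gb} GB")
```

Three sets of eight drives use all 24 bays, giving 1.8 TB of cache and 20.16 TB of raw capacity flash per server.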

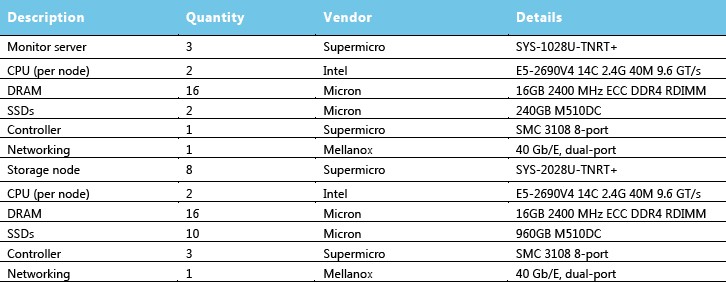

As for object storage, Micron and Supermicro are tapping the open source Ceph, and have cooked up an all-flash cluster that looks like this:

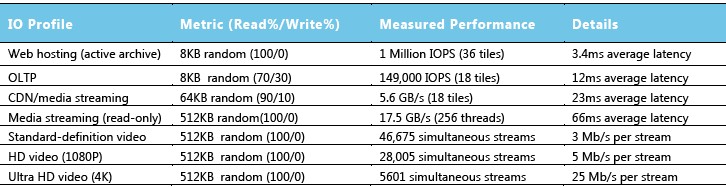

This setup has eight storage nodes, again using the current M510DC flash drives, plus three Ceph controller nodes and 40 Gb/sec Ethernet switching from Mellanox Technologies hooking them all together. Here is the performance of the Ceph cluster on various workloads where object storage is used:

Endebrock tells us that Micron will be working with Supermicro to add 7100 and 9100 series flash to the machines, offering variants that have higher performance or that have lower power consumption. Pricing for the Ceph cluster was not revealed.
