Over the last several Top 500 rankings of the world's most powerful supercomputers, the conversation in high performance computing has shifted from hardware optimization to data and workflow tuning. Achieving high floating point performance is far less complicated these days than tuning the hardware and software stacks of HPC systems for real application performance. As a result, all major HPC vendors are focusing on data movement issues, both in their supercomputing lines and, in many ways, in their commercial products outside HPC.

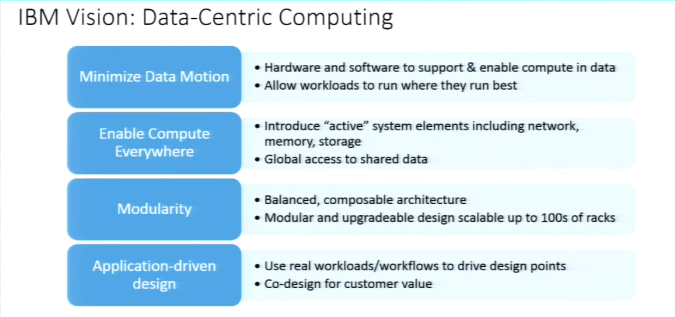

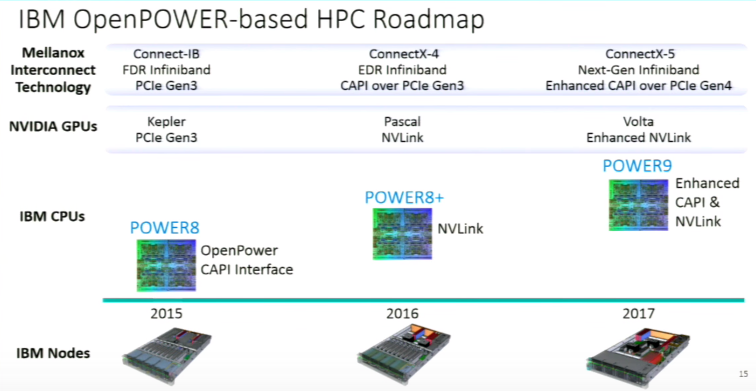

For IBM, this focus takes the form of its “data-centric” approach, which will be showcased in two massive systems in particular as part of the CORAL national lab procurements. The two machines, one at Oak Ridge National Lab and the other at Lawrence Livermore National Lab, total $325 million and will feature the Power9 architecture with Nvidia Volta acceleration and Mellanox EDR InfiniBand when they are installed in 2018. That is expected to mean a 5X-10X performance improvement over the current top systems at both labs, including the #2 ranked Titan machine at Oak Ridge National Lab.

This new architectural direction comes with programming and other hurdles for both IBM and the national labs that will soon house some of Big Blue’s largest new supercomputers, but the real test over the long haul will be how IBM manages to pull its HPC strategy together. That effort hinges on the data-centric approach, but the company needs a broader ecosystem outside of itself to carry it out effectively. The OpenPower Foundation will therefore continue making waves, some of which we expect will reach the shores this week in our coverage of the OpenPower Summit, which takes place alongside Nvidia’s GPU Technology Conference.

“Innovation around HPC is slowing down a bit; the application performance we are getting is not that great,” Klaus Hottschalk, HPC Architect at IBM, noted during the HPC Advisory Council meeting in Lugano, Switzerland. “One of the things we are trying to address is how we can bring that innovation back,” he said, and specifically how trends in hyperscale and cloud will influence IBM’s Power systems strategy going forward. “There are requests coming in for making use of public clouds and incorporating those into classical HPC environments,” he added. That, coupled with a continued drive toward open source and a hyperscale datacenter model, will change the way IBM approaches the supercomputing market, both for Top 500 academic machines and for commercial HPC.

“A lot of centers are still just adding capacity and running out of power and cooling, but the applications and data movement are the most important. Our strategy is to move into the HPC market with Power again, but the important thing is that we are not doing it alone—the OpenPower Foundation is central in creating an ecosystem over the next several years.”

IBM has been working with common HPC codes over the last several months to determine how the current Power architecture performs and how portable applications are. Power performs significantly better when the application is memory bandwidth-dependent, Hottschalk says. “For most applications, we are doing quite well; there are some that are optimized for AVX only, which I don’t consider portable any longer. We did get a comment in this process that the customer was able to generate an executable for Power in one day.”

“We never moved out of HPC. Even though we decided to sell the X86 division to Lenovo, it was never our intention to move out of this area. Power is the platform we are bringing back and even now, there are still BlueGene systems that are visible on the Top 500.”
