There is no question that Docker is emerging as the dominant container format and runtime for packaging software that is built and deployed the modern microservices way, and that container podding systems like Google’s Kubernetes or Docker’s Swarm are useful for managing the collection of containers that expresses an application. But that doesn’t mean these elements make up a complete system.

Myriad ways of skinning this container management cat are emerging as companies see the problems and smell the money. Microsoft, Google, and Amazon Web Services have built container management systems for their public clouds, and Google, of course, has more than a decade of experience running its own Linux-based containers internally under its Borg cluster and container controller. Docker, the company, and CoreOS have also woven together management, monitoring, scheduling, and podding elements to make something akin to Borg for the enterprise: Docker Datacenter from the former and Tectonic from the latter. Seen a certain way, the Apache Mesos cluster controller and the commercial-grade Data Center Operating System that Mesosphere builds on top of it also make up a mostly complete container management system. This is by no means an exhaustive list. But one tool that can without a doubt be added to the list of contenders for the title of enterprise Borg is the private container service called Rancher, which, according to the project’s founders, is ready for primetime starting today.

The name Rancher is illustrative, since it embodies the notion that infrastructure like servers, virtual machines, or containers should be treated like cattle (numbered, and basically interchangeable as far as our purposes go) and not like pets, which we name and love and have difficulty letting go of. (We only ever shut our servers down when they died, so we know what server pets are like. We still miss their persistent whirr... but only out of nostalgia, not because we enjoyed the hassle of systems management.)

As the Rancher stack reaches its milestone 1.0 release today, The Next Platform sat down with project founders Sheng Liang, who is the company’s CEO, and Shannon Williams, vice president of sales and marketing, to talk about Rancher’s explosive growth over the past year as the software has matured. Both Liang and Williams have long histories in systems software, and notably they held the same roles at Cloud.com, which created a cloud controller that predates OpenStack. That controller was eventually renamed CloudStack and opened up, and Cloud.com was acquired by Citrix Systems. CloudStack lost the battle with OpenStack to become the dominant open source cloud controller, but the fight is shifting from virtual machines to containers, so Liang and Williams are not crying too much about that as Rancher ramps.

Rancher was founded in September 2014 after the two did some consulting engagements with big banks and other large enterprises that were trying to figure out how to use software containers on top of their virtualized infrastructure, whether that was CloudStack or VMware ESXi/vSphere on premises or the AWS public cloud. The work predated the rise of Docker and involved LXC Linux containers (which are based on work done by Google as it created its own container formats).

“This really did not go very far,” Williams tells The Next Platform. “There were clearly a lot of technical challenges and limitations to overcome before you could write an application for containers and consistently run it. But we were still totally enamored of the idea. To our way of thinking – and Liang is from Sun Microsystems, where they invented software containers for Solaris a long time ago – we have been moving away from containers and now we are moving back towards them. The idea that Linux was running everywhere but you still could not write the application once and run it anywhere was really frustrating. What is so great is that every time you dig into this stuff, you realize that we are not creating new concepts in computing, it is just about applications and usability and solving actual problems. I don’t think this exists in a world where you just have VMware, which is not all that useful. Sure, you can have small VMs and big VMs, but it is not enormously useful until you are dealing with clouds and DevOps and you are creating 30 instances of something where you realize that you should probably use something other than a server or a VM as the level of abstraction. Then the container becomes the means of application portability and the industry asks, ‘Why didn’t we do this to begin with?’”

The short answer is: because, humans. We solve the problems that are in front of us, and low server utilization because operating systems did not have proper containers was a big deal just as the Great Recession was taking off. Now that virtual infrastructure is normal, we can go back and do it right. And that might mean a mix of virtual machines and containers in the short run but something else in the long run.

“We are definitely seeing the vast majority of users run Rancher on virtualized infrastructure, with most of that being on Amazon Web Services, followed by VMware and maybe a little OpenStack here and there, but it is not very common,” says Liang. “The people who tend to deploy Rancher and Docker are the apps teams, and the people who deploy OpenStack and VMware tend to be the infrastructure team. Over time, I think it could change and people will realize that this extra layer might not be necessary. But we are not there yet, or even close. Today, the state of the art requires a virtualization layer for multitenancy and isolation, but nobody knows where the technology will go. When there is a technology problem with a business justification, I think it will be solved.”

Companies are clearly looking for something that turns Kubernetes or Swarm into a true container management service. In November 2014, only a few months after founding the company, Liang and Williams put out the alpha release of Rancher tools and let 200 companies play around with it to give them feedback on how to further develop it. In June last year, Rancher uncloaked from stealth mode, announced it had secured $10 million in its first round of venture funding from Mayfield Fund and Nexus Venture Partners, and opened up a beta for the Rancher tool. Between then and the 1.0 release today, more than 2,500 companies have gone through the formal beta, there have been over 600,000 downloads of the open source tool, and over 1.5 million agents deployed on virtualized infrastructure.

One of the customers that has been out on the bleeding edge of both Docker and Rancher is a software provider called Pit Rho, which among other things provides a set of Python-based predictive analytics tools that NASCAR and other auto racing circuits use to develop driving strategies to win races. The challenge for Pit Rho is that sometimes its application runs in a cloud and sometimes it runs on a local server cluster that is actually down in the pit, ensuring the low latency and local processing that NASCAR teams require. So two and a half years ago, long before Docker was production grade, Pit Rho took its lumps and put Docker into production anyway, because its need was too pressing to wait for the software to mature.

“When you get into the cloud, you get into a state of complacency where you assume a deployment will go consistently,” explains Gilman Callsen, co-founder of the company. “But if you are talking about a server that has intermittent access to the Internet and they can go up and down without you having any idea when this might happen, things can fail silently and you won’t have any idea. That’s where Docker came into play for us – if we install software, we know it will run consistently.”

Having adopted Docker, Pit Rho needed a container service, so last fall, after the Rancher beta had been out for a while, it signed up, got on the bleeding edge there too, and put Rancher into production. “It immediately became one of those things that we just had to use,” says Callsen.

The typical Pit Rho customer has clusters with maybe 10 or 20 servers running the Pit Rho predictive analytics software – these are not huge clusters – but the same principles apply for larger scale organizations.

The Borg For Enterprise

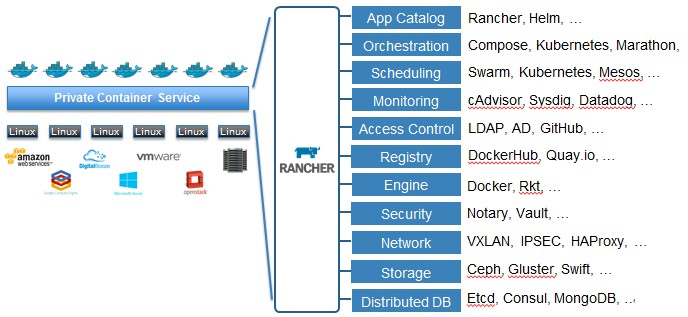

If you wanted to build a modern platform, as we have discussed in detail before at The Next Platform, there are a lot of different open source tools that you could cobble together to create that stack. A tool like Rancher would be part of that stack, of course, but there is more to it. Even for a private container management service akin to the Kubernetes-based one that Google has for Compute Engine, the Mesos-based one that Microsoft has for Azure, or the one (presumably based on homegrown code) that AWS has for EC2, there are a lot of elements that companies can choose from. (Yelp has created its own Docker container service from piece parts and additional code, as have many other organizations that have such skills.)

Here is how Liang and Williams stack up the layers in a container management system:

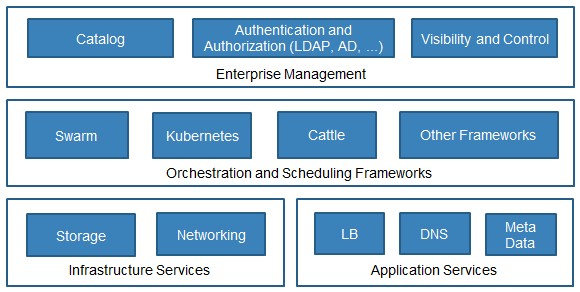

The idea with Rancher is not to try to tackle the entire software development, deployment, and infrastructure management task, but to orchestrate and provision the compute, storage, and networking for podded containers, which are in turn managed by software such as Rancher’s homegrown Cattle container herder or the Google Kubernetes or Docker Swarm alternatives. Like this:

The assumption that Liang and Williams make is that companies will write and share code through GitHub, use Jenkins as a build manager, and package up and distribute working code through Docker Hub, either the public one or a private one. Rancher takes over from there, abstracting away public cloud infrastructure like AWS or private infrastructure such as clusters equipped with VMware ESXi hypervisors, and orchestrating containers as they move from test to deployment to operations to upgrade to redeployment and so on.

Rancher itself is written in a mix of Python, Go, and Java, and the software is completely open source. (The company is not pursuing an open core model for the moment, but as it adds features, it may be tempted to do so to earn more than support fees from its open source software.) The container service currently supports Kubernetes and Swarm for container podding; both are open source and can be embedded in Rancher fairly easily. Based on customer input, Mesos could be added soon, and there is even some interest in the container scheduler at the heart of HashiCorp’s Atlas platform. The ability to have multiple schedulers for workloads is one of the key innovations that Google came up with for Borg. (Or, more precisely, the personal preferences of techies and the differing scheduling needs of different workloads gave Google no choice but to allow for multiple schedulers.) We can envision Rancher making use of other schedulers, perhaps the Grid Engine HPC scheduler from Univa, which is at the heart of Univa’s own Navops container management system. Perhaps even more importantly, Rancher will provide an overlay on top of these container schedulers and pod systems to give a single view and point of control for a mix of different cluster types across a mix of infrastructure, just as Borg does for Google internally.
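One way to picture that overlay is as a thin control layer that keeps a single view of where everything runs while handing each workload to whichever scheduler it asks for. The Python sketch below is purely illustrative: `Workload`, `Scheduler`, `ToyScheduler`, and `Overlay` are hypothetical names, the backend labels are just strings, and nothing here reflects the actual code or APIs of Rancher, Borg, Kubernetes, or Swarm.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


@dataclass
class Workload:
    name: str
    scheduler: str  # label of the backend this workload asks for (hypothetical)
    replicas: int = 1


class Scheduler(Protocol):
    """Hypothetical interface that every pluggable backend implements."""

    def place(self, workload: Workload) -> List[str]:
        ...


@dataclass
class ToyScheduler:
    """Stand-in backend: spreads replicas round-robin over its own hosts.

    It does not talk to any real Kubernetes or Swarm cluster.
    """

    hosts: List[str]

    def place(self, workload: Workload) -> List[str]:
        if not self.hosts:
            raise ValueError("backend has no hosts")
        return [self.hosts[i % len(self.hosts)] for i in range(workload.replicas)]


@dataclass
class Overlay:
    """Single point of control that delegates to whichever backend a workload names."""

    backends: Dict[str, Scheduler] = field(default_factory=dict)
    placements: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, label: str, backend: Scheduler) -> None:
        self.backends[label] = backend

    def submit(self, workload: Workload) -> List[str]:
        if workload.scheduler not in self.backends:
            raise KeyError(f"no scheduler registered as {workload.scheduler!r}")
        hosts = self.backends[workload.scheduler].place(workload)
        # Record every placement in one unified view, regardless of backend.
        self.placements[workload.name] = hosts
        return hosts


overlay = Overlay()
overlay.register("kubernetes", ToyScheduler(hosts=["aws-1", "aws-2"]))
overlay.register("swarm", ToyScheduler(hosts=["vsphere-1"]))
overlay.submit(Workload("web", "kubernetes", replicas=3))
overlay.submit(Workload("batch", "swarm", replicas=2))
print(overlay.placements)
# {'web': ['aws-1', 'aws-2', 'aws-1'], 'batch': ['vsphere-1', 'vsphere-1']}
```

A real overlay would also have to reconcile health, networking, and storage across backends; the sketch only shows the routing-plus-single-view structure.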

For persistent storage, which is an issue for container platforms, Liang says that NFS is the default but that container-aware storage such as ClusterHQ’s Flocker companion to Docker will eventually be integrated.

In general, you can expect different open source components to be added at the different layers of the container service as customers demand them, much as the Hadoop data analytics stack these days has alternatives for just about every function of the framework.

Rancher is providing tech support for its eponymous container service software, and rather than pricing by server node count, the company prices by logical CPU, whether that is a core on a physical machine or a virtual CPU on a public or private cloud. A support contract for a bundle of 1,000 logical CPUs costs $25,000 per year.
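That quoted price works out to $25 per logical CPU per year. The short Python sketch below does that arithmetic and estimates an annual bill for a given CPU count. Two things in it are our assumptions rather than anything Rancher has stated: that support is bought only in whole 1,000-CPU bundles (the only price point given is the one bundle), and the hypothetical 16 logical CPUs per server used in the example.

```python
BUNDLE_CPUS = 1_000        # logical CPUs per support bundle (quoted price point)
BUNDLE_PRICE_USD = 25_000  # annual price per bundle (quoted price point)


def annual_support_cost(logical_cpus: int) -> int:
    """Estimated yearly cost, ASSUMING support is sold only in whole 1,000-CPU bundles."""
    if logical_cpus < 0:
        raise ValueError("logical_cpus must be non-negative")
    # Integer ceiling division: round up to the next whole bundle.
    bundles = (logical_cpus + BUNDLE_CPUS - 1) // BUNDLE_CPUS
    return bundles * BUNDLE_PRICE_USD


# Effective price per logical CPU per year at a fully used bundle.
per_cpu = BUNDLE_PRICE_USD / BUNDLE_CPUS
print(per_cpu)                       # 25.0
# Hypothetical Pit Rho-sized cluster: 20 servers x 16 logical CPUs = 320.
print(annual_support_cost(20 * 16))  # 25000
print(annual_support_cost(2_500))    # 75000
```

Under the whole-bundle assumption, a small 10 to 20 server cluster pays for a full bundle, so its effective per-CPU price is well above $25.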
