The tick-tock of Intel’s Xeon server chip product cycles means that some generations are more important than others, and while we welcome the impending “Broadwell” Xeon E5 v4 chips, it is safe to say that the confluence of the “Skylake” Xeon E5 v5 chips with a slew of new memory and fabric technologies will quite possibly make next year the most transformative we have seen in systems since the “Nehalem” Xeon launch back in March 2009.

Server maker Dell won’t pre-announce its Broadwell or Skylake products, and Broadwell is basically a socket upgrade for existing PowerEdge 13G systems that have been using “Haswell” Xeon E5 v3 chips for the past year and a half. But Ashley Gorakhpurwalla, vice president and general manager of Server Solutions at Dell, sat down with The Next Platform on a recent visit to New York to talk about the issues the company’s system architects were trying to address during the Skylake generation and how various networking and memory components will be integrated to create new kinds of servers.

“Skylake is fascinating,” Gorakhpurwalla says. “We have an acronym here at Dell called NUDD, which is short for New Unique Different Difficult. If we are talking about new technologies, we identify it as a NUDD and we pay more attention to it and we have different processes by which we look at these things. We have a lot of NUDDs coming with PowerEdge 14G in the Skylake timeframe. For instance, fabrics and non-volatile memory, and both are ecosystem plays so you have to think about everything: software drivers, stacks, ISVs, a pretty wide swath of validation to make sure you have it right. There are a lot of things on both sides of the wire and they have to all work well.”

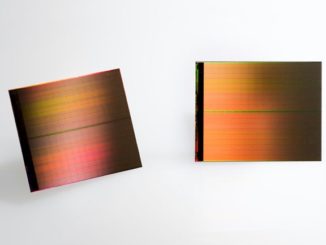

While Intel says that companies are buying higher up the SKU stack in the Xeon product line, thus driving up average selling prices of its processors and its overall revenue faster than the overall growth in server shipments, we still see a lot of companies buying in the middle of the SKU stack where the price/performance is better. (The systems at Facebook, which are fairly modestly configured Haswell Xeon machines these days, are a good example.) So we wonder just how much demand there will be for a top-bin, 28-core Skylake chip when it appears sometime in 2017. We do think there will be demand for 3D XPoint memory sticks and integrated Omni-Path fabric adapters on the Xeon chips, as well as a desire to put scads of 3D XPoint memory on SSD form factors linked into the processing complex using the NVM-Express protocol over the PCI-Express peripheral bus. And even Intel is billing the future “Purley” platform as the biggest architectural change since the Nehalem platform came out in the teeth of the Great Recession. (The combination of the recession, Nehalem, and a bug in the “Barcelona” Opterons pretty much ended AMD’s assault on the datacenter.)

We brought all of this up to Gorakhpurwalla. “I am with you there, and we could probably draw a curve,” he says. “Frequency bought us quite a bit a few years ago, and we could make an argument with customers based on their ability to recompile and take advantage of frequency increases that they would have a TCO that would work out for them, however long it was – twelve months or eight months – because they would get it back in performance or efficiency. When you hit a certain frequency barrier, we saw the core counts increase, and that was the way you got the next wave of performance and TCO. Now you have to find the next curve, and you start to look at how do you get data in and out of the machine and you can either do it through I/O or fabrics if you need to communicate with another node or you can do it within the node with a memory hierarchy change. So I think these are the two potential big changes.”

Server makers like Dell are constantly thinking about how to architect their systems, both the off-the-shelf ones and the ones they tailor specifically for hyperscale, cloud, and sometimes HPC customers, but the designs are perhaps not locked down as far in advance as many of us might expect.

“We have known on paper for quite a while, and we already have machines booting and running applications,” Gorakhpurwalla says. “For us, it will still take quite a while before we will punch out a ticker tape of TCO analysis. We are still mostly in the development and validate phase.”

As is always the case, the hardware is way out in front of the systems and application software, but if we want to have progress, somebody has to ship something to start the process. To illustrate the point, Gorakhpurwalla brought up ARM servers.

“People always ask me when Dell is going to start shipping ARM servers. We have been, but we have been building them for people who need to develop software and build and validate drivers, stacks, and applications but not for production and not with very widespread usage at all. I think of Skylake in the same way. You have to have a vehicle by which you can develop, and a lot of our early Skylake prototypes will go to partners to develop.”

The hardware tends to outstrip the capabilities of the software at first, and Gorakhpurwalla used the SAP HANA in-memory database as an example, and talked about the architectural differences that a Skylake server will require compared to today’s Xeons.

“In a HANA machine, you could have a four-socket Xeon E7 server that could address up to 12 TB, but the software says you need another node if you are going to go above 3 TB. Why? Because that is how it was architected. What will happen when we can put 100 TB or 128 TB in a four-socket box? What if some of it never goes away if I turn off the machine? Today, we have to architect the system so that metadata is stored in such a way that it cannot be lost and scratch data is put on media where it can be. What happens when you have a machine where 90 percent of the memory is persistent, but 10 percent is DRAM that isn’t? We spend a lot of time thinking about this in storage, with coherency across nodes – clustering, caching, however you want to think about it – and this is all based on the fundamental principle that something is going to crash and we better have copies. But now, we will have memory capacity that is persistent, but we may have to wait five minutes for the system to reboot.”

Another thing that will be different with Skylake will not just be the capacity of the machines, but people’s comfort level with that capacity and using it to its fullest. Gorakhpurwalla explains:

“If we go back five years ago, people would tell us that they were good at packing maybe ten or twelve virtual machines on a server. It can take many, many more, but this was the level they were comfortable with. It is the all eggs in one basket problem, it was new and they were not sure. Live migration was not great back then, so that was a limiting factor. They couldn’t manage all of these VMs well, and in some cases, they didn’t know where they were. But now, all of the tools have caught up, and it may not have taken seven or eight years for that catch up to happen, but only five. I think that period gets quicker every time. Now people are thinking about containerized workloads, and they are more comfortable, and they are thinking about putting a hundred containers on a machine because they are lightweight and they can pack them in there. It is going to be dense, and it is going to be great. So you have to think about what you can do when you have a tremendous amount of compute and storage within a node and you can talk very quickly with the node next to you.”

In the end, we are sort of coming full circle to a mainframe, albeit a distributed one based on X86 technology, and Gorakhpurwalla said as much with a laugh. “I think this is going to finally give us an opportunity to click over from hype to reality in the ability to carve up machines into resources and apply them by application. You will have large memory very close to processors, and that memory will look like storage when you want it to, and you will have tremendous bandwidth with very low latency. The latency penalty in clusters could go away, and rack-scale architectures can come to fruition.”

For Dell, that means further commercialization of the G5 rack-scale systems it builds for hyperscalers and which it has just tweaked to create the DSS 9000 systems for telcos, carriers, and smaller cloud builders.

“When you get to Skylake technologies,” says Gorakhpurwalla, “we think that this architecture can get down into the enterprise level because you have the resources, the latency, and the memory to carve this thing into whatever you want it to be.”
