There are two sides to every server network connection coming out of the rack, and staunch competitors are vying to own as much of the circuitry real estate that drives those connections as they can possibly attain. The trick to differentiation for those who make server network adapters is to provide something above and beyond a standard Ethernet interface running at 10 Gb/sec, and as we have been profiling here in The Next Platform, there is plenty of innovation going on in adapters.

Three years ago, QLogic sold off its InfiniBand networking business to Intel, where it has evolved into the Omni-Path line of adapters and switches aimed at high performance computing. But QLogic continues to sell converged switches that support Fibre Channel and Ethernet protocols as well as server adapters that support one or the other – and sometimes both – protocols. And thus, QLogic has a tidy niche of its own that it has carved out.

Now, QLogic is aiming at the hyperscalers and those who want to emulate them with a new line of server adapters, code-named “Big Bear” and sold under the QL4500 brand, that support the 25G Ethernet protocol that hyperscalers Google and Microsoft initiated back in the summer of 2014 because they were unhappy with the way the industry was planning to string out 40 Gb/sec fabrics and build 100 Gb/sec products. The 25G ramp will start in earnest this year, and as we have said from the beginning, the compelling mix of high bandwidth, low power, and what we presume will be low cost will make 25G products, which support 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec bandwidths, appealing to more than the hyperscalers.

This is particularly true when it comes to storage, a business that QLogic knows plenty about because it is the dominant supplier of Fibre Channel adapters, with over 17 million ports shipped. QLogic has a growing converged Ethernet business, too, with over 5 million ports in the field, which makes it second only to Mellanox Technologies in this space. QLogic has around 70 OEM and ODM partners who buy its raw chips or resell finished adapters or switches. The company generated $473 million in revenue in its trailing twelve months, has $299 million in cash and no debt, and is eager to expand its presence in the datacenter.

The new QL4500 adapters are part of that plan, and as you might expect, there is a strong storage angle to its strategy as well as a desire to feed the adapter needs of hyperscalers that are doing their 25G ramps.

“The storage space is really starting to need the speed,” Jesse Lyles, general manager of QLogic’s Ethernet business unit, explains to The Next Platform. “Especially when you are talking about flash arrays, 10G is just not fast enough, and a lot of customers are looking for 25G, 50G, and even 100G capabilities since the bottleneck of writing to spinning media is no longer there and they do not want the NIC to be the new bottleneck. They are embracing these higher-speed adapters at a faster rate than normal.”

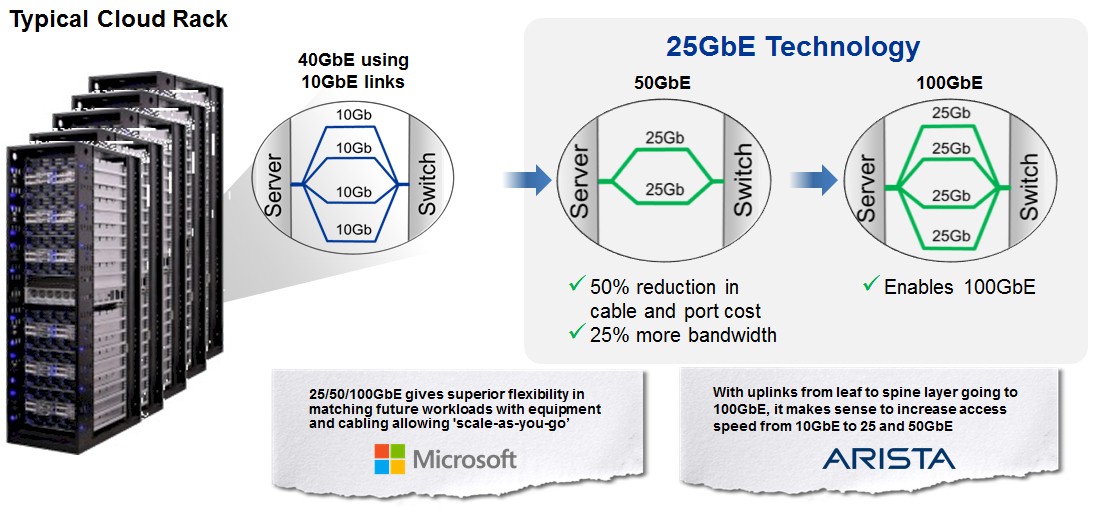

Public cloud builders are also looking to boost the bandwidth and also cut down on the link aggregation coming out of their servers – it is better and cheaper to have a single low-power adapter that has two 25 Gb/sec ports than to have four 10 Gb/sec ports on multiple adapters, which is why Google and Microsoft were making such a fuss to begin with and why the rest of the industry will benefit. The cost per bit and power consumption per bit of 25G products is lower.

End of story.

And while Amazon Web Services and Microsoft Azure have been coming off their servers using 40 Gb/sec pipes for several years now in their public clouds (and Google and Facebook have been doing the same thing for the infrastructure behind their public-facing applications), the adapters only come from one source – Mellanox – and these companies want competition here and have fostered it with the 25G standard. In fact, the word on the street we hear is that many of these public cloud providers are looking to quadruple pump their servers and have 100 Gb/sec pipes in their proof of concept machines right now.

The idea is not that these cloud providers will necessarily buy a 100 Gb/sec adapter card, by the way, although in some cases they will and virtualize the links as Mellanox is doing with its ConnectX-4LX adapters for the Facebook “Yosemite” microserver. It is just as likely, says Lyles, that they will have two ports running at 25 Gb/sec coming off the server and plug aggregated links into a 50 Gb/sec top of rack switch port, cutting the cabling requirements in half compared to the current way of linking four 10 Gb/sec ports that hyperscalers and cloud builders use today and boosting the bandwidth by 25 percent. Those looking to jump from 40 Gb/sec to 100 Gb/sec – particularly for storage heavy workloads – can aggregate four ports and use 100 Gb/sec switches. The strategies that any company employs will be a reflection of the particular workloads, but you can bet that they will want to standardize as much as possible and that argues for 25 Gb/sec server ports that are aggregated because this leaves the hyperscalers and the cloud builders with more options.
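The cabling and bandwidth comparison above is simple arithmetic, and a minimal sketch makes it easy to check. The helper name `link_summary` is hypothetical, and the sketch assumes one cable per server port, which the article implies but does not state.

```python
# Compare four aggregated 10 Gb/sec ports against two aggregated 25 Gb/sec ports.
# Assumption for illustration: each server port needs exactly one cable.

def link_summary(ports: int, gbps_per_port: int) -> dict:
    """Return the cable count and aggregate bandwidth for a set of identical ports."""
    return {"cables": ports, "gbps": ports * gbps_per_port}

current = link_summary(ports=4, gbps_per_port=10)    # today's 4 x 10 Gb/sec setup
proposed = link_summary(ports=2, gbps_per_port=25)   # 2 x 25 Gb/sec into a 50 Gb/sec switch port

cable_ratio = proposed["cables"] / current["cables"]     # fraction of cables still needed
bandwidth_gain = proposed["gbps"] / current["gbps"] - 1  # fractional bandwidth increase

print(f"Cables needed: {cable_ratio:.0%} of the current count")
print(f"Bandwidth change: {bandwidth_gain:+.0%}")
```

Under those assumptions, the two-port 25G configuration uses half the cables and delivers 50 Gb/sec against 40 Gb/sec, which is where the 25 percent figure comes from.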

The HPC market could take notice of this and follow along, and we think those large enterprises building big clusters for running distributed workloads like risk management or data analytics are not just paying attention, but are clamoring for parts so they can run some tests. The telco and service provider market, which is going through a massive refactoring of its infrastructure as it shifts from closed, specialized network appliances such as switches, firewalls, load balancers, and such to processor-based systems that virtualize these network functions and aggregate them on servers, is very keen on 25G products as well.

The thing to remember is that the switch and the adapter do not have to change at the same time, and decoupling is very, very important because of the different upgrade cycles for servers and switches in every datacenter. A server may last three or four years at a hyperscaler, cloud builder, or telco, but switches can be in the field for seven to ten years, depending on the technology. So you have to be able to change either side of the equation and not have stranded ports or bandwidth.

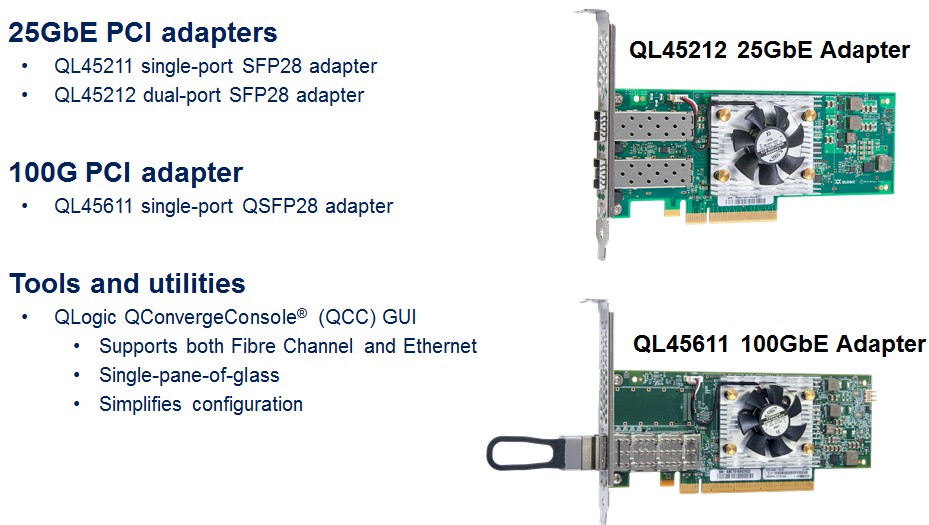

QLogic is announcing three adapters supporting the 25G protocols that will ship by the end of March and has another one in the works for later this year that will be very interesting depending on how it is priced. As the chart above shows, there are two 25 Gb/sec adapters, one with a single SFP28 port and one with two SFP28 ports; these can use copper cabling already installed. There is also a third card coming out now that has a single QSFP28 port running at 100 Gb/sec and that will require fiber optic cabling. The fourth card will sport four 25 Gb/sec SFP28 ports and deliver an aggregate of 100 Gb/sec obviously.

But here is the interesting bit. Lyles says that the single-port QL45211 card will cost around $300 with the dual-port QL45212 card costing around $350. So clearly, that second port is very attractively priced. But if you want 100 Gb/sec coming off the server today using 25 Gb/sec ports, you have to buy two of the dual-port cards and burn two slots, and it will cost you around $700. The future quad-port 25 Gb/sec card (presumably to be called the QL45214) will cost somewhere between $400 and $450, so the cost for 100 Gb/sec of aggregate capacity will be considerably lower. And given that the QL45611 adapter card that has a single 100 Gb/sec QSFP28 port and requires fiber optic cables will cost around $600, the cheapest way to 100 Gb/sec is a four-port card using copper cables.
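To make the pricing comparison concrete, here is a rough sketch that computes the cost per Gb/sec and the cost of reaching 100 Gb/sec for each card. The prices are the approximate figures quoted above. The $425 figure for the future quad-port card is simply the midpoint of the quoted $400 to $450 range and is an assumption for illustration, as are the `Adapter` class and `cost_for_bandwidth` helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adapter:
    name: str
    ports: int
    gbps_per_port: int
    price_usd: float  # approximate price quoted in the article

    @property
    def total_gbps(self) -> int:
        return self.ports * self.gbps_per_port

    @property
    def usd_per_gbps(self) -> float:
        return self.price_usd / self.total_gbps


ADAPTERS = [
    Adapter("single-port 25G", 1, 25, 300.0),
    Adapter("dual-port 25G", 2, 25, 350.0),
    # Assumed midpoint of the $400-$450 range given for the future card.
    Adapter("quad-port 25G (future)", 4, 25, 425.0),
    Adapter("single-port 100G", 1, 100, 600.0),
]


def cost_for_bandwidth(adapter: Adapter, target_gbps: int = 100) -> tuple:
    """Return (cards needed, total cost) to reach target_gbps of aggregate bandwidth."""
    cards = -(-target_gbps // adapter.total_gbps)  # ceiling division
    return cards, cards * adapter.price_usd


if __name__ == "__main__":
    for a in ADAPTERS:
        cards, cost = cost_for_bandwidth(a)
        print(f"{a.name:24s} ${a.usd_per_gbps:5.2f} per Gb/sec, "
              f"{cards} card(s) for 100 Gb/sec at ${cost:.0f}")
```

With these inputs, the dual-port route to 100 Gb/sec comes to two cards and about $700, while the assumed quad-port price works out lower than the roughly $600 single-port 100 Gb/sec card, matching the conclusion in the text. The result shifts with the actual quad-port price, which has not been announced.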

Funny how that worked out to be precisely what the hyperscalers and cloud builders need inside the racks. And funny how if you are not a hyperscaler and have more modest bandwidth needs, your cost per bit goes up, but the hyperscalers buy in lots of 50,000 to 75,000 adapters so this is to be expected. We think enterprises will go for the two-port QL45212 card and hyperscalers and cloud builders will go for the four-port QL45214 card, linking out to 25 Gb/sec and 50 Gb/sec switches, and the true 100 Gb/sec card will only be used in very special circumstances when the switches are running at 100 Gb/sec.

As is the case with other server adapter cards, the Big Bear NICs from QLogic have lots of functions embedded in them to offload work from servers and their operating systems. (Broadcom’s adapters have embedded offloading for network overlays used in virtualized infrastructure clouds, and Netronome offloads a large portion of the processing of the Open vSwitch virtual switch from servers to the NIC.)

The most important offloading that the QLogic adapters are doing is for Remote Direct Memory Access (RDMA), which allows two servers (or storage servers or a mix of the two) to rapidly access data in each other’s main memory over the network without incurring the overhead of the operating system. Unlike other server adapter cards, Lyles says that the Big Bear adapters support both RDMA over Converged Ethernet, or RoCE, as well as Internet Wide Area RDMA Protocol, or iWARP. The latter borrows RDMA ideas from the InfiniBand protocol and encapsulates RDMA traffic at a high level in the Ethernet stack, uses TCP/IP drivers running on servers to transmit data from machine to machine, and also employs TCP/IP’s error-correction methods. RoCE is arguably a cleaner and leaner implementation, but the industry still has applications that require iWARP so this is useful.

For cloud builders, the NICs also offload some of the processing of network overlays and specifically can handle Virtual Extensible Local Area Network (VXLAN, promoted by VMware), Network Virtualization using Generic Routing Encapsulation (NVGRE, promoted by Microsoft), Generic Routing Encapsulation (GRE), and Generic Network Virtualization Encapsulation (GENEVE) protocols. The cards have multi-queue and NIC partitioning (NPAR) and support Single Root I/O Virtualization (SR-IOV) as well, all of which are ways of carving up the bandwidth for different applications or virtual machines on a server.
