It is hard to believe but it is still early days for cloudy infrastructure. While a very large percentage of server capacity that can be virtualized has been over the past two decades, a lot of that capacity has not been equipped with additional tools to automate the management of that infrastructure and orchestrate the movement of workloads around server clusters. This is what makes a cloud a cloud.

There are many ways to build a public or private cloud, but for most enterprises, it is going to come down to three possibilities, or some hodgepodge hybrid such as Platform9 or VMware Integrated OpenStack. Companies that are by and large Windows Server and Hyper-V shops will naturally move towards Microsoft’s Azure Stack once it is released later this year, and they will probably increasingly use the Azure public cloud. Companies that have invested heavily in VMware’s ESXi and vSphere infrastructure are ever so slowly adopting its vCloud/vRealize suite of tools, which turn virtual servers into clouds. And finally, those who are mostly Linux shops will look to OpenStack and probably the KVM hypervisor to compose their clouds.

In a sense, and oversimplifying a bit, OpenStack is an automation framework for Linux that just happens to allow Windows instances to run on KVM, just like the Azure cloud and Azure Stack are similar extensions to Windows Server that just so happen to also support Linux instances on Hyper-V. There have been a dozen releases of OpenStack since the project launched in July 2010, on a six-month cadence of April and October launches that, interestingly enough, has also regularized the pattern for Linux operating system launches.

Quite a number of OpenStack distributors have come and gone. Nebula, which was founded by the NASA team that initially created the Nova cloud controller at NASA, tried to create a hardware appliance running OpenStack but ended up running out of steam with most of the employees and intellectual property being picked up by Oracle. Piston Cloud and Metacloud, two other distros, were snapped up by Cisco Systems, Cloudscaling was eaten by EMC, and of course Red Hat, SUSE Linux, and Canonical all have their own OpenStack platforms mashed up with their respective Linuxes.

To get a feel for what is going on out there in the clouds, The Next Platform sat down with Kamesh Pemmaraju, vice president of product marketing at Mirantis, one of the few remaining free-standing support providers for OpenStack, to talk about what is happening with the cloud tool that was initially the byproduct of a collaboration between NASA and Rackspace Hosting when the open source cloud controller project was launched in July 2010 and that is now backed by more than 500 companies.

Mirantis was founded in 1999 by Boris Renski and Alex Freedland, during the beginning of the commercial Linux revolution when distributed infrastructure was just getting rolling in enterprises and more than a decade before OpenStack was conceived. (We talked to Renski at length last summer when discussing the future of cluster controllers with various people from the Kubernetes, OpenStack, and Mesos camps.) Mirantis backed OpenStack from the get-go, and raised $20 million in Series A funding in 2013 from Intel Capital, Dell Ventures, Red Hat, SAP Ventures, Ericsson, and West Summit Capital. Most of these investors joined Insight Venture Partners in a $100 million Series B round in October 2014 that Mirantis took down, and last August Intel Capital led another $100 million Series C round that brought in August Capital and Goldman Sachs.

With such backing, Mirantis has grown to almost 800 employees and closed out 2015 with 127 commercial customers using its OpenStack support services, with close to 100 of them being added in 2015 alone. Pemmaraju reckons that there are maybe 400 customers doing OpenStack at reasonable scale in production, and that in the whole world there might be 1,500 to 2,000 OpenStack clusters. But the prospects for growth are good, based on the growth in that installed base and the fact that Mirantis grew its software subscription business by 4X last year. That is one reason it is paying a couple hundred engineers to work on the OpenStack code, including its own Fuel deployment tool. But the primary focus that Mirantis has is on services for OpenStack, be they training, assessment, implementation, or technical support services.

“All of these other companies have either been acquired or disappeared,” Pemmaraju tells The Next Platform. “And we know why they failed. All of the appliance companies failed because they did an appliance before the market was ready for it, and taking a completely product-oriented approach with something like OpenStack, which is still emerging, is a fool’s errand. The way we think you succeed is to provide training and services to the market and take the risk out of using an open source project that is kind of half-baked and make it work for companies.”

Who Is Stacking, And How High?

Mirantis separates OpenStack adopters into three camps. The first are service providers, ranging from cloud builders to SaaS providers that are building large scale infrastructure to host their customer-facing applications. (Hyperscalers have their own cloud controllers, and these predate OpenStack and are very tightly tied to their own software and hardware, such as Google’s Borg, Microsoft’s Autopilot, or Facebook’s Kobold.) Then come the telecommunications companies and other service providers, and these companies are more focused on using OpenStack to host network functions that are being pulled off of myriad proprietary hardware appliances (load balancers, firewalls, and stranger stuff), virtualized, and hosted on cloudy infrastructure.

The third bucket has traditional enterprises like big banks, manufacturers, retailers, and so on who are building private clouds. As was the case with server virtualization when it took off on the X86 platform fifteen years ago with VMware, the first use case here among enterprises as well as for some telcos is for an automated development and test system for application developers. It took too long to provision n-tier clusters to test applications back in the early 2000s, and server virtualization fixed a lot of that; now, it takes too much effort to orchestrate the virtual capacity for many different streams of applications, and this needs to be automated with cloud software. (Luckily for humanity, we will need application developers for a while yet. Maybe.) In this case, the scale of the OpenStack platform is really determined by the size and ferocity of the teams of software developers that make use of the private cloud. Aside from the NFV use case, the other big one driving OpenStack adoption is distributed analytical software, such as Hadoop and Spark, and specifically wanting to manage and deploy multiple applications on top of a cluster.

The companies on the highest end of the scale in the OpenStack community are the names we are all familiar with, says Pemmaraju, such as eBay, PayPal, Symantec, Wal-Mart, and they tend to have multiple thousands of server nodes. “Not all of these nodes are necessarily in one datacenter or under one OpenStack control plane, but the scale is thousands of nodes when you add it all up.”

Aside from Rackspace, which probably operates the largest OpenStack cloud on the planet, the biggies include CERN, which reportedly has more than 10,000 nodes in its OpenStack infrastructure, and a number of companies in China are on the same order of magnitude. Pemmaraju knows of a few customers with 5,000 nodes in production, and adds that “these are not trivial.” Although, as we are fond of pointing out, the original design goals set out by Chris Kemp, formerly of NASA and founder of the Nebula appliance company, were for OpenStack to span 1 million servers and 60 million virtual machines in one control domain.

Those real OpenStack clouds, as opposed to the theoretical ones, are not, as Pemmaraju pointed out above, always all in one datacenter and all wired up with top of rack and end of row switches into one giant OpenStack cluster. Amazon Web Services, Microsoft Azure, and Google Compute Engine, which do have over 1 million servers each in their infrastructure, spread those machines across multiple regions and datacenters, and so do OpenStack shops, at least the ones that Mirantis sees.

The OpenStack infrastructure is particularly spread out among the telcos and service providers using the cloud controller as a substrate for NFV, says Pemmaraju. These companies have points of presence, or POP, centers, which have small co-location spaces and which might have a few racks of gear with maybe 50 to 100 nodes running OpenStack. The idea is to get certain network functions close to the customers that are using the network. These feed into regional centers, which might have 400 to 500 nodes of OpenStack gear running NFV software, and these in turn feed into larger network operating centers, or NOCs, that generally have no more than 1,000 nodes, but sometimes have more if a telco or service provider consolidates to fewer datacenters at the hub of its networks.

“But if you look at the aggregate, there are tons of POPs,” Pemmaraju explains. “And if you look at AT&T or Deutsche Telekom or Verizon, they have POPs in every major area they cover with fiber, cable, or telephone wire. So they may have hundreds of these POPs, depending on the country and locality. The regional offices tend to be in the dozens, and these companies tend to have fewer than ten or fifteen central offices.”

Add it all up, and these service providers are deploying thousands to tens of thousands of OpenStack nodes.
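To see how the tiers add up, here is a rough back-of-the-envelope sketch. The nodes per site come from the ranges Pemmaraju cites, but the site counts are our own illustrative picks from within "hundreds," "dozens," and "fewer than ten or fifteen," not figures from any particular carrier, and the `Tier` class is just a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    """One layer of a telco footprint (hypothetical helper for illustration)."""
    name: str
    sites: int            # assumed number of sites in this tier
    nodes_per_site: int   # OpenStack nodes per site, from the ranges quoted above

    def nodes(self) -> int:
        return self.sites * self.nodes_per_site


def total_nodes(tiers: list[Tier]) -> int:
    """Sum the OpenStack nodes across every tier."""
    return sum(t.nodes() for t in tiers)


# Assumed low-end footprint: site counts are illustrative, not reported data.
smaller_telco = [
    Tier("POP", sites=100, nodes_per_site=50),
    Tier("regional center", sites=12, nodes_per_site=400),
    Tier("central office / NOC", sites=5, nodes_per_site=1000),
]

# Assumed high-end footprint: again illustrative.
larger_telco = [
    Tier("POP", sites=300, nodes_per_site=100),
    Tier("regional center", sites=36, nodes_per_site=500),
    Tier("central office / NOC", sites=15, nodes_per_site=1000),
]

print(f"smaller footprint: {total_nodes(smaller_telco):,} nodes")  # 14,800
print(f"larger footprint:  {total_nodes(larger_telco):,} nodes")   # 63,000
```

Both scenarios assume OpenStack is running at every site. A carrier that has only rolled it out to a fraction of its POPs and regional centers would land in the thousands rather than the tens of thousands.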

For the enterprise use cases, data analytics applications like Hadoop are generally deployed on OpenStack on bare metal iron, although Mirantis can deploy them on virtualization hypervisors if customers want to do it. As we have pointed out before, most companies have hundreds of Hadoop nodes, with only a relative handful reaching up into the 1,000 or 2,000 range.

The other enterprise use case for OpenStack is for development and test infrastructure for developers. Even the largest organizations might only need an OpenStack cluster for this purpose that has maybe 200 or 300 nodes, says Pemmaraju. The biggest organizations have hundreds to maybe a few thousand developers, so the demand for virtual infrastructure to spin up applications for testing can only get so high.

“These clusters tend to be fairly departmental,” Pemmaraju adds. “I have not seen a large enterprise like a big bank go out and build a big OpenStack cluster in one datacenter that supports all of the developers in the world for that organization. Within a large enterprise, maybe one engineering department or an R&D center with a couple hundred developers or researchers needs only a few thousand VMs. Mind you, every department will have a cluster.”

The thing is, 200 or 300 nodes is not small, not when you consider that you can get 20 cores or more in a box and as much as 512 GB or even 1 TB of memory into each node for a reasonable price, and support 25 to 50 VMs on each node. That is anywhere from 5,000 to 15,000 VMs at that scale.
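The arithmetic behind that range is simple enough to check. This is a minimal sketch using only the node counts and VM densities quoted above; the function name is ours.

```python
def vm_capacity(nodes: int, vms_per_node: int) -> int:
    """Total VMs a cluster can host at a given VM density per node."""
    return nodes * vms_per_node


# Low end: 200 nodes at 25 VMs each. High end: 300 nodes at 50 VMs each.
low = vm_capacity(nodes=200, vms_per_node=25)
high = vm_capacity(nodes=300, vms_per_node=50)

print(f"{low:,} to {high:,} VMs")  # 5,000 to 15,000 VMs
```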

Mirantis charges anywhere from $700 to $3,000 per node per year for supported versions of its eponymous OpenStack stack, which is completely open source. (The price depends on the features.) Pemmaraju reckons that about 30 percent of the users of the Mirantis stack are paying for support, with the other 70 percent supporting themselves. (That is a very high ratio of paid to unpaid for an open source project, and second perhaps only to Red Hat Enterprise Linux, which runs somewhere around 50 percent or so. Most open source projects are lucky to convert 5 percent from free to pay.)

Up until now, the large-scale OpenStack deployers have taken a do-it-yourself approach, as did the telcos and service providers initially. But it looks like they are doing the math on what it takes to keep OpenStack up, current, and humming and many are looking for some help from a distro. “Operating a cloud is not for the faint hearted, and running anything at scale is a major challenge. It takes a lot of skills, tools, and monitoring, and it can get pretty complicated.”

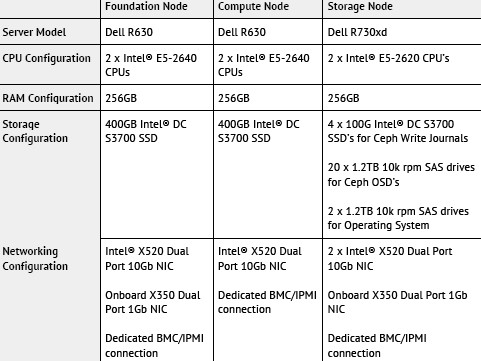

To help customers speed up OpenStack deployments, Mirantis is now offering what it calls the Unlocked Appliance, which is based on Dell PowerEdge R630 servers for compute and OpenStack controllers, Dell PowerEdge R730xd storage servers for Ceph object storage, and Juniper QFX5100 and EX3300 switches. A fully configured rack with 24 compute nodes and six storage nodes can do about 768 VMs, with an oversubscription ratio of 4 to 1 on the virtual CPUs (vCPUs) versus hyperthreads in the compute nodes. (Each VM has four vCPUs, 8 GB of virtual memory, and 60 GB of storage.) Including hardware, software, and support, the Unlocked Appliance will cost in the range of $250,000.
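Working backwards from those figures gives a feel for what each compute node has to carry. This is a rough sketch: the per-node thread and memory numbers are derived from the quoted specs rather than stated by Mirantis, the price is the approximate $250,000 figure, and the storage total ignores any Ceph replication overhead.

```python
# Figures quoted for a fully configured Unlocked Appliance rack.
COMPUTE_NODES = 24
TOTAL_VMS = 768
VCPUS_PER_VM = 4
OVERSUBSCRIPTION = 4        # vCPUs per hyperthread (4 to 1)
MEMORY_PER_VM_GB = 8
STORAGE_PER_VM_GB = 60
APPROX_PRICE_USD = 250_000  # "in the range of", so treat as approximate

# Derived values (our inference, not published specs).
vms_per_node = TOTAL_VMS // COMPUTE_NODES                  # 32 VMs per node
vcpus_per_node = vms_per_node * VCPUS_PER_VM               # 128 vCPUs per node
threads_per_node = vcpus_per_node // OVERSUBSCRIPTION      # 32 hyperthreads per node
memory_per_node_gb = vms_per_node * MEMORY_PER_VM_GB       # 256 GB per node
total_storage_tb = TOTAL_VMS * STORAGE_PER_VM_GB / 1000    # 46.08 TB before replication
cost_per_vm_usd = APPROX_PRICE_USD / TOTAL_VMS             # roughly $325 per VM

print(f"{vms_per_node} VMs, {vcpus_per_node} vCPUs, "
      f"{threads_per_node} hyperthreads, {memory_per_node_gb} GB per node")
print(f"{total_storage_tb:.2f} TB of VM storage, ${cost_per_vm_usd:,.0f} per VM")
```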

The question is, can organizations build and deploy and support OpenStack for less than that? In many cases, the answer will be no, if you fully burden the costs.
