We have been talking so much about the impending, and now delivered, 100 Gb/sec speeds for InfiniBand used by HPC centers and for Ethernet used by hyperscalers that it is sometimes hard to remember that the enterprise is a significant laggard when it comes to network bandwidth. For a lot of companies, 10 Gb/sec Ethernet was sufficient for a long time, and for some, it will continue to be for many years. But other enterprises are bumping up against the bandwidth ceiling.

For them, the next logical move is either to embrace 40 Gb/sec Ethernet now or to wait for the market around the 25G Ethernet standard, fomented by the hyperscalers, to develop, with products becoming available starting later this year.

It is a tough call, and many think that only the hyperscalers will want the 25G products. We happen to think otherwise, provided that the pricing and packaging of the 25G products are right. What datacenter building cloudy infrastructure would not rather have 25 Gb/sec ports running from the servers up to the top of rack switch, and either 50 Gb/sec or 100 Gb/sec uplinks from that switch to the network core? The hyperscalers argued, and we think intelligently, that 40 Gb/sec and 100 Gb/sec technologies based on 10 Gb/sec signaling lanes ran too hot and cost too much compared to those using 25 Gb/sec lanes, as embodied in the 25G standard that they compelled the IEEE to adopt after it initially rejected the idea. Broadcom, Cavium, and Mellanox Technologies have all announced their respective Tomahawk, XPliant CNX, and Spectrum ASICs, and all three have been sampling to early customers (both switch makers and hyperscalers) for more than a year. (Mellanox has just said that it is shipping Spectrum chips and switches to partners and customers. Cisco last year shipped its Nexus 3232C switch, which has 100 Gb/sec ports, but we do not know if Cisco is using its own 25G silicon or ASICs that employ 10 Gb/sec signaling lanes to build those ports.)
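
The lane argument comes down to simple division. Here is a minimal sketch, assuming each lane carries a nominal 10 Gb/sec or 25 Gb/sec of payload and ignoring encoding overhead; the function and constant names are our own illustration, not anything from the IEEE or the chip makers:

```python
from math import ceil

# (port speed, lane rate) pairs discussed above, both in nominal Gb/sec.
CONFIGS = [(40, 10), (100, 10), (25, 25), (50, 25), (100, 25)]


def lanes_needed(port_gbps: int, lane_gbps: int) -> int:
    """Number of serial lanes needed to carry port_gbps at lane_gbps per lane."""
    return ceil(port_gbps / lane_gbps)


for port, lane in CONFIGS:
    print(f"{port:>3} Gb/sec port on {lane} Gb/sec lanes: {lanes_needed(port, lane)} lane(s)")
```

Going from ten lanes to four for a 100 Gb/sec port, and getting 2.5X the bandwidth out of a single-lane server port, is the heart of the hyperscaler case: fewer SerDes, optics, and cable pairs per port. Treating lane count as a stand-in for power and cost is our simplification.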

Cisco likes to hedge its bets, and as the dominant supplier of top of rack switches in the enterprise datacenter, the company continues to bang the 40 Gb/sec drum and squeeze more economic and technical life out of its existing ASICs. With good reason: network switches are among the most long-lived items in the enterprise datacenter. As we explained in covering the Ethernet roadmap last year, the Gigabit Ethernet standard came out in 1998, so this technology will have been in the market for over 20 years by the time it dies. The 10 Gb/sec Ethernet standard came out in 2002, and the industry tried to jump straight to 100 Gb/sec in 2010, but that did not work because the switches were way too expensive except for core aggregation at the largest service providers. That is how we ended up with the stop-gap 40 Gb/sec Ethernet option, which was offered alongside 100 Gb/sec.

Cisco is not the only one hedging. Broadcom is doing much the same with its Trident-II+ ASICs for 10 Gb/sec switches, which launched last April, support native VXLAN, and have other features aimed at getting enterprises to move off vintage Gigabit Ethernet gear.

Speaking to The Next Platform at that launch, Rochan Sankar, director of product management for Broadcom’s Infrastructure and Networking Group, said that over 60 percent of the servers running in the datacenters of the world were still using Gigabit Ethernet ports (like the one you have on your desktop or laptop PC, if you still use one of those). By the end of 2018, though, Broadcom expects Gigabit Ethernet to shrink to only a few percent and 10 Gb/sec ports to be installed on about 63 percent of machines. While 40 Gb/sec ports are used on only a small percentage of enterprise servers today, by 2018 it looks like 25 Gb/sec, 40 Gb/sec, and 50 Gb/sec ports will each account for a little more than 10 points of share on the server.
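
A quick bounds check, using only the figures quoted above, shows the 2018 forecast hangs together. This sketch treats "a little more than 10 points" as a floor of exactly 10 points per speed; that floor is our assumption, and the helper name is hypothetical:

```python
# Figures as reported above for Broadcom's 2018 server port forecast, in percent.
TEN_GBE_SHARE = 63.0                  # "about 63 percent" on 10 Gb/sec
FASTER_SPEEDS = ["25G", "40G", "50G"]
MIN_SHARE_EACH = 10.0                 # assumed floor for "a little more than 10 points"


def max_remaining_share(ten_gbe: float, min_each: float, n_speeds: int) -> float:
    """Upper bound on share left for Gigabit Ethernet, 100 Gb/sec, and anything else."""
    return 100.0 - ten_gbe - min_each * n_speeds


leftover = max_remaining_share(TEN_GBE_SHARE, MIN_SHARE_EACH, len(FASTER_SPEEDS))
print(f"At most {leftover:.0f} points remain for Gigabit Ethernet and everything else")
```

Because each faster speed is slightly above 10 points, the true remainder is a bit under 7 points, which squares with Gigabit Ethernet falling to "only a few percent."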

Forecasts by network equipment watcher Crehan Research bear this out as well. Last summer, Crehan estimated that the top of rack Ethernet market would generate around $4 billion in datacenter revenues across enterprises, cloud builders, hyperscalers, and those HPC centers that use Ethernet, and that about $2.9 billion of that would be for 10 Gb/sec switching. (Gigabit Ethernet was not part of the forecast.) The remaining $1.1 billion was for 40 Gb/sec switches, with a microscopic slice for 100 Gb/sec solutions, which have been around for years but have been very expensive for both switching and cabling and therefore out of reach except for the most pressing bandwidth needs. Crehan is projecting that 100 Gb/sec will take off this year, and that 40 Gb/sec and 10 Gb/sec will continue to grow alongside 100 Gb/sec switching through 2017. The company expects 40 Gb/sec to peak in 2017 and 10 Gb/sec to peak in 2018. By 2019, the top of rack Ethernet switch market will grow to $7.4 billion, according to Crehan, with 10 Gb/sec switches still driving $3.4 billion of that and 40 Gb/sec switches driving $1.2 billion. By that time, 25 Gb/sec switches will drive around $720 million, 50 Gb/sec switches will push about half that, and 100 Gb/sec gear will drive $1.7 billion. This implies very long 10 Gb/sec and 40 Gb/sec upgrade cycles at enterprises, and that switch makers can offer those products at prices that undercut products based on the 25G standard. Ethernet adapter revenue follows a similar pattern, with a heavier weighting toward 10 Gb/sec speeds, and adapter revenues will rise from $1.1 billion in 2015 to $1.7 billion in 2019.
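
The 2019 segments can be added up to check them against the $7.4 billion total and to see how dominant 10 Gb/sec remains. This is a small sketch over the numbers quoted above; it assumes "about half" of the 25 Gb/sec figure means exactly $360 million, and the growth-rate helper is our own:

```python
# Crehan's 2019 top of rack switch forecast as reported above, in billions of dollars.
TOR_2019 = {
    "10 Gb/sec": 3.4,
    "40 Gb/sec": 1.2,
    "25 Gb/sec": 0.72,
    "50 Gb/sec": 0.36,  # assumed: exactly half of the 25 Gb/sec figure
    "100 Gb/sec": 1.7,
}

total = sum(TOR_2019.values())
print(f"Sum of segments: ${total:.2f} billion (forecast total: $7.4 billion)")
for speed, revenue in TOR_2019.items():
    print(f"{speed:>10}: {revenue / total:6.1%} of the 2019 total")


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# Adapter revenue: $1.1 billion in 2015 to $1.7 billion in 2019, four years of growth.
print(f"Adapter revenue CAGR: {cagr(1.1, 1.7, 4):.1%}")
```

The segments sum to about $7.38 billion, consistent with the rounded total, and 10 Gb/sec still accounts for roughly 46 percent of it. Adapter revenue works out to roughly 11.5 percent compound annual growth.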

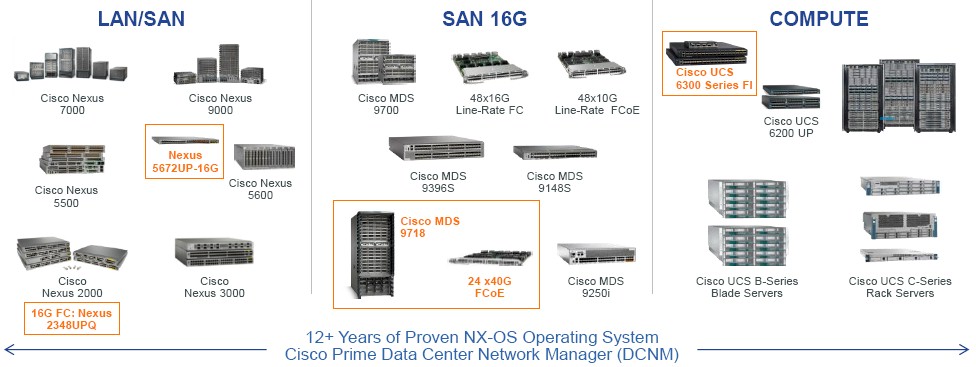

The consensus seems to be that 40 Gb/sec server adapters and switches have legs for a few more years, which is why Cisco is rolling out more support for this bandwidth level in various Nexus switches and in its Multilayer Director Switch (MDS) for storage area networks.

As far as we are concerned, the most interesting part of the latest Cisco rollout is the support for 40 Gb/sec networking on the fabric interconnect switches that are at the heart of the Unified Computing System (UCS) blade servers. Cisco launched the UCS iron back in March 2009 with 10 Gb/sec switching that converged network and storage traffic onto the same Ethernet fabric. (Fibre Channel over Ethernet, or FCoE, was just starting to take off back then.)

“There is a subset of our customers who have been asking us for 40 Gb/sec connectivity because they are driving higher virtual machine densities, or they are using hyperconverged storage, which drives more demand for bandwidth,” Adarsh Viswanathan, senior manager of datacenter products at Cisco, tells The Next Platform. “It will be a slow transition, but this is the beginning of it and we will see this transition play out over the next three to four years. I think that 10 Gb/sec will be a dominant technology for some time, but as 40 Gb/sec reaches 50 percent of shipment rates, that’s when I expect to see 10 Gb/sec to start declining. There are emerging markets, such as those in Asia, that will be at 10 Gb/sec for quite some time, even after the US market migrates up.”

With the updated Fabric Interconnect 6332 announced today for the UCS iron and the related I/O Module 2304 that goes into the UCS chassis, Cisco is giving customers 32 QSFP+ ports running at 40 Gb/sec on the fabric interconnect, allowing for end-to-end connectivity at 40 Gb/sec speeds. Viswanathan says that the resulting setup will provide around 2.6X the throughput at one-third the latency of the 10 Gb/sec fabric and modules in the original UCS gear. By the way, Cisco is not religious about FCoE these days, and there is a variant of the UCS fabric switch that provides 24 Ethernet ports running at 40 Gb/sec plus 16 universal ports that can be configured either as 10 Gb/sec Ethernet ports or as 16 Gb/sec Fibre Channel ports linking out to SANs.
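
For a sense of scale, here is the nominal aggregate port bandwidth of the two fabric interconnect configurations described above. It is a sketch that counts one direction only and ignores encoding and protocol overhead; the variable names are hypothetical labels, not Cisco model numbers for the variant:

```python
def aggregate_gbps(port_groups):
    """Sum of port count x nominal per-port speed (Gb/sec), one direction."""
    return sum(count * speed for count, speed in port_groups)


fi_6332 = aggregate_gbps([(32, 40)])                # 32 QSFP+ ports at 40 Gb/sec
variant_eth = aggregate_gbps([(24, 40), (16, 10)])  # universal ports as 10 Gb/sec Ethernet
variant_fc = aggregate_gbps([(24, 40), (16, 16)])   # universal ports as 16 Gb/sec Fibre Channel
print(fi_6332, variant_eth, variant_fc)  # 1280 1120 1216
```

The all-Ethernet box tops out at 1.28 Tb/sec; trading eight 40 Gb/sec ports for 16 universal ports gives up some raw bandwidth in exchange for native Fibre Channel connectivity.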

As for 25G products, where customers will have 25 Gb/sec ports on the servers and switches and 50 Gb/sec or 100 Gb/sec uplinks on the top of rack switch, Viswanathan says that this is something that “all of the megascale datacenter customers want [to] install” and that Cisco will have “announcements for this somewhere down the road.” If we had to guess, and we do, we would say that Nexus switches will get this 25G functionality first, followed by UCS gear further down the road. That said, if Cisco wanted to give its hyperscale-targeted UCS M Series machines an extra push, it might offer 25G switching along with them.

The Legacy SAN Of The Past Platform

While the focus of this publication is on the next wave of processing, storage, and networking innovation from which companies will build the next platforms to support their applications, we are not deaf to the innovation that is going on in the enterprise base. For many companies, block storage consolidated into a storage area network and linked to systems by Fibre Channel (whether native or over Ethernet) is going to be in place for a long, long time. These systems will not change very rapidly, any more than mainframes do. But they still need bandwidth increases, and that is why Cisco is upping the speed on its MDS SAN switches.

With the MDS 9718 multilayer director switch, Cisco is putting out a monster modular switch that can have as many as 768 line-rate Fibre Channel ports running at 16 Gb/sec, and that will be able to run at 32 Gb/sec when that speed becomes available, with a line card change and a software tweak. As with Ethernet, the cycle of bandwidth upgrades for Fibre Channel is very long. Viswanathan says that host bus adapter makers Emulex and QLogic should get 32 Gb/sec adapters into the field this year, with Fibre Channel switches following in the next six to twelve months or so, and disk array makers such as NetApp, EMC, IBM, Hitachi, and others supporting 32 Gb/sec protocols in the second half of 2017. Many of these vendors resell the Cisco MDS switches as part of their product lines.

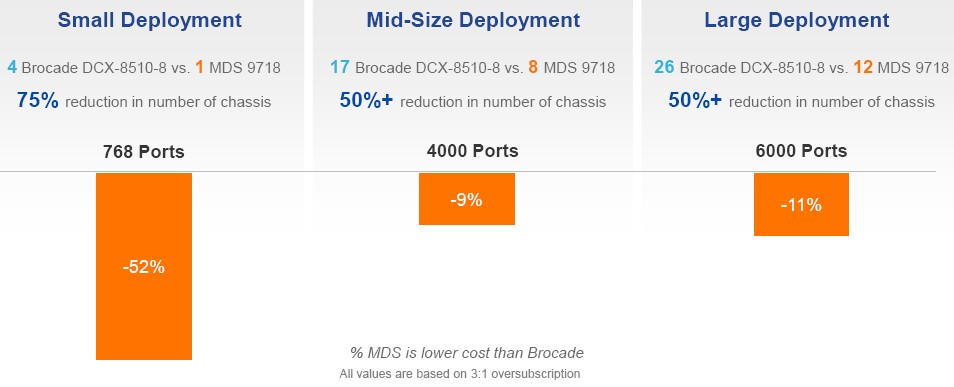

The sales pitch for the MDS 9718 is that customers can invest now, consolidate existing Fibre Channel switches onto bigger iron, and save money on operational expenses while laying the foundation for 32 Gb/sec connectivity to SANs further down the road. A 16 Gb/sec port on the MDS 9718 costs in the neighborhood of $1,000 to $1,200, and consolidating onto the MDS 9718 can save customers anywhere from 52 percent on a 768 port implementation with one enclosure to around 11 percent on a large setup with 6,000 ports. (These comparisons were made against Brocade’s DCX-8510-8 Fibre Channel switches.)
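
The per-port price translates into a rough bill for a fully populated chassis. This sketch covers only the quoted port price range and the 768 port maximum; it assumes nothing about chassis, optics, licensing, or support costs, which are not included, and the helper name is ours:

```python
from typing import Tuple

PORTS = 768                       # maximum line-rate ports in one MDS 9718
PORT_SPEED_GBPS = 16
PRICE_RANGE_USD = (1_000, 1_200)  # quoted per-port price range


def port_costs(price_per_port: int) -> Tuple[int, float]:
    """Return (port cost for a full chassis, dollars per nominal Gb/sec of port speed)."""
    return PORTS * price_per_port, price_per_port / PORT_SPEED_GBPS


for price in PRICE_RANGE_USD:
    total, per_gbps = port_costs(price)
    print(f"${price:,}/port: ${total:,} for {PORTS} ports, ${per_gbps:.2f} per Gb/sec")
```

That puts the ports alone at roughly $768,000 to $921,600, or $62.50 to $75 per Gb/sec of nominal port bandwidth at 16 Gb/sec.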

For flash arrays with heavy bandwidth needs, Cisco is also stressing that the box has enough ports to eliminate oversubscription on the network and therefore relieve contention for data access between servers and the SAN.
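
Oversubscription is the ratio of host-facing bandwidth to the uplink bandwidth behind it. The sketch below uses a hypothetical edge switch with made-up port counts, not an MDS configuration, to show what "getting rid of" it means:

```python
def oversubscription(host_ports: int, host_gbps: float,
                     uplink_ports: int, uplink_gbps: float) -> float:
    """Host-facing bandwidth divided by uplink bandwidth; 1.0 means no oversubscription."""
    return (host_ports * host_gbps) / (uplink_ports * uplink_gbps)


# Hypothetical edge switch: 48 host ports and 8 uplinks, all at 16 Gb/sec.
print(oversubscription(48, 16, 8, 16))   # 6.0: six hosts share each uplink's worth
# Same hosts with enough uplink ports to match them one-for-one.
print(oversubscription(48, 16, 48, 16))  # 1.0
```

At 6:1, hosts contend for the uplinks whenever more than a sixth of them push data at line rate at the same time; at 1:1, every host can run flat out, which is what fast flash arrays can actually exploit.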

As part of the upgrade of the MDS line, Cisco is also rolling out a 24 port interconnect module for the SAN switches that is based on 40 Gb/sec Ethernet. This inter-switch link (ISL) module connects the host edge switches to the dedicated storage core switches, and provides 194 percent more bandwidth than an ISL running at 16 Gb/sec using the Fibre Channel protocol. It will also be 47 percent faster than future 32 Gb/sec Fibre Channel links.
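
Cisco's percentages make sense only once you compare effective data rates rather than nameplate speeds. The sketch below reproduces them under our own assumptions: 16 Gb/sec Fibre Channel signals at 14.025 GBd with 64b/66b encoding (about 13.6 Gb/sec of data), 32 Gb/sec Fibre Channel delivers exactly twice that, and 40 Gb/sec Ethernet delivers a full 40 Gb/sec. We do not know that this is how Cisco did the math:

```python
# Effective data rates in Gb/sec. These are assumptions, not Cisco figures.
FC16_EFFECTIVE = 14.025 * 64 / 66    # 16G Fibre Channel line rate with 64b/66b encoding
FC32_EFFECTIVE = 2 * FC16_EFFECTIVE  # assume 32G delivers twice the 16G throughput
ETH40_EFFECTIVE = 40.0               # 40 Gb/sec Ethernet payload rate


def percent_more(a: float, b: float) -> float:
    """How much more bandwidth a offers than b, in percent."""
    return (a / b - 1) * 100


print(f"40G Ethernet vs 16G FC: {percent_more(ETH40_EFFECTIVE, FC16_EFFECTIVE):.0f}% more")
print(f"40G Ethernet vs 32G FC: {percent_more(ETH40_EFFECTIVE, FC32_EFFECTIVE):.0f}% more")
```

Under these assumptions the figures come out to 194 percent and 47 percent, matching the claims above. Comparing nameplate speeds instead (40 versus 16 and 32) would give 150 percent and 25 percent.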

Finally, Cisco is adding 16 Gb/sec Fibre Channel ports to its Nexus 2348UPQ fabric extenders and Nexus 5672UP switches. The idea is to offer LAN and SAN convergence in the same switching infrastructure, much as the UCS machines did starting six years ago, to save companies money.
