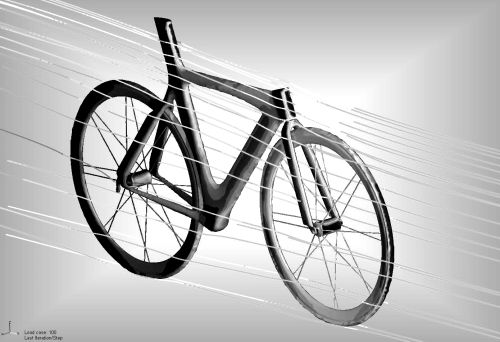

When it comes to high performance cycling, one might not think of high performance computing immediately, even though much of the work behind a bike's speed, aerodynamics, and design depends heavily on modeling and simulation at scale.

One of the world’s leading manufacturers of such bicycles is Wisconsin-based Trek, whose engineers regularly run large numbers of computational fluid dynamics (CFD) simulations to design faster, more aerodynamic bikes. In addition to CFD codes, the company relies on off-the-shelf computer-aided design (CAD) software to develop new models. At the helm of much of this effort is Mio Suzuki, Trek’s lead analysis engineer, who not only performs CFD analysis but is also responsible for bringing a new way of thinking about running such models on high performance computing resources.

Interestingly, Suzuki’s graduate research, which focused on 3D physics simulations, was also directed at finding ways to run complex simulations remotely. That was around 2008, however, when the options for cloud providers were slim, and even where they existed, ease of use, sleek interfaces, pricing, licensing, security, and general business models were still developing.

When Suzuki joined Trek in 2010, she says, it was clear the teams were suffering because they did not have the right resources internally. For a company of only 2,000 people with little desire to hire HPC cluster administrators or manage infrastructure, the emerging cloud and HPC on demand providers presented an interesting opportunity. Besides, most of the company’s CFD jobs ran on only 96 to 256 cores, with little need (or code scalability) to go beyond that, which further undercut the case for an on-site cluster and all the hassles that come with even a relatively small, ten-node HPC setup.

Trek understood well what it meant to manage its own infrastructure. Five years ago, the R&D teams were running their CFD codes on a rackmount server with old CPUs. Using HPC on demand providers, however, was a game changer, both in giving the team access to newer hardware and in letting it avoid long wait times. Trek uses STAR-CCM+ from CD-adapco for its CFD simulations, and early in Trek’s cloud journey, the software maker advised Suzuki to look to an HPC on demand provider such as R Systems. Trek used R Systems for a number of years and still does, although Suzuki says her team finds a better balance between price and interface with another HPC on demand provider, Rescale.

Among the other codes the teams use are CAD packages from SolidWorks, which often run on local machines inside Trek. Suzuki says there would be a great advantage to managing the entire workflow on a cloud platform, but licensing, and the fact that SolidWorks is tied to Windows while the rest of the workloads run on remote Linux clusters, present some challenges. The key, however, is to containerize the workflow and move it onto remote hardware. Under Suzuki’s guidance, Trek is currently moving toward just such a solution, using a distinctive approach that combines Docker containers, Univa middleware, and clusters provided by UberCloud. UberCloud was initially one of the consulting groups Suzuki worked with when choosing a cloud platform provider, but it has since branched out to offer its own HPC on demand services. These services handle modeling- and simulation-specific needs on the back end while addressing the performance, licensing, usability, and other concerns that can keep some companies off the public cloud.

Containers represent a “game changer” for workflows like Suzuki’s at Trek. Engineering and R&D teams at the company have little desire to oversee infrastructure; they simply want to run their jobs and forget about the rest. The Trek team is 80% of the way through its final testing of UberCloud’s container approach, which Suzuki expects the company to adopt for greater efficiency.

“The selling point of containers is, in my opinion, the fact that I don’t have to change my ‘routines’ to set up a simulation and solve it. Using the container is as if I’m logging onto just another local network machine via remote desktop,” Suzuki says. “This is great, especially for engineers like me with short project turnaround times restricting our ability to spend time to modify working patterns or investigate in the new setup even when the ‘new way of doing’ may become beneficial in the long run.”

As Wolfgang Gentzsch, president of UberCloud, notes, “Application containers bring a simplicity to complicated performance and engineering environments like never before. We are building powerful engineering and scientific software application containers as a professional service and in partnership with leading software providers. UberCloud and Univa technologies complement each other, with Univa focusing on orchestrating jobs and containers on any computing resource infrastructure.”

With Univa Grid Engine Container Edition, an enterprise workload management and orchestration package, at their core, UberCloud containers offer a bundle of ready-to-run software that includes STAR-CCM+ and a wide range of other modeling and simulation codes, providing better code portability, repeatability (users get one package that runs anywhere), and quick deployment. This lets Suzuki’s team switch among numerous infrastructure providers, including Rescale, R Systems, the Ohio Supercomputer Center, and now UberCloud’s forthcoming infrastructure.

While Trek could certainly build its own workflow on Amazon Web Services or another cloud provider’s infrastructure, Suzuki says her group’s focus is on goal-oriented research, development, and analysis, not on maintaining infrastructure, even off site. Further, with an infrastructure provider that makes porting and executing CFD code easier and is tuned for HPC hardware, Trek’s models can run faster and with less internal overhead. Currently, Suzuki says, the teams run 5 to 10 models daily, although this naturally shifts with demand. These jobs generally finish in about an hour, which allows a faster development cycle overall, with far less complexity than on-site hardware involves. On-site hardware is also hard to justify on cost for jobs that run regularly but not at high core counts, using software that ISVs have worked to make available under flexible licensing packages.

Suzuki says that moving from the original on-site environment to the current model, using on-demand resources and software that is quickly portable and easy to deploy, will speed Trek’s development times. It also lets her teams get back to analysis without having to become hardware experts on the side. The price and performance are more attractive for a mid-sized company like Trek, whose value lies in designing and engineering better than its competition, and tools that let the teams manage such workflows via containers aid overall productivity.
