The first big supercomputing deal of the new year has been unveiled. Early next year, the National Center for Atmospheric Research in the United States is going to be replacing its current 1.5 petaflops “Yellowstone” massively parallel Xeon system with a kicker based on future Xeon chips from Intel that will weigh in at an estimated 5.34 petaflops and offer the weather and climate modeling research organization lots more oomph to run its simulations.

The forthcoming system, nicknamed “Cheyenne,” will be housed in the same NCAR-Wyoming Supercomputing Center where Yellowstone was installed in 2012; the center is located in Cheyenne, Wyoming, hence the name of the system. The two petaflops-class machines will run side by side for a while until the newer one is fully operational.

We will get into the feeds and speeds of Cheyenne and how it compares to Yellowstone in a minute, but one interesting thing about the future NCAR system is that SGI partnered with DataDirect Networks to win the deal to build Cheyenne, beating out a number of other contenders.

“We had incumbency in a number of weather centers, but with NCAR, we have not sold to them in a number of years,” Gabriel Broner, general manager of the high performance computing business unit at SGI, tells The Next Platform. Broner took the helm of SGI’s HPC business last year, returning to the company after stints at Ericsson and Microsoft; he had previously run SGI’s storage business, and before that he was an operating system architect at Cray Research.

“We are clearly excited about the deal with NCAR, and one of the things that we have been doing even before I became general manager of the HPC unit is a renewed focus on weather and climate. On our team we have added a vertical leader and we have added application experts in the weather and climate space. NCAR is an important customer in that they are a research institution that is going to develop new codes that other organizations are going to use. Their focus is on multi-year climate research, but the fact that NCAR is going to be developing new algorithms to, for example, better predict hurricanes or to do what they call streamflow to predict how water is going to flow over the landscape and be captured in reservoirs in a year-long timeframe, is going to help agriculture and cities plan for weather over the next year.”

To chase similar big deals, SGI has created what it calls the Strategic Program Office, which leverages the experience of selling large systems to try to win new deals, a recognition that at certain scales and for certain customers, lessons learned from one deal can be applied to others and help SGI close more big deals.

“We have done better than our plan for large systems for the year, so we are happy with that,” says Broner.

Driving Hardware And Software

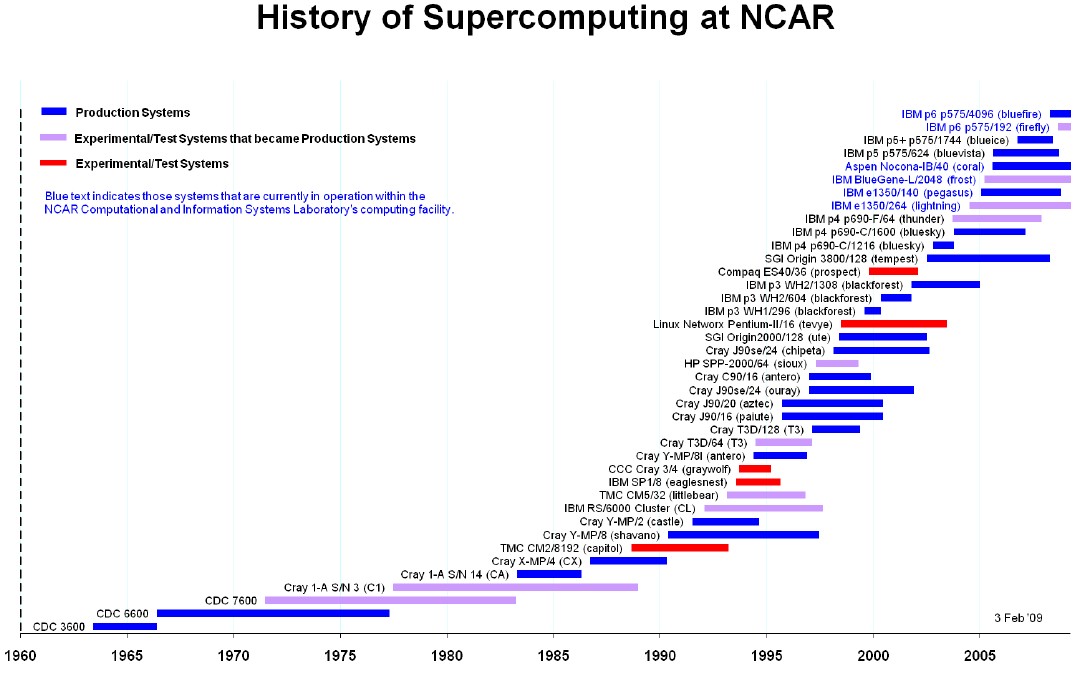

Decades ago, when supercomputing was new, NCAR was a big Cray shop, and it dabbled a bit with Thinking Machines and IBM iron in the 1980s and 1990s, with an occasional machine from Hewlett-Packard/Compaq, Linux Networx, or SGI in the mix. According to the historical chart of NCAR’s production and experimental systems, the last SGI machine used by NCAR was an Origin 3800 machine called “Tempest” that saw duty in the early to middle 2000s.

Until the installation of Yellowstone in 2012, NCAR relied heavily on various Power-based machines from IBM, with its biggest being the “Bluefire” system that was installed in April 2008 and that saw duty until January 2013, after Yellowstone had been up and running for a while. The Bluefire machine was based on a cluster of water-cooled Power 575 servers from IBM, with a total of 4,096 processors and delivering about 77 teraflops of aggregate peak computing. With Yellowstone, NCAR shifted away from the Power architecture, but stayed with the InfiniBand interconnect to lash nodes together. (Most organizations do not like to change too many aspects of their hardware and systems software all at the same time, and supercomputing centers are no different in this regard.)

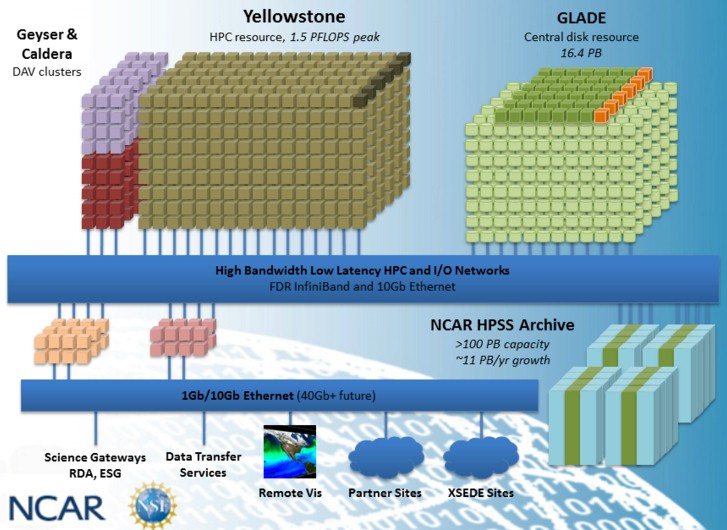

The Yellowstone machine was built by IBM and was based on Big Blue’s iDataPlex hyperscale-class machines, which also saw some duty in the HPC arena. Yellowstone had a total of 4,536 two-socket server nodes that used Intel’s eight-core “Sandy Bridge” Xeon E5-2600 processors, giving it a total of 72,576 cores with which to run simulations. The Yellowstone machine used a 56 Gb/sec InfiniBand network to link the nodes to each other and also to the “Glade” storage cluster, which runs IBM’s General Parallel File System (GPFS) on storage servers with a total capacity of 16.4 PB. A separate 10 Gb/sec Ethernet network provides alternate links into the Glade storage.

Yellowstone had about 20 times the aggregate number-crunching performance of Bluefire, and the Cheyenne system that will eventually replace Yellowstone is expected to deliver 5.34 petaflops of performance across its 4,032 nodes.

Anke Kamrath, director of the Operations and Services Division at NCAR, tells The Next Platform that the Cheyenne machine will be based on a future Intel Xeon E5 processor – which we presume is one of the “Broadwell” Xeon E5 v4 motors expected in early 2016 – and confirms that it will have 18 cores per socket. That is a big jump in core count per socket from Yellowstone to Cheyenne, and together with the improvements in vector math units over the Ivy Bridge, Haswell, and Broadwell generations, these two factors, more than any others, account for the big jump in performance from a smaller number of nodes.
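Theoretical peak is simply nodes times sockets times cores times clock speed times double-precision floating point operations per cycle, which is why core counts and vector units dominate the comparison. The sketch below is our own back-of-the-envelope check, not NCAR’s method: the 8 and 16 FLOPs per cycle reflect the AVX units in Sandy Bridge and the dual FMA AVX2 units in Haswell and Broadwell, but the 2.6 GHz and 2.3 GHz clock speeds are assumptions we picked because they land on the quoted peaks; NCAR has not named the Cheyenne SKU.

```python
# Theoretical peak = nodes x sockets x cores/socket x clock (Hz) x DP FLOPs/cycle.
# ASSUMPTION: the clock speeds below are guesses; the article names neither SKU.

def peak_flops(nodes, sockets, cores_per_socket, clock_ghz, flops_per_cycle):
    """Return theoretical double-precision peak in FLOP/s."""
    return nodes * sockets * cores_per_socket * clock_ghz * 1e9 * flops_per_cycle

# Yellowstone: 4,536 two-socket nodes, 8-core Sandy Bridge.
# Sandy Bridge AVX: 4-wide add + 4-wide multiply = 8 DP FLOPs/cycle/core.
yellowstone = peak_flops(4536, 2, 8, clock_ghz=2.6, flops_per_cycle=8)

# Cheyenne: 4,032 two-socket nodes, 18-core Xeon E5.
# Haswell/Broadwell AVX2: two 4-wide FMA units = 16 DP FLOPs/cycle/core.
cheyenne = peak_flops(4032, 2, 18, clock_ghz=2.3, flops_per_cycle=16)

# Peak per node, Cheyenne relative to Yellowstone.
node_gain = (cheyenne / 4032) / (yellowstone / 4536)

print(f"Yellowstone: {yellowstone / 1e15:.2f} PF")
print(f"Cheyenne:    {cheyenne / 1e15:.2f} PF")
print(f"Per-node gain: {node_gain:.1f}x")
```

Under these assumptions each Cheyenne node delivers about four times the peak of a Yellowstone node, which is how 4,032 nodes can outrun 4,536.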

With myriad core counts, cache sizes, clock speeds, and thermal ratings, it is interesting to see how HPC shops, hyperscalers, enterprises, and cloud builders choose their SKUs. How do HPC shops decide what chip to use?

“We can’t say which SKU it is yet, but it has to do with overall science throughput and it comes down to price and performance,” explains Kamrath. “The thread speed is definitely important to us because some of our scientists really need the fastest threads possible, but others just want as many cores as possible. We do a lot of data gathering on our current Yellowstone system so we can do some projections so we are not overbuying too much disk, or bandwidth, or memory and try to hit the sweet spot with the investments we make.”

If you do the math, Cheyenne will have 145,152 cores, exactly twice the 72,576 cores that Yellowstone could deploy on workloads. The performance improvement on real-world weather and climate modeling applications is expected to be larger than that doubling in core count, although smaller than the jump in peak performance. Cheyenne, at 5.34 petaflops, has about 3.6 times the peak performance of Yellowstone, at 1.5 petaflops. “We are projecting that our workloads will support 2.5X probably,” says Kamrath. “Just because you make the processors a little faster does not mean you can take advantage of all of the features.”
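The ratios in this paragraph can be laid side by side. This is a sketch using only figures from the article; the per-core numbers simply divide the whole-machine ratios by the doubling in cores.

```python
# Whole-machine comparison of Cheyenne and Yellowstone, figures from the article.
yellowstone_cores = 4536 * 2 * 8    # nodes x sockets x cores per socket
cheyenne_cores = 4032 * 2 * 18

core_ratio = cheyenne_cores / yellowstone_cores  # 2.0: twice the cores
peak_ratio = 5.34 / 1.5                          # about 3.56x the peak petaflops
projected_ratio = 2.5                            # NCAR's projected workload speedup

# Dividing by the core ratio isolates the gain per core.
peak_gain_per_core = peak_ratio / core_ratio             # about 1.78x
projected_gain_per_core = projected_ratio / core_ratio   # 1.25x

# Share of the peak speedup that real codes are expected to capture.
captured = projected_ratio / peak_ratio                  # about 0.70

print(f"cores: {cheyenne_cores:,} vs {yellowstone_cores:,} ({core_ratio:.1f}x)")
print(f"per-core gain: {peak_gain_per_core:.2f}x peak, {projected_gain_per_core:.2f}x projected")
print(f"projected speedup is {captured:.0%} of the peak speedup")
```

Put another way, NCAR expects each core to do about 25 percent more useful work, against a roughly 78 percent gain in theoretical per-core peak.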

The plan is for around 20 percent of the nodes to have 128 GB of main memory and the remaining 80 percent to have 64 GB, allowing different parts of the cluster to run applications with differing memory needs. The machine will have a total of 313 TB of memory, a little more than twice the aggregate main memory of Yellowstone, which stands to reason given that the core count also doubles.
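The 20/80 split and the 313 TB total can be reconciled with a little algebra. This sketch assumes decimal units (1 TB = 1,000 GB) and exactly two node memory sizes; it solves for the number of 128 GB nodes that the quoted total implies.

```python
# ASSUMPTIONS: decimal units and only two node types (64 GB and 128 GB).
NODES = 4032
SMALL_GB, LARGE_GB = 64, 128
TOTAL_GB = 313_000    # the quoted 313 TB

# TOTAL = NODES * SMALL + n_large * (LARGE - SMALL), solved for n_large.
n_large = (TOTAL_GB - NODES * SMALL_GB) / (LARGE_GB - SMALL_GB)
share = n_large / NODES
print(f"~{n_large:.0f} large-memory nodes ({share:.1%} of the machine)")

# Cross-check: an exact 20/80 split gives slightly less memory than 313 TB.
split_tb = (0.2 * NODES * LARGE_GB + 0.8 * NODES * SMALL_GB) / 1000
print(f"exact 20/80 split: {split_tb:.1f} TB")
```

The result, about 21 percent, squares with the “around 20 percent” figure; an exact 20/80 split would come in closer to 310 TB.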

A system upgrade is not built into the Cheyenne deal, but the 9D enhanced hypercube topology that SGI and partner Mellanox Technologies have created for SGI’s ICE XA system allows for an easy upgrade if NCAR just wants to expand Cheyenne at some point. (The hypercube topology allows nodes to be added to or removed from the cluster without shutting the cluster down or rewiring the network.)
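In a plain binary hypercube of dimension d, each vertex has a d-bit address and is wired to the d vertices whose addresses differ from it in exactly one bit, so the longest route between any two vertices is d hops. The sketch below models only that generic topology; SGI’s “enhanced” variant adds links we do not attempt to reproduce, and how ICE XA maps compute nodes and switches onto the vertices is not described in this article.

```python
# Generic binary hypercube only; SGI's "enhanced" extra links are NOT modeled.

def neighbors(vertex: int, dims: int) -> list[int]:
    """Vertices directly linked to `vertex`: flip each of the `dims` address bits."""
    return [vertex ^ (1 << bit) for bit in range(dims)]

def hops(a: int, b: int) -> int:
    """Minimal hop count between two vertices = number of differing address bits."""
    return bin(a ^ b).count("1")

DIMS = 9
print(2 ** DIMS)                # vertices in a fully populated 9D hypercube
print(neighbors(0, DIMS))       # each vertex has exactly DIMS direct links
print(hops(0, 2 ** DIMS - 1))   # worst-case distance equals DIMS
```

The appeal for large clusters is that link count per vertex grows only with the logarithm of the vertex count, while the worst-case hop count stays small.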

“Things change so much, who knows about the future,” concedes Kamrath. “We could use Knights Landing, there are ARM processors coming out. I expect for our next procurement there will be a much higher diversity of things because there is more competition coming, which is a good thing.”

The ICE XA design crams 144 nodes and 288 sockets into a single rack, and Broner says Cheyenne will have 28 racks in total. The ICE XA machines, which debuted in November 2014 and have had a couple of big wins to date, offer a water-cooled variant, and NCAR will be making use of it to increase the efficiency of the overall system. In fact, NCAR expects that Cheyenne will be able to do more than 3 gigaflops per watt, which is more than three times as energy efficient as the Yellowstone machine it replaces. Other ICE XA customers include the Institute for Solid State Physics at the University of Tokyo, which fired up a 2.65 petaflops system back in July 2015, and the United Kingdom’s Atomic Weapons Establishment, which in March 2015 tapped a pair of ICE XA clusters of unrevealed capacity for simulating Trident nuclear missiles; those machines were installed last November.
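The rack count and the efficiency target can be checked against the other figures quoted for Cheyenne. This is a rough sketch: it treats the 3 gigaflops per watt figure as applying at the full 5.34 petaflops peak, which is our assumption, since efficiency ratings are often quoted against benchmark rather than peak performance.

```python
RACKS = 28
NODES_PER_RACK = 144
SOCKETS_PER_NODE = 2
PEAK_FLOPS = 5.34e15          # Cheyenne's quoted peak, FLOP/s
MIN_FLOPS_PER_WATT = 3e9      # "more than 3 gigaflops per watt"

nodes = RACKS * NODES_PER_RACK        # should match the 4,032 nodes quoted
sockets = nodes * SOCKETS_PER_NODE    # 288 sockets per rack across 28 racks

# ASSUMPTION: efficiency applies at peak. At no less than 3 GF/W, running at
# peak would draw no more than PEAK / 3 GF/W.
max_power_mw = PEAK_FLOPS / MIN_FLOPS_PER_WATT / 1e6

print(f"{nodes:,} nodes, {sockets:,} sockets")
print(f"power ceiling at peak: ~{max_power_mw:.2f} MW")
```

The node count matches the 4,032 quoted earlier, and, if the assumption holds, the efficiency target implies a draw of under roughly 1.8 megawatts at peak.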

More Complex Simulations Need More Oomph

For the moment, NCAR is looking for Cheyenne to help it run existing weather and climate modeling codes better and faster and to give it a platform on which to create new codes.

NCAR has a variety of workloads that it wants to run on Cheyenne, but one of the main benefits of more powerful hardware is that simulations can span greater timescales or work at finer resolutions across many different types of applications. In addition to the streamflow application cited by SGI, NCAR will be doing work to run ensembles of high resolution weather forecasts to predict severe weather such as intense thunderstorm clusters and the probability of hail or flooding. Another set of applications will create a 3D model of the sun and be used to predict wiggles in the eleven-year solar cycle that our Sun experiences, and also be used to predict how the intensity of solar radiation will affect the generation of electricity by photovoltaic cells hours to days in advance of actual conditions. Cheyenne will also be used to look at the flows of water, oil, and gas below the Earth’s surface and simulate air quality on a long timescale around the globe.

But most of the work that Cheyenne does will be related to climate and weather modeling. This includes decade-long models that predict everything from broader climatic changes down to drought or flooding in certain areas or changes in the Arctic sea ice over time.

Somewhere between 50 percent and 60 percent of the workloads run by NCAR today are for the climate modeling based on the Community Earth System Model, which is used to do long-term climate simulations, both reaching into the past and extending out into the future. This set of code is developed under the auspices of the National Science Foundation and the US Department of Energy. Another 20 percent or so of the workload running on NCAR systems today is the Weather Research and Forecasting Model, or WRF for short, which is a more short-term weather prediction system that dates back to the 1990s when NCAR, the National Oceanic and Atmospheric Administration, the Air Force Weather Agency, the Naval Research Laboratory, the Federal Aviation Administration, and the University of Oklahoma teamed up to create better weather forecasts.

Kamrath is hopeful that accelerators of one form or another will be used to speed up weather and climate codes, and says that there are variants of WRF that are accelerated by GPUs. “We have teams working on using GPUs and Xeon Phis and those kinds of things for both the climate and weather codes. It is just not ready for primetime. I am hoping in the future when the Knights Landing Xeon Phi comes out that might be an environment that is more conducive. Also there is future technology on the GPU side that will make them more usable. But it is still a question. The climate code is over 1.5 million lines of code and there are over 100,000 IF-THEN statements, and those kinds of things don’t do as well in GPU-type architectures. So we will have to see.”
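One reason branch-heavy code struggles on GPUs is divergence: threads are scheduled in groups (warps of 32 on Nvidia hardware) that execute in lockstep, so when threads in a group disagree on an IF-THEN, the group runs both paths with some threads masked off. The toy cost model below illustrates that effect only; it is a simplification of SIMT execution, not an analysis of NCAR’s codes, and the step costs are arbitrary.

```python
# Toy SIMT model: lanes in a warp run in lockstep, so for an if/else the
# hardware executes every path that at least one lane takes, masking the
# other lanes. Costs are abstract "steps"; real GPUs are more nuanced.

WARP = 32

def warp_cost(conditions, then_cost, else_cost):
    """Steps a warp spends on one if/else, given each lane's branch condition."""
    cost = 0
    if any(conditions):       # at least one lane takes the THEN path
        cost += then_cost
    if not all(conditions):   # at least one lane takes the ELSE path
        cost += else_cost
    return cost

uniform = [True] * WARP                          # all lanes agree
mixed = [lane % 2 == 0 for lane in range(WARP)]  # lanes split evenly

print(warp_cost(uniform, 10, 10))  # one path runs
print(warp_cost(mixed, 10, 10))    # both paths run, doubling the cost
```

Across a code with many data-dependent branches, these doubled-up paths can add up, which is one plausible reading of Kamrath’s concern.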

The software stack for Cheyenne includes SUSE Linux Enterprise Server as the operating system with SGI Management Center and Development Suite extensions. NCAR will be using the PBS Professional workload manager from Altair to schedule work on Cheyenne, and Unified Fabric Manager from Mellanox to manage the InfiniBand network. Application developers will be using Intel’s Parallel Studio XE compiler suite.

As part of the Cheyenne installation, NCAR will be significantly expanding its GPFS parallel file system. DDN is the primary vendor for this piece of the system, and will be supplying four of its “Wolfcreek” storage controllers, announced at SC15 last November, and a slew of disk enclosures to create a 20 PB extension to the Glade setup. This array will have 3,360 nearline SAS disk drives with 8 TB of capacity each, plus 48 mixed-use flash SSDs with 800 GB of capacity each for storing metadata across the controllers. This storage cluster will run Red Hat Enterprise Linux to host GPFS.
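The drive counts translate into raw capacity as follows. This sketch uses decimal units, and the 8 data plus 2 parity RAID 6 layout is our assumption about a common configuration for arrays of this class, not something NCAR or DDN disclosed; it is included only to show why 26.88 PB of raw disk becomes roughly 20 PB usable.

```python
DRIVES = 3_360
DRIVE_TB = 8
SSDS = 48
SSD_GB = 800
CONTROLLERS = 4
USABLE_PB = 20                     # quoted size of the Glade extension

raw_pb = DRIVES * DRIVE_TB / 1000           # 26.88 PB of raw disk
flash_tb = SSDS * SSD_GB / 1000             # 38.4 TB of metadata flash
drives_per_controller = DRIVES // CONTROLLERS  # if spread evenly (an assumption)
usable_fraction = USABLE_PB / raw_pb        # share of raw capacity that is usable

# ASSUMPTION: 8 data + 2 parity (RAID 6-style) groups cap usable space at 80%
# of raw, before hot spares, file-system overhead, and TB-vs-TiB reporting.
raid6_ceiling_pb = raw_pb * 8 / 10

print(f"raw {raw_pb:.2f} PB, flash {flash_tb:.1f} TB, {drives_per_controller} drives/controller")
print(f"usable {usable_fraction:.1%} of raw; 8+2 ceiling {raid6_ceiling_pb:.1f} PB")
```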

“The performance of the file system is hugely important to us,” Kamrath explains. “We found with the climate simulations, the file system is the bottleneck. You have to move the data through the simulation and do data analysis and visualization all from a high speed file system. So that is just crucial. We have really instrumented our environment with Yellowstone, so we really know what our codes are doing. When we did Yellowstone, it was more of a guess, but we are pretty confident in the numbers with Cheyenne.”

“Our current environment has 16 PB of capacity and about 90 GB/sec of throughput, and that balance we found to be actually pretty good,” continues Kamrath. “With the next file system, we are increasing it to 20 PB and it will have 200 GB/sec of bandwidth, and that environment is easily expandable to 40 PB with the addition of disk drives.” If NCAR needs to expand the bandwidth in a portion of the GPFS cluster, it can add more Wolfcreek controllers to the GPFS file system.
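One way to read “balance” is bandwidth per petabyte of capacity, or equivalently how long it would take to stream the entire file system once. The sketch below applies that yardstick to Kamrath’s figures; decimal units are assumed, and the 40 PB row assumes the expansion adds disks but no controllers, so bandwidth stays at 200 GB/sec.

```python
def balance(capacity_pb: float, bandwidth_gb_s: float) -> tuple[float, float]:
    """Return (GB/s per PB, hours to stream the full capacity once)."""
    per_pb = bandwidth_gb_s / capacity_pb
    sweep_hours = capacity_pb * 1e6 / bandwidth_gb_s / 3600   # 1 PB = 1e6 GB
    return per_pb, sweep_hours

file_systems = {
    "Glade today": (16, 90),
    "Cheyenne extension": (20, 200),
    "extension grown to 40 PB (disks only)": (40, 200),  # ASSUMPTION: no new controllers
}

for name, (pb, gb_s) in file_systems.items():
    per_pb, hours = balance(pb, gb_s)
    print(f"{name}: {per_pb:.1f} GB/s per PB, full sweep ~{hours:.0f} h")
```

On this yardstick the new file system roughly doubles bandwidth per petabyte, and growing it to 40 PB on disks alone would bring it back to about today’s ratio, which is presumably where adding Wolfcreek controllers comes in.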

The variant of the Wolfcreek storage arrays that Cheyenne is using is based on the SFA14KX platform, which keeps its metadata servers external to the arrays and comes bare-bones, with customers adding their choice of file system. (Sometime this year, DDN will create appliance versions of the Wolfcreek storage that embed the metadata servers and the file system, but big supercomputing centers tend to get the raw arrays and tweak and tune their own Lustre or GPFS installations.)

Molly Rector, chief marketing officer at DDN, says that the combination of SGI compute and DDN storage gives the partners a chance to move weather codes “to the next level” and then push the resulting improvements in scalability and performance out into the broader market.

As for the interconnect network, NCAR is moving up to 100 Gb/sec EDR InfiniBand from Mellanox with Cheyenne, but the research institution did look at all of the options out there in the field before sending out the bids and signing its contracts with SGI and DDN a few weeks ago. Both Intel’s Omni-Path and Cray’s “Aries” interconnects were under consideration, and there was no requirement that it be InfiniBand.

The cost of the Cheyenne system is not being divulged, but Kamrath confirmed to The Next Platform that it is on the same order of magnitude as the cost of the Yellowstone machine, which had an expected price tag of between $25 million and $35 million when that deal between IBM and NCAR was announced back in 2011. NCAR and its system suppliers are wary of giving out numbers because price comparisons are often apples and oranges in these big system deals, with various compute, storage, software, and maintenance costs sometimes being added in and sometimes not.

Because Lenovo bought the System x business from IBM and also licensed IBM’s Platform Computing cluster management and GPFS parallel file system software, it is natural to jump to the conclusion that the IBM sale opened the door to other supercomputer makers, given a desire to have the system come from an indigenous US supplier. But Kamrath said the HPC market is very competitive and that a half dozen companies bid on the Cheyenne procurement. (These included Lenovo as well as Cray, which has dominant market share in weather systems, as The Next Platform has previously reported.) She could not get into the competitive details.

SGI and DDN will be delivering initial machinery for Cheyenne in July, with acceptance expected in September or October as early users test the system. Full production on Cheyenne is expected sometime in January 2017.
