Bottlenecks are never removed from a system; they just shift around as you change one component or another. We live in an era where compute is nearly free, but moving data around so it can be used in computations – from cache and main memory out across cluster interconnects – is one of the big barriers to scaling up overall system performance.

Over the past several years, the interconnects used in high performance computing systems have evolved at a pretty rapid clip, and companies deploying systems for simulation, modeling, and now analytics workloads have a lot of choices – sometimes tough choices – to make when it comes to deploying a network with which they lash together their compute engines.

To get a handle on the state of HPC interconnects as we enter 2016 and what the future holds over the next several years, The Next Platform had a chat with Steve Scott, the chief technology officer at Cray. Scott was instrumental in the development of several generations of Cray interconnects, and in recent years spent some time at GPU accelerator maker Nvidia and search engine giant Google before returning to his CTO role at Cray in September 2014.

Timothy Prickett Morgan: We have been thinking a lot about interconnects lately, with the rollout of Ethernet, InfiniBand, and now Omni-Path networks running at 100 Gb/sec speeds. We have always believed that there is a place for specialized networks aimed at specific problems, particularly at scale, and the market seems to support them. Is this a pattern that you think will persist?

Steve Scott: Computer architecture in general is this great field in that the answers to the same questions keep changing as the underlying technology sandbox changes. My advisor in grad school, when talking about the qualifying exams for computer architecture, told us that every year we did not have to change the questions, just the answers. There is some truth to that. The right way to build a computer is completely dependent on the underlying technology. We have seen all of these changes in the processor landscape, mostly around energy efficiency – either through the memory hierarchy and the adoption of new memory technologies or different kinds of processors that are trying to get more efficient as Dennard scaling has ended.

In the network space, the optimal way of building networks has also changed over time due to technology. I think that we are getting pretty close to figuring out what the right way is to build an interconnect, and I actually don’t see that changing much over the next decade. Eventually it will change once we get past CMOS chip making and to completely different technologies, but given the basic technology landscape, I do not see interconnects changing all that much.

So here is what has happened. Over the past fifteen years or so, it has become optimal to build networks with higher radix switches – basically switches with many skinny ports rather than a few fat ports. The main reason is that signaling rates have gone up relative to the sizes of the packets we are sending.

If you went back fifteen years, to when we were doing the T3D and the T3E, you didn’t want to have super skinny ports because the serialization latency – the time to squeeze a packet over one of those skinny ports – would become really large compared to the latency of the header moving through the network. So you wanted to have wider ports because the signaling rate was not that high and it would take a long time to squeeze the packet over the port. As the signaling rates have gone up and up, the penalty to squeeze a packet over a narrow port has gone down, and that has let us build switches that have a higher radix – a much higher number of ports – and in so doing, you can now have a network topology that has a lower diameter, a smaller number of hops that you need to take through the network. With a high radix switch, you can now get from anywhere to anywhere else in the network with a small number of hops.
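The serialization argument can be made concrete with a little arithmetic. The sketch below assumes a hypothetical router chip with a fixed budget of 48 lanes, a hypothetical 64-byte packet, and two hypothetical per-lane signaling rates; none of these figures come from Cray. It splits the same lanes into either wide 12-lane ports or skinny 3-lane ports and reports how long one packet takes to squeeze through a single port.

```python
# Hypothetical figures chosen for illustration only; none come from Cray.
LANE_BUDGET = 48       # lanes available on one router chip
PACKET_BYTES = 64      # size of one packet

def serialization_ns(packet_bytes, lanes, gbps_per_lane):
    """Time to squeeze one packet through a port of `lanes` lanes.

    1 Gb/s moves 1 bit per nanosecond, so bits / (Gb/s) gives nanoseconds.
    """
    return packet_bytes * 8 / (lanes * gbps_per_lane)

for rate in (0.5, 25.0):              # slow vs fast per-lane signaling, Gb/s
    for lanes_per_port in (12, 3):    # wide ports vs skinny ports
        ports = LANE_BUDGET // lanes_per_port
        t = serialization_ns(PACKET_BYTES, lanes_per_port, rate)
        print(f"{rate:>5} Gb/s/lane: {ports:2d} ports x {lanes_per_port:2d} lanes -> {t:7.2f} ns")
```

At the slow rate, a skinny port costs hundreds of nanoseconds per packet; at the fast rate, the same skinny port costs only a few nanoseconds, so quadrupling the port count (and lowering the network diameter) becomes the better trade.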

TPM: How have the network topology and the hop counts changed over time on the Cray interconnects?

Steve Scott: With the “Gemini” interconnect, there is a funny history behind all of that.

The original “SeaStar” routers and the routers that were in the T3D and T3E all implemented a 3D torus topology for the network. Gemini was built to do a higher radix network, basically like a fat tree – the larger the number of ports, the higher the branching factor in the fat tree and the fewer levels you need. Gemini was built to do a shallower fat tree, and then it turned out that, from a product perspective, there were great advantages to being able to upgrade current systems without changing the topology. We had a large number of XT3s and XT4s in the field, and they had all of this cabling infrastructure that was there as a 3D torus, so we ended up taking the Gemini router, combining ports together, and making it look like you had two nodes of a 3D torus. So in the field, we could just replace all of the router cards so every two SeaStar chips became one Gemini chip, and from a network perspective, Gemini looked like two nodes of a 3D torus.

The Gemini router could have done something more similar to the current “Aries” interconnect used in the XC30 and XC40, but we had not come up with the dragonfly topology yet. Gemini was going to be a high radix fat tree, kind of where InfiniBand is today. It was a product decision to use it as a 3D torus.

TPM: How did the port counts and network scalability evolve from SeaStar to SeaStar+ to Gemini to Aries?

Steve Scott: SeaStar had six router ports, plus and minus X, Y, and Z to make the 3D torus, in addition to a network interface port to talk to the local processing node. Gemini was a 48-port switch, with 40 external ports and 8 internal ports to talk to the local nodes, and it was called Gemini because it had two local network interfaces on the chip. The Gemini ports were 3 bits wide and the old SeaStar ports were 12 bits wide, so we ended up ganging the 40 Gemini ports together, four at a time, so they looked like ten SeaStar ports. If you draw a 3D torus with six ports coming out of each node, and then you draw a circle around a pair of nodes so they have one port connecting them internally inside of that circle, you have ten ports that are leaving that circle. This is how Gemini effectively looked like two of the SeaStar nodes.
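The port bookkeeping in that answer is simple enough to check directly. This is only arithmetic on the figures Scott gives (six torus ports per SeaStar node, 40 external Gemini ports, 3-bit versus 12-bit port widths); the constant and function names are illustrative.

```python
# Torus ports per SeaStar node: +/-X, +/-Y, +/-Z
TORUS_PORTS_PER_NODE = 6

def ports_leaving_pair(ports_per_node=TORUS_PORTS_PER_NODE):
    """Ports leaving a circle drawn around two torus neighbors.

    The one link joining the pair stays inside the circle and uses a port on each node.
    """
    return 2 * ports_per_node - 2

GEMINI_EXTERNAL_PORTS = 40
GEMINI_PORT_BITS = 3
SEASTAR_PORT_BITS = 12

gang = SEASTAR_PORT_BITS // GEMINI_PORT_BITS              # Gemini ports per SeaStar-width link
seastar_equivalent_ports = GEMINI_EXTERNAL_PORTS // gang  # SeaStar-width links per Gemini

print(ports_leaving_pair(), gang, seastar_equivalent_ports)  # 10 4 10
```

Both counts come out to ten, which is why one Gemini could stand in for two SeaStar nodes without recabling.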

It actually just worked out that way, and again, this was just a productization that allowed us to do a network swap just like we can do a processor swap.

TPM: Aries was a radically different animal in terms of its architecture?

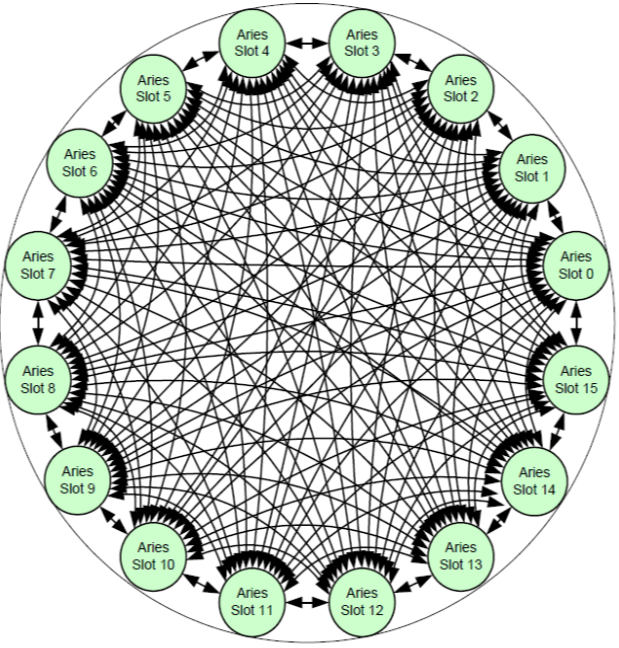

Steve Scott: Aries was developed as part of the DARPA HPCS program, and with this, we invented the dragonfly topology, which was created to take advantage of the high radix routers and build a network with a very low diameter and to minimize the number of expensive optical cables.

Beyond the changes in router technology, another way technology helped was the development of relatively inexpensive optical signaling. When you build a network from high radix chips, with a high number of ports that let you build a low diameter network with fewer hops between nodes, the one downside is that you end up with some long cable lengths. You can build a 3D torus and never have a cable that is longer than a couple of meters, which means you can use all electrical signaling over copper wires. To build a very low diameter topology, you end up with some very long links, and you need optics because you can’t do signaling at high rates over a fifteen-meter copper cable.

The optical links are still expensive, so what we wanted to do with dragonfly and Aries is decrease the cost of the system. The main innovation with the dragonfly interconnect is that it gives you a lot of scalable, global bandwidth at a relatively low cost. If you think about a fat tree, or a folded Clos, which is what it should technically be called, every time you grow the system beyond its current maximum size, you have to add another layer. If we think about a standard InfiniBand fat tree based on 36-port routers, a single-level fat tree can connect 36 nodes directly. If you go to a two-level fat tree, the top tier has 36 ports facing down and the lower tier has 18 ports facing down and 18 ports facing up, and that will scale to 18 times 36 nodes, or 648 nodes. With a third tier, you will have 18 times 18 times 36, or 11,664 nodes, and to go bigger than that, you will need a fourth tier.
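The fat tree arithmetic generalizes to one formula: the top tier points all k ports down, and every tier below it splits its ports half up and half down, so an L-level tree of k-port switches reaches k × (k/2)^(L-1) end nodes. A minimal sketch, assuming idealized full-bisection trees built from identical switches (the function names are illustrative):

```python
def fat_tree_max_nodes(radix, levels):
    """Maximum end nodes in an idealized folded Clos of radix-port switches."""
    if levels < 1:
        raise ValueError("need at least one level")
    half = radix // 2                # each lower-tier switch: half down, half up
    return radix * half ** (levels - 1)

def levels_needed(radix, nodes):
    """Smallest number of tiers whose maximum size covers `nodes` end points."""
    levels = 1
    while fat_tree_max_nodes(radix, levels) < nodes:
        levels += 1
    return levels

for levels in (1, 2, 3):
    print(levels, fat_tree_max_nodes(36, levels))
# 1 36
# 2 648
# 3 11664

print(levels_needed(36, 12000))  # 4
```

Crossing the 11,664-node ceiling by even one node forces the fourth tier Scott mentions, along with the extra switches and longer paths that come with it.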

InfiniBand has another limit in terms of the number of local identifiers, or LIDs, it can support, which is around 48,000 end points, and for the most part, people have stayed within that limit. There is an extended version, with somewhat higher packet overhead, that can scale further.
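The roughly 48,000 figure follows from the width of the LID field. The sketch below assumes the standard 16-bit LID layout from the InfiniBand specification, in which only part of the space is available for unicast addresses; the `lmc` parameter models LID mask control, which gives each port 2^LMC LIDs and shrinks the addressable count further.

```python
# Standard 16-bit InfiniBand LID space:
#   0x0000          reserved
#   0x0001-0xBFFF   unicast
#   0xC000-0xFFFE   multicast
#   0xFFFF          permissive LID
UNICAST_FIRST = 0x0001
UNICAST_LAST = 0xBFFF

def unicast_lid_count(lmc=0):
    """Ports addressable with unicast LIDs when each port takes 2**lmc LIDs."""
    return (UNICAST_LAST - UNICAST_FIRST + 1) // (2 ** lmc)

print(unicast_lid_count())       # 49151
print(unicast_lid_count(lmc=1))  # 24575
```

Switches also consume LIDs, so the number of end points a fabric can address in practice lands somewhat below this ceiling.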

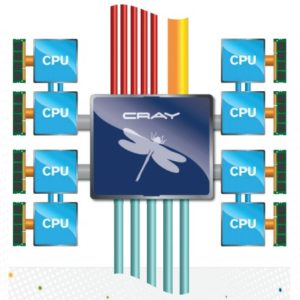

The idea behind the dragonfly topology is that you get the scalable global bandwidth of a fat tree but have the cost scale like a torus. There is a router chip directly connected to the compute nodes, and you never have to add another router chip to the system. So in a “Cascade” XC40 system using the Aries interconnect, you have the exact number of router chips necessary, whether you have two cabinets or two hundred cabinets, and the reason we can do that is that the network diameter remains constant. You don’t have to increase the number of hops as you grow the system, whereas in a fat tree, as you grow the system size, the diameter of the network has to grow, because to reach the majority of the nodes in the system you have to go all the way to the top and then all the way back down again.

In the dragonfly, you have a level of all-to-all connections that are local. In the Cascade system, you have all-to-all links between the sixteen Aries chips that live in a single chassis, so any two nodes in a chassis are one hop apart across the backplane. Then you have another set of all-to-all links between the six chassis in a two-cabinet group. These are all done with short copper cables. So you can get to any node in a two-cabinet group in no more than two hops, and this group would have 768 Xeon processors, which is fairly dense. Then there are optical cables that leave the two-cabinet group, and they are connected in an all-to-all topology. The result is that you can take two local electrical hops, a single global optical hop, and two local electrical hops, so with five hops, you can get anywhere in the system – up to tens of thousands of nodes.
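The group arithmetic can be checked the same way. The sketch assumes four nodes per Aries router (how XC blades are built, but not stated in the interview) and the dual-socket Xeon nodes mentioned later; with those assumptions, the 768-processor figure and the five-hop worst case fall out directly.

```python
NODES_PER_ARIES = 4       # assumption: four compute nodes share one Aries chip
SOCKETS_PER_NODE = 2      # dual-socket Xeon nodes
ARIES_PER_CHASSIS = 16    # all-to-all over the chassis backplane
CHASSIS_PER_GROUP = 6     # all-to-all over copper within a two-cabinet group

nodes_per_group = NODES_PER_ARIES * ARIES_PER_CHASSIS * CHASSIS_PER_GROUP
sockets_per_group = nodes_per_group * SOCKETS_PER_NODE

# Worst case: up to two local hops in the source group, one optical global hop,
# then up to two local hops in the destination group.
LOCAL_HOPS_PER_GROUP = 2
GLOBAL_HOPS = 1
max_hops = LOCAL_HOPS_PER_GROUP + GLOBAL_HOPS + LOCAL_HOPS_PER_GROUP

print(nodes_per_group, sockets_per_group, max_hops)  # 384 768 5
```

Note that none of these terms depends on how many groups the system has, which is what keeps the diameter constant as cabinets are added.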

So with Aries and dragonfly the network diameter is low and, notably, only a single optical hop is required, whereas any kind of fat tree or folded Clos network, even a moderate-sized one, will have a couple of optical hops – one to go up to the root switch and one to come down – and in larger networks, you might have two stages of optical links and four optical hops. With dragonfly, the network latency stays low because the number of hops doesn’t grow, and the network cost stays low because you don’t have to add extra router chips. You minimize the number of expensive links, and you integrate your switching into the compute cabinets rather than having separate networking cabinets.
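A rough comparison of worst-case hop counts, under simplifying assumptions: an idealized fat tree in which every inter-switch link is long enough to need optics, versus the Cascade dragonfly path described above. Real installations may keep some short links on copper, which would lower the tree's optical count; the function and constant names are illustrative.

```python
def fat_tree_worst_hops(levels):
    """Inter-switch hops on the longest path: up to the top tier and back down."""
    return 2 * (levels - 1)

DRAGONFLY_HOPS = 5           # two local, one global, two local
DRAGONFLY_OPTICAL_HOPS = 1   # only the global hop leaves the two-cabinet group

for levels in (2, 3):
    hops = fat_tree_worst_hops(levels)
    # Assumption: every inter-switch link in the tree is optical
    print(f"{levels}-level fat tree: {hops} hops, {hops} optical")
print(f"dragonfly: {DRAGONFLY_HOPS} hops, {DRAGONFLY_OPTICAL_HOPS} optical, at any size")
```

At these sizes the total hop counts are comparable; the difference Scott emphasizes is that the tree's diameter and optical hop count grow with each added tier, while the dragonfly stays at five hops with one optical link.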

TPM: How has the latency of these Cray interconnects changed over time, and how far can you push it? Depending on the network, we are in the high tens to low hundreds of nanoseconds for port-to-port hops, and it seems like at some point you hit the limit of physics.

Steve Scott: In fact, we are actually taking a small step backwards. As the industry moves to the next generation of signaling technology, which is probably going to be 50 Gb/sec with PAM4 signaling, and as we push to 25 Gb/sec and 50 Gb/sec, the error rates on the transmissions and the electrical margins are getting to the point where we need to add forward error correction on top of CRC error detection, which protects against flipped bits by detecting errors and retransmitting data. Forward error correction allows you to correct small numbers of flipped bits without retransmitting. It is a bit like ECC on main memory, except it is a rather complex code, and this adds latency on a per-hop basis. So it looks like every hop will require another 100-plus nanoseconds to do the forward error correction in addition to whatever latency is required to go through the core of the router chip.
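To illustrate the difference between detecting an error (CRC plus retransmission) and correcting it in place (forward error correction), here is a toy FEC code. This is a Hamming(7,4) code, swapped in purely for illustration: it corrects any single flipped bit in a 7-bit codeword, whereas real high-speed links use much stronger and more complex codes, such as Reed-Solomon codes, whose decoding is what adds the per-hop latency Scott describes.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit Hamming codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4          # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4          # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]   # positions 1..7

def hamming74_decode(c):
    """Correct up to one flipped bit without retransmission.

    Returns (data_bits, error_position), where error_position is the
    1-based index of the corrected bit, or 0 if the codeword was clean.
    """
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s4           # syndrome spells out the bad position
    if pos:
        c[pos - 1] ^= 1                  # flip it back in place
    return [c[2], c[4], c[5], c[6]], pos

data = [1, 0, 1, 1]
received = hamming74_encode(data)
received[4] ^= 1                         # one bit flipped on the wire
print(hamming74_decode(received))        # ([1, 0, 1, 1], 5)
```

A CRC would only tell the receiver that this codeword is bad and trigger a resend; the FEC decoder repairs it on the spot, at the cost of extra logic (and, for real codes, extra time) at every hop.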

So latency per switch per hop is not getting better, and in future generations it looks like it will be worse. We have gotten to the point where we have dropped the number of hops down to five, but with a higher radix switch – let’s say you built a 64-port switch – you could build a dragonfly topology that had only one internal, electrical all-to-all interconnect, and now you are down to three hops maximum across the topology. You could scale to over 250,000 nodes with a three-hop network, which is pretty cool.
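The three-hop figure can be reproduced with the balanced sizing rule commonly used for dragonfly networks: each router has p terminal ports, a - 1 local ports (one to every other router in its group), and h global ports, with a = 2p = 2h. That rule, and the assumption that every pair of groups shares exactly one global link, come from the published dragonfly work rather than from this interview; the function name is illustrative.

```python
def balanced_dragonfly(radix):
    """Largest balanced dragonfly with one all-to-all local level per group.

    p: terminals per router, a: routers per group, h: global links per router.
    Returns (p, a, h, groups, total_nodes).
    """
    # Ports used per router: p + (a - 1) + h = 4p - 1 when a = 2p and h = p
    p = (radix + 1) // 4
    a, h = 2 * p, p
    groups = a * h + 1            # each group has one link to every other group
    return p, a, h, groups, p * a * groups

p, a, h, g, n = balanced_dragonfly(64)
print(p, a, h, g, n)  # 16 32 16 513 262656
```

With 16 terminals, 31 local ports, and 16 global ports, the 64-port router has a port to spare, and the worst-case path is local, global, local: three hops for more than a quarter million nodes.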

You are not really going to get better than that. We know how to build a good high radix switch. This is where we need to be, and I don’t see the basic dragonfly topology, or its mix of electrical and optical signaling, needing to change any time in the not-too-distant future.

TPM: How does Aries compare to the future Omni-Path 2 series from Intel that will be deployed in future “Shasta” systems from Cray?

Steve Scott: The Omni-Path 2 network is largely based on the technology that Intel acquired from Cray in 2012. The first generation Omni-Path 1 is largely based on Intel’s acquisition of True Scale from QLogic. Intel has taken a little bit of the Cray technology with respect to the link protocol, but with respect to the switch internals and what topologies it supports and how you do routing, that does not show up until Omni-Path 2. We really like Omni-Path 2, we are quite familiar with it, in large part because of its heritage, and there will be some unique features in the Omni-Path 2 network that are exclusive to Cray. I don’t think we can talk publicly about what they are, but they are significant. We will be able to build a really nice Omni-Path 2 network, and we will have that in Shasta.

We also expect most of the processors in Shasta to come from Intel, but we want to be able to accommodate other processor technologies, so we are looking into open interconnects. We are not saying anything publicly about that yet. But we will have a network alternative, much as we do with our cluster systems, and we will ship Omni-Path 1 in our cluster systems as well.

We are happy with Aries until we get out to Omni-Path 2, which will definitely obsolete Aries.

In the EDR InfiniBand and Omni-Path 1 generations, signaling rates of 25 Gb/sec have now moved beyond Aries, which uses 12 Gb/sec and 14 Gb/sec signaling, basically the level of signaling in FDR InfiniBand. So these technologies have higher signaling rates. But if we look back to the Gemini era, QDR InfiniBand was out at 10 Gb/sec signaling at the same time that Gemini was doing 3.5 Gb/sec, so there was an even larger gap, yet Gemini scaled better and delivered better performance than InfiniBand at that time. In the same sense, we feel very comfortable with Aries as an interconnect until we get to Omni-Path 2, and that is because it is the only interconnect that supports the dragonfly topology and does adaptive routing, which gives it performance at scale.

If you look at actual latency under load in real systems, it is almost all queuing latency. Most of it comes from congestion and queuing in the network, and not the minimum flight time of a packet through an unloaded network. What always gets quoted, because it is easy in a benchmarking sense, is what is the minimum MPI latency through an unloaded network between two nodes. In reality, as you start scaling up the system size, you start getting contention and the fact that Ethernet and InfiniBand do static routing leads to all sorts of contention and reduced efficiency in the network, and so they just don’t tend to scale well under load. That’s why Gemini performed well against InfiniBand at the time, and it is why we still feel good about Aries out through 2018.
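A toy model makes the static-versus-adaptive point concrete. It is not a network simulator: it assumes eight flows that must share eight equal-cost paths, a static scheme that picks a path from the destination ID alone (destination modulo path count, a hypothetical stand-in for deterministic table routing), and an adaptive scheme that sends each flow down the currently least-loaded path.

```python
def static_loads(dests, num_paths):
    """Flows per path when the path is a fixed function of the destination."""
    loads = [0] * num_paths
    for d in dests:
        loads[d % num_paths] += 1
    return loads

def adaptive_loads(dests, num_paths):
    """Flows per path when each flow takes the least-loaded path at the time."""
    loads = [0] * num_paths
    for _ in dests:
        loads[loads.index(min(loads))] += 1
    return loads

# A perfectly regular, but unlucky, traffic pattern: destinations 0, 8, 16, ...
dests = [8 * i for i in range(8)]
print(max(static_loads(dests, 8)), max(adaptive_loads(dests, 8)))  # 8 1
```

Under static routing every flow lands on the same path while the other seven sit idle, so the flows queue behind one another; the adaptive choice spreads them out. An unloaded two-node latency benchmark never exercises this kind of contention.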

TPM: Some of the LINPACK benchmark numbers do not show this benefit, however, and in fact, InfiniBand often looks better than Cray interconnects in terms of efficiency – measured performance divided by peak theoretical performance.

Steve Scott: LINPACK does not exercise the interconnect in any substantial way.

TPM: What is the delta between Aries and InfiniBand or Ethernet for real workloads then? Can you scale the Aries interconnect down and take on those alternatives on smaller-scale clusters? Or will Aries and then Omni-Path 2 be a high-end interconnect aimed at very large systems? I am thinking about the enterprise customers that Cray wants to sell to as well as national labs.

Steve Scott: It certainly does scale down, and in fact it can do so relatively efficiently. Even at a small scale, the dragonfly topology will have relatively low overhead in terms of the number of switches that you need. But the differentiation really drops off at very small scale. If you have a 36-node cluster, all of the nodes are linked directly to a single switch, and you can’t really do better than that. What we see at small scale, comparing InfiniBand running on our Cluster Systems versus Aries running on Cascade XC systems – with the same compute nodes, dual-socket Xeon – is that the performance is basically the same on both. The Cray Linux Environment might give you a 5 percent increase over a stock Linux, but from a network perspective, they look basically identical.

And then you get up beyond a few hundred nodes and you start seeing the difference. There is contention in the Cluster Systems, and the XC line starts to pull away.

The bottom line is that Aries is perfectly fine at a small scale, but you really don’t get the differentiation.

TPM: Are you interested in getting customers to choose XC machines over Cluster Systems using InfiniBand or Omni-Path, so they are able to ramp up their clusters later rather than having to change architectures?

Steve Scott: There is one area where it is interesting. This has nothing to do with the network topology, but the ability of Aries to do very low overhead, fine-grained, single-word, Remote Direct Memory Access (RDMA) references efficiently is intriguing. InfiniBand is getting better here, but the ability of Aries to do high rates of single word references can enable certain workloads even at the scale of a single cabinet.

Most MPI workloads don’t need to do fine-grained messaging or remote memory access, but some of the things we are looking at in the data analytics space – and in particular graph analytics – look like they can be accelerated using the capabilities of the Aries interconnect.

TPM: We have been thinking about how a future Urika appliance might be architected, oddly enough, on a converged Cray platform.

Steve Scott: I can say that we have done work on Aries looking at graph analytics workloads, and it looks very promising.

TPM: Will you need to upgrade the ThreadStorm multithreaded processors in this future converged machine?

Steve Scott: You can do it just great with a Xeon. We are not planning on doing another ThreadStorm processor. But it does take some software technology that comes out of the ThreadStorm legacy.

TPM: What about ARM processors? Are they something that you can marry to Aries, or will you use a different interconnect there? We know that Cray is doing some exploratory work with the US Department of Energy with ARM processors.

Steve Scott: There is nothing really special about ARM, it is just another instruction set, and any processor that has a PCI-Express interface can connect to any interconnect interface that supports it – Aries, InfiniBand, Ethernet, Omni-Path, or otherwise. You are probably not going to see ARM processors with integrated Omni-Path NICs.

PCI-Express works just great except for the most aggressive, single-word, direct memory access workloads. This is a niche within the HPC market, and there you could do better with an integrated NIC than you can over PCI-Express. That said, you can still do pretty well over PCI-Express, and today that is what Aries uses.

We see a lot of people who are interested in ARM, but they are waiting and seeing what happens. I think if you go down the road a few more years, you will see more competitive HPC processors in that space.
