There is a simple test to figure out just how seriously social network Facebook is taking machine learning, and it has nothing to do with research papers or counting cat pictures automagically with neural networks. If Facebook is designing and open sourcing hardware to support machine learning at scale, then it is serious.

At the Neural Information Processing Systems 2015 conference in Montreal, Quebec this week, Facebook took the wraps off of a custom server, code-named “Big Sur,” that it has created to run its neural network training algorithms. Like most of its hyperscale peers, Facebook is accelerating the running of those training routines against data sets using GPU coprocessors, and Yann LeCun, the inventor of the convolutional neural network and a professor at New York University, is also one of the founders, along with Serkan Piantino, of the Facebook AI Research center at Facebook’s facilities in New York City. We spoke to LeCun about the future of deep learning hardware at the Hot Chips conference back in August, weighing the use of GPUs against FPGAs for neural network training.

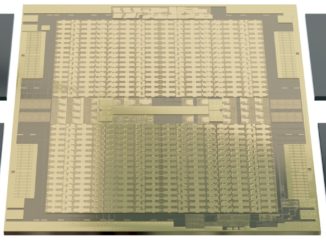

The Big Sur machine that Facebook has unveiled is powered by Nvidia’s latest Tesla M40 accelerator, which the company announced last month and which were aimed squarely at the hyperscalers and their neural network training needs.

Piantino said in a blog post announcing the Big Sur effort that Facebook was tripling its investment in GPU-accelerated systems as it boosts its research efforts in machine learning and seeks to have its software developers embed neural networks into more and more of the company’s services. The company did not divulge how many GPUs it already has in production as neural net accelerators, but clearly the volumes are now sufficient that the company is willing to invest engineering time and money into creating its own machines for this purpose. This engineering effort also implies that Facebook expects to see a rapid ramp in neural network training and it wants to trim costs by making its own machine and not using a third party GPU-capable system as it has been doing.

Even though the Open Compute Project, which was founded by Facebook, is five years old, it might be a bit surprising that every machine in use in the company’s server and storage systems is not based on an OCP design. We have been inside the Forest City, North Carolina facility and there was plenty of non-OCP iron still in use because once Facebook buys a server, it needs to get its money back out as work. Moreover, it takes a certain volume of machinery installs for Facebook to start designing one to replace one it could just buy in a low volume.

With the minimalist approach and specific tailoring that comes from its own hardware designs, Facebook is able to shave off hardware costs, reduce the complexity of its infrastructure (meaning easier management and fewer failures), and integrate the machines better into its Kobold server provisioning system and FBAR automated remediation system. And that is why Facebook is designing the Big Sur system. Helping others make use of what it has learned and building an ecosystem of suppliers for the Big Sur machinery is why Facebook eventually plans to open source the hardware design through the Open Compute Project. The precise timing of that was not divulged, but it is reasonable to expect that announcement at the Open Compute Summit in March 2016.

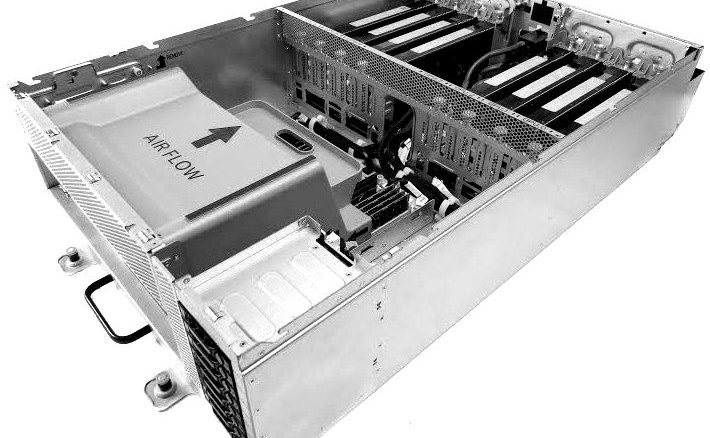

As you can see from the picture above, the Big Sur GPU-accelerated machine has a CPU component and a GPU component. The CPU complex is on a motherboard of its own that supports two “Haswell” Xeon E5 v3 processors and sixteen DDR4 memory slots. The Big Sur motherboard will be able to use the forthcoming “Broadwell” Xeon E5 v4 processors, which are coming out early next year and which are socket compatible with the Haswells.

The Big Sur system has a separate midplane, which you can see on the right side of the image above, that has room for eight PCI-Express 3.0 x16 slots, which can be any kind of peripheral that Facebook might want to use but which in this case will be eight of the Tesla M40 accelerators. The system is capable of supporting any kind of accelerator so long as it fits into a 300 watt thermal envelope. The peripheral slots are linked to each other and to the processor using a PCI-Express switch. Kevin Lee, an engineer on the infrastructure team at Facebook, tells The Next Platform that this switching allows for a number of different configurations when running neural networks.

“We are flexible in configuring the topology with this design,” Lee explains. “We can configure the system to optimize for peer to peer transfers, host to peer transfers, and even rearranging the NIC placement to utilize Nvidia GPUdirect. We have found that our optimal configuration is where the data can be transferred between all eight GPUs without returning to the CPU root complex.”

The Big Sur machine is light and airy compared to other GPU-packed servers even though it is probably drawing around 3,000 watts fully loaded. One of the reasons this is possible is that the Open Compute design puts power shelves and battery backup outside of the server enclosure and into the racks themselves. You can see the power feed coming into the system between the two banks of GPU accelerators.

The Big Sur system has eight 2.5-inch storage bays on the right side of the server, which are accessible from the front. These can support SATA devices, either disks or flash-based SSDs, but Lee says that Facebook is using SSDs to speed up data transfers.

While not divulging what prior machines it used for training neural networks, Lee did say that that these systems were based on Tesla K40 GPU accelerators from Nvidia and only have four of these in the box “due to topology, thermal, and power issues” and added that Facebook’s prior GPU machines “were also hard to service and manage at scale.” It took over an hour to remove the motherboard on the prior platform, whereas on Big Sur it only takes a minute; moreover, the memory sticks and drives are also easily accessible and pop right out, as do the accelerator cards, all without tools. The only thing that needs a screwdriver to remove is the heat sinks on top of the two Xeon E5 CPUs. This may not seem like a big deal, but once you have 5,000 or 10,000 machines, the speed of repair becomes a bottleneck because something is always failing.

The density on the Big Sur box is good, but it is not anything the market does not already offer. Colfax, for instance, has a 4U rack-mounted server that has two Haswell Xeon E5 processors and up to eight Tesla GPU accelerators plus two dozen 2.5-inch disks. This Colfax CX4860 machine has a board for the Xeons and another board for the Teslas, and it packs 50 percent more memory than the Facebook machine. The GPUs draw power from the CPU motherboard and there are four PCI-Express switches on the GPU portion that route through two PCI-Express slots back to the system board. There are other such machines that support four or eight GPUs.

And for the mother of all dense GPU machines, we can go all the way back to August 2010 when Dell launched the PowerEdge C410X, a PCI-Express expansion chassis that came in a 3U form factor and that crammed a whopping 16 GPUs (a dozen on the front and four in the back) into the enclosure and that linked back to its PowerEdge C6100, a four-node machine with two Xeon sockets per node, all in 2U of space and linking to the GPU blade server (that is basically what it was) through PCI-Express switching. So in 5U of space, more than five years ago, Dell could put eight processors and sixteen GPUs. This machine was specially built by Dell’s Data Center Solutions group for an oil and gas company doing seismic processing that was unsure what ratio of CPU-to-GPU it needed, so it wanted it to be flexible. This Dell box capped the power draw for the accelerators at 225 watts, however, not the 300 watts that the Big Sur machine can handle.

Doing More Work Or Doing It Faster

Based on early results, Facebook says that the Big Sur machine with eight Tesla M40 accelerators has about twice the performance running its neural network training algorithms than the system with four of the Tesla K40 coprocessors. That performance can either be used to run training sessions twice as fast, says Lee, or to train networks that are twice as large. Both the Tesla K40 used in Facebook’s previous system and the Tesla M40 used in Big Sur offer 12 GB of GDDR5 frame buffer memory for holding data and networks.

This stands to reason. The Tesla K40, which is based on the “Kepler” GK110B GPU, has a peak rating of 5 teraflops with GPU Boost overclocking on and fits in a 235 watt power envelope. The Tesla M40 is based on the “Maxwell” GM200 GPU, which peaks at 7 teraflops in a 250 watt power envelope. So the peak GPU performance of the old Facebook neural net training machine was 20 teraflops and the new one is 56 teraflops, or a factor of 2.8X. It looks like sustained performance might be only 2X on neural network training, and that could be because the memory is not scaling in parallel with single precision teraflops. (You would need 16.8 GB per Tesla M40 to do that.)

The difference between peak teraflops and Facebook performance on neural nets could also be an effect from the difference between spreading algorithms and data across eight GPUs instead of four. You can never escape the overhead of queuing. But with NVLink ports between GPUs starting next year, the bandwidth will be a little bit higher (20 GB/sec per NVLink port versus 16 GB/sec for PCI-Express) and presumably the latency will be lower, although we don’t know the cost. Facebook likes to use standard and cheap technologies because at scale, it is all about price/performance, unlike with HPC centers, where it is all about performance for its own sake. The point is, we don’t know how much enthusiasm there is for NVLink among the hyperscalers for linking GPUs to each other or for linking GPUs to CPUs, particularly if the Xeon processor doesn’t have NVLink ports. (Power8+ chips will next year and ARM chips could.)

The Big Sur system is obviously interesting for use cases other than neural network training. Any PCI-Express device that requires its own power to run can be plugged into the system, and that could mean FPGA or DSP accelerators. Even PCI-Express flash cards could be used in there to create a killer flash storage array front-ended by some pretty hefty CPU. At Facebook, we can envision all kinds of workloads where single precision floating point processing is useful, including video encoding, video streaming, and virtual reality, running on Big Sur.

As for running the inference code that results from a trained neural network model, the Tesla M4 is better suited to this and can be powered directly from the PCI-Express slot. To create a Big Sur variant for this workload, all Facebook has to do is tweak the power distribution a little and the machine could be populated with Tesla M4s. In fact, given the relative small size of the Tesla M4 (it is a half height, half width board), we can envision such a machine having two small two-socket motherboards and 16 Tesla M4 accelerators all crammed into the same space. You might even call it Little Sur.

Well, it’s not so impressive at all.

In 2012 a guy named Jeremi Gosney @jmgosney has build a machine with 8 Radeons, then stack 5 machines in a cluster of 25 video cards, The software used was Mosix VCL Cluster.