For most applications running in the datacenter, a clever distributed processing model or high availability clustering is enough to ensure that transaction processing or pushing data into a storage server will continue even if there is an error. But there is always a chance of data loss, and for some applications, this is not acceptable.

This is where NVDIMM memory, short for Non-Volatile Dual Inline Memory Module, a hybrid that welds non-volatile flash memory with regular DRAM, comes into play. With NVDIMMs, you get the low latency and high bandwidth of DRAM, and data is quickly replicated to flash memory on the module. In the event of a power failure in the server, there is still a chance that data might not make it from the DRAM to the flash on the NVDIMM module, so Micron also has a variant with a capacitor that will keep the module powered up long enough to dump the contents of DRAM into flash on the module.

Most servers these days use DDR4 main memory, and Micron Technology is bringing a new set of NVDIMMs to market that adhere to the DDR4 standard. Micron is one of the biggest plain vanilla memory makers in the world and is also Intel’s development and manufacturing partner for its regular NAND flash memory and its forthcoming 3D XPoint non-volatile memory. The launch comes a little more than a year after DDR4 memory went mainstream with Intel’s “Haswell” Xeon E5 v3 processors, an expected lag for an exotic technology that will not be high volume but will be important in selected parts of the hardware platform stack for many applications.

These new DDR4 NVDIMMs from Micron are follow-ons to DDR3-based NVDIMMs that Micron manufactured and sold through Agiga Tech, and they adhere to a new set of standards for how firmware works with NVDIMM that has been adopted by JEDEC, one of the key standards bodies in the microelectronics industry and one that focuses on memory architectures and interfaces. With the new DDR4 NVDIMMs, Micron is not only making the modules but also taking them to market itself through its various partner channels. Ryan Baxter, director of marketing for the compute and networking business unit at Micron, tells The Next Platform that it is doing so because Micron believes that NVDIMM will be more of a mainstream part for servers in the DDR4 generation than it was in the DDR3 era.

In many cases, it is cheaper and smarter to add NVDIMM memory to a system to protect fast-moving and critical data than to try to ensure the viability of that data in some other fashion. Pairing NAND flash memory with DRAM on the memory stick radically reduces latency compared to taking data and pushing it out to a PCI-Express flash card or, worse still, to a flash-based SSD that is hanging off a controller or, if you are lucky, linked to the compute complex through direct ports using the new NVM-Express protocol that is just starting to be embedded in systems.

While NVDIMM memory got its uptake in clustered storage arrays, Baxter tells The Next Platform that Micron is anticipating wider use of the technology, starting with hyperscale companies and of course those engaged in high performance computing in its myriad forms.

With traditional storage arrays, making sure that data is replicated for safe keeping means having pairs of very expensive RAID controller cards – one in a master node, and another in a slave node in the cluster – with non-volatile memory embedded in them. Data comes into the master RAID card and is pushed to its non-volatile storage and at the same time replicated out over the network – very likely 10 Gb/sec Ethernet but maybe even InfiniBand at 20 Gb/sec or 40 Gb/sec, depending on the architecture – to the second RAID card, where it is also put into non-volatile memory on the controller. Once the data is committed to both sets of non-volatile memory, it is then pushed out to whatever permanent storage sits on the controllers (be it disk or flash storage) and flushed from the non-volatile memory in the RAID controllers using whatever protocol is set up. This process takes on the order of hundreds of milliseconds to complete, which is an eternity for applications that need faster turnaround time than this.

With the NVDIMM approach, such system clustering for storage workloads can be radically simplified, says Baxter. “Instead of using exotic RAID cards and high speed Ethernet links, you use the Non-Transparent Bridge that ships with most Xeon-class processors to mirror that data into a slave using Remote Direct Memory Access into the NVDIMM and you are done. There is no waiting around for the write to complete in non-volatile memory in a second RAID card.”

Non-Transparent Bridge, or NTB, is a way of linking machines together directly over their PCI-Express buses, a kind of PCI-Express fabric, if you will. With this mix of NTB and NVDIMMs, it takes far fewer CPU cycles to ensure the data is protected, and you go down to something on the order of a few tens of microseconds for the whole cycle to complete, which is around four orders of magnitude – tens of thousands of times – faster.
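As a rough check on that comparison, the arithmetic can be sketched in a few lines of Python. The two latency values are illustrative picks from within the quoted ranges ("hundreds of milliseconds" and "a few tens of microseconds"), not measurements, and the variable names are ours.

```python
import math

# Illustrative values only: the article quotes ranges, not exact figures.
raid_mirror_latency_s = 200e-3    # assumed: 200 ms for the dual RAID card path
nvdimm_mirror_latency_s = 20e-6   # assumed: 20 us for the NTB plus NVDIMM path

# Ratio of the two latencies and the matching power of ten.
speedup = raid_mirror_latency_s / nvdimm_mirror_latency_s
orders_of_magnitude = math.log10(speedup)

print(f"speedup: {speedup:,.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.1f}")
```

Picking other points inside the quoted ranges moves the result by a factor of a few in either direction, which is why the comparison is best read as "around" four orders of magnitude.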

Baxter says that this use of NVDIMM memory was popular for both traditional storage array appliances sold by the big brand-name vendors and also caught on with the hyperscalers and cloud builders who create their own storage servers and are more likely to use means other than RAID controllers – perhaps software-based RAID or erasure coding – to ensure the viability of data.

“That was the original use case for NVDIMM, but since then, demand for NVDIMM has really come from out of the woodwork,” says Baxter. “If your application relies on a set of variables that need to be in the critical path to ensure the performance of the applications and those variables need to be persistent – you cannot lose the data that is associated with those variables for whatever reason – those are typically variables that are stored on a hard disk drive or an SSD today. If your application depends on the low latency of those variables to perform well, chances are that if you move those variables into main memory and move it out of the critical path, you will likely see an immediate impact on performance acceleration. NVDIMM allows you to create a very low latency portion of your storage hierarchy to accomplish this.”

Baxter says that most applications involving NVDIMM use it as a block-mode storage device, although that is not the only way to make use of it, and they therefore get the latency benefit of being on the memory bus instead of the PCI-Express bus. To give you an idea of the performance difference, an NVDIMM module with 32 GB of DRAM backed by flash can deliver around 35 GB/sec of bandwidth, compared to 600 MB/sec on the fastest PCI-Express flash card. And the latency for the NVDIMM is on the order of 5X to 10X lower, too. This is using block mode addressing, which makes the NVDIMM look like a block device such as a disk drive or flash drive, even though it is not. Once you move into directly mapping the device, which gives the ability to address at the byte level, then the latency will drop by a factor of 10X to 20X compared to PCI-Express flash cards. “In this case, the only bottleneck is how fast you can write to the media, which in this case is DRAM,” says Baxter.
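The difference between the two access styles can be illustrated with a small Python sketch. It is not Micron's software stack: a temporary file stands in for the NVDIMM-backed device, the 4 KB block size is an assumption, and the function names are hypothetical. It uses only the standard `os` and `mmap` modules and assumes a Unix-like system.

```python
import mmap
import os
import tempfile

BLOCK_SIZE = 4096  # assumed block size for the block-mode path

# A temporary file stands in for the NVDIMM-backed device (assumption).
fd, path = tempfile.mkstemp()
os.ftruncate(fd, BLOCK_SIZE * 4)

def update_block_mode(fd, offset, value):
    """Block mode: read the whole block, patch it, write the whole block back."""
    block_start = (offset // BLOCK_SIZE) * BLOCK_SIZE
    block = bytearray(os.pread(fd, BLOCK_SIZE, block_start))
    pos = offset - block_start
    block[pos:pos + 8] = value.to_bytes(8, "little")
    os.pwrite(fd, bytes(block), block_start)

def update_mapped(mapping, offset, value):
    """Direct mapping: store only the eight bytes that changed."""
    mapping[offset:offset + 8] = value.to_bytes(8, "little")

update_block_mode(fd, 5000, 42)

with mmap.mmap(fd, 0) as mapping:
    update_mapped(mapping, 6000, 43)
    mapping.flush()
    # Both updates are visible through the same mapping.
    first = int.from_bytes(mapping[5000:5008], "little")
    second = int.from_bytes(mapping[6000:6008], "little")

os.close(fd)
os.remove(path)
print(first, second)
```

In the block path, an 8-byte update moves a full 4 KB block in each direction. In the mapped path, only the changed bytes are stored, which is the byte-level addressing described above.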

NVDIMM is going to be a bit more mainstream with the DDR4 generation than it was with the DDR3 generation, according to Micron, which is why it is making and distributing the product itself this time around. One of the driving factors is the move away from storage appliances with expensive RAID controllers to cheaper storage servers with clustered architectures and software-based controllers and high availability features, as discussed above. But another factor will be more widespread driver support in Linux and Windows Server next year, and more widespread BIOS support, too.

“All of these frictions are slowly being removed,” says Baxter, who reckons that during the DDR3 era, somewhere between 50,000 and 100,000 NVDIMM modules were sold each year, but that starting with the DDR4 NVDIMMs, Micron is modeling for sales of hundreds of thousands to maybe even over a million units per year. This is in a market that consumes 10 million servers and, by our back-of-the-envelope math, probably somewhere around 50 million to 75 million memory sticks a year.

“That is what we are estimating, and that is probably a low water mark. Over the next 12 to 18 months we will be out there generating interest in NVDIMMs, and we have seen quite a bit of interest from the high frequency traders, who have plenty of those persistent variables and it is all about low latency in that business. We are seeing more and more of the next platforms coming out supporting NVDIMM, from OEMs, ODMs, and system integrators. But we are not under any delusion that this is going to be a 20 percent to 40 percent attach rate on all servers sold. We are expecting something south of 10 percent.”
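To put those unit volumes in proportion, here is a back-of-the-envelope sketch in Python. The market sizes and DDR3 volumes come from the figures quoted above. The 200,000 lower bound for "hundreds of thousands" and the two NVDIMMs per server are our assumptions, made only so the arithmetic can run.

```python
SERVERS_PER_YEAR = 10_000_000
STICKS_PER_YEAR = (50_000_000, 75_000_000)   # low and high market estimates
DDR3_UNITS = (50_000, 100_000)               # NVDIMMs per year, DDR3 era
DDR4_UNITS = (200_000, 1_000_000)            # assumed endpoints for the DDR4 projection

def share_of_sticks(units, sticks):
    """Lowest and highest share of all memory sticks that are NVDIMMs."""
    return units[0] / sticks[1], units[1] / sticks[0]

def server_attach_rate(units, modules_per_server):
    """Share of servers with NVDIMMs, if each such server takes a fixed count."""
    return tuple(u / modules_per_server / SERVERS_PER_YEAR for u in units)

ddr3_share = share_of_sticks(DDR3_UNITS, STICKS_PER_YEAR)
ddr4_share = share_of_sticks(DDR4_UNITS, STICKS_PER_YEAR)
ddr4_attach = server_attach_rate(DDR4_UNITS, modules_per_server=2)  # assumed

print(f"DDR3 share of sticks: {ddr3_share[0]:.2%} to {ddr3_share[1]:.2%}")
print(f"DDR4 share of sticks: {ddr4_share[0]:.2%} to {ddr4_share[1]:.2%}")
print(f"DDR4 server attach rate: {ddr4_attach[0]:.1%} to {ddr4_attach[1]:.1%}")
```

Under these assumptions the server attach rate stays in the single digits, in line with Baxter's expectation of something south of 10 percent.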

Diablo Technologies, which worked with SanDisk on the ULLtraDIMM flash-based, block-level memory and developed its own Memory1 flash-based memory, is similarly optimistic about persistent memory, although it has a different twist on it.

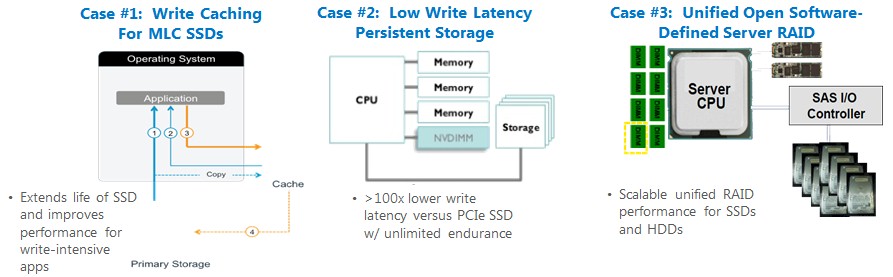

Micron knows that NVDIMMs are not for every use case, but that does not mean there will not be any. The HFT example above is one, and the high availability for storage mentioned at the beginning of this story is another. In many cases, there will be benefits from being able to do work faster and benefits for being able to do it on simpler and less costly hardware than has been possible in the past when data absolutely cannot be lost and must be very quickly accessed. Here are a few more scenarios:

The first case cited above is a common use case for NVDIMM among hyperscalers, and it is one that has actually been put into practice, not just some theoretical use case. The hyperscaler in question, who was not identified of course, wanted to use SSDs based on multi-level cell (MLC) flash memory in its primary storage because single-level cell (SLC) flash was too expensive, even if it is faster and more reliable. The problem with flash is that writes are slow and wear out the memory cells, and the variables in this hyperscaler’s infrastructure were changing so fast that the MLC flash drives were wearing out in three to four months. So this hyperscaler decided to put NVDIMMs in the storage servers to act as write caches for the flash in the box. This extended the life of the flash SSDs to the three to five years they are warranted for, and the NVDIMM paid for itself in 6 to 9 months, depending on the drive in question.
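Why a write cache cuts flash wear can be shown with a toy model in Python. This is a sketch of the general write-coalescing idea, not the hyperscaler's actual design: the class, the flush interval, and the update counts are all hypothetical.

```python
class WriteCoalescingCache:
    """Toy write-back cache: absorbs repeated updates, flushes each key once."""

    def __init__(self):
        self.dirty = {}        # stands in for the NVDIMM-resident cache
        self.flash = {}        # stands in for the MLC flash SSD
        self.flash_writes = 0  # writes that actually reach flash

    def write(self, key, value):
        # Overwrite in the cache; nothing touches flash yet.
        self.dirty[key] = value

    def flush(self):
        # Only the latest value of each dirty key is written to flash.
        for key, value in self.dirty.items():
            self.flash[key] = value
            self.flash_writes += 1
        self.dirty.clear()

cache = WriteCoalescingCache()
updates = 0
for step in range(1000):
    for key in ("counter_a", "counter_b", "counter_c"):
        cache.write(key, step)
        updates += 1
    if (step + 1) % 100 == 0:  # assumed flush interval
        cache.flush()

print(f"application updates: {updates}")
print(f"flash writes: {cache.flash_writes}")
```

Because the cache is non-volatile, updates held between flushes survive a power failure, which is what makes it safe to delay the flash writes in the first place.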

Other customers, says Baxter, are looking to improve in-memory database processing with the use of NVDIMMs. The idea here is not to store an entire database on NVDIMMs, but rather to store the metadata for the in-memory database there. In the event of a system crash or power failure, metadata that is stored on flash-based SSDs is much slower to process to recreate the database in memory than metadata stored on NVDIMMs. Moreover, because main memory is so fast and the performance gap between main memory and flash on SSDs is so wide, many millions of operations on the metadata for an in-memory database can be lost when a crash happens, which means transactions are lost. For good. Which is bad.

The DRAM in the NVDIMM needs power even if the server fails, and can be backed up by a supercapacitor that fits in a 2.5-inch server bay (shown above) or by another one that fits into an adjacent memory slot in the server. This backup takes approximately 40 seconds for the 8 GB NVDIMMs.

Baxter says that the server platforms that are coming out in 2016 – presumably with the “Broadwell” Xeon E5 v4 server chips from Intel – can have 12 volt power routed directly to the memory slots from outside lithium ion batteries or from rack-based battery packs that are commonly installed to keep servers up during brownouts or crashes for a short period of time. These latter approaches will obviate the need for supercapacitors, which can hold a limited amount of power, just sufficient to keep the memory charged up long enough to dump copies of its bits onto the NAND flash on the NVDIMM stick.

NVDIMM memory is not going to be cheap. It has DRAM memory and then enough NAND on the stick to hold two copies of the data stored on the DRAM, plus the requisite controllers to link this all together. Baxter says that NVDIMM will cost anywhere from 1.5X to 2X the cost of the same amount of plain vanilla DRAM, clock for clock and capacity for capacity. The supercapacitors cost anywhere from $70 to $120 each, depending on the form factor and charge capacity. The NVDIMMs come in 8 GB capacities now, and they are available with the prior command set used in the DDR3 NVDIMMs sold by Agiga Tech and the new standard command set created by JEDEC. Baxter says that these 8 GB NVDIMMs are being used mostly in proofs of concept and that the 16 GB sticks that Micron plans to ship in the first half of 2016 (more or less concurrent with the Broadwell Xeons) are the ones that customers are interested in deploying in production, and the vast majority will support the new JEDEC standard.

The 8 GB NVDIMM memory stick will probably cost in the $75 to $80 range, says Baxter, and a little less than that for high volumes. The 16 GB NVDIMM stick will probably run 1.9X that because it will be using higher density DRAM, which will bring the price down a little. The 32 GB NVDIMMs will probably require DRAM chips to be stacked up two deep, says Baxter, and because these will require extra circuits for managing higher energy consumption and will carry a higher manufacturing cost, the 32 GB NVDIMM should cost about 4.2X that of the 8 GB stick – an estimate of maybe $315 to $336 a pop. There are no plans to do 64 GB NVDIMMs at the moment; this would require stacking the DRAM four high, and Baxter says that Micron can do it if it chooses to. It all comes back to customer demand, and their willingness to pay a premium for this density.
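The price estimates follow from the 8 GB base price and the quoted multipliers, as this short Python sketch shows. The per-gigabyte figures are our own derivation from those numbers, not something Micron quoted, and the function name is hypothetical.

```python
BASE_PRICE_8GB = (75.0, 80.0)              # quoted low and high estimate, USD
MULTIPLIERS = {8: 1.0, 16: 1.9, 32: 4.2}   # quoted price relative to the 8 GB stick

def estimate_price(capacity_gb):
    """Return the (low, high) price estimate in dollars for a given capacity."""
    mult = MULTIPLIERS[capacity_gb]
    return BASE_PRICE_8GB[0] * mult, BASE_PRICE_8GB[1] * mult

for capacity_gb in MULTIPLIERS:
    low, high = estimate_price(capacity_gb)
    print(
        f"{capacity_gb:>2} GB: ${low:.2f} to ${high:.2f} "
        f"(${low / capacity_gb:.2f} to ${high / capacity_gb:.2f} per GB)"
    )
```

On these numbers the 16 GB stick is slightly cheaper per gigabyte than the 8 GB stick, while the 32 GB stick is slightly more expensive per gigabyte, which matches the premium for stacked DRAM described above.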

The natural thing to do is to compare NVDIMM to the forthcoming 3D XPoint memory that Intel and Micron have been working on together for more than a decade and that will also start sliding into the memory hierarchy next year. Micron has not said much about its plans for 3D XPoint, but Intel has said that it will sell this new memory under the Optane brand, starting first with SSDs in 2016 and to be followed with the M.2 “gumstick” form factor, sticks that are compatible with normal DDR4 pinouts, PCI-Express flash cards, and U.2 form factor drives, which plug into NVM-Express ports but which slide into SSD form factors. The DDR4-style 3D XPoint sticks are expected with the future “Skylake” Xeon E5 v5 server chips, as The Next Platform revealed back in May.

As for 3D XPoint, it is slower than DRAM on writes and Baxter says it has asymmetric read and write time latencies – something that no one has talked about as far as we know but which also is an issue with NAND flash. And while 3D XPoint has more endurance than NAND flash, it is not nearly infinite like DRAM. (We told you about the performance of 3D XPoint SSDs versus NAND flash SSDs here.)

“The fact of the matter is that there is still no persistent memory that equals the performance of DRAM,” says Baxter, “and so for that reason we actually see DRAM-based NVDIMM co-existing with 3D XPoint for quite some time. There are applications that move to 3D XPoint for the capacity benefits, but if you need the latency and the persistency, you would still likely use NVDIMM in conjunction with 3D XPoint, probably as a front-end cache.”

It looks like servers out there on the cutting edge won’t just have lots of different compute, but also lots of different memory.
