There is a perfect storm developing that is set to whisk the once esoteric field programmable gate array (FPGA) processor ecosystem off the ground and into the stratosphere.

While some might argue the real momentum developed when Intel purchased FPGA maker Altera for an enormous sum earlier this year, many others, especially those in the hardware and software trenches at large telco, banking, and genomics companies, could explain how the impetus for an FPGA boom has been gathering force for far longer.

FPGAs are certainly nothing new, but the opportunities for exploiting them are only now becoming clear to a far wider set of domains. Some companies have already rolled out platforms with FPGAs at the core, backed by a variety of host processors (from ARM to x86), but one could suggest they were too early to the game: their systems either required a vast amount of expertise to program and use or, conversely, sacrificed too much performance in favor of programmability. So what has changed? With that question in mind, let’s use genomics as a prime example.

Aside from the idea that Intel will integrate the reconfigurable devices on its chips sometime in the future, there is a growing demand for what FPGAs can lend to data-rich industries, including the genomics market. The reasons go beyond a slowing Moore’s Law (in the face of growing data volumes and compute requirements), the added complication of declining costs per genome, and the increase in demand for faster, cheaper sequencing services. Companies like Convey Computing saw this opportunity a couple of years ago in their efforts to integrate FPGAs onto high-end server boards, but following Convey’s acquisition by Micron, that future is on hold, and the market is open to new opportunities for FPGA-backed sequencing.

The thing is, FPGAs, even with the OpenCL hooks that companies like Altera and Xilinx have been touting, are not easy to work with, at least not if the goal is ultimate performance. While a higher-level approach like OpenCL will expand the potential market, according to Gavin Stone, who worked with FPGAs for a number of years at Broadcom, low-level programming is not going away anytime soon. The answer is to take the Convey approach: spin out a company that bakes that low-level programming into the device, matches it with powerful host processors (in this case dual 12-core Xeons), and brings that to bear on genomics. The company he works with now, Edico Genome, is a blend of long-time Broadcom FPGA veterans and a slew of genomics PhDs, all of whom are trying to match FPGA and genomics expertise to an ever-expanding market for rapid genomic analysis.

What Edico Genome is doing is interesting on both the hardware and software fronts, but the real performance story is the company’s claim that it can process 1000 genomes per day on its in-house cluster, which combines Xilinx FPGAs with beefy Xeon CPU cores. Together, these make up its DRAGEN genomics platform. Beyond gunning for the world record there, the company has servers in use at a number of institutions, most recently The Genome Analysis Centre in the U.K., which is well known for exploring emerging architectures to get the genomics job done faster (including using optical processors, as we highlighted here some months back).

For a team, many of whom came from long engineering careers at Broadcom working with FPGAs, that perfect storm is right overhead—and genomics is at the center. “When we were designing an ASIC to be deployed in production, all the pre-design work is being done in an FPGA, but now, just in the last couple of years, the paradigm has shifted where FPGAs are themselves being targeted to go into production versus as serving as a development vehicle,” Stone explains.

“The general purpose processors are not going to be able to keep up with the data driving all of this, Moore’s Law is slowing down or at least becoming a constant for now, and in genomics, which will in the next ten years be the ‘biggest of all big data’ there is a clear reason why the popularity is growing, and this is why Intel acquired Altera for us, why going into production with an FPGA for production genomics work makes good sense.”

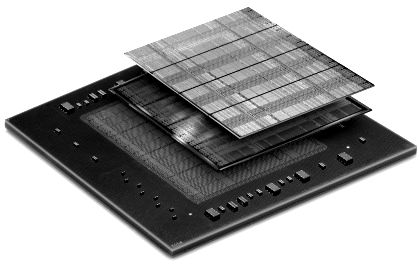

The DRAGEN board is a full-width, full-height card (similar to a GPU form factor) that goes into a dual-processor server with two 12-core Xeons, which function as the host processors. There are typically six to eight high-performance SATA drives in a RAID 0 configuration feeding the DRAGEN to head off I/O bottlenecks. The systems are strung together with InfiniBand, and each is deployed in a 2U rackmount configuration. The company sells these as preconfigured servers, which it can scale at will. Each host server holds one Xilinx-based DRAGEN FPGA board.

The typical configuration for a high-throughput center is several sequencing instruments (more than likely from Illumina) connected via 10GbE to high-performance storage. Data streams off the sequencers to that storage, and a scheduler gathers complete sequencer runs and hands them over as DRAGEN runs. One DRAGEN card can handle the entire throughput of ten of the highest-end Illumina sequencing instruments, Stone says.

There might be two to four DRAGEN servers to support ten or so Illumina setups, but Stone says the company’s in-house implementation of 20 DRAGEN servers is the largest to date, and that is the one set to garner a world record for genomes processed per day. While some FPGA approaches have used the host CPU as a secondary element, in this case the 12-core Xeons play a critical role: both FPGA and CPU are constantly fed, with utilization that Stone says is almost 100%.
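To put those figures in perspective, here is a rough back-of-envelope sketch in Python. It assumes, and this is our assumption rather than anything Edico Genome has stated, that the 1000-genomes-per-day claim refers to the 20-server in-house cluster, that the servers run around the clock, and that the work is spread evenly across them. The function names are purely illustrative.

```python
# Back-of-envelope throughput estimate from the figures quoted in the article.
# Assumptions (ours, not Edico Genome's): the 1000 genomes/day claim applies to
# the 20-server in-house cluster, servers run 24 hours a day, and the load is
# spread evenly across servers.

GENOMES_PER_DAY = 1000      # claimed cluster throughput
SERVERS = 20                # in-house DRAGEN servers, one FPGA board each
MINUTES_PER_DAY = 24 * 60


def genomes_per_server(genomes_per_day: float, servers: int) -> float:
    """Average number of genomes each server must finish per day."""
    return genomes_per_day / servers


def minutes_per_genome(genomes_per_day: float, servers: int) -> float:
    """Average wall-clock minutes one server can spend on each genome."""
    return MINUTES_PER_DAY / genomes_per_server(genomes_per_day, servers)


genomes_each = genomes_per_server(GENOMES_PER_DAY, SERVERS)
minutes_each = minutes_per_genome(GENOMES_PER_DAY, SERVERS)

print(f"{genomes_each:.0f} genomes per server per day")
print(f"{minutes_each:.1f} minutes per genome per server")
```

Under those assumptions, each server would need to finish a genome roughly every half hour. Treat this as an average implied by the headline numbers, not a measured per-run time.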

Interestingly, Edico Genome has had a partnership with Intel to develop on the FPGA since before the Altera deal was announced. Of even greater interest is that the company is currently using Xilinx FPGAs in its custom solutions. And to add one extra meaty bit of information, these genomics machines with Edico Genome’s DRAGEN FPGA boards are a bit different from what we’ve seen in many FPGA installations, where the host CPU takes a backseat. Instead of using wimpy ARM or other lower-power cores to feed the system, these systems sport dual 12-core Intel Xeon processors, letting those CPUs chew on one part of the workload while another, parallelizable set of instructions hums away in reconfigurable fashion on the FPGA.

The value proposition for any company using FPGAs as the basis of its workloads is the same, however: get the most performance out of applications by blending CPU and FPGA, and exploit that FPGA to the fullest with programming approaches that might indeed prove a barrier to entry for some but that, when done properly, can lend enormous performance advantages. The next big wave of FPGA use will, according to Xilinx and Altera in several past conversations, be driven by the OpenCL programming framework. While this will open FPGAs to a wider user base, Stone says there is still room for specialization, particularly in genomics.

“The advantage is being able to run whatever analysis pipelines are needed; that’s the advantage of going into production with an FPGA versus an ASIC—there are a lot of different genomic analysis pipelines. We can analyze RNA, agricultural biology, different pipelines for cancer research—all of these have widely varying pipelines and some are just nuanced, but they all require something different to be loaded into the FPGA before that run.”

“OpenCL makes FPGAs easier to program but it adds an extra layer of abstraction, so it’s like programming to Java versus machine code. It’s easier for more people, but you don’t get quite the performance you would if you programmed down at the machine level,” Stone notes. He says that while Intel is a current partner and will indeed roll out its own FPGA-based servers sometime in the future, this does not pose a barrier to Edico Genome’s business. “FPGAs are a dedicated processor for the genomics tasks. The barrier to entry is higher but the performance improvements are significantly better once you get down to the machine level with the FPGA and have the domain expertise that you get from hiring PhDs and genomics experts.”

In the meantime, companies like Edico Genome are trying to make the case for why FPGAs make sense for genomics, something that has been a constant push since the company got its start in 2013. There are still relatively few in the genomics industry who truly understand what adding FPGAs into the mix means in terms of performance and capability, but Stone says the company’s on-site installation, where it does genomics runs as a service for existing companies, shows that it’s possible to take on those 1000 human genomes per day, something he says even large supercomputers can’t do. And all of this is done in a relatively small footprint.
