The hyperscalers of the world have taught data processing organizations many important lessons over the past decade, and the two most important ideas are that you have to measure everything and use metrics to drive both hardware and software engineering, top to bottom, from the disk drive and memory stick all the way out to the power substation that feeds the datacenter. Very few organizations can afford such a holistic engineering effort, however.

That is one of the reasons why Cole Crawford founded Vapor IO last year. Crawford is a former Dell IT strategist who worked on that company's own datacenter consolidation efforts and one of the founders of the Open Compute Project; he was in the Facebook cafeteria when Mark Zuckerberg announced to some 30-odd people that the company was going to open source its server, storage, and datacenter designs.

Crawford eventually became chief operating officer and then executive director of the Open Compute Foundation, and helped shepherd the transformation of Facebook’s own engineering and manufacturing efforts into a community of developers working on a diverse set of products and an ecosystem of suppliers who build to the Open Compute specs or tweak them here and there. Crawford was also a co-founder of the OpenStack cloud controller. To say that he has datacenter hardware and software chops is an understatement.

Now, Crawford wants Vapor IO, which he launched in March, to do the same thing for datacenters. The company is using a mix of software and open source hardware designs for an innovative racking, cooling, and power distribution system to try to make datacenters cheaper to build and more efficient to operate, more like those of Google and Facebook.

At the March launch, Vapor IO talked mostly about Vapor CORE, its software telemetry system, but a key aspect of its engineering effort to overhaul datacenter designs is the Vapor Chamber, a cylindrical, self-contained datacenter-in-a-rack that includes everything needed to run modern servers, storage, and switching. The Vapor IO idea started out as a means of putting datacenters closer to the edge of the network, Crawford tells The Next Platform, but it is initially being adopted by a different set of customers who are looking for an edge to compete with Amazon Web Services.

“Making IT infrastructure competitive with public clouds is why Vapor IO was founded,” says Crawford. Not including the IT gear, and depending on the architecture of the building and its rack, power, and cooling systems, a modern datacenter costs as much as $10 million per megawatt to build, and when you do the math, that is enough money for “a ton of cloud computing capacity,” as he puts it. That is why carrier-neutral co-location facilities and managed hosting operations are growing more slowly than they were several years ago before the cloud took off.

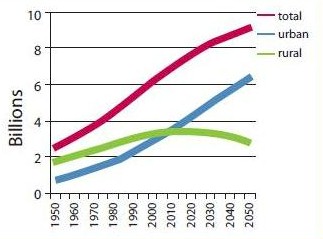

The original idea behind Vapor IO was to build a self-contained datacenter, called the Vapor Chamber, that could be parked in urban areas, close to end users who are accessing hyperscale applications as part of their work and home lives, and specifically in places like closed Radio Shack and Blockbuster Video locations that could be acquired fairly cheaply on ten- or fifteen-year leases.

Oddly enough, while urban distributed computing was the founding idea, co-los and managed hosters are the ones who are coming forward first to try out the Vapor Chambers and their related management software and telemetry electronics, says Crawford. “We give co-los and managed hosters the ability to adopt Open Compute in a very safe fashion. For example, with 480 volt DC power, you don’t want people accidentally touching that bus bar. It would be potentially lethal.”

The Vapor Chamber can put six 48U Open Compute racks (which are 21 inches across) into a cylinder that is 9 feet across and that takes up 81 square feet of floor space, and importantly, can be put in any location that has enough power and doesn’t mind the whirring of giant fans that keep the chamber cool. Vapor IO is working to adapt the datacenter chamber so it can accommodate standard 19-inch racks, which many enterprise and co-location customers still prefer. When rectangular racks are put into the cylindrical chamber, there is a wedge on each side of the chamber, and it is here where Vapor IO packs in power rectifiers and distribution units, Halon gas and fire suppression equipment, cabling for the IT gear, and all other elements of the datacenter that might hang above or be below the racks like that 480 volt bus bar that is pretty dangerous. Each chamber can have up to 150 kilowatts of gear, or 25 kilowatts per rack, which is pretty dense by today’s standards.
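The footprint and power figures quoted above can be checked with a few lines of arithmetic. This is a rough sketch in Python, and the variable names are ours. It also assumes that the 81 square feet refers to the 9 foot by 9 foot square that encloses the cylinder, which is our reading and not something Vapor IO states.

```python
import math

# Figures quoted in the article
DIAMETER_FT = 9.0
RACKS_PER_CHAMBER = 6
KW_PER_RACK = 25.0

# Assumption: 81 sq ft is the square that bounds the 9 ft cylinder
bounding_square_sqft = DIAMETER_FT ** 2

# The circular footprint of the cylinder itself is smaller
circle_sqft = math.pi * (DIAMETER_FT / 2) ** 2

# Power per chamber and per square foot of reserved floor space
chamber_kw = RACKS_PER_CHAMBER * KW_PER_RACK
kw_per_sqft = chamber_kw / bounding_square_sqft

print(f"bounding square: {bounding_square_sqft:.0f} sq ft")
print(f"circular footprint: {circle_sqft:.1f} sq ft")
print(f"chamber power: {chamber_kw:.0f} kW")
print(f"power density: {kw_per_sqft:.2f} kW per sq ft")
```

Under that reading, the six racks at 25 kilowatts each do add up to the 150 kilowatts per chamber quoted above.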

The upper part of the Vapor Chamber is a massive fan that pulls air over all the gear to keep it cool, which is much more efficient than having a lot of little fans distributed across the equipment. The interesting bit about the Vapor Chamber is that the thermal engineers at the company did the math and figured out that air velocity and air volume are much more important to cooling equipment than air temperature, which makes intuitive sense once you stop and think about it. So the Vapor Chamber can draw a tremendous amount of relatively hot air through the gear and exhaust air at about 115 degrees Fahrenheit out of the top. This hot air can be piped out of vents in the datacenter, or thanks to a partnership with fuel cell provider Bloom Energy, it can be used to preheat the air that is used to convert natural gas to electricity.

Bloom Energy’s solid oxide fuel cells need warm air to mix with steam and natural gas to make the electricity, and they vent carbon dioxide, more water, and heat. The fuel cell wants air to be around 800 degrees Fahrenheit, so the servers and storage in the Vapor IO chamber can’t get it all the way there, but it is, in essence, free energy that the fuel cell can make use of to re-power the servers. What this means is that companies can think about building datacenters anywhere they have space and a gas line, without the need of generators or power stations. Vapor IO and Bloom plan to have their integration done by the first quarter of 2016, when the Vapor Chamber will be generally available.

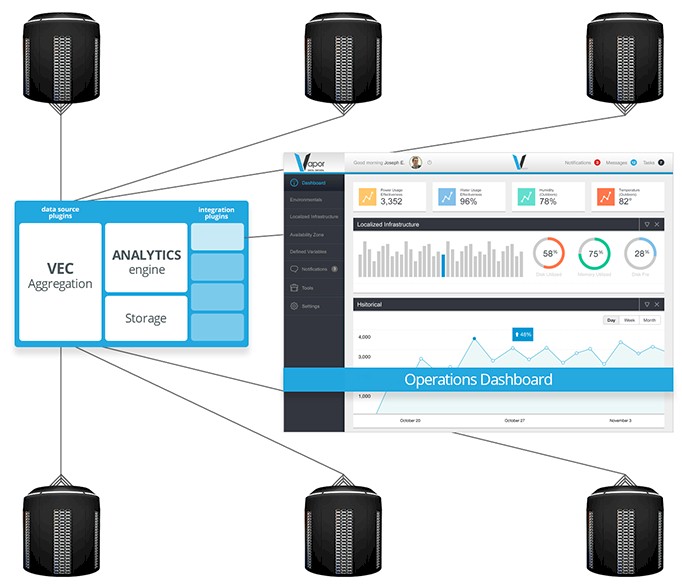

Vapor IO has created a special piece of hardware called the Data Center Runtime Environment controller for gathering up information from the gear in racks as well as an analytics stack, called Vapor CORE, to merge telemetry from the gear with log files from applications to get a holistic view that relates loading in the containerized datacenters to the applications running on them. Interestingly, while Vapor IO is opening up its Data Center Runtime Environment controller, which takes the place of a baseboard management controller in servers and storage servers running in the racks, to the Open Compute Project, the company is keeping control of the code for Vapor CORE. (It has to make a living somehow.)

The company is, however, licensing its Vapor Chamber designs, which leverage 72 patents relating to airflow, weight distribution, louvre configurations, and other factors, because Crawford says that it does not want to be a hardware manufacturer. Thus far, contract manufacturer Jabil Circuit (which makes gear for many name-brand IT suppliers, notably Cisco Systems) has stepped up to make Vapor Chambers. (Jabil Circuit is the parent company to Open Compute hardware supplier Stack Velocity.) Vapor IO, which only launched in March, has one customer in the United Kingdom, one in continental Europe, and one in the United States, with a bunch more in the pipeline.

Counting The Costs

Crawford says he was honestly expecting there to be more resistance to round form factors in the datacenter. But the need for more efficient datacenters in urban areas and in co-location facilities is so strong, and the economics are so compelling with Vapor IO’s stack, that customers are intrigued.

“The co-location and enterprise consumers of IT are much more intelligent today in terms of what they are asking for,” he says. “It used to be that they had their relationships with Dell, or HP, or name a vendor and they would ask for X amount of rack space and Y amount of power, and put gear in there. The conversation today is about workloads and they want to put together an environment so they can hit their TCO and ROI objectives and maintain their SLAs. The world of public cloud, I think, has changed the dynamics and we are seeing a huge shift in net-new greenfield datacenters being built by companies for on-premises operations to co-location, managed cloud, and public cloud environments.”

But the conversation with Vapor IO gets started around basic datacenter layout and costs.

In the example above, with 120 racks at 36 kilowatts per rack and using four-node, 2U server enclosures, you could pack 84 servers per standard 42U rack, for a total of 10,080 servers in this floor space. (That’s our estimate, not Vapor IO’s.) The Vapor Chamber setup above would have 360 servers per chamber (60 per wedge) for a total of 12,960 machines. Some of that difference is just due to having a taller 48U rack in the Vapor Chamber, but some of it is the denser packaging of the Open Compute designs. So there is an inherent density advantage with the Vapor Chamber if you can take a chamber that is considerably taller than 48U, including the upper layer with the fans. The good news is that you don’t have to worry about hot aisle containment. There is no hot aisle, just like there wasn’t with Rackable’s innovative back-to-back server designs with a chimney back in the dot-com boom.
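The server counts in this comparison follow from simple rack arithmetic, sketched below in Python. The function and variable names are ours. The 48U standard-rack line is added only as a like-for-like height comparison, and the chamber count is implied by the quoted totals rather than stated by Vapor IO.

```python
def servers_per_rack(rack_units: int,
                     enclosure_units: int = 2,
                     nodes_per_enclosure: int = 4) -> int:
    """Servers that fit in a rack filled with multi-node enclosures."""
    return (rack_units // enclosure_units) * nodes_per_enclosure

RACKS = 120

# Standard racks filled with four-node, 2U enclosures
standard_42u_total = servers_per_rack(42) * RACKS
standard_48u_total = servers_per_rack(48) * RACKS  # same height as the chamber racks

# Vapor Chamber figures quoted in the article
SERVERS_PER_WEDGE = 60
WEDGES_PER_CHAMBER = 6
VAPOR_TOTAL = 12_960

servers_per_chamber = SERVERS_PER_WEDGE * WEDGES_PER_CHAMBER
# Implied by the totals, not stated in the article
implied_chambers = VAPOR_TOTAL // servers_per_chamber

print("42U standard racks:", standard_42u_total)
print("48U standard racks:", standard_48u_total)
print("servers per chamber:", servers_per_chamber)
print("implied chambers:", implied_chambers)
```

Comparing the 48U result with the chamber total separates the part of the gap that comes from rack height from the part that comes from denser Open Compute packaging.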

The standard datacenter with hot aisle and cold aisle isolation using standard rack-mounted gear has a power usage effectiveness of 1.9 or higher, says Vapor IO. (PUE is a common metric for datacenter efficiency that takes the total power draw of the datacenter and divides it by the power draw of the server, storage, and switching gear that actually does the computing and storing.) Moving to high-density gear like blade servers can get that PUE down to maybe 1.5 or so. Containerizing the datacenters can push that down to 1.2 or so, and then going modular can get you to maybe 1.15 or so, according to Crawford. The Vapor IO chambers can bring that down to 1.02, and that is about as good as it gets. (We presume there are some benefits from the recycled waste heat and the Bloom Energy fuel cells in that number.)
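To make the PUE figures concrete, here is a minimal Python sketch of the ratio as defined above, plus the overhead power each PUE level implies. The function names are ours, and the 150 kilowatt IT load is only an illustrative value borrowed from the per-chamber figure; the overhead numbers it produces are arithmetic, not measurements from any site.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power over IT power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw

def overhead_kw(pue_value: float, it_load_kw: float) -> float:
    """Non-IT power (cooling, distribution losses) implied by a PUE."""
    return (pue_value - 1.0) * it_load_kw

# PUE levels quoted in the article, by datacenter style
QUOTED_PUE = {
    "hot/cold aisle": 1.9,
    "high-density blades": 1.5,
    "containerized": 1.2,
    "modular": 1.15,
    "Vapor Chamber": 1.02,
}

IT_LOAD_KW = 150.0  # illustrative: one fully loaded chamber

for style, value in QUOTED_PUE.items():
    extra = overhead_kw(value, IT_LOAD_KW)
    print(f"{style}: PUE {value} -> {extra:.1f} kW of overhead")
```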

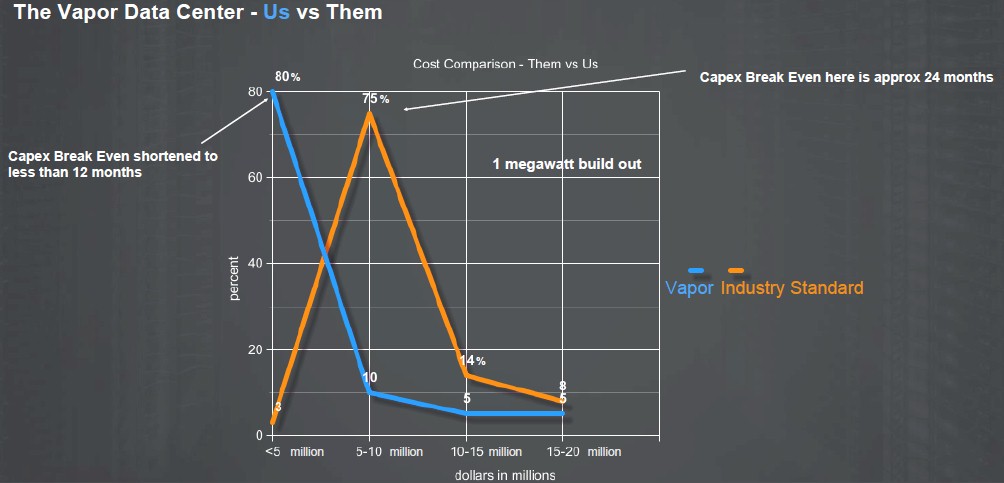

While the capital expenditure chart above shows the varying ways you can compare traditional and Vapor IO datacenter costs and paybacks, perhaps some more concrete examples are better. For a co-location customer, a rented 20 square foot chain link cage with massive cabling overhead has room for 63 servers, says Crawford, and delivers a PUE of around 1.8 at a cost of around $200 per rack per hour. A Vapor Chamber can put 63 servers in 9 square feet of space at a PUE of under 1.1 and at a cost per chamber (with six racks) of around $400 per hour, or about $67 per rack per hour. The capital expenditure savings are around 40 percent and the operational savings, thanks to efficiencies in the power and cooling as well as with the management through the Vapor CORE software, come out to be about 50 percent over what typical co-los do.

More generally speaking, Crawford says that a traditional datacenter built by enterprises or co-los using hot air containment and cold aisles will cost anywhere from $6 million to $8 million per megawatt, depending on the technology used, and in very pricey locations could go as high as $10 million per megawatt. The Vapor Chambers cost $300,000 a pop, and with seven units and all the other goodies Vapor IO is providing in software, a 1 megawatt installation will cost about $3 million. That’s anywhere from one-third to one-half the cost of a traditional datacenter.
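The cost-per-megawatt comparison can be worked through in Python as a rough check. The names are ours, the 150 kilowatts per chamber comes from the density figures earlier in the article, and the remainder after chamber hardware is simply what is left over from the quoted $3 million, not a price Vapor IO has published.

```python
# Figures quoted in the article
CHAMBER_PRICE_USD = 300_000
CHAMBERS = 7
CHAMBER_KW = 150
QUOTED_INSTALL_USD = 3_000_000
TRADITIONAL_USD_PER_MW = {
    "low": 6_000_000,
    "high": 8_000_000,
    "pricey location": 10_000_000,
}

capacity_mw = CHAMBERS * CHAMBER_KW / 1000
hardware_usd = CHAMBERS * CHAMBER_PRICE_USD

# Implied remainder for software and everything else (our subtraction)
remainder_usd = QUOTED_INSTALL_USD - hardware_usd

# Quoted installation cost as a fraction of each traditional build cost
cost_ratio = {
    label: QUOTED_INSTALL_USD / cost
    for label, cost in TRADITIONAL_USD_PER_MW.items()
}

print(f"capacity: {capacity_mw:.2f} MW")
print(f"chamber hardware: ${hardware_usd:,}")
print(f"implied remainder: ${remainder_usd:,}")
for label, ratio in cost_ratio.items():
    print(f"vs {label}: {ratio:.1%} of traditional cost")
```

Seven chambers slightly exceed 1 megawatt, and the resulting ratios bracket the "one-third to one-half" range quoted above.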

The Vapor IO hardware is only one part of the stack the company is selling and perhaps not even the most important part.

“I can plug a bunch of hair driers into a power distribution unit and measure PUE, but it tells me nothing in the context of my workloads,” quips Crawford. “If we are going to be building distributed datacenters and disaggregated environments, we need situational awareness and telemetry about how my workload is performing at the physical layer. We need real-time heat maps and humidity and we want to be able to control the infrastructure over next-generation protocols, which is not IPMI. I think IPMI is a really ridiculous way to manage infrastructure. You need proprietary silicon in the form of a BMC, and grabbing sensor data – or any data – out of it is a nightmare. We wanted to introduce an open source REST API that allows us to look at the physical aspect of the datacenter and put the workload in context.”

Using the data culled from this open source telemetry controller and mashing it up with application, server, and storage logs on the systems, Vapor CORE can do on-the-fly analysis of how workloads are performing and also do performance per watt and performance per dollar calculations at the workload level, too. This is much more useful than a raw PUE for the overall datacenter. The Vapor CORE software also has a set of APIs that can be used for all kinds of integration, such as allowing cloud controllers like OpenStack and other system management tools to plug in such that when certain conditions are met in the datacenter environment, they can trigger applications to move around chambers or across distributed datacenters.

“a cylinder that is 9 meters across and that takes up 81 square feet of floor space”. Eh?

Otherwise, really interesting article!

Why are you comparing standard 42U racks with VaporChamber 48U racks?

For standard 48U racks you’ll get 11,520 servers instead of 10,080 – and that’s significantly closer to VaporChamber density mentioned (12,960).