Although GPUs have found a wider market in high performance computing in recent years, an emerging market is exploding in deep learning and computer vision with companies like Baidu and others continuing to push the processing speed and complexity envelope. While they are not always at the fore of general processing for Google, Microsoft, and other search giants, GPUs are fertile ground for research into new algorithms and approaches to machine learning and deep neural networks.

At the Nvidia GPU Technology Conference earlier in the year there were several examples of how GPUs were being used in neural network training and for near-real time execution of complex machine learning algorithms in natural language processing, image recognition, and rapid video analysis. The compelling angle was that all of these applications were pushing into the ever-increasing need for real-time recognition and output.

Outside of providing web-based services (for instance, automatically tagging images or picking out semantic understanding from video) the real use cases for how GPUs will power real-time services off the web are still developing. Pedestrian detection is one of those areas where, when powered by truly accurate and real-time capabilities, could mean an entirely new wave of potential services around surveillance, traffic systems, driverless cars, and beyond.

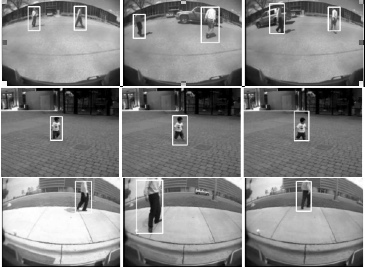

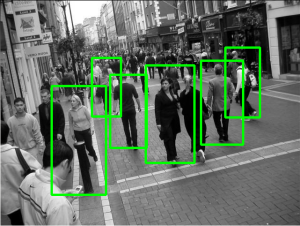

The complexity involved with real-time pedestrian detection is staggering, especially considering the range of settings, movements, other objects in a particular scene can rapidly change, interact and not just move, but in what manner pedestrians move. These are not new problems, however. Computer vision has long been a topic of research interest, and was certainly lent new possibilities with the parallel rise of GPU computing and more evolved deep neural networks (as described by deep learning pioneer Yann LeCun). But of course, with each new advance on both the hardware and code fronts, new expectations rise for balanced increases in performance and accuracy (there is a detailed overview of this two-plus decade quest here).

The complexity involved with real-time pedestrian detection is staggering, especially considering the range of settings, movements, other objects in a particular scene can rapidly change, interact and not just move, but in what manner pedestrians move. These are not new problems, however. Computer vision has long been a topic of research interest, and was certainly lent new possibilities with the parallel rise of GPU computing and more evolved deep neural networks (as described by deep learning pioneer Yann LeCun). But of course, with each new advance on both the hardware and code fronts, new expectations rise for balanced increases in performance and accuracy (there is a detailed overview of this two-plus decade quest here).

While a great deal of the existing research and practice of pedestrian detection happens on the GPU already, the goal of Google Research was to finally couple the speed with accuracy—a difficult task, according to the Google Research team. There are other approaches that provide a real-time solution on the GPU but in doing so, have not achieved accuracy targets (in this real-time approach there was a miss rate of 42% on the Caltech pedestrian detection benchmark). Another approach called the VeryFast method can run at 100 frames per second (compared to the Google team’s 15) but the miss rate is even greater. Others that emphasize accuracy, even with GPU acceleration, are up to 195 times slower.

At 15 frames per second, the Google team set a dramatic record that does not sacrifice either speed or accuracy against benchmarks like the Caltech Pedestrian detection metric, which is based on one of a few very large public datasets that leverages a bank of 50,000 “labeled pedestrians” and is comprised of data collected from a color dashboard camera with both rural and urban scenes. Using this benchmark as the base (and combining that data with their own generated pedestrian datasets with higher resolution images) the Google Research team showed how, on the right code, GPUs can dramatically outpace their CPU brethren and with far greater accuracy.

The team ran the pedestrian detection algorithm on an Nvidia K20 Tesla GPU, an earlier generation than the K40 and K80 GPUs that are deployed on several Top 500 supercomputers. It might be interesting to see where the performance boosts would have taken the benchmark, but nonetheless, they were able to see a significant improvement. To be fair, the ability to run their real-time pedestrian detection is also the result of a great deal of work in refining the approach to cascading the neural networks—an aspect that they have described at length.

The Google Research team expects to keep increasing the depth of the deep networks cascade by adding more tiny deep networks and exploring the efficiency-accuracy trade-offs. We further plan to explore using motion information from the images, because clearly motion cues are extremely important for such applications. Still, they say the challenge continues to rest on doing this at sufficiently high computational speeds.

Great article but can you pass on the last link “described at length”.. getting a 404 via mobile ??

It appears the article has been pulled. I can only load a cached version myself.

If you look at the wider picture of CNN or similar neural network structures it looks like Intel’s XeonPhi architecture is equally well if not even better tailored to this problem. So I wouldn’t be surprised especially with their better memory model that people will switch to that architecture sooner or later. Especially with the next XeonPhi iteration.

Also there seems to be a whole hole about how to scale CNN over more than one compute node I couldn’t find any research on how this would work unless you have a massive shared memory architecture over several computational nodes. It seems this problem is easily subdivided over a single computational node but I don’t think it is easy to subdivide over several computational nodes. If anyone has got any hints or research papers I would be happy to know