If you think that Hewlett-Packard is disappointed about the delays in getting the memristor to market, so is a tenacious inventor who has been working at the confluence of electronics and machine learning. Alex Nugent, who has been involved in a number of machine learning projects with the US Defense Advanced Research Projects Agency, including the SyNAPSE project that was eventually shepherded through several phases of development by IBM, has uncloaked a startup dedicated to using memristors at the heart of a new kind of analog computer that does what he says is a better job mimicking the function of the human brain.

Earlier this year, IBM Research was showing off a prototype neuromorphic computing system based on phase change memory – which has proved to be as difficult to make in production quantities as the memristors that HP and Nugent favor. Last summer, IBM finished off the SyNAPSE project with DARPA, working with partner Samsung to create a 4,096 core processor, called TrueNorth, that is capable of simulating millions of neurons. So Knowm, as the startup that Nugent has just uncloaked is called, has not cornered the market on the idea of using electronic storage devices as a kind of proxy for synapses in the brain.

One might reasonably wonder, given the eccentricities of the human brain and the ephemeral nature of the way we store data, why one might want to create an electronic device that simulates the synapses and networks of neurons that give advanced forms of life on earth both their processing and their data storage. And that is precisely why brains are fascinating to artificial intelligence researchers.

“The way we deal with information and memory is absolutely nothing like digital computers, but it is the fact that our digital computers separate storage and processing that leads to massive inefficiencies,” says Nugent. “Our current digital systems are a factor of one billion less efficient in terms of space and power, and it is not fundamentally possible to create a computing system to do what our brains can do in the power and space we do it.”

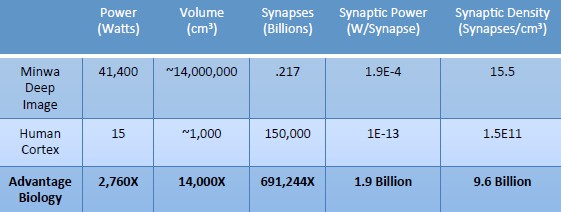

The advantage that human brains have over computers when it comes to image recognition, says Nugent, is astounding, and to make his point, he pulled out the statistics for Baidu’s Minwa hybrid CPU-GPU cluster, which the Chinese search engine giant has used to train its image recognition algorithms. The Minwa machine has three dozen server nodes, each equipped with two Intel Xeon E5 processors and four Nvidia Tesla K40m coprocessors. The system has 6.9 TB of main memory and about 600 teraflops of aggregate single-precision floating point processing power to dedicate to deep learning tasks. Here is how Minwa stacks up to the human brain:

This is obviously not a perfect analogy – our brains do lots of things besides seeing what is at the end of our noses – but you get the idea.
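The Minwa figures quoted above can be sanity-checked with a little arithmetic. The sketch below multiplies out the node counts from the article; the per-GPU peak rating is an assumption taken from Nvidia's published single-precision figure for the Tesla K40 family, not something stated in the article, and it ignores whatever the Xeon processors contribute.

```python
# Back-of-envelope check of the Minwa figures quoted in the article.
NODES = 36           # "three dozen server nodes"
GPUS_PER_NODE = 4    # four Tesla K40m coprocessors per node
CPUS_PER_NODE = 2    # two Xeon E5 processors per node

# Assumption (not from the article): peak single-precision rating
# of one Tesla K40-class card at base clock, in teraflops.
K40_PEAK_SP_TFLOPS = 4.29


def minwa_totals():
    """Return (gpu_count, cpu_count, aggregate GPU peak teraflops)."""
    gpus = NODES * GPUS_PER_NODE
    cpus = NODES * CPUS_PER_NODE
    gpu_tflops = gpus * K40_PEAK_SP_TFLOPS
    return gpus, cpus, gpu_tflops


gpus, cpus, gpu_tflops = minwa_totals()
print(f"{gpus} GPUs, {cpus} CPUs, roughly {gpu_tflops:.0f} peak teraflops from the GPUs")
```

Under that assumption the GPUs alone land a little above 600 teraflops, which is in line with the "about 600 teraflops" cited for the cluster.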

The central issue is not complex. Memory and processing are separated by wires, and the wires have capacitance, and every time you charge the wire up to send data back and forth across the digital system, it takes energy to do it and it also dissipates heat that has to be removed from the datacenter. “But brains in all living things are adaptive, and they adapt all the time, and adaptation is a memory processing operation,” explains Nugent. “To make a long story short, there is a fundamental barrier and to get through it, you have to stop calculating learning systems and you have to actually build one that physically adapts and learns. You have to map these learning systems to a physical system, to an analog operation. Not all of it – large scale digital communication can stay, but the thing that absolutely has to change is synaptic operations for adaptation. We have to create systems that bring memory and processing together, but that is very hard in a general purpose sense in that you can’t make a very efficient system where the architecture matches the algorithm that is also a general purpose computing system.”
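The wire-charging cost described at the start of this section follows the standard capacitor relation: charging a capacitance C to a voltage V draws C·V² from the supply, half dissipated as heat on the way up and the other half when the wire discharges. The following is a minimal sketch of that arithmetic; the capacitance, voltage, and event rate are hypothetical round numbers chosen for illustration, not figures from Nugent or from any particular chip.

```python
def switching_energy_joules(capacitance_farads, voltage_volts):
    """Energy drawn from the supply for one charge/discharge cycle: C * V^2.

    Half of this is dissipated while charging the wire; the other half
    is stored on the wire and dissipated when it is discharged.
    """
    return capacitance_farads * voltage_volts ** 2


def wire_power_watts(cycles_per_second, capacitance_farads, voltage_volts):
    """Average power spent toggling one wire at the given cycle rate."""
    return cycles_per_second * switching_energy_joules(capacitance_farads, voltage_volts)


# Hypothetical illustration values (assumptions, not measurements):
WIRE_CAPACITANCE = 1e-12   # 1 picofarad
SUPPLY_VOLTAGE = 1.0       # 1 volt
CYCLES_PER_SECOND = 1e9    # one billion charge/discharge cycles per second

energy = switching_energy_joules(WIRE_CAPACITANCE, SUPPLY_VOLTAGE)
power = wire_power_watts(CYCLES_PER_SECOND, WIRE_CAPACITANCE, SUPPLY_VOLTAGE)
print(f"{energy:.2e} J per cycle, {power:.2e} W per wire")
```

The per-wire numbers look tiny, but they multiply across every wire between memory and processor and across every transfer, which is the inefficiency Nugent is pointing at.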

The Best Way To Simulate Neurons Is To Electronically Mimic Them

Nugent tells The Next Platform that he examined a number of different devices that could be used to simulate neurons and their synaptic connections, but ultimately he decided that the best fit was the memristor. This device, whose name is shorthand for memory resistor, has some interesting properties, and one of them is that it can “remember” the last voltage that has been applied to it, and by changing the state of the compounds in the memristor, you can use it to store binary data. This is not precisely what Nugent has in mind. While HP was going to use memristors as a kind of massive, low power, high performance memory pool in The Machine – its futuristic system with optical connects between compute and memory – the company was planning to use traditional compute engines, such as X86 and ARM processors running a homegrown Linux, to chew on the data stored in The Machine.
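To make the "remembering" behavior described above a little more concrete, here is a toy model of a memristor as a resistor whose conductance drifts with the voltage applied to it and then stays put when the voltage is removed. This is a deliberately simplified sketch: the class name, the linear update rule, and all parameter values are hypothetical and are not Knowm's, HP's, or Boise State's device model.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Toy device: conductance moves with applied voltage and is retained."""
    g_min: float = 1e-6   # lowest conductance in siemens (hypothetical)
    g_max: float = 1e-3   # highest conductance in siemens (hypothetical)
    g: float = 1e-4       # current conductance state
    rate: float = 1e-3    # siemens per volt-second (hypothetical)

    def apply(self, voltage, duration):
        """Apply a voltage for a duration and return the resulting current."""
        self.g += self.rate * voltage * duration
        # Clamp to the physical range of the device.
        self.g = min(self.g_max, max(self.g_min, self.g))
        return self.g * voltage


m = ToyMemristor()
g_start = m.g
m.apply(1.0, 0.1)      # positive pulse raises the conductance
g_after_write = m.g
m.apply(0.0, 10.0)     # no voltage applied: the state is retained
g_after_rest = m.g
m.apply(-1.0, 0.05)    # reverse pulse lowers the conductance again
g_after_erase = m.g
print(g_start, g_after_write, g_after_rest, g_after_erase)
```

Read as binary storage, a high or low conductance stands for a one or a zero; read as a synapse, the same continuously adjustable conductance acts as a weight.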

Knowm wants to build a system in which collections of memristors do not separate data and processing, but rather simulate the synaptic pulses in the brain, which both store data and process it. To jumpstart the effort, Nugent is working with researchers at Boise State University, which is just down the road from memory maker Micron Technology; the researchers used to work with that company on advanced non-volatile memory technologies. The aim is to get researchers and application developers some memristors and a complete software development stack so they can start playing with what Nugent calls neuromemristive technology to create artificial intelligence applications in which the hardware itself can adapt and learn, ahead of volume production of the memristors that will be doing the adapting and the learning.

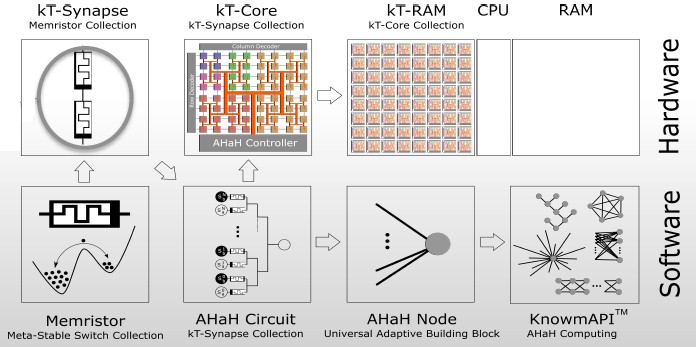

The memristors that are both the storage and compute in the “universal synaptic processor,” as Nugent calls the device, are woven together in a hierarchical way, and the overall technology is called kT-RAM, short for thermodynamic RAM. Here is how the hierarchy works:

Because memristors are not yet available in the quantities and geometries, and at the price, that will be required for neuromemristive computing, Knowm has to do what other chip makers do: It has to simulate its kT-RAM using digital devices. To that end, the company has created an emulator based on four Epiphany massively multicore processors from Adapteva. Techies at Rochester Institute of Technology are also working with Knowm to create a kT-RAM emulator that will run atop FPGAs. The Epiphany version of the emulator is being packaged up in a tiny appliance called the Sense Server, which marries the Apache Storm real-time computing framework with the synaptic storage and processing of the kT-RAM; the idea is to show developers how to use the combination of the digital and synaptic technologies to do real-time anomaly detection in data streams.

A variant of the Sense Server is loaded up with a passive network tap and can hook into the Elasticsearch search engine index, the Logstash log file parser, the Bro network security monitor, and the Kibana data visualization front end to create a more sophisticated anomaly detection system. This one has had its simulated kT-RAM specifically trained to detect statistical distribution or temporal sequence anomalies.
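For readers unfamiliar with the term, a statistical distribution anomaly is simply a reading that falls far outside what the stream has looked like so far. The sketch below shows the idea with a conventional running mean and standard deviation (Welford's method) and a z-score threshold. It is a plain digital stand-in for illustration only and does not represent how kT-RAM or the Sense Server detects anomalies; the function names, threshold, warmup length, and sample readings are all hypothetical.

```python
import math


class RunningStats:
    """Incrementally tracked mean and variance (Welford's method)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def detect_anomalies(stream, threshold=3.0, warmup=10):
    """Return indices of readings more than `threshold` deviations from the mean."""
    stats = RunningStats()
    flagged = []
    for i, x in enumerate(stream):
        if stats.n >= warmup:
            sd = stats.std()
            if sd > 0 and abs(x - stats.mean) / sd > threshold:
                flagged.append(i)
                continue  # keep the outlier out of the baseline
        stats.update(x)
    return flagged


# Hypothetical sensor readings with one obvious spike.
readings = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 10.3, 9.7, 10.1, 9.9,
            10.0, 25.0, 10.2, 9.8]
print(detect_anomalies(readings))
```

A temporal sequence anomaly is harder, since each value may look normal on its own and only the order is unusual; a detector for that would need to model the sequence rather than a single distribution.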

Pricing for the test samples of memristors from Boise State, the Sense Server, and the full-on anomaly detection system has not been set yet.

Knowm is also publishing its machine learning API stack, which hooks into kT-RAM and can drive a number of popular machine learning algorithms through the artificial synapses, including combinatorial optimizers, unsupervised feature learning, multi-level classification with confidence estimation, prediction, and anomaly detection.

In an interesting twist, Nugent has open sourced the code in the Knowm stack, which he refers to as AHaH computing, and will be counting the contributions from the community he hopes to create around the machine learning platform so contributors can be paid, on a prorated basis, for the contributions they make to the code. All of this will be tracked in GitHub. The precise royalty scheme is being worked out now, but this is the first time we have heard of directly paying developers for the work they do in open source projects.

Like the rest of the semiconductor industry, Nugent is not sure when memristors will be available in sufficiently high quantities and low prices to put a system based on kT-RAM into production. But Knowm is working hard to build the simulators and the software tools to put such machine learning systems – in a literal sense of those terms – to good use once they are available.

> so contributors can be paid, on a prorated basis, for the contributions they make to the code. All of this will be tracked in GitHub. The precise royalty scheme is being worked out now, but this is the first time we have heard of directly paying developers for the work they do in open source projects.

This is not a first, but it is good to see. The more projects that go this way, the better. MaidSafe.net is introducing a very similar scheme imminently, as announced at https://forum.safenetwork.io