A lead deep learning expert at Microsoft Research thinks the low-hanging fruit for progress in deep neural networks has been picked and that for the next couple of years, refinement of neural networks and deep learning algorithms will be isolated and incremental.

As one of the more well-known voices in deep learning, Li Deng serves as partner research manager inside Microsoft’s division that explores new approaches to machine learning, neural networks, image and semantic recognition, and other artificial intelligence technologies. He recently described the pace of early progress on these fronts at Microsoft Research starting in 2009, and how these developments were closely matched with similar revelations at Google, Baidu, and various academic institutions. While he says that in many cases Microsoft hit the finish line first on key technologies, they were not the first to call The New York Times with the story like Google, Stanford, and others did (rather than waiting to share peer reviewed results at conferences). And he was also quick to point out that unlike Google, they do not acquire their talent–they cultivate it inside Microsoft Research, where they see the limits for big breakthroughs.

When it comes to speech recognition, Deng says he is “very confident that all the low-hanging fruit has been taken. There is no more.” So, he explains, companies have to work hard for even a small gain. “Every time you go to a conference, a lot of the work that you see—beautiful pieces of work—there is still not much gain.” This is not like three or four years ago where “you take just one GPU, dump a load of data there, do a little innovation in the training and get a huge amount of gain.” Image recognition also has the same small window of innovation, Deng says. “Most low-hanging fruit is taken here too. The error rate just dropped down to 4.7%, it’s almost the same as human capability. So if you get more data, more computing power, yes, you can still get the error rate down a little bit more, but not much more.

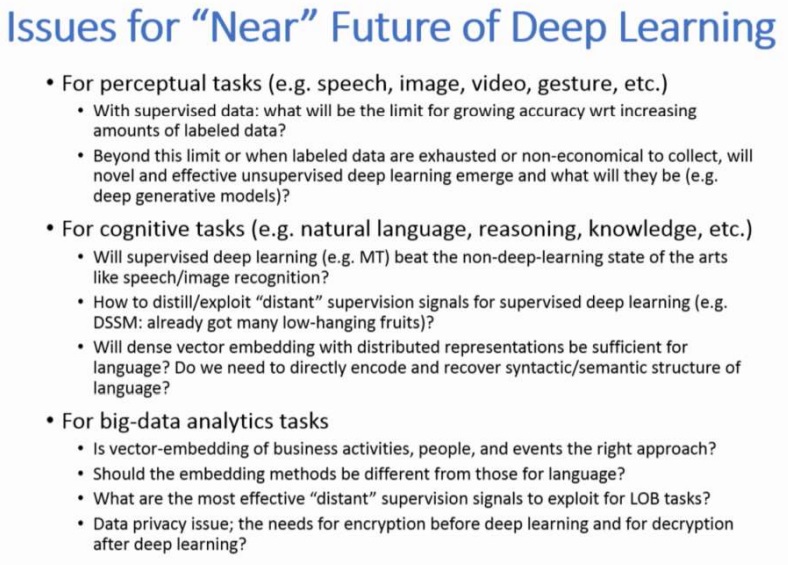

The real opportunity area for deep learning where there is still some “low hanging fruit” left to pick is around business data, although Deng says he is not able to share much about what applications are the target outside of the general term “business analytics”. Another opportunity for big gains is with “small data” but he says much of this can be leveraged in new ways using traditional machine learning approaches. It is difficult to tell what other advances lay just outside the research bubble that could shatter the way neural networks are processed, but Deng’s talk at a recent IEEE talk (the full presentation can be found here) does highlight how minimal the opportunities for ground-breaking achievements in machine learning—at least from Deng’s view.

The opportunities for these incremental gains, now that (according to Deng), all the low hanging fruit has been picked, are listed in the following slide from his presentation.

Deng says that even though the gains are smaller, more incremental, he does not make this clear to discourage anyone from trying harder to make the little steps. “This is actually to encourage serious researchers and graduate students who want to write PhD theses to get into this area. But if it’s low-hanging fruit and you’re a start-up company, don’t do that unless you find some good application to use the technology, not the technology itself.”

Although the big leaps forward might be fewer and farther between, there is still quite a bit of this less sexy incremental work to be done. As Deng says, for the type of work with do with speech recognition using deep neural networks developed internally, 90 percent of the work is done using the DNN, but there are other elements in that remaining 10 percent that will provide good opportunities for small, but important advances. Among those he cites are recurrent neural networks, memory networks, and deep generative models, as well as convolutional neural networks, which slightly different from DNNs.

One could make the argument that while it might not be discouraging per se, it does not fit with the general sentiment in technology where there is a always a much better potential approach to explore, to consider, to fight for. And one could also look back in computing history for plenty of times when one technology or approach seemed monolithic–developed to its peak and ubiquitous. But any of those examples fall apart when it becomes clear that indeed, there is a better way to do things, whether its how to make a service usable, how to process data faster and cheaper, or how to connect a technology with a wider market.

Ain’t necessarily so. Some of the slower algorithmic steps could be speeded up. Approximations can be found, calculation can be replaced with memory lookup. Even sometimes new ideas occur, though humans generally repeat with elaboration rather than innovate per se. It is not impossible that you could get 10 fold or 100 fold algorithm improvements. But that means you move from needing 1000 cores to needing 100 or 10 cores. A big cost difference, if you can achieve it.

As someone developing DNN code on GPUs, there is plenty of room for innovation and optimization yet. Beyond that, I think there is a surprising amount of low-hanging fruit remaining in traditional machine learning, a domain replete with O(n^3) and O(n^2) computation from O(n) uploaded data and downloaded results.

Combine this with the *huge* number of ML and analytics codebases currently implemented in weakly-typed single-threaded languages, and I think the fun is just beginning. In such cases, FPGAs/GPUs/ASICs potentially provide 10x-100x speedups. 10x-100x speedups provide IMO exponential speedups in failing fast until stumbling onto success.