Hadoop started out as a batch-oriented system for chewing through massive amounts of unstructured data on the cheap, enabling all sorts of things such as search engines and advertising systems. The software has evolved considerably, and the jobs that it is tackling are looking more and more like those that traditional relational databases have done – or would have done better if they could have scaled.

Given this, it is no surprise that Hadoop distributor Cloudera has tapped Daniel Sturman as its vice president of engineering. Sturman spent many years inside of IBM’s Software Group, working on its MQSeries message queueing software and eventually taking over development of its DB2 relational database. Then he made an abrupt jump to search engine giant Google, where he helped shepherd key resource management technologies such as the Borg cluster controller and the company’s homegrown container technologies, and was eventually put in charge of development for Google Cloud Platform, its public cloud. It will be interesting to see how Cloudera brings this broad and deep experience to bear to evolve the Apache Hadoop stack and give its Cloudera Distribution an edge over competitors. The Next Platform had a chat with Sturman about his experiences and the future of Hadoop.

Timothy Prickett Morgan: The first question I want to ask you is, what is up with you DB2 people and hyperscale? First there was James Hamilton, who was the lead architect for DB2 and then SQL Server architect at Microsoft before he led Microsoft’s datacenter forecasting and design efforts, and who then moved over to Amazon Web Services to drive scaling and efficiency efforts there. You led IBM’s DB2 efforts as well, and then you spent eight years at Google working on key infrastructure. Database experts interested in hardware?

Daniel Sturman: Before I did DB2 at IBM, I was always working on scalable stuff. It is just life, the way things go around.

I actually started in the TJ Watson Research Center after grad school, and my focus there was on distributed messaging systems, specifically IBM’s MQSeries line. I then moved out of research into the product teams, and that is how I got looped into DB2. I was doing research and I loved it, but I was hoping to have more impact on products and this was a great opportunity within IBM for me to do that.

My final role with DB2 – and I don’t know if IBM has the same organizational structure as it did back then – was managing all of the new releases. We were doing a lot of work with SAP at the time, and there was a lot of work on what I will call operational efficiencies, about how the organization ran. We wanted faster releases with higher quality. Within IBM, the DB2 group has always been a very technically driven organization, and that was a good match for me – working with a very strong set of IBM Fellows and distinguished engineers for the product.

TPM: When you moved over to Google, what was your role there?

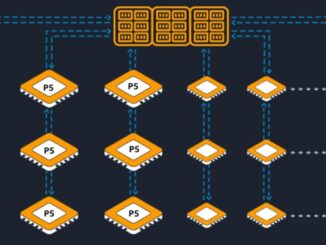

Daniel Sturman: I worked for Google for five years in its New York office, and at the time it was still relatively small. The initial job, as stated by Bill Coughran, who was SVP of engineering, was to go figure out if you could build an infrastructure team outside of Mountain View, which had never been done. We had a small number of things that we were working on, such as the storage systems, the networking systems, and the cluster management systems, but they were scattered and, since Google was still experimenting at that time with sites outside of California, it wasn’t clear that this kind of core work could be done outside of headquarters. And it turns out that it could be; it just took a while to build up the deep expertise in systems in general and in Google’s particular systems. I spent the first few years building that team up, and as it grew, we eventually focused on the cluster management systems – Borg and Chubby and Omega, the things that drive the computing layer at Google. Kind of the big operating system scheduler for Google.

I worked heavily on Borg and Omega, and when I moved to Mountain View about three years ago, it was to take over the entire cluster management team. John Wilkes was on my team and reported to me, and we were doing Borg and Omega and a whole host of different things relating to the cloud. At the time, Google decided that it wanted a closer relationship between what was going on in our cloud and what was going on internally, and there were a number of organizational changes, but the net result was that my job became unifying the internal compute and cluster management, built on Borg and Omega, with the external compute products such as Google Compute Engine and App Engine. There was a real push to take out the layers between those things and drive toward a much more unified platform.

TPM: Does that mean that Borg is somehow parked behind Compute Engine? We all assume that it is, but we don’t know it.

Daniel Sturman: It absolutely is. With Compute Engine, you are building virtual machines. And the thing about VMs and the ways that they are used in most places is that they are what you would call snowflakes. Each VM can be slightly different, and that is the beauty of the VM. You can do whatever the heck you want to it. Borg, on the other hand, wants to schedule a thousand of this particular thing. We were working to provide flexibility so it would work in both of those extremes. You can start to see that with the beta of Google Container Engine, which is built on Kubernetes. The paradigms are coming much, much closer together.

TPM: Is there something inside Google that is analogous to Kubernetes? My understanding is that Borg is itself the analog, which packages up cgroups containers with workloads and passes them around clusters.

Daniel Sturman: You should think of Kubernetes as Borg on top of VMs. It is exactly the same model, and that was exactly the point. That is why when we saw containers being created by Docker and others, we were excited. The cgroups stuff came out of the Linux team, which was also part of the cluster management team. What is great about Docker is that it added the packaging mechanism on top of it, not just computation isolation mechanisms. The paradigm is exactly the same one, and that is why Google is excited about it. This is exactly what worked for them and now it will be brought out to the rest of the world.

TPM: Does Google have an analog to Docker itself and the Enterprise Hub, which does the packaging and maintains a repository and all that?

Daniel Sturman: There was a tool called MPM, separate from the container paradigm, that created an image to be run. You would absolutely need that, or otherwise Borg would not work: if you want to run a thousand instances of something, you need some way to say what that something is. There is such an internal tool, and it is still the primary one used inside of Google, which has Docker-like containers where you unify the application and runtime.

TPM: So let’s talk about all of this experience that you have and how you bring it over to the largest and best-funded of the Hadoop distributors. The Hadoop community is doing lots of interesting things, there are a slew of add-on projects and products, and some of the smartest people in the world are working on them. It has become so much more than emulating MapReduce and Google File System, which Google invented so many years ago. What can you do to shape things?

Daniel Sturman: First of all, I think that you are right. The Apache community is pretty phenomenal, and it is not just MapReduce anymore: they have brought out a much wider range of frameworks that allow people to analyze data depending on their skill level. At the same time, there are partners that are building on top of that, and not just Cloudera. This is a rich, large partner ecosystem that builds on top of Hadoop. There is a ton of innovation going on.

TPM: Right now, there are about as many ISVs creating tools for Hadoop as customers who have deployed Hadoop in production and paid for a commercial support contract – somewhere just south of 2,000 each. Those of us watching from the sidelines expect the Hadoop installed base to grow, and we expect that most companies will use this technology in one form or another – whether they run it internally, on a cloud, or as a service on a cloud – for at least some of their workloads going forward. I am expecting a hockey stick adoption curve, and maybe we are on it and maybe we have yet to really get on it.

Daniel Sturman: I think that is precisely where I can add value. If you can unlock this technology as a company and get much deeper insight into your internal operations, your customers, and so forth – what I will call Google-level insight – that is pretty clearly going to be a huge competitive advantage. Enterprises live in a Darwinian world, and those who have deep insight will have a very different outcome from those who don’t.

First of all, I come from a world where businesses have to bet on complex distributed systems. This experience with Google really helps put me in the customer’s shoes. My experience with DB2 taught me where enterprises are really coming from and the challenges they face. They do not look like Google inside. What does it mean to make this technology consumable to them? It means giving them the characteristics they need, such as the predictability of deployment or security which they absolutely must have. I think I bring a level of innovation on top of that. I think there has been a ton done by the community, but I don’t think that we are done, particularly around lowering the skill barrier needed to exploit Hadoop and related technology. I have seen a lot of interesting ideas and I think I am getting some new ones around what we can do to make it easier to operate these systems at lower cost and easier to access them from a programmatic point of view, like we are seeing with SQL.

TPM: Which is exactly what happened some years ago when Bigtable and Dremel were created at Google.

Daniel Sturman: That is exactly what was going on then. But we are thinking about how we use R, if you are a data scientist, or even just Python to access these systems. Different skills from different folks. How do we enable that with one underlying framework that contains your storage and allows access depending on skill level and the task at hand? Resource management at large is something I happen to know a lot about from my time at Google. Those are some of the places where I think I can help.

In general, one of the biggest things I learned from my time at Google is to always make sure to be pushing to the cutting edge. Cloudera already has a huge sense of that, but I can ensure we keep pushing as we get bigger. We need to keep that sense of innovating, we have to keep pushing, and we have to keep taking on big challenges.

TPM: What do you think of Cloudera’s role inside of clouds? One of the arguments that Google made originally with App Engine was that it would insulate end users from the complexities of infrastructure and just provide application runtimes and data services. It was a credible argument, I think, until we all realized that sometimes companies want to set up their own virtual machines and create their own services. I still, to this day, do not understand why Amazon Web Services customers do not use Elastic MapReduce, except for the fact that not all of the Hadoop distributions are available on it. The support matrix for the Hadoop distros and their add-ons like Spark is pretty large, so that is a factor. How does Cloudera approach the public cloud? Once people are generating all of this machine-generated data from applications, it seems logical to put it on the cloud and keep it there.

Daniel Sturman: Some will absolutely want EMR; it’s a great service. Some will want a Cloudera distribution on a public cloud provider, and we are working with Amazon, Google, and Microsoft to make that available. The Cloudera Distribution is, in a sense, deep into the data problems that these customers want to solve, on premises or in a public cloud. Take data governance: that problem doesn’t go away whether you are on premises or using EMR. You still have to worry about who can access the data, what is the cleanliness of the data, and so forth – it is really a data management problem. You are going to want this tied into the Hadoop distribution, and this is something we are actively working on that will appeal in both cases.

Also, a lot of enterprises are looking at a hybrid environment, and that is something they are going to be doing for a while. We are aiming at multiple clouds, but with common governance so we can understand where resources are going. What we don’t want to have happen is that companies move to the cloud with Hadoop and spin up an entire new team that makes all the same mistakes that the on-premises team did. We want them to learn from that experience and run both environments in a holistic way.

TPM: I understand that companies have hybrid application architectures spanning on premises and public clouds, but does Hadoop work itself also get split? Does it depend on the sensitivity of the data, the cost of doing it inside versus outside, and so on?

Daniel Sturman: I think they want Hadoop capability in both of those environments. People use public clouds because they give them the ability to burst and they have a lot less management overhead, and those benefits apply to Hadoop just as they do to any other application. If a lot of the telemetry is coming into the cloud, you might as well do the analysis right there – particularly if it is no harder.

TPM: And you think you can get price and performance parity between Cloudera on premises and on public clouds? You want to make it so it doesn’t make a difference, so people really can make that choice.

Daniel Sturman: We are still working through what that might look like, but it is pretty clear that we need to have a cloud presence. We already have some large customers on cloud and we are already doing that with Cloudera Director. But we have to smooth out that whole experience.

TPM: Speaking of Hadoop in general, what sorts of things need to be added to it or changed? There is such a wide variety of in-memory add-ons and SQL query engines. You have looked at this from the inside at Google, at the things it has built. What is Hadoop missing?

Daniel Sturman: Some of what I am going to say here is naïve because I have only been on the job for three weeks. Getting SQL right is clearly going to be very important. I am very excited about Spark and being able to stream in data, which doesn’t just allow faster processing but different sorts of processing. Spark is going gangbusters, but I still think you would be hard pressed to say that it is all the way to maturity, and we have to see that through. I still think we have a ways to go on the hard CIO issues of data privacy and data governance and resource management – and I don’t just mean scheduling, but figuring out who is using what resources where and at what cost.

TPM: Last question. Having containerized so many things at Google, do you think that there will be some way to containerize the broad Hadoop stack to make it more manageable, updatable, and resource efficient?

Daniel Sturman: That is actually on my list of things that I want to go think about. I think containerization will be a common basis for computation in general, and it will apply to Hadoop from standard resource isolation all the way out to how developers work.
