There is no question that flash has utterly transformed the nature of storage in the datacenter, and that flash and other non-volatile memory that will replace it in the coming years will warp the architecture of systems. No longer can we think of storage as an afterthought. If you can’t find the data and move it easily, you can’t compute, after all.

For most enterprises, flash is something they splurge on here and there, accelerating one particular workload and goosing another. Apple and Facebook were early adopters of PCI-Express flash cards from Fusion-io, which they used to accelerate databases in their infrastructure, and enterprises have since followed suit, using flash to accelerate their own databases and as a fast tier of storage for hot data in all kinds of workloads. This is all well and good, but no one would call it massive scale, and it is not pushing any barriers. That doesn’t mean companies are not looking ahead to a future where they will want more of their data available in a much zippier fashion than is possible with disk or hybrid arrays today.

In talking over the launch of its all-flash DataStream 2000f storage array (which we will get to in a minute), we pushed Andy Warfield, co-founder and CTO at upstart storage vendor Coho Data, to give us some idea of how far flash arrays are scaling at shops today and how far they might scale in the near term.

The largest configuration that Coho has sold of its hybrid flash-disk arrays, the DataStream 1000h, has 23 nodes, and the company has tested the scalability of its storage across 40 nodes in its labs. But Warfield tells The Next Platform that an unnamed big financial services company that is shopping for hybrid and all-flash arrays is looking a few years out into the future and wants to see what a very large central pool of flash would look like – and what it would cost for Coho Data to put it together.

Before we get into that, we need to explain the Coho Data storage architecture and how it differs from what many of the company’s storage peers are doing.

The company’s founders are not just familiar with virtualization; they were part of the team that brought the Xen hypervisor to life at Cambridge University. Xen was eventually commercialized by XenSource, which Citrix Systems acquired in 2007 for $500 million. Coho Data was founded in 2011 and uncloaked from stealth mode in November 2013. Ramana Jonnala, the company’s CEO, worked at file system maker Veritas Software early in his career before leading the product team at XenSource, and then ran the virtualization management division at Citrix for a number of years. Warfield was in charge of storage on the XenSource team, and co-founder Keir Fraser, who is still the lead maintainer of the Xen hypervisor, is chief architect at the storage company.

Balancing Compute, Network, And Storage

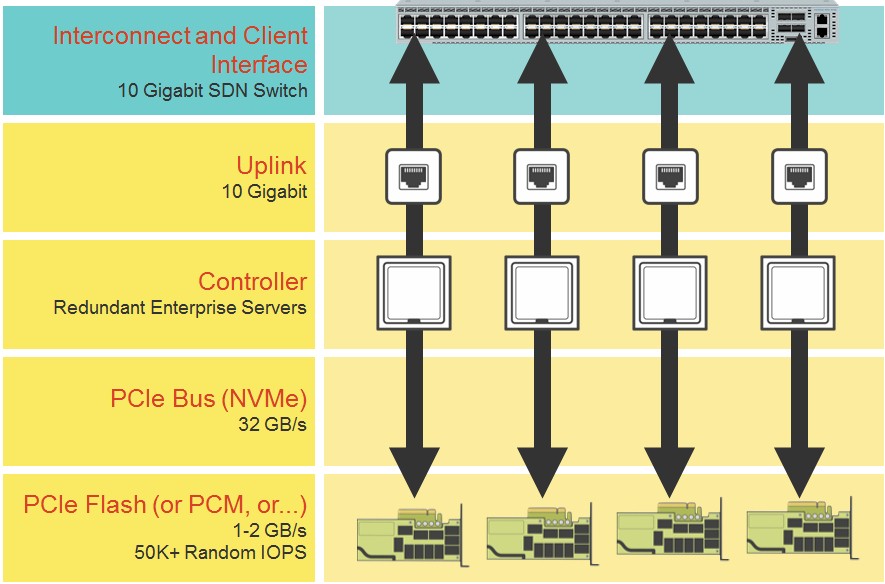

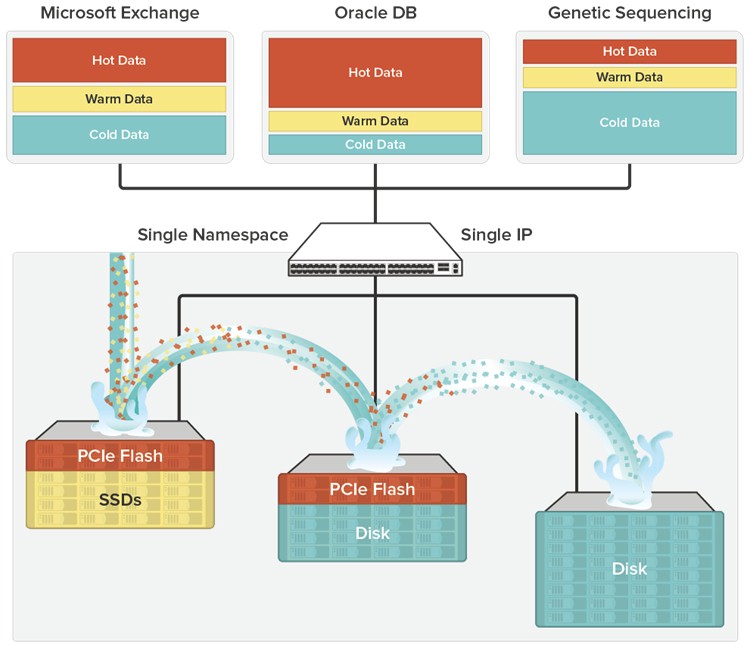

Not surprisingly, the Coho Data storage arrays are architected to have the look and feel of cloud storage, where companies don’t worry about low-level things like provisioning LUNs. Its storage systems employ very fast PCI-Express and now NVM Express flash and are designed to take advantage of the fact that flash prices fall 50 percent every eighteen months or so. (When and if NVDIMMs become available and affordable, Warfield says they could be adopted into the Coho systems as well.) The architecture of the DataStream is based on the concept of a microarray, which pairs a network interface and a compute element for manipulating data with flash storage (sometimes backed by disk). Each microarray has enough CPU performance and network connectivity on its own to expose the performance of its flash, which can augment the performance of hybrid disk-flash arrays or serve as the sole storage in all-flash arrays. Last but not least, the Coho architecture integrates switching and an NFS file system with the storage in such a way that the devices can be clustered and present a single IP address to applications in the datacenter.
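The design assumption that flash prices fall about 50 percent every eighteen months compounds quickly. The sketch below is a minimal illustration that assumes a clean exponential decline (real component pricing is lumpier); the function name is hypothetical, and the $4,000 starting price is simply a point in the middle of the $3,000 to $5,000 per-device range Warfield quotes.

```python
def projected_flash_price(price_today: float, months: float,
                          halving_months: float = 18.0) -> float:
    """Projected price after `months`, assuming it halves every `halving_months`."""
    return price_today * 0.5 ** (months / halving_months)


# A $4,000 flash device today, three and six years out.
for years in (3, 6):
    print(years, "years:", round(projected_flash_price(4_000, years * 12)))
# 3 years: 1000
# 6 years: 250
```

Under that assumption a device bought six years from now would cost about a sixteenth of today's price, which is why a design that expects to keep absorbing cheaper flash makes sense.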

“There’s this really weird thing going on right now,” Warfield tells The Next Platform. “Even though we have had flash for over a decade and the flash is basically the same memory cells, it is moving onto faster and faster buses. With PCI-Express and NVM Express, the thing that doesn’t seem to get enough play is that even one of these flash devices can saturate a 10 Gb/sec and increasingly a 40 Gb/sec network port. The capacity of a several hundred terabyte array is great, but it is really secondary to the fact that if you put a second device inside of that server, you cannot expose its performance. At $3,000 to $5,000 per device, these flash devices cost more than the CPUs. And one of the founding ideas of this company is that we are going from disks being the slowest things in the datacenter to flash being the fastest thing in the datacenter. The center of the datacenter is shifting from the CPU to the storage.”

Because the flash devices are so fast, Coho Data decided it had to have what is effectively one network interface card per flash device in the array. The only way to make that work under NFS was to integrate a switch and weave it together with the company’s own software-defined networking.

“By integrating the switch into the storage, we can present out as a single NFS endpoint and we are able to steer the traffic across the full bisection bandwidth of the switching,” Warfield explains. “When you scale up the storage, you add additional compute and connectivity, and the system automatically rebalances the data and the connections and you still have one IP address and one namespace to NFS and one data store to VMware.”

The system can present multiple IP addresses if customers want to do that, of course. The metadata that describes the underlying distributed NFS runs inside of flash in the system as a microservice, and the architecture of the system borrows ideas from networking and other kinds of distributed signaling, says Warfield.

“The system architecture decouples a close-to-the-wire fastpath that wasn’t necessarily very smart and a more central brain that could make decisions with a view of the entire system. A lot of storage systems in the past have taken an upfront, fixed algorithm for how they spray data – RAID across a bunch of disks, or hashing and spraying data across an address space – but we took a much more SDN approach in having a central map that places the data and the connections between nodes and monitors load on the system and then moves data and connections around to optimize placement. This gives us the capability of responding to failure and to scale the cluster as storage nodes are added.”
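The contrast Warfield draws is between fixed placement, where something like `hash(key) % node_count` decides where data lives and a change in node count reshuffles it, and a central map that records placement explicitly and changes it in response to observed load. The class below is a minimal, hypothetical sketch of the second approach: `PlacementMap`, its methods, and the greedy rebalancing rule are illustrative assumptions, not Coho Data's algorithm, which the article does not describe. Moving data and connections is reduced to updating a dictionary.

```python
from collections import Counter


class PlacementMap:
    """Hypothetical central map: object -> node, rebalanced by observed load."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.location = {}  # object id -> node currently holding it
        self.heat = {}      # object id -> recent access count

    def loads(self):
        """Total heat per node, derived from the map."""
        totals = {n: 0.0 for n in self.nodes}
        for obj, node in self.location.items():
            totals[node] += self.heat[obj]
        return totals

    def place(self, obj):
        """Put a new (or orphaned) object on the node holding the fewest objects."""
        counts = Counter(self.location.values())
        node = min(self.nodes, key=lambda n: counts[n])
        self.location[obj] = node
        self.heat.setdefault(obj, 0.0)
        return node

    def record_access(self, obj, cost=1.0):
        self.heat[obj] += cost

    def rebalance(self):
        """Greedily move hot objects off the busiest node while that helps."""
        moves = []
        while True:
            totals = self.loads()
            hot = max(totals, key=totals.get)
            cold = min(totals, key=totals.get)
            on_hot = sorted((o for o, n in self.location.items() if n == hot),
                            key=self.heat.get, reverse=True)
            for obj in on_hot:
                h = self.heat[obj]
                # Move only if the cold node ends up below the hot node's old
                # load; this strictly shrinks the sum of squared loads, so the
                # loop always terminates.
                if h > 0 and totals[cold] + h < totals[hot]:
                    self.location[obj] = cold
                    moves.append((obj, hot, cold))
                    break
            else:
                return moves

    def add_node(self, node):
        """Scale out: the new node starts empty, then may take over hot objects."""
        self.nodes.append(node)
        return self.rebalance()

    def fail_node(self, node):
        """Failure: re-place the dead node's objects (from replicas, in practice)."""
        self.nodes.remove(node)
        orphans = [o for o, n in self.location.items() if n == node]
        for obj in orphans:
            del self.location[obj]
        for obj in orphans:
            self.place(obj)
        return self.rebalance()


pm = PlacementMap(["node-a", "node-b"])
for obj in ("x", "y", "z"):
    pm.place(obj)
for obj, hits in (("x", 10), ("z", 5), ("y", 1)):
    pm.record_access(obj, hits)
print(pm.rebalance())  # moves x to node-b, then y to node-a
print(pm.loads())
```

Because every placement decision is a lookup in the map rather than a function of the node count, adding or losing a node moves only the objects the rebalancer chooses to move.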

Coho Data has partnered with networking upstart Arista Networks as its switch supplier. That could change now that Intel Capital and Hewlett Packard Ventures have both kicked in money for the company’s $30 million Series C funding round, bringing its total funding to $65 million. Andreessen Horowitz and Ignition Partners have provided $35 million in two prior rounds of funding to Coho Data.

This week, Coho Data put its first all-flash array into the field, and it is this device that piqued the interest of one large financial services institution that wanted to look at how it might scale out the new DataStream 2000f to make a very large pool of storage.

The DataStream 2000f has two microarrays, each equipped with a two-socket Xeon server node that has two 10 Gb/sec Ethernet ports and two 1.6 TB PCI-Express flash cards. The chassis also includes up to 24 SSDs, which back-end the PCI-Express flash. The unit has a maximum raw capacity of 99 TB, and after the DataStream software does its replication and other data protection, it has a usable capacity of 47 TB. Turning on LZ4 data compression and assuming a compression ratio of approximately 2:1 boosts the effective capacity of this 2U enclosure to 93 TB. Using 4 TB SSDs, the fully configured DataStream 2000f has a list price of $239,500, according to Warfield. Using 4 KB files and a mix of 80 percent reads and 20 percent writes, the 2U enclosure can chew through 220,000 I/O operations per second (IOPS), and with all reads, it can handle about 320,000 IOPS. The DataStream Switch that is bought as an accessory to the array is an Arista 7050TX-64 switch with 48 downlinks running at 10 Gb/sec and four uplinks running at 40 Gb/sec (64 ports in all when each uplink is counted as four 10 Gb/sec lanes). It costs $25,995 at list price, and you probably want to add two for redundancy and for multipathing.
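The quoted capacity, price, and performance figures fit together as simple ratios. This is a minimal sketch that derives them from the numbers above; `ArraySpec` is a hypothetical container rather than any Coho API, and applying the 80/20 split to the mixed IOPS rating is plain arithmetic, not a measured breakdown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArraySpec:
    """Quoted figures for one array (hypothetical container, not a Coho API)."""
    raw_tb: float
    usable_tb: float      # after replication and other data protection
    effective_tb: float   # after LZ4 compression at roughly 2:1
    list_price: float     # US dollars, fully configured with 4 TB SSDs
    mixed_iops: int       # 4 KB, 80 percent reads / 20 percent writes
    read_iops: int        # 4 KB, all reads

    def protection_efficiency(self) -> float:
        return self.usable_tb / self.raw_tb

    def compression_ratio(self) -> float:
        return self.effective_tb / self.usable_tb

    def price_per_tb(self, tb: float) -> float:
        return self.list_price / tb

    def mixed_split(self, read_fraction: float = 0.8) -> tuple:
        """Split the mixed IOPS rating into (reads, writes)."""
        reads = round(self.mixed_iops * read_fraction)
        return reads, self.mixed_iops - reads


DS2000F = ArraySpec(raw_tb=99, usable_tb=47, effective_tb=93,
                    list_price=239_500, mixed_iops=220_000, read_iops=320_000)

print(f"usable/raw {DS2000F.protection_efficiency():.1%}")                  # 47.5%
print(f"effective/usable {DS2000F.compression_ratio():.2f}")                # 1.98
print(f"$/usable TB {DS2000F.price_per_tb(DS2000F.usable_tb):,.0f}")        # 5,096
print(f"$/effective TB {DS2000F.price_per_tb(DS2000F.effective_tb):,.0f}")  # 2,575
```

A usable-to-raw ratio just under half is consistent with data being stored twice plus some further overhead, and the 93 TB effective figure works out to a compression ratio of about 1.98:1, the "approximately 2:1" in the text.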

This is an interesting array, and no doubt would be useful as a flash island for some applications. But what about pushing it further?

The Big Bad All-Flash

Getting back to that forward-looking financial services company that Warfield mentioned, the company asked to spec out a six-rack configuration of arrays. It is not as simple as putting switches and flash arrays into racks. You have to think about the network architecture and you have to consider where the compute is going to be if you want to make the best use of it. The good news is that, unlike many hyperconverged storage architectures, the ratio of compute, storage, and networking with the Coho Data clustering approach is not locked in.

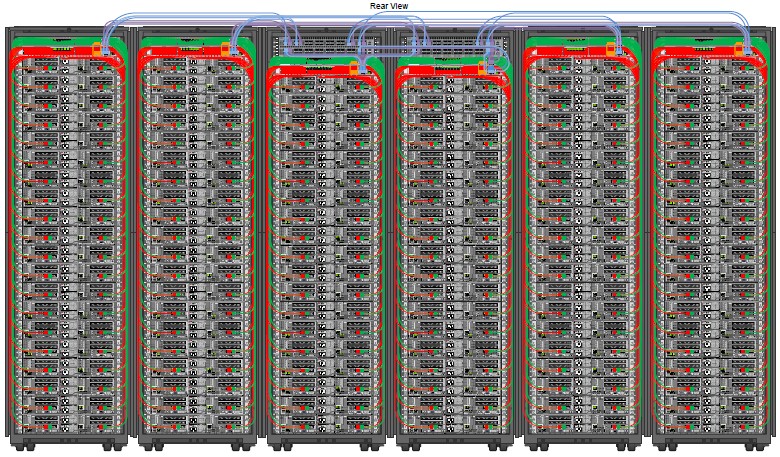

To be precise, that financial services firm wanted to spec out what six racks of hybrid and all-flash storage would look like and what it would cost. The company was, says Warfield, most interested in the all-flash variant. “This was a hypothetical configuration for a larger deployment than the customer intended to purchase, but it is absolutely representative of the type of scale that we are designing for,” says Warfield. And it is the kind of thing that large companies will be deploying over the next several years, whether they do it piecemeal or all at once.

The DataStream 2000f storage arrays in this config were linked to each other using a leaf/spine network architecture pioneered by Arista a few years back, which cross-couples the top-of-rack switches into a relatively flat network in which every top-of-rack switch is just one hop away from the spine. This initial configuration had a pair of Arista 7050TX-64 switches at the top of each of the six racks acting as the leafs (no, they are apparently not called leaves), and the two center racks each held one Arista 7250QX switch as the spine; these cost $69,995 each at list price from Arista and sport 64 ports running at 40 Gb/sec. The racks have a total of 118 of the Coho Data arrays in them. Add it all up, and you are talking about $28.7 million for around 6 PB of flash storage that looks like one giant flash array as far as NFS is concerned. It would have an aggregate of 38 million IOPS.
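The six-rack totals can be checked from the list prices quoted so far. This minimal sketch assumes that "around 6 PB" refers to usable capacity (118 × 47 TB, about 5.5 PB) and that the 38 million IOPS is the all-read rating (118 × 320,000), since the article does not say which; `cluster_totals` is a hypothetical helper.

```python
ARRAY_PRICE = 239_500       # DataStream 2000f list price, US dollars
ARRAY_USABLE_TB = 47        # usable capacity per array
ARRAY_READ_IOPS = 320_000   # all-read rating per array


def cluster_totals(arrays, leafs, leaf_price, spines, spine_price):
    """List price, usable capacity (PB), and aggregate read IOPS for a cluster."""
    return {
        "list_price": arrays * ARRAY_PRICE + leafs * leaf_price + spines * spine_price,
        "usable_pb": arrays * ARRAY_USABLE_TB / 1_000,
        "read_iops": arrays * ARRAY_READ_IOPS,
    }


# 118 arrays, six pairs of 7050TX-64 leafs, two 7250QX spines
totals = cluster_totals(118, 12, 25_995, 2, 69_995)
print(f"${totals['list_price']:,} for {totals['usable_pb']:.2f} PB usable, "
      f"{totals['read_iops']:,} read IOPS")
# $28,712,930 for 5.55 PB usable, 37,760,000 read IOPS
```

The arrays themselves account for about $28.3 million of the total; the fourteen switches add well under half a million dollars at list.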

In this setup, the spine connectivity is oversubscribed by a factor of 3:1, which means that access to the storage is bottlenecked a bit. To avoid oversubscribing the spine, Coho Data would switch to Arista 7050TX-96 switches, which have 96 ports and cost $39,995 at list price, as the top of rackers, and use the Arista 7500 series of core switches as the spine. We configured the spine with a pair of Arista 7508E core switches and a dozen 36-port line cards running at 40 Gb/sec, which is a much pricier option, at $737,958 per switch, but which gives non-blocking access to each port on the DataStream arrays. The way the architecture is set up, there is one hop between any storage nodes in the rack, and then two more hops to get to any other node (one through the spine and one through the leaf in the destination rack). That brings the price up to $30.2 million at catalog list price. (Our guess is that a deal this big would command a discount of at least 40 percent.)
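Oversubscription at a leaf is the ratio of bandwidth facing the arrays to bandwidth facing the spine. The sketch below computes that ratio and the non-blocking list price. It assumes each leaf has 48 ports at 10 Gb/sec facing the arrays, with four 40 Gb/sec uplinks on the 7050TX-64 and twelve on the 7050TX-96; those port counts are assumptions, chosen because they reproduce the 3:1 figure and a non-blocking 1:1. The 40 percent discount is the article's guess, not a quote.

```python
def oversubscription(down_ports, down_gbps, up_ports, up_gbps):
    """Leaf bandwidth facing the arrays divided by bandwidth facing the spine."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


def list_price(arrays, leafs, leaf_price, spines, spine_price, array_price=239_500):
    """Catalog list price of arrays plus leaf and spine switches, US dollars."""
    return arrays * array_price + leafs * leaf_price + spines * spine_price


# Assumed port counts: 48 x 10 Gb/sec toward the arrays on both leaf models,
# 4 x 40 Gb/sec uplinks on the 7050TX-64 and 12 x 40 Gb/sec on the 7050TX-96.
tx64_ratio = oversubscription(48, 10, 4, 40)    # 3.0, the 3:1 in the text
tx96_ratio = oversubscription(48, 10, 12, 40)   # 1.0, non-blocking

# Twelve 7050TX-96 leafs and two 7508E spines at $737,958 each
nonblocking = list_price(118, 12, 39_995, 2, 737_958)
guessed_street = nonblocking * (1 - 0.40)  # the article's guessed 40 percent discount

print(f"{tx64_ratio:.0f}:1 vs {tx96_ratio:.0f}:1")
print(f"list ${nonblocking:,}, at 40 percent off about ${guessed_street:,.0f}")
# 3:1 vs 1:1
# list $30,216,856, at 40 percent off about $18,130,114
```

Removing the 3:1 bottleneck costs roughly $1.5 million more at list, nearly all of it in the two core switches.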

While this is an interesting science and economics experiment, Warfield said the customer should really put the servers in the same racks as the storage. That way, the server nodes can be precisely one hop away from storage within the rack and no more than three hops away from storage across the racks. The DataStream software would analyze the data access patterns of the servers in this truly hybrid cluster and move data so that it sits only one hop away from the server node using it wherever possible. The Arista 7508 switch has plenty of expansion capacity to double the number of racks to twelve, keeping total capacity at around 6 PB while leaving room for ten servers in each rack. That’s a 120-node cluster with what amounts to a single giant flash array that all of the nodes can share.
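A toy cost model makes the locality idea concrete: count one switch hop within a rack and three across racks (leaf, spine, leaf), weight each by how often servers in a given rack touch a piece of data, and keep the data in the rack with the lowest total. The functions and access counts below are hypothetical; the article does not say how the DataStream software scores placement, and this sketch ignores capacity limits and replicas.

```python
from collections import Counter


def hops(rack_a: int, rack_b: int) -> int:
    """Switch hops between two nodes: leaf only in-rack, leaf-spine-leaf across racks."""
    return 1 if rack_a == rack_b else 3


def weighted_hops(accesses: Counter, rack: int) -> int:
    """Total hops if the data lives in `rack`, weighted by accesses per server rack."""
    return sum(count * hops(src, rack) for src, count in accesses.items())


def best_rack(accesses: Counter, racks) -> int:
    """Rack that minimizes access-weighted hops (ignores capacity and replicas)."""
    return min(racks, key=lambda r: weighted_hops(accesses, r))


# Hypothetical access counts for one dataset: servers in rack 2 dominate.
seen = Counter({2: 90, 5: 10})
print(best_rack(seen, range(12)), weighted_hops(seen, 2), weighted_hops(seen, 5))
# 2 120 280
```

Placing the data in rack 2 costs 120 weighted hops against 300 for any rack with no local readers, which is the kind of gain the one-hop-where-possible policy is after.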

This is by no means a typical customer for Coho Data, which as we said has its largest customer to date deploying 23 of its arrays in a storage cluster. A single 2U Coho array typically powers a small cluster of tens of servers with hundreds to thousands of virtual machines on them. Most of Coho’s customers are using its arrays to host private clouds based on VMware’s ESXi/vCloud combination or the open source OpenStack cloud controller combined with the KVM hypervisor.

While there will still be plenty of spinning disk out there in the world many years hence, Warfield says Coho Data is keeping an eye out for other kinds of flash. For instance, he envisions that a second class of relatively cheap flash that does not meet stringent enterprise durability requirements will emerge as what amounts to a warm storage layer, much less expensive than enterprise flash and closer to the cost of disk drives.

“We will see some flash devices emerge that are totally focused on low-cost storage,” Warfield predicts. “They will be great for random access, but they will not have high durability guarantees and you will only be able to overwrite them a few times in their lives. But if you front them with the fast and durable enterprise flash, it will be an interesting building block for scalable storage systems. I think that where this is going to take us is that by combining high capacity and high speed flash devices, we will be able to get tens of petabytes of storage in under 10U of rack space with a fronting layer with literally millions of IOPS. That kind of storage performance really starts to threaten the utility of services like S3 or EBS at Amazon Web Services.”
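One way a durable fast tier can shield low-endurance flash is by absorbing repeated overwrites of hot blocks, so that the cheap tier sees only the writes that get evicted. This is a minimal sketch using a write-back LRU front tier; `TieredStore` and its policy are hypothetical illustrations of that general technique, not anything Coho Data has described. Because dirty data lives only in the front tier until eviction, that tier must be the durable one, which is the role Warfield assigns to enterprise flash.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier store: durable write-back tier over low-endurance flash."""

    def __init__(self, front_capacity: int):
        self.front_capacity = front_capacity
        self.front = OrderedDict()  # block -> data, least recently used first
        self.back = {}              # block -> data on the low-cost tier
        self.back_writes = 0        # wear proxy: writes that reach the cheap flash

    def write(self, block, data):
        # Overwrites of a block already in the front tier never touch the back tier.
        self.front[block] = data
        self.front.move_to_end(block)
        if len(self.front) > self.front_capacity:
            # Evict the least recently used block and write it back once.
            old_block, old_data = self.front.popitem(last=False)
            self.back[old_block] = old_data
            self.back_writes += 1

    def read(self, block):
        # Cheap flash is fine for random reads, so reads do not promote blocks.
        if block in self.front:
            self.front.move_to_end(block)
            return self.front[block]
        return self.back[block]


store = TieredStore(front_capacity=4)
for i in range(1_000):          # hammer four hot blocks
    store.write(i % 4, i)
for block in range(100, 110):   # then write ten new blocks
    store.write(block, block)
print(store.back_writes)        # 10 of 1,010 writes reached the cheap tier
```

In this example, 1,010 application writes turn into 10 writes on the low-endurance tier, which is the kind of write reduction that would let flash rated for only a few overwrites survive behind a fronting layer.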

Looking even further ahead, Coho Data is examining how it might lash its storage arrays together in Clos networks in addition to leaf/spine approaches. And the company is looking for any way it can move compute closer to the storage.

“As the architecture evolves, my intention is to allow developers to move applications and computation much closer to their data by allowing microservices to be hosted directly within the storage system,” Warfield explains. “We are in the early days of this right now, but have already done a six-month proof of concept with a Fortune 100 financial firm – different from the customer mentioned in the configuration above – in which we have implemented HDFS access alongside NFS, and allowed Cloudera clusters to be deployed within the system and to run directly on data when the storage system is otherwise idle.”
