Hewlett-Packard may not have created a business line dedicated to custom server manufacturing like rival Dell. But the world’s largest server maker has what is arguably the most diverse lineup of compute platforms based on X86 engines and continues to tailor lines of machines for very specific workloads. This semi-custom approach suits the needs of most enterprises and supercomputing centers alike and complements its effort to break back into hyperscale through its Cloudline partnership with Foxconn.

In conjunction with Intel’s rollout of its “Haswell-EX” Xeon E7 v3 processors for machines with four or more sockets, HP is rolling out a bunch of machines that make use of these engines. It is also updating and rebranding a family of scale-out servers that it had been peddling to enterprises and supercomputing centers looking for more features than a bare-bones hyperscale machine offers. The new scale-out systems, which are based on Intel’s Xeon E5 processors, flesh out HP’s Apollo line and are follow-ons to the ProLiant SL family of machines, which are a few years old now.

Joseph George, a long-time HPer who took a detour at Dell for a few years to run strategy at its Data Center Solutions custom server unit, tells The Next Platform that the revamped Apollo line is a “recognition that enterprises are doing more open source software for analytics, object storage, and private clouds” and that the machinery underlying these systems has to reflect this without abandoning some of the key features in enterprise systems, such as the Integrated Lights Out (iLO) baseboard controllers that hook into asset management systems to manage fleets of servers.

In addition to the Apollo line extension, with the Apollo 2000s aimed at dense compute and the Apollo 4000s aimed at dense storage, both based on Intel’s “Haswell-EP” Xeon E5 v3 chips announced last fall, HP is also revamping its four-socket rack and blade servers with the Xeon E7 v3 processors coming out today. The company is also readying yet another four-socket machine that will use the Xeon E5-4600 v3 variant of the Haswell chip, which is expected soon and is aimed solely at four-socket machines. The company has no plans to launch an eight-way machine in the ProLiant line, as it has done in the past, but rather is emphasizing its “Project DragonHawk” Superdome X system for customers who need more scalability for their X86 workloads. The Superdome X machine scales from two to sixteen Xeon E7 sockets and borrows much of its technology from the Itanium-based Superdome systems to accomplish this. As far as we know, the Superdome X machines are not being upgraded to the Haswell Xeon E7 v3 chips today.

Cloudline Versus Apollo

With the Cloudline machines, HP is using the contract manufacturing might of Foxconn to create what are essentially white box, minimalist servers aimed at service providers, telecommunications companies, and hyperscalers who do not want any extras in their machines. For these customers, high availability and resilience are embedded in the application layer of the stack, so there is no need to build them into the hardware through redundant components or through data protection schemes such as RAID on disk arrays. The base Cloudline CL1000, CL2100, and CL2200 machines are bare bones rack servers that are based on the Haswell Xeon E5s, while the CL7100 is a variant of a popular Open Compute server node and the CL7300 is an implementation of Microsoft’s Open Cloud Server, which Microsoft actually buys from both HP and Dell (and possibly Quanta, which also sells a variant of the Microsoft system design).

It is not clear where the new Apollo 2000 and Apollo 4000 machines are being manufactured, and as far as enterprise customers are concerned, it doesn’t matter all that much. What matters is that the systems have the features they expect and the compute and storage density that they need for modern workloads – and they have a price that is commensurate with those features.

The SL4500 and SL6500 enclosures packed multiple compute and storage nodes into rack enclosures, as many dense systems do, and the Apollo 2000 and Apollo 4000 machines take the same approach. “We are anticipating a really strong uptick for these platforms,” says George, and this stands to reason given the popularity of the various multi-node rack machines from Supermicro and Dell.

The Apollo 2000 is designed to put four compute nodes into a single 2U chassis, with shared power across those nodes but individual links for each node to attach to networks or external storage. (It is more of a baby rack than a blade server, in this respect.) The nodes can accommodate the Haswell Xeon E5 chips running up to 145 watts and have eight DDR4 memory slots per socket (a maximum of 512 GB per node with 32 GB sticks across the two sockets), which is enough to support most web-style applications as well as a fair number of HPC workloads if customers opt for the Apollo 2000s over the Apollo 6000s (air cooled) and Apollo 8000s (water cooled) that debuted last fall.
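The 512 GB ceiling is just slots times sticks. A minimal sketch using the figures quoted above (eight DDR4 slots per socket, 32 GB sticks, four nodes per 2U chassis); the two sockets per node is an assumption implied by the 512 GB total, and the per-chassis figure assumes every node is fully populated.

```python
# Memory ceiling for an Apollo 2000 compute node. Slot count and stick size
# come from the text; two sockets per node is an assumption implied by the
# 512 GB total.
SOCKETS_PER_NODE = 2
SLOTS_PER_SOCKET = 8
STICK_GB = 32
NODES_PER_CHASSIS = 4  # four nodes per 2U chassis, per the text


def max_memory_gb(sockets: int, slots_per_socket: int, stick_gb: int) -> int:
    """Total memory when every slot holds one stick of the given size."""
    return sockets * slots_per_socket * stick_gb


node_gb = max_memory_gb(SOCKETS_PER_NODE, SLOTS_PER_SOCKET, STICK_GB)
chassis_gb = NODES_PER_CHASSIS * node_gb  # hypothetical: every node maxed out

print(f"per node: {node_gb} GB")           # per node: 512 GB
print(f"per 2U chassis: {chassis_gb} GB")  # per 2U chassis: 2048 GB
```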

The chassis used in the Apollo 2000 machines supports two different nodes. The ProLiant XL170r has two I/O slots and is 1U high and half-width; four fit into an enclosure. The ProLiant XL190r node is 2U high and has room for Nvidia Tesla GPU or Intel Xeon Phi coprocessors. It seems fairly likely that HP will eventually deliver a variant of the Apollo 2000 system that supports Intel’s future “Knights Landing” parallel X86 processor, a member of the Xeon Phi family coming out later this year that will be available as both a coprocessor and a standalone processor. The original SL6500 enclosures came in 2U and 4U variants, and the latter had a server node (the SL270s) that could house eight coprocessors in a half-width node. That was a total of sixteen accelerators in a 4U enclosure, with a pair of two-socket Xeon E5 nodes driving them.

The Apollo 2000 offers half the GPU density of the SL6500, which is a bit of a mystery, but perhaps there will be a follow-on Apollo 3000 that will crank up the coprocessor count. Or, as we suggested above, HP might just throw its weight behind Knights Landing as a standalone processor for certain parallel workloads.

In the Apollo 6000 line, which puts ten Haswell Xeon E5 processor sleds into a 5U chassis, there is a server node called the ProLiant XL250a that takes up two slots and has a two-socket X86 node and room for two Xeon Phi coprocessors. That yields ten accelerators in a 5U space, or two accelerators per rack unit. That is the same density as the Apollo 2000 – and still only half the density of the former SL6500 beast. Our guess? The SL6500 overheated a bit when fully loaded. If that were not the case, HP would be pushing the density angle, unless, of course, customers are not looking for that kind of density but rather a one-to-one ratio between CPUs and accelerators. (The data from Nvidia suggests that companies want to hang more, not fewer, accelerators on each server node, and hence the NVLink interconnect will allow up to four nodes per system to have a very fast link between CPUs and GPUs and between the GPUs themselves.)
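The density comparison comes down to accelerators per rack unit. A minimal sketch using only the figures quoted above (sixteen accelerators in the 4U SL6500, ten in the 5U Apollo 6000 chassis); it counts accelerators alone and ignores CPU sockets, power, and cooling.

```python
from fractions import Fraction


def accelerators_per_u(accelerators: int, rack_units: int) -> Fraction:
    """Accelerators per rack unit for one fully loaded enclosure."""
    return Fraction(accelerators, rack_units)


# Figures from the text.
sl6500_4u = accelerators_per_u(16, 4)    # two SL270s nodes x eight coprocessors
apollo_6000 = accelerators_per_u(10, 5)  # five two-slot XL250a nodes x two each

print(f"SL6500 (4U): {sl6500_4u} per U")                   # SL6500 (4U): 4 per U
print(f"Apollo 6000: {apollo_6000} per U")                 # Apollo 6000: 2 per U
print(f"Apollo 6000 vs SL6500: {apollo_6000 / sl6500_4u}")  # Apollo 6000 vs SL6500: 1/2
```

Exact fractions are used so the "half the density" result prints as 1/2 rather than a rounded float.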

The Apollo 2000 comes with two variants on the chassis, and in both cases, the server nodes slide into the back and disk or flash drives snap into the front. The Apollo r2200 chassis supports a dozen 3.5-inch drives and the r2600 chassis supports two dozen 2.5-inch drives. The compute nodes come with the integrated SmartArray B140i disk and flash controller and can have a variety of cards snapped in. Interestingly, the machines have a pair of M.2 flash sticks (the kind favored by Microsoft in its Open Cloud Server) weighing in at 256 GB for local storage. Both Apollo 2000 enclosures can have a pair of power supplies, rated at either 800 watts or 1,400 watts. The r2600 chassis costs $1,219 and the r2200 costs $1,299. The base configuration of the XL170r node costs $999 and the base configuration of the XL190r costs $1,059. These are not usable configurations, of course, but pricing was not yet available on HP’s online configurators, so we can’t tell you what the deal is for a real setup. Microsoft Windows Server and popular commercial-grade Linuxes are supported on the machines, of course; HP will also be happy to preinstall, for a fee, any operating system that can run on X86 gear that is outside of its certified list.

Servers Morph Into Storage

If the Apollo 2000s are the follow-ons to the entry SL6500 compute servers, then the Apollo 4000s can be thought of as the kickers to the SL4500 machines, which came after the SL6500s and were designed to be much heavier on storage than on compute while still including compute.

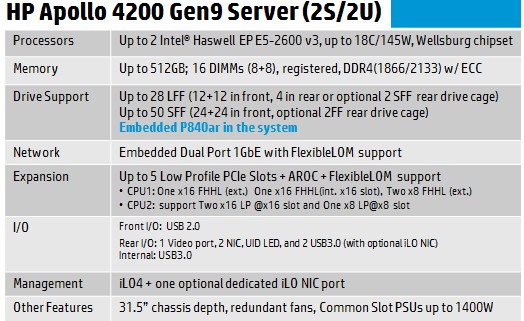

There are three models in the Apollo 4000 family of machines. The first is the Apollo 4200, which is a relative of the workhorse ProLiant DL380 server that dominates the datacenters of the world. But it has been re-architected to allow for up to 28 drives in the 3.5-inch form factor or up to 50 drives in the 2.5-inch form factor inside its 2U chassis. That gives a maximum capacity of 224 TB in a 2U enclosure using 8 TB disks. This one is designed to run Hadoop and its various file systems as well as Ceph, Swift, Scality, and Cleversafe object storage. The Apollo 4200 will be available in June; pricing was not yet set.

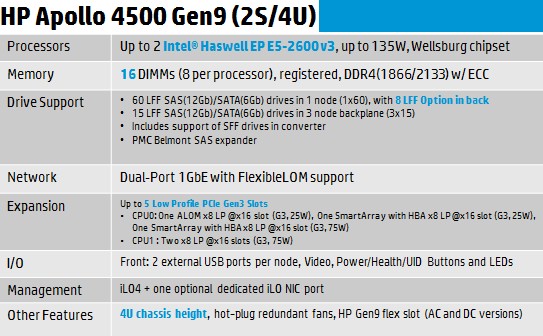

One notch up, and aimed specifically at running the object storage from Scality and Cleversafe (but certainly not limited to these) is the Apollo 4510. The prior SL4540 storage servers came in a 4.3U form factor used by the Moonshot hyperscale servers, and enterprise customers were a bit annoyed by that because it does not fit cleanly and evenly in a standard 42U rack as far as height is concerned. So HP went back to the drawing board and created a 4U enclosure and also bumped the 3.5-inch drive count up by eight to 68 drives while also shifting from 6 TB to 8 TB drives. So now HP can cram 5.4 PB of raw capacity for object storage into a 42U rack. This Apollo 4510 will be available in August; again, no word on pricing.
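The 5.4 PB figure is simple arithmetic, and running the same arithmetic on the older SL4540 shows why the move to a 4U enclosure matters. A rough sketch, assuming the rack holds nothing but storage enclosures (no switches or power distribution gear) and that the SL4540 carried 60 drives of 6 TB each, which is what "up by eight to 68" and "from 6 TB" imply; the SL4540 total is derived here, not an HP figure.

```python
RACK_U = 42  # standard rack height, per the text


def rack_raw_capacity_tb(enclosure_u: float, drives: int, drive_tb: int) -> int:
    """Raw TB in a rack filled with as many whole enclosures as fit.

    Assumes the rack holds only storage enclosures; real racks also need
    room for switches and power gear.
    """
    enclosures = int(RACK_U // enclosure_u)  # partial enclosures do not count
    return enclosures * drives * drive_tb


apollo_4510_tb = rack_raw_capacity_tb(4, 68, 8)  # 10 enclosures fit
sl4540_tb = rack_raw_capacity_tb(4.3, 60, 6)     # only 9 fit; 3.3U is left over

print(f"Apollo 4510: {apollo_4510_tb / 1000:.2f} PB")  # Apollo 4510: 5.44 PB
print(f"SL4540:      {sl4540_tb / 1000:.2f} PB")       # SL4540:      3.24 PB
```

All three changes compound: one more enclosure per rack, eight more drives per enclosure, and 8 TB rather than 6 TB disks.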

The final Apollo 4000 machine is the Apollo 4530, and it is explicitly designed to support Hadoop workloads and related NoSQL data stores. This machine puts three compute nodes and their storage into a 4U enclosure, and importantly, the balance between cores and disk or flash drives can be maintained, more or less, if that is still a valid ratio for Hadoop workloads. (Better still, you can keep the disk and core count in balance without having to go anywhere near the top-bin Xeon E5-2600 v3 parts in the Intel lineup.) The Apollo 4530 crams 30 server nodes, with up to 15 drives for each node, into a 42U rack. (Sixteen drives would have been better, because a pair of eight-core chips in each node would then give parity between drives and CPU cores.)
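The parity argument in that last parenthetical can be made concrete. A minimal sketch, assuming two-socket nodes populated with identical chips (so the core count per node is always even) and one core per drive as the target ratio; the rack totals use the 4U enclosure, three nodes per enclosure, and 15 drives per node quoted above.

```python
from typing import Optional

SOCKETS_PER_NODE = 2  # assumption: two matching Xeon E5-2600 v3 chips per node


def cores_per_socket_for_parity(drives_per_node: int,
                                sockets: int = SOCKETS_PER_NODE) -> Optional[int]:
    """Cores per socket needed for exactly one core per drive, or None if the
    drive count cannot be split evenly across the sockets."""
    if drives_per_node % sockets:
        return None
    return drives_per_node // sockets


print(cores_per_socket_for_parity(15))  # None
print(cores_per_socket_for_parity(16))  # 8

# Rack-level totals from the text: 42U rack, 4U enclosures, three nodes each.
nodes_per_rack = (42 // 4) * 3          # 30 nodes
drives_per_rack = nodes_per_rack * 15   # 450 drives
print(nodes_per_rack, drives_per_rack)  # 30 450
```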

Scale Up Iron

Like many of its peers, HP is putting out a new four-socket system that is based on the new “Haswell-EX” Xeon E7 v3 processors that Intel just announced. The company is not doing an eight-socket follow-on to the ProLiant DL980 that was reasonably popular a few years back. If you want something with more compute and memory capacity than the ProLiant DL580 with an HP label on it, you have to get a Superdome X blade server.

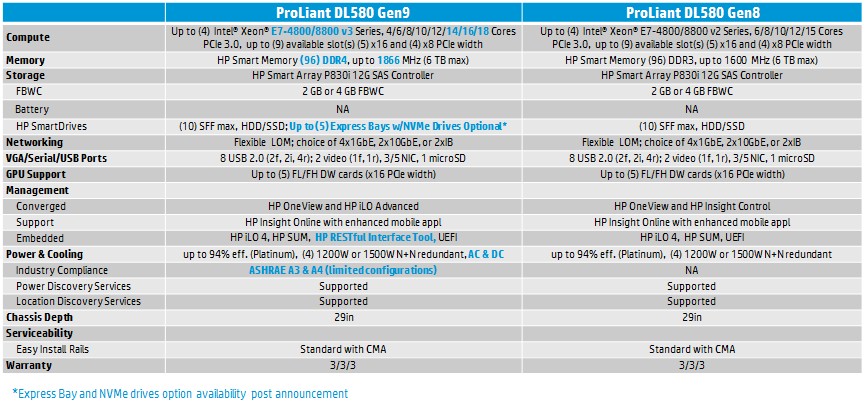

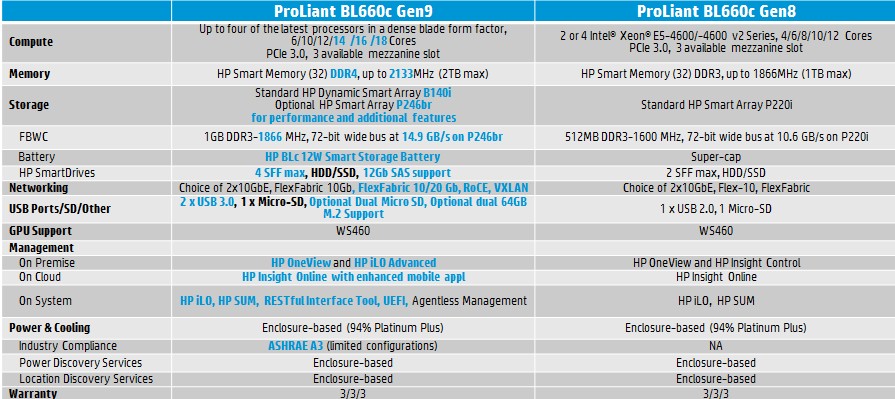

Not a lot has changed between the Gen8 and Gen9 variants of the DL580, as you can see in the table above, and that is by design. HP is also creating a four-socket node for its BladeSystem blade servers, which are still used by enterprises but never by hyperscalers and very rarely by HPC shops. Here is how the ProLiant BL660c Gen9 compares to its predecessor:

Pricing for the ProLiant DL580 and BL660c servers was not announced and won’t be until they start shipping in June.

HP is also, like the rest of the players in the Xeon server racket, preparing itself for the impending launch of the “Haswell” E5-4600 v3 chip, which is used to create denser four-socket machines that have a lot less memory capacity and bandwidth. The ProLiant DL560 Gen9 is expected to launch sometime in June, which probably means Intel is getting set to do another chip launch in a few weeks.

The four-socket part of the server racket is small but important, says Shab Madina, group manager for product and solutions planning for rack and tower servers at HP. The United States is still the largest market, in terms of revenues and shipments, for four-socket boxes, but a higher percentage of machines sold in China are based on four-socket motherboards. This has been the case for several years, and it turns out that Chinese IT buyers want the ability to upgrade CPUs and often only half-populate the machines.

The Xeon E7-4800 is still driving more sales than the Xeon E5-4600, says Madina, but for workloads where you want a higher compute-to-memory ratio, the Xeon E5-4600 wins. These chips allow two four-socket machines with up to 6 TB of memory across those two nodes to be put into a 4U enclosure. A four-socket machine based on the E7s usually comes in a 4U or 5U enclosure and only puts four processors against the 6 TB of memory that is supported in that four-way. The machines using the E5-4600 processors also use straight-up memory sticks and do not have to employ the Scalable Memory Interconnect (SMI) buffer chips that the Xeon E7s do. The Xeon E7s offer more memory bandwidth across the caches and main memory, and a similar amount of compute, so they have their uses.
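Madina's compute-to-memory point reduces to processor sockets per terabyte of memory. A sketch using the figures above (eight E5-4600 sockets sharing 6 TB in one 4U enclosure versus four E7 sockets against 6 TB); it deliberately ignores core counts, clock speeds, and the E7's extra memory bandwidth, all of which matter in practice.

```python
from fractions import Fraction


def sockets_per_tb(sockets: int, memory_tb: int) -> Fraction:
    """Processor sockets per terabyte of main memory."""
    return Fraction(sockets, memory_tb)


e5_4600 = sockets_per_tb(8, 6)  # two four-socket E5-4600 nodes, 6 TB total, one 4U box
e7 = sockets_per_tb(4, 6)       # one four-socket E7 machine, 6 TB

print(e5_4600, e7)   # 4/3 2/3
print(e5_4600 / e7)  # 2
```

On these numbers the E5-4600 setup puts twice as many sockets against the same memory footprint, which is the "higher compute-to-memory ratio" in the text.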

The big news on the Superdome X front is that the existing “Project DragonHawk” machine has been certified to run Microsoft Windows Server. Up until now, it has been limited to Red Hat Enterprise Linux and SUSE Linux Enterprise Server. The machine has not yet been updated with the new “Haswell-EX” Xeon E7 v3 processors that Intel just announced, and it is likely that it will take a few months for the certification for the new processor to happen. The Xeon E7 v2, v3, and v4 processors all share a common socket, precisely to make such engineering and testing easier.
