Competing against a giant like Cisco Systems in the switch industry is never an easy task, but Mellanox Technologies has managed to carve out a sizeable niche in the supercomputing arena with its low-latency, high-bandwidth InfiniBand switches and adapters. In the past several years, Mellanox has been able to parlay that success into new markets, selling chips and boards that third parties embed in their storage arrays, clouds, or financial trading systems. Mellanox bought its way into the Ethernet business with the acquisition of Voltaire in early 2011, and now its Ethernet products are getting traction just as the 100 Gb/sec ramp for both InfiniBand and Ethernet is starting.

It took Mellanox from its founding in 1999 until May 2012 to make its first cumulative $1 billion in revenues. In the past three years through the end of the first quarter, Mellanox has sold another $1.3 billion in chips, boards, switches, cables, and software, and its sales continue to accelerate. The key to its success is to keep on the cutting edge of high bandwidth and low latency, but Intel will be gunning for Mellanox with its upcoming Omni-Path follow-on to InfiniBand, and Broadcom and Cavium Networks want to use their own network ASICs to try to take some of that storage and cloud revenue away from Mellanox, too. At this point, Mellanox has end-to-end 100 Gb/sec (EDR) InfiniBand adapters, cables, and switches in the field and some significant design wins on future supercomputers due in the 2017 to 2018 timeframe. The company is expected to get 100 Gb/sec Ethernet products into the field with a new set of ASICs this year to compete against the Broadcom Tomahawk and Cavium XPliant chipsets. Those chipsets support the new 25G Ethernet standard that was raised by the hyperscalers Google and Microsoft, who broke with the IEEE until it conceded to allow a different Ethernet standard for 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec products. Mellanox is facing an Intel that is converging compute and switching on one side and more intense competition in a more diverse set of Ethernet protocols on the other side.

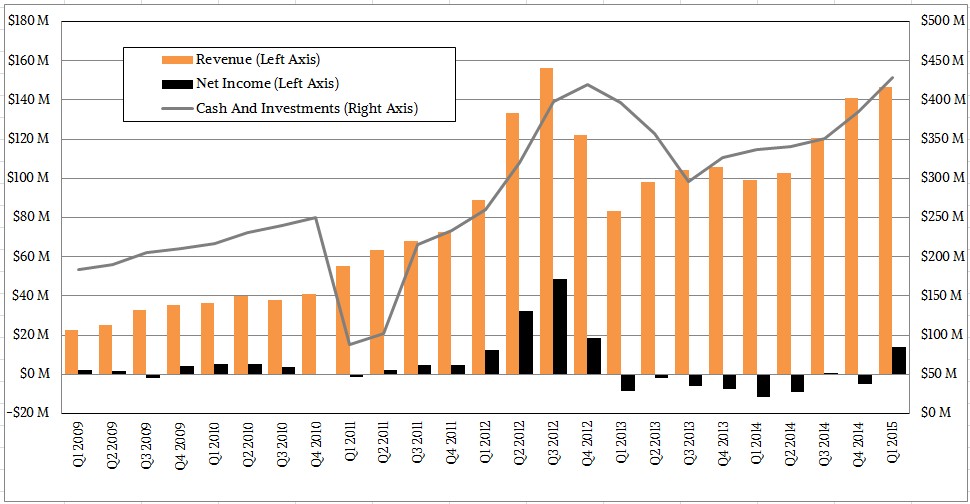

The important thing to remember is that despite the fact that Mellanox has not been profitable in a number of recent quarters as it invested in 100 Gb/sec research and development, it has a very big war chest and an established customer set that has woven InfiniBand into their HPC clusters, database clusters, and storage arrays. There is no guarantee that Mellanox can hold onto all of its customers if Intel pushes Omni-Path hard and integrates it with its Xeon and Xeon Phi processors, but Mellanox has a lead on its competitors in delivering 100 Gb/sec technology and a customer base that keeps coming back for more.

In the quarter ended in March, Mellanox posted $146.7 million in revenues, up 48 percent from the year-ago period and setting a new record for any first quarter. The company brought $13.7 million to the bottom line, which was a lot better than the $11 million loss it booked in the year-ago period.
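To put those quarterly figures in context, the arithmetic behind them can be sketched in a few lines of Python. This is only a rough check using the numbers quoted above; the function and variable names are illustrative, the 48 percent growth rate is rounded in the source, and multiplying one quarter by four is a naive annualization rather than a forecast.

```python
def implied_prior_value(current: float, growth_pct: float) -> float:
    """Back out the year-ago value from the current value and a growth rate."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the article, in millions of US dollars.
q1_revenue = 146.7
growth_pct = 48.0          # rounded in the source, so results are approximate
q1_net_income = 13.7
year_ago_net_income = -11.0  # a loss

# Revenue implied for the year-ago quarter by 48 percent growth.
year_ago_revenue = implied_prior_value(q1_revenue, growth_pct)

# Swing from a loss to a profit, year over year.
net_income_swing = q1_net_income - year_ago_net_income

# Naive annualization: assumes every quarter looks like this one.
annualized_revenue = q1_revenue * 4

print(f"Implied year-ago revenue: ${year_ago_revenue:.1f}M")
print(f"Net income swing:         ${net_income_swing:.1f}M")
print(f"Annualized revenue:       ${annualized_revenue:.1f}M")
```

On these inputs the implied year-ago quarter comes to roughly $99 million and the bottom-line swing to about $24.7 million. Because sales to HPC shops and hyperscalers are lumpy, the annualized figure should be read as a rough scale marker only.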

As with many players at the high end of the systems market, it is best to look at Mellanox over the long term, perhaps over many quarters. Sales among HPC shops and hyperscalers tend to be lumpy, driven more by Intel processor cycles than by any other factor these days. If Intel pushes out a product, as it did during the “Sandy Bridge” Xeon E5 generation a few years back, it can really mess things up. Mellanox has survived its share of ups and downs: a few years ago Hewlett-Packard accidentally overbought a slew of 56 Gb/sec (FDR) InfiniBand components, and there were some cabling issues to contend with, too. Everybody has issues in the IT business (Intel’s silicon photonics for hyperscale datacenters, which it has been touting for a few years, have been pushed out because of thermal issues, just to name one). The thing to keep in mind with Mellanox is that it is committed to both Ethernet and InfiniBand and, importantly, it has moved away from trying to support both protocols on the same switch ASIC. The Switch-X converged chips caused latencies to bump up a bit, as The Next Platform has previously reported, and this cannot stand in a world where Intel wants to eat the high end of the switching market.

That is why that cash pile shown in the chart above is so vital. It gives Mellanox the peace of mind it needs to compete. The company has about three quarters of revenue in the bank, and as it has grown, it has been able to grow that cash hoard. The fact that Mellanox wasn’t profitable for a couple of quarters might not make Wall Street or investors happy, but there is no reason to question that Mellanox can ramp EDR InfiniBand products and get 200 Gb/sec HDR InfiniBand products into the field by the end of 2017.

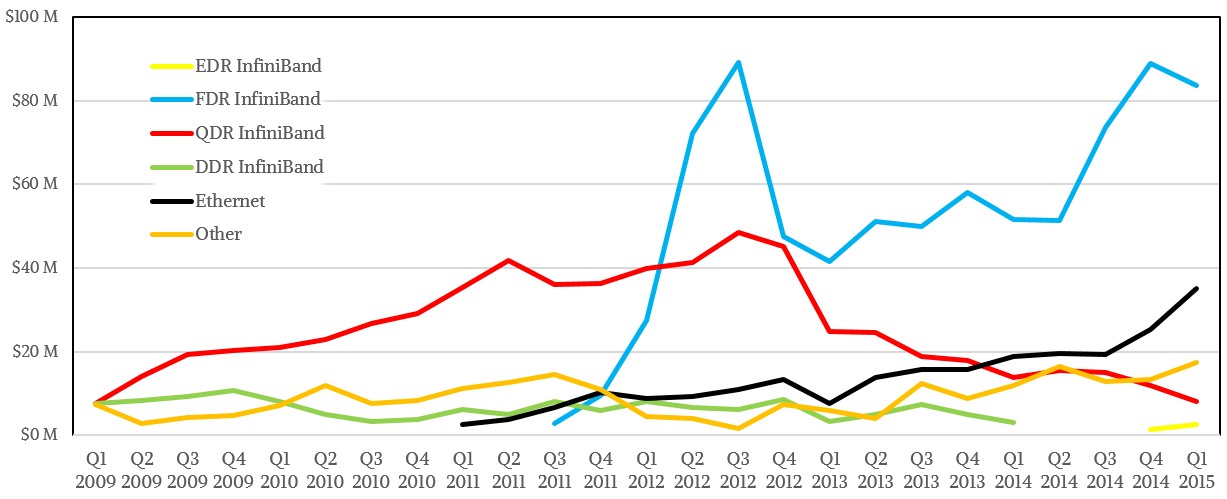

There is a fairly regular rhythm to the upgrade cycle for InfiniBand products at Mellanox, but it is not perfectly predictable because companies building parallel databases (like IBM, Oracle, and Teradata) or parallel storage arrays (like EMC and IBM) that use InfiniBand as a backbone do not tend to upgrade their products on the same cycle as the big government labs that are on the front end of any HPC cluster technology. In the past, Mellanox CEO Eyal Waldman has said that 20 Gb/sec (DDR) InfiniBand took a long time to ramp, but QDR (40 Gb/sec) InfiniBand went from zero to more than 50 percent of sales in about four quarters. FDR InfiniBand took about four quarters to ramp, and then a funny thing happened: database and storage makers were still using DDR products and FDR got a second ramp, as you can see below:

That big spike in 2012 was a bit artificial in that this was when HP accidentally overbought components from Mellanox. Some big supercomputing deals from 2011 spilled into 2012 and some other deals expected in 2013 got pulled into 2012 as well, which also helped cause the revenue and profit spike. Mellanox had to hold tight, keep investing in future Ethernet and InfiniBand technologies, and ride it out.

The Voltaire acquisition from four years ago has more or less paid for itself in Ethernet product sales, if you want to be generous and allocate some of the Ethernet-based ConnectX adapter sales to the Ethernet switch side of the Mellanox house. Ignoring the time value of money, Mellanox paid $176 million in cash for Voltaire in early 2011 and it has generated $236 million in Ethernet product sales since that time. As you can see from the curve above, that Ethernet business is growing, even if it is utterly dwarfed by Cisco and is not anywhere near as large as that of Juniper Networks. Mellanox can and will compete against Arista Networks for high-performance Ethernet switches, although like all commercial players, Mellanox will have to contend with hyperscalers and large enterprises that want to build their own switches and load up open source network operating systems on them. But that is better than not getting this business at all and being relegated to traditional HPC systems, which used to be the dominant use for Mellanox products and which now represents less than half of its revenues.
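The "paid for itself" claim is a simple comparison of cumulative Ethernet sales against the purchase price. A minimal Python sketch of that comparison follows, using only the two figures quoted above. The names are illustrative, and like the article it compares revenue (not profit) with the price paid and ignores the time value of money.

```python
# Figures quoted in the article, in millions of US dollars.
VOLTAIRE_PRICE = 176.0
ETHERNET_SALES_SINCE_DEAL = 236.0


def coverage_ratio(cumulative_sales: float, price: float) -> float:
    """Cumulative sales expressed as a multiple of the purchase price."""
    return cumulative_sales / price


# How far cumulative Ethernet sales exceed what was paid for Voltaire.
surplus = ETHERNET_SALES_SINCE_DEAL - VOLTAIRE_PRICE
ratio = coverage_ratio(ETHERNET_SALES_SINCE_DEAL, VOLTAIRE_PRICE)

print(f"Sales minus purchase price: ${surplus:.0f}M")
print(f"Sales as a multiple of purchase price: {ratio:.2f}x")
```

A stricter payback test would use the profit earned on those sales and discount it over the four years, but the article does not give the inputs for that calculation.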

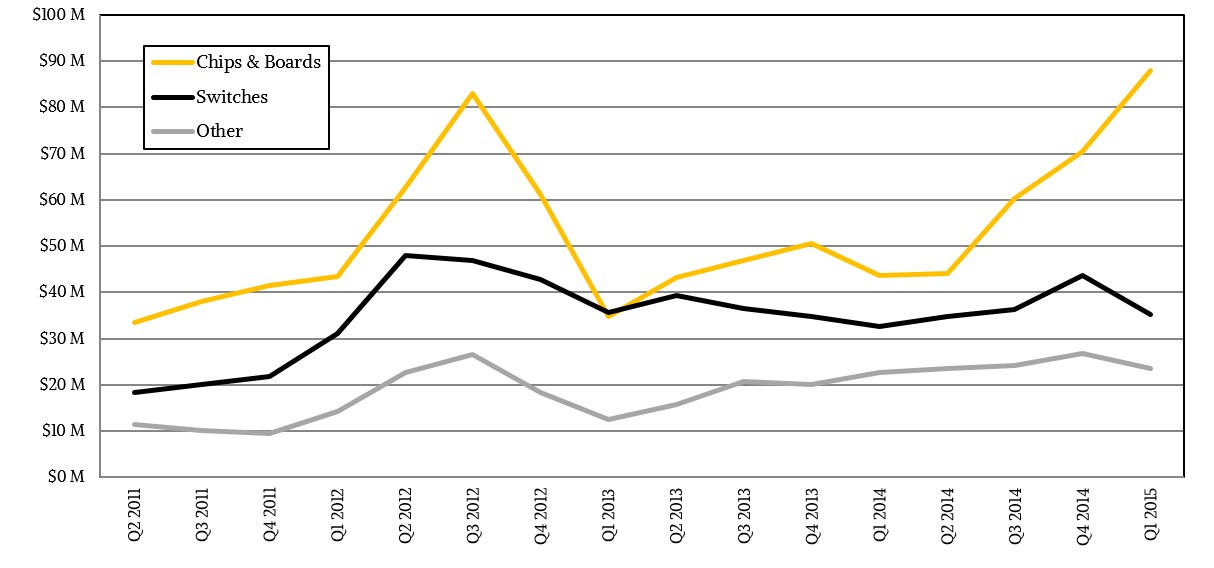

Drilling down into the Mellanox financials for the past four years, when the company has given out details on its chip and board sales versus switches and other products, you can see an interesting trend. Component sales are taking off at a much faster rate than finished switch sales. In general, it is usually tough for any company to sell components to OEMs and then also sell finished products based on the same technologies against those very same customers. Mellanox somehow gets away with it, or at least it has until now.

But it is also clear from the numbers that Mellanox is increasingly dependent on selling its chips and boards to make its revenues and its growth. Many of the customers who use InfiniBand for low-latency clustering can retool their software stacks to run on Ethernet, which has acceptable remote direct memory access (RDMA) thanks to a much-improved RoCE protocol and switch chips that support it. InfiniBand will continue to have a latency and bandwidth advantage, vital for certain latency-sensitive applications in simulation and modeling and financial services, but InfiniBand is going to have to compete on price, too. With Intel coming on strong with Omni-Path and Ethernet having acceptably low latency for many applications, Mellanox may be forced to push Ethernet products harder than it has in the past. The Ethernet competition there will be tough, between Broadcom and Cavium and whatever Cisco and maybe Hewlett-Packard, Intel, and Juniper cook up on the ASIC front. But then again, the competition has been tough all along, and now Mellanox is humming along at a $600 million annual run rate, more than six times larger than it was six years ago.

So when may we expect to see any Mellanox 25/100 GbE switches?

If they’ll be priced close to (or less than) recent EDR switches, it could be a very interesting/attractive product.

They have hinted that 25G, 50G, and 100G products are coming before the end of the year. Intel has yet to talk about its plans on this front, either.

Mellanox Ethernet switch is unused. I think you can clearly see that from the numbers above. The switch revenue even declined in the most recent quarter. Even IB switches went down.

Looks like the Haswell ramp is running out of steam already. Same as back in 2012. They get the boost from the new processor release.

In any case, Intel is about ready to release omni path. Intel has a laser focus on little Mellanox. The Intel solution will immediately become the best HPC solution. Here are several reasons why.

1. Intel has massive financial muscle. They will offer the processor plus cables and switches as a bundled deal. When you control the processor you have big leverage.

2. They are going to be on 14 nm. Mellanox is on 28 nm. That is a huge difference.

3. Intel has a 48 port switch. Mellanox is only 36 port. Oooppsss. Huge advantage for Intel.

4. The Omni path fabric is integrated directly onto the Intel chip set. This means much lower latency. There is no need to go across PCIe bus anymore. It’s already maxed out at x16 just to get to 100 Gbps.

5. In looking at Intel webinar, they might have 2×100 G ports available at full bandwidth. That’s 200G of bandwidth from the processor using two cables.

6. Intel actually has engineers working at the company. Big advantage.

Eyal was too arrogant not to sell Mellanox. It’s the Israeli major in him. He thinks he can beat anyone. Broadcom already has blown him out of the water in ethernet switching. Intel looks to be the next.