In the story we broke this morning about the forthcoming “Aurora” supercomputer set to be installed at Argonne National Laboratory, one of three pre-exascale machines in the CORAL procurement shared among three national labs, we speculated that, unlike the other two machines, which will be based on an OpenPower approach (Power9, Volta GPUs, and a new interconnect), this system would be built on the third generation of the Knights family of chips from Intel, the Knights Hill processors. This marks a move away, by necessity, from the BlueGene architecture that Argonne has historically been committed to, now that that line has ended in favor of an emphasis on Power.

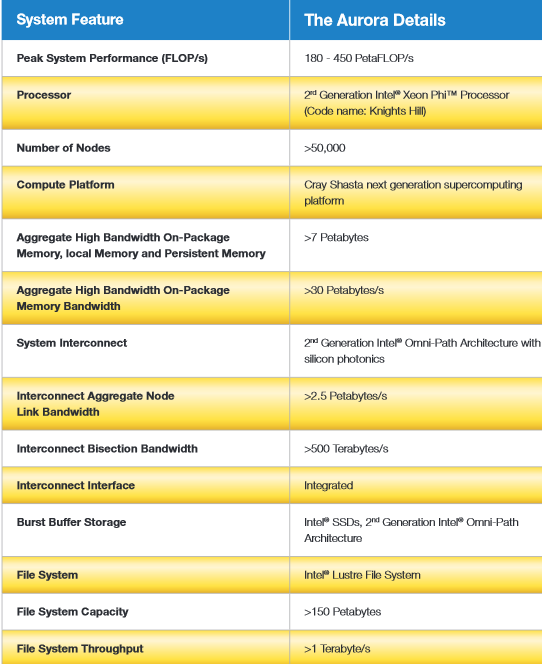

We have since learned that this is indeed the processor of choice for Aurora, which will be spread across more than 50,000 nodes and reach a predicted peak performance of 180 petaflops. This is an architectural departure for Argonne, which has historically been an IBM BlueGene lab. The stated range actually runs much higher, to over 400 petaflops, but that is too big a gap to work with. Still, assuming a minimum of 50,000 nodes and a maximum of 200 petaflops, these numbers are not groundbreaking in terms of sheer floating point capability, at least compared to the big leaps in previous generations, when we saw jumps from 32 nanometers to 14 nanometers. This is just a 14 nanometer to 10 nanometer leap, and the thermal ceiling changes slightly. So to make a better machine that actually works for real applications (which, by the way, is the point), Intel’s strongest story is all the custom engineering it has done with integrator Cray, along with its own interconnect and memory innovations, to make this a system worth noting beyond its Top 500 appeal.

This huge performance number nonetheless threw us for a bit of a loop because, even if we are only looking at 50,000 nodes, that kind of performance is not a lot more than should be achievable with the Knights Landing processor due later this year. As we said earlier, Knights Hill might deliver somewhere between 4 teraflops and 4.5 teraflops of peak floating point performance, but at this rate, and given what we’ve been able to extract from Intel and Cray about some of the other performance features of the machine, it might be closer to 3.5 teraflops for the Knights Hill processor used in Aurora. We do not have clock speeds for these forthcoming Knights Hill chips either, and there is no publicly stated core count for the entire system, which may help explain why 180 petaflops across that many nodes, with the projected cores (assuming these chips have more cores than Knights Landing), doesn’t completely add up just yet.
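For readers who want to check the arithmetic, here is a rough back-of-envelope sketch in Python. It assumes exactly 50,000 nodes and one Knights Hill processor per node, neither of which has been confirmed, and the function names are ours, purely for illustration.

```python
# Back-of-envelope check of the per-node peak implied by the figures above.
# Assumptions (not confirmed by Intel or Cray): exactly 50,000 nodes and
# one Knights Hill processor per node.

PEAK_PETAFLOPS = 180.0   # predicted system peak quoted for Aurora
NODES = 50_000           # "over 50,000 nodes"; we take the lower bound


def per_node_teraflops(system_petaflops: float, nodes: int) -> float:
    """Peak teraflops each node must deliver to reach the system peak."""
    return system_petaflops * 1000.0 / nodes


def system_petaflops(node_teraflops: float, nodes: int) -> float:
    """System peak in petaflops for a given per-node peak in teraflops."""
    return node_teraflops * nodes / 1000.0


implied = per_node_teraflops(PEAK_PETAFLOPS, NODES)
print(f"Implied per-node peak: {implied:.2f} teraflops")

# Compare against the per-chip estimates discussed in the text.
for node_tf in (3.5, 4.0, 4.5):
    total = system_petaflops(node_tf, NODES)
    print(f"{node_tf:.1f} TF per node x {NODES:,} nodes = {total:.0f} petaflops")
```

Under those assumptions, 180 petaflops works out to 3.6 teraflops per node, while the 4 teraflops to 4.5 teraflops estimate would put the same node count at 200 to 225 petaflops. More nodes than 50,000 would push the implied per-node figure lower still.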

We can do the math, but with missing data, it’s all speculation. Still, as we keep emphasizing, this is a memory bandwidth machine, not necessarily a Top 500 shocker. Okay, well maybe it’s both, even if the generational jump isn’t as drastic as some might expect.

Either way, this system is a beast. And the real performance story has far less to do with the computational power of the cores than it does with how data gets moved around. After all, supercomputers are no longer confined to being floating point machines. They have to do double duty on big scientific data, which means they need to be outfitted accordingly, something IBM emphasized with its wins of the other two systems in the CORAL procurement cycle, which presented a balanced memory-to-compute profile.

As we know from the original announcement, this system will combine non-volatile and stacked memory, although this is where Intel is rather mum. We can certainly assume it has something to do with what they are cooking up with memory magnate Micron, but details are slim.

We spoke with Intel Fellow Alan Gara in the wake of the news today. As he described, “We have architected the system so we can allow for enough in-package memory for most applications to run entirely out of that memory. Because of the bandwidth opportunities that presents, there are big benefits in terms of performance, more than an order of magnitude more bandwidth than in traditional memory systems.” Gara says that “integrated non-volatile memory will be the important layer in the memory hierarchy that will allow for incredible bandwidth to be utilized by both the applications and for things like checkpointing,” which helps address the much-discussed reliability concerns of pre-exascale systems and their future counterparts.

Another key feature of the system is the interconnect, which, despite this being a Cray-manufactured machine, is not the Aries interconnect. Rather, the next generation of Omni-Path, called, not surprisingly, Omni-Path-2, will tie the system together, providing the kind of scalability and performance that will be required if this machine ever wants to get close to its peak performance on the LINPACK benchmark. We were able to get some numbers about this forthcoming fabric, including the fact that the aggregate bandwidth will be 2.5 petabytes per second at the system level, with a bisection bandwidth of around half a petabyte per second. It’s hard to wrap one’s head around what that means in terms of real application performance, but for context, consider that the Archer XC30 system in the United Kingdom, which uses the Aries interconnect, has 4,920 nodes and a bisection bandwidth of 10.8 TB/sec. So it looks like Aurora will have a little more than 10X the nodes of Archer, but something closer to 45X the bisection bandwidth. The Aurora system clearly has a lot more bandwidth per node.
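The Archer comparison can be reproduced with a few lines of Python. This is only a sketch: it assumes 50,000 nodes for Aurora, takes "around half a petabyte per second" as 500 TB/sec, and uses 1 TB = 1,000 GB. The variable and function names are ours.

```python
# Rough comparison of Aurora and Archer bisection bandwidth.
# Assumptions: Aurora has exactly 50,000 nodes, "around half a petabyte
# per second" means 500 TB/sec, and 1 TB = 1,000 GB.

AURORA_NODES = 50_000
AURORA_BISECTION_TB_S = 500.0
AURORA_AGGREGATE_TB_S = 2_500.0   # 2.5 petabytes per second

ARCHER_NODES = 4_920
ARCHER_BISECTION_TB_S = 10.8


def per_node_gb_per_s(total_tb_per_s: float, nodes: int) -> float:
    """Spread a system-level bandwidth evenly across nodes, in GB/sec."""
    return total_tb_per_s * 1000.0 / nodes


node_ratio = AURORA_NODES / ARCHER_NODES
bisection_ratio = AURORA_BISECTION_TB_S / ARCHER_BISECTION_TB_S

aurora_per_node = per_node_gb_per_s(AURORA_BISECTION_TB_S, AURORA_NODES)
archer_per_node = per_node_gb_per_s(ARCHER_BISECTION_TB_S, ARCHER_NODES)

print(f"Node count ratio:          {node_ratio:.1f}X")
print(f"Bisection bandwidth ratio: {bisection_ratio:.1f}X")
print(f"Aurora bisection per node: {aurora_per_node:.1f} GB/sec")
print(f"Archer bisection per node: {archer_per_node:.1f} GB/sec")
print(f"Per-node advantage:        {aurora_per_node / archer_per_node:.1f}X")
```

With those inputs, Aurora has roughly 10.2X the nodes and about 46X the bisection bandwidth, which is around 10 GB/sec of bisection bandwidth per node against about 2.2 GB/sec for Archer, or better than a 4.5X per-node advantage.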

“We’re also pushing the envelope on bandwidth and also addressing latency in the fabric. It’s more than a standard interconnect outside of what we think of in a standard fat tree interconnect. It really has a lot of capabilities to let us build more advanced highly scalable, switchless-type interconnects where there is no hierarchies of switches, it’s more of a system architecture where you really connect up racks to racks versus racks to switches.”

Aurora will deploy an Aries-like Dragonfly network topology, but that’s only one of many possible configurations, which hints that there will be other arrangements for systems of this type at different scales. This flexibility is part of what the company described during the announcement as its HPC scalable framework. Gara described this flexible framework as being enabled by a high-radix switching framework that allows for multiple topologies. “The radix of the switch is related to the number of hops or distance of the fabric, which has an impact on both bandwidth and latency,” Gara explains. “It turns out to be a critical parameter and is one of the dimensions where we’ve worked hard. It’s not just the radix, it’s also how you do the routing, and we’ve done a lot of that work with Cray. Minimizing congestion is a result of both hardware and software.”
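To make Gara’s point about radix and hop count concrete, here is an illustrative Python sketch. It deliberately uses a textbook fat tree (folded Clos) model rather than the Dragonfly topology Aurora will use, because the fat tree relationship is simple to state: a tree of radix-k switches with L levels can attach up to 2 × (k/2)^L endpoints, and a worst-case path crosses 2L − 1 switches. The radix values and the 50,000-endpoint target are our assumptions, not Omni-Path-2 specifications.

```python
# Illustrative only: how switch radix affects worst-case hop count in a
# textbook fat tree (folded Clos). This is NOT Aurora's Dragonfly topology,
# and the radix values below are hypothetical, not Omni-Path-2 figures.


def fat_tree_capacity(radix: int, levels: int) -> int:
    """Maximum endpoints for a fat tree of radix-k switches with L levels."""
    return 2 * (radix // 2) ** levels


def levels_needed(radix: int, endpoints: int) -> int:
    """Smallest number of switch levels that can attach all endpoints."""
    levels = 1
    while fat_tree_capacity(radix, levels) < endpoints:
        levels += 1
    return levels


def worst_case_switch_hops(levels: int) -> int:
    """Switches crossed on the longest path: up to the top and back down."""
    return 2 * levels - 1


ENDPOINTS = 50_000  # assumed node count, taken from the article's lower bound

for radix in (16, 32, 48, 64):
    levels = levels_needed(radix, ENDPOINTS)
    hops = worst_case_switch_hops(levels)
    print(f"radix {radix:2d}: {levels} levels, up to {hops} switch hops")
```

In this simplified model, a radix-16 switch needs five levels and up to nine switch hops to reach 50,000 endpoints, while a radix-64 switch needs three levels and five hops. Dragonfly networks get their low diameter differently, but the same pressure toward high-radix switches applies.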

As we noted earlier, supercomputer maker Cray will be the manufacturer and integrator of the system, with Intel being the primary contract winner for the machine. And while we have seen plenty of XC30 and XC40 supercomputers over the last few years, as well as the #2 system on the planet, Oak Ridge National Lab’s “Titan” Cray XK7 machine, we can expect some unique features and new engineering to find their way into the forthcoming Argonne super.

Cray has been hinting about the future Shasta systems for some time, including three years ago when Intel bought the “Gemini” and “Aries” interconnects from Cray that were at the heart of the XT and XC series of massively parallel supercomputers. Shasta was in development at that time, and there were whispers about a future interconnect code-named “Pisces,” but we could never find out much about it except that it might come to market in 2015. That clearly has not happened, and one reason is that a lot has changed in the market for HPC and enterprise systems in the ensuing years. In any event, when Intel acquired those interconnect assets from Cray, the two agreed to cooperate on future interconnects for the Shasta systems.

Steve Scott, who has returned to Cray as CTO after being CTO for Nvidia’s Tesla GPU coprocessor line and a systems architect for Google, did not pre-announce the Shasta architecture today as the Aurora system was unveiled at Argonne. But in a blog post, Scott did give some hints about the Shasta machines. (And don’t forget for one nanosecond that Scott was the architect of many of the interconnects and systems that Cray sold over the past decade.)

Shasta, he said, would be the most flexible system that Cray has ever built, harkening back to the original plans for the “Cascade” designs, when the supercomputer maker first vocalized its “adaptive supercomputing” vision of mixing multiple processor types and multiple interconnects in a single system. This is precisely the plan with Shasta, which Scott says will support both Xeon Phi processors (like the “Knights Hill” massively multicore chips from Intel used in Aurora) and future Xeon processors. The Shasta machines will similarly support the second-generation Omni-Path interconnect from Intel, which borrows ideas from both InfiniBand and Aries to create an HPC-tuned, high-bandwidth, low-latency interconnect. But importantly, Scott made it clear that Shasta systems will include other processor types and other interconnects. What these are, Scott did not say, but it seems highly unlikely that Cray will support Power processors and very likely, considering the research it is already doing on this front, that it will support ARM processors. The ARM chips from Cavium and Broadcom seem likely candidates, seeing as they are arguably better designs than the ones from AMD and Applied Micro. There is also an outside possibility of Cray creating its own interconnect for Shasta, particularly because ARM processors won’t have anything even remotely like Aries or Omni-Path.

“The Shasta network will have significantly higher performance and even lower diameter (ergo, low latency and bandwidth-efficient) than the groundbreaking Aries network in the Cray XC40 system,” Scott explained. “Using this network, we’ll be able to build truly balanced systems, where the network is tightly integrated and well matched to the processing nodes. High network bandwidth relative to local compute and memory bandwidth is key for sustained performance on real world codes.”

Because of its increasing focus on enterprise customers, Cray will also be tweaking the Shasta system packaging so it can be used in standard racks with a relatively small compute footprint to start, scaling all the way up to massive systems with the custom racks necessary for compute density in HPC centers. Scott hints that the machines will use warm-water cooling in some configurations so that Cray can put higher-power processors into Shasta systems, too. The future machines will support Docker containers on Cray’s variant of Linux as well as the OpenStack cloud controller to orchestrate resources on the machine.

“This will be viewed in the future I hope as a machine that pushed the envelope and showed how to take the next step in how we build memory hierarchies. That has a lot to do with the stacked memory, but the focus on maintaining a consistent architecture and enabling current users’ codes to work well here is one of the key focuses and constraints of our system. There are revolutions at the processor level but a lot of the work is also in software, which is going to make this machine usable for the codes that will run on Aurora,” Gara says.
