Search engine giant Google only talks about the underlying technologies that it deploys in its datacenters years after they have been commercialized and a replacement has been developed and put into use. This way, Google contributes to the open source and broader IT communities, but it does not jeopardize its position as a titan of hyperscale technology.

But OpenPower might be the exception to that rule. By joining a federation that is steering the development of an open hardware base centered on IBM’s Power8 and future processors, Google may be tipping its cards a bit about technology that is still under development and that it hopes could find a home in its hyperscale operations.

Like other hyperscalers, Google runs its business on a homegrown Linux stack. Linux has been available on prior Power processors, but the chips were not available as merchant parts, and there was no community like the OpenPower Foundation steering the development of a complete system ecosystem behind them, including open source microcode. That OpenPower came into the world at more or less the same time as the Power8 chip was coming to market is probably not a coincidence, or it is a fortuitous one if it is, because the Power8 chip is the first Power chip that can run Linux with the same little-endian byte ordering as X86, which makes it fairly straightforward to port applications from X86 iron running Linux. In short, IBM needed to open up Power to make it compete better against X86 and ARM iron, and Google, other hyperscalers, and their peers in the adjacent HPC community need an alternative compute platform.
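To see why matching byte order matters for porting, here is a minimal Python sketch, not anything Google or IBM has published. It packs one 32-bit integer in both byte orders and shows what happens when little-endian bytes are read as big-endian. The value `0x0A0B0C0D` and the variable names are illustrative assumptions.

```python
import struct
import sys

# Illustrative 32-bit value; any integer with four distinct bytes would do.
value = 0x0A0B0C0D

# Pack the same integer with an explicit byte order.
little = struct.pack("<I", value)  # little-endian layout, as on X86
big = struct.pack(">I", value)     # big-endian layout, as on older Power Linux

print(sys.byteorder)   # byte order of the machine running this script
print(little.hex())    # 0d0c0b0a
print(big.hex())       # 0a0b0c0d

# Code that assumes the wrong byte order silently reads a different number.
misread = struct.unpack(">I", little)[0]
print(hex(misread))    # 0xd0c0b0a
```

Software that writes raw integers to disk or to the network without declaring a byte order hits exactly this kind of misread when it moves between platforms with different endianness, which is why a shared byte order removes a whole class of porting work.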

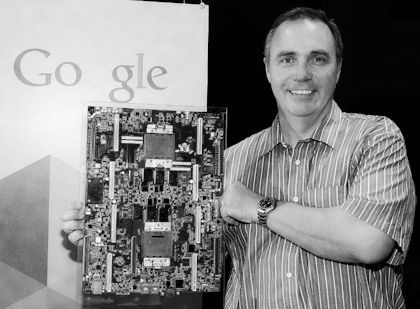

Gordon MacKean, who is chairman of the OpenPower Foundation and who has been the senior director in charge of server and storage systems design at Google for close to seven years, tells The Next Platform that he can’t really say much about what Google is doing specifically. But ahead of his keynote address at the inaugural OpenPower Summit in San Jose, which is being embedded inside of Nvidia’s GPU Technology Conference, MacKean did say a few important things that suggest Google’s involvement in OpenPower is more than a science project or a means to get better discounts on parts from Intel and the other chip suppliers from whom Google buys parts for its servers and storage.

First of all, MacKean says that Google very much wants to ensure that its software stack does not succumb to “bit rot,” as he put it, and moreover the company wants to have that code be demonstrably agnostic when it comes to instruction set architectures, or ISAs. “It is about more than testing,” says MacKean, who was not allowed to say anything further about the various architectures and systems that Google might be running in test, development, and production environments.

MacKean did reveal to The Next Platform that Google has not actually licensed the Power8 chip from IBM under the auspices of the OpenPower Foundation, so Google is not making its own variant of the Power processor for its own use, as Chinese chipmaker Suzhou PowerCore is doing for the Chinese market. But Google is keeping its options open.

“I don’t have the crystal ball, so I don’t know how it is going to play out,” MacKean tells The Next Platform. “I think that having that option there is actually a good thing that OpenPower is going to be supporting. The fact that IBM could license those cores to somebody is important, and it does not have to be to the end user that is going to be developing its own custom chip. It could be to another silicon vendor, or another CPU vendor, that wanted to license Power to fill out their portfolio that OpenPower can’t fulfill because IBM’s pipeline is committed. I can see a lot of areas where it can play out in different ways. All that I know is that all of those doors are open for us.”

Google, like other members of the OpenPower consortium and IT organizations in general, is thinking at the system level. What they all see is not so much that the Moore’s Law advance in price/performance at the chip level has stopped, but that, as MacKean explained in his keynote, companies for the longest time took the Moore’s Law improvements for CPUs as a proxy for advances in the performance and value of servers.

“If you really look at the cost/performance trends over time, when I talk to the different members of the OpenPower Foundation and people outside of the community, what I am hearing is that there has been a deceleration in the value increase in those servers,” MacKean explained in his keynote. And the deceleration is enough for Google to not only become involved in the OpenPower Foundation, but to be publicly involved.

The question that Google has been asked time and again is just how serious it is about using Power chips in its production environments. Its hyperscale peer Facebook has played around with ARM and Tilera systems, and is rumored to be looking at Power chips, too, for selected workloads, but as far as anyone knows, the server and storage systems at Facebook are still based predominantly on Xeon processors from Intel. These hyperscale shops control their complete software stack, from the Linux kernel all the way up to the highest parts of the application, and they need tens to hundreds of thousands of units of a processor for specific workloads. That makes them the best place for any new architecture to get a toehold in the market and expand from there. Having Google even thinking about deploying Power chips gives the architecture as much credence – and maybe even more – than having a few of the big HPC labs adopt the chip and the hybrid architecture that goes along with it, at just about the time that many had thought IBM’s Power chips were destined for the dustbin of history.

Google will not divulge its Power chip system and storage plans, but MacKean said Google is in fact taking OpenPower very seriously.

“There would be a certain amount of arrogance for me to believe that my team could actually be leading the most important next thing all the time and that this was always going to provide the best value,” MacKean says. “I think that by participating in something like OpenPower and other open initiatives, we get a multiplying effect. There are discussions going on and technologies that we are able to look at and assess the suitability for us.”

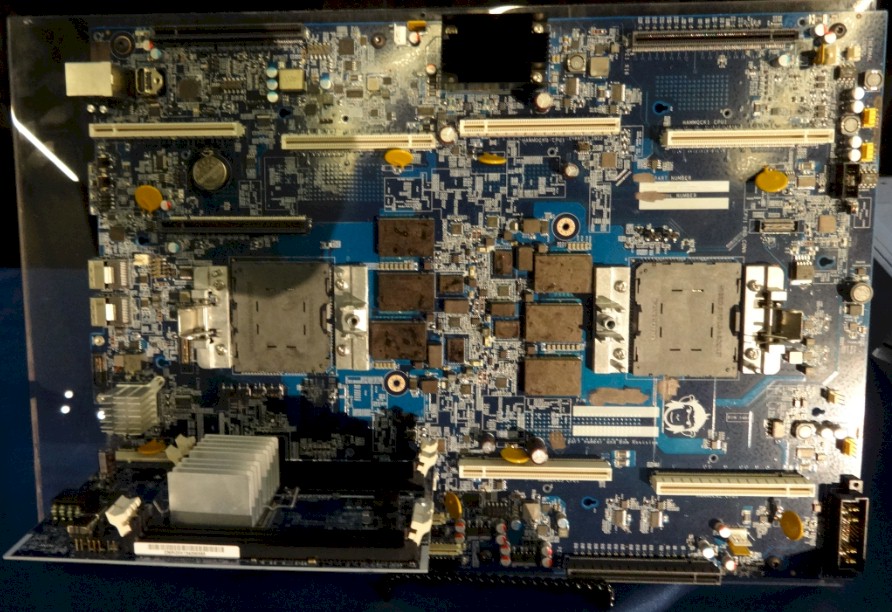

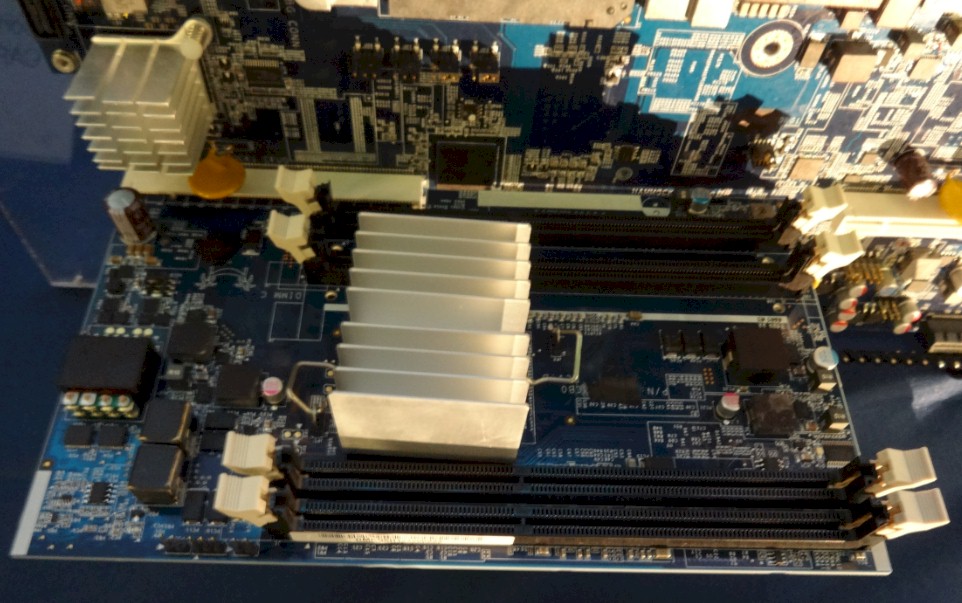

The other reason to think that Google is serious about the OpenPower effort is that Google is a big believer in the brawny core – as opposed to the wimpy one – and the Power8 chip has more threads, more memory bandwidth, and will have several high speed interfaces, including IBM’s CAPI and Nvidia’s NVLink, to attach adjunct memory and processing devices directly into the Power chip memory subsystem and processing complex.

“There are only two brawny cores out there: Xeon and Power,” MacKean explained. “Power is directly suitable when you are looking at warehouse-scale or hyperscale computing from that perspective. I actually think that the industry is going to be moving towards more purpose-built computing, and I think that different users are going to be able to leverage the advanced I/O that IBM is opening up through OpenPower. They are going to be able to go with purpose-built platforms that suit their workloads. I think this is a big part of this. We just heard about the CAPI 2.0 interface having twice the bandwidth and we are actually excited about how that will play out at the system level. It is open, and we are seeing a lot of people innovating in a lot of directions.”

As for disclosing Google’s Power-based server designs, MacKean said that it was very likely that the company would not talk about what it has created until five years after the designs have finished their useful life, much as it has done with key parts of its software stack. There are rare exceptions to this, of course, such as Google’s disclosure last year that it was making homemade routers at the heart of its “Andromeda” wide area network.

Google embraces new technologies and old technologies to find value. What if you were able to put all the memory on the CPU die and remove all separate memory and mass storage? This is exactly what has happened with a new memory technology called R-RAM or MR-RAM. Resistive or magnetoresistive RAM is being developed by no fewer than 8 different vendors who are “literally racing” to be first out of the starting gate with this new memory type. Will it cannibalize every memory technology market on the planet? In a word: yes!

https://www.linkedin.com/pulse/20140711173826-3543789-bigger-than-google?trk=prof-post

Before Crossbar-Inc.com started work on this, no one seemed to pay any notice. Sadly, that remained true for about 2 years. Last January they announced that they had fixed a current leakage bug that occurred when bank switching the R-RAM, which enables the product to achieve current DDR “on die”. Many people quickly ask about the power envelope and whether it will be significant enough to change which chip topologies it can be paired with. At 1 femtoamp per bit flip, it has 3 orders of magnitude lower power consumption than flash. So terabytes of memory can be stacked on a CPU die.

The net result of placing all the memory on the chip is twofold. First, it can reduce the number of data center cabinets from ~50 to 1. That cabinet will then still draw only ~15 kW, which is average for server cabinets coming out today that employ high-density 1U servers. That is a 50-to-1 power savings! When Google uses ~2% of the entire world’s supply of energy, I am truly surprised that Google has not come upon this technology. Or maybe it has and isn’t saying?

If you would like to know more, please read my article.