In the United States, the first step on the road to exascale HPC systems began with a series of workshops in 2007. It wasn’t until a decade and a half later that the 1,686 petaflops “Frontier” system at Oak Ridge National Laboratory went online. This year, Argonne National Laboratory is preparing for the switch to be turned on for “Aurora,” which will be either the second or the third such exascale machine in the United States, depending on the timing of the “El Capitan” system at Lawrence Livermore National Laboratory.

There were delays and setbacks on the road to exascale for all of these machines, as well as technology changes, ongoing competition with China, and other challenges. But don’t expect the next leap to zettascale – or even quantum computing – to be any quicker, according to Rick Stevens, associate laboratory director of computing for environment and life sciences at Argonne. Both could take another 15 to 20 years or more.

Such is the nature of HPC.

“This is a long-term game,” Stevens said in a recent webinar about the near and more distant future of computing in HPC. “If you’re interested in what’s happening next year, HPC is not the game for you. If you want to think in terms of a decade or two decades, HPC is the game for you because we’re on a thousand-year trajectory to get to other star systems or whatever. These are just early days in that. Yes, we’ve had a great run of Moore’s Law. Humanity’s not ending tomorrow. We’ve got a long way to go, so we have to be thinking about, what does high performance computing mean ten years from now? What does it mean twenty years from now? It doesn’t mean the same thing. Right now, it’s going to mean something different.”

That “right now” part that was central to the talk that Stevens gave is AI. Not only AI-enhanced HPC applications and research areas that would benefit from the technology, but AI-managed simulations and surrogates, dedicated AI accelerators, and the role AI will play in the development of the big systems. He noted the explosion of events in the AI field between 2019 and 2022, the bulk of the time spent in the COVID-19 pandemic.

As large language models – which are at the heart of such tools as the highly popular ChatGPT and other generative AI chatbots – and Stable Diffusion text-to-image deep learning took off, AI techniques were used to fold a billion proteins and improve open math problems and, there was massive adoption of AI among HPC developers. AI was used to accelerate HPC applications. On top of all that, the exascale systems began to arrive.

“This explosion is continuing in terms of more and more groups building large scale models and almost all of these models are in the private sector,” Stevens said. “There’s only a handful that are even being done by nonprofits, and many of them are closed source, including GPT-4, which is the best current one out there. This is telling us that the trend isn’t towards millions of tiny models, it’s towards a relatively small number of very powerful models. That’s an important kind of meta thing that’s going on.”

All this – simulations and surrogates, emerging AI applications, and AI uses cases – will call for a lot more compute power in the coming years. The Argonne Leadership Computing Facility (ALCF) in Illinois is beginning to mull this as it plots out its post-Aurora machine and the ones beyond that. Stevens and his associates are envisioning a system that is eight times more powerful than Aurora, with request-for-proposals in the fall of 2024 and installation by 2028 or 2029. “It should be possible to build machines for low precision for machine learning that are approaching half a zettaflop for low-precision operations. Two or three spins off from now,” Stevens said.

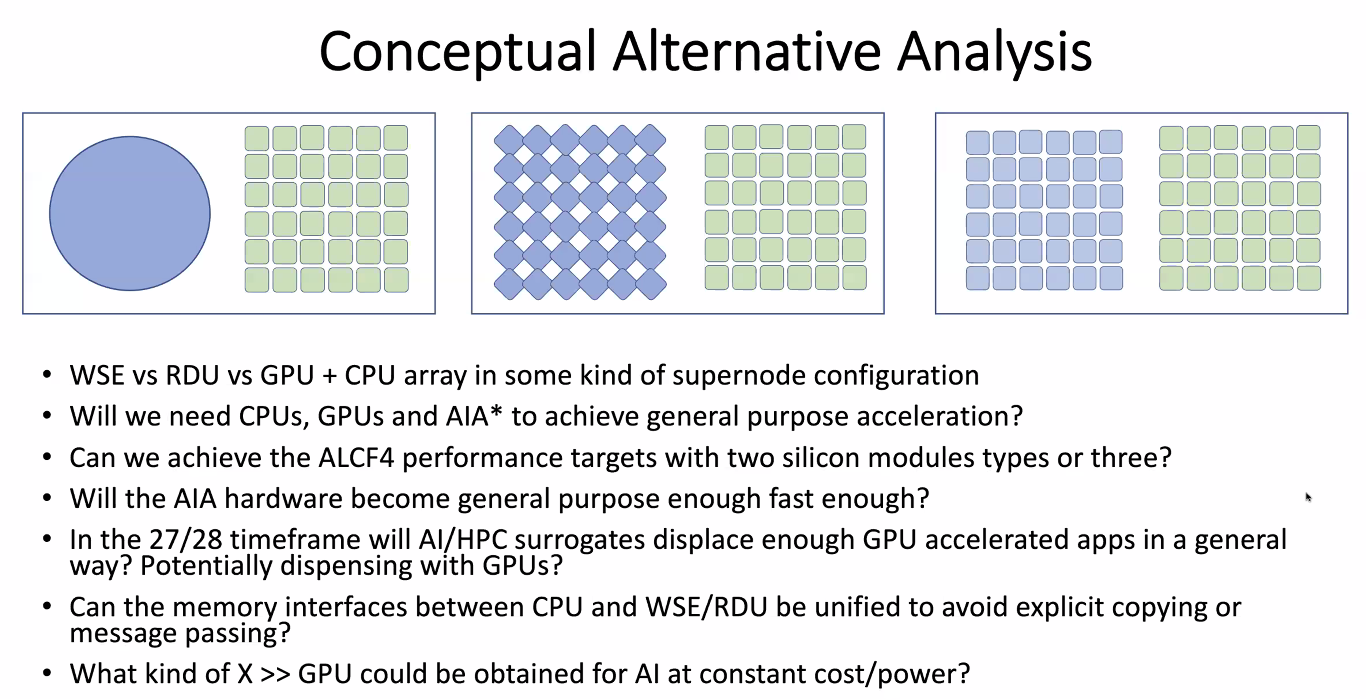

One question will be about the accelerators in such systems. Will they be newer versions of the general-purpose GPUs used now, GPUs augmented by something more specific to AI simulations or an entirely new engine optimized for AI?

“That’s the fundamental question. We know simulation is going to continue to be important and there’s going to be a need for a high-performance, high-precision numerics, but what the ratio of that is relative to the AI is the open question,” he said. “The different centers around the world that are thinking about their next generation are all going to be faced with some similar kind of decision about how much they lean towards the AI market or AI application base going forward.”

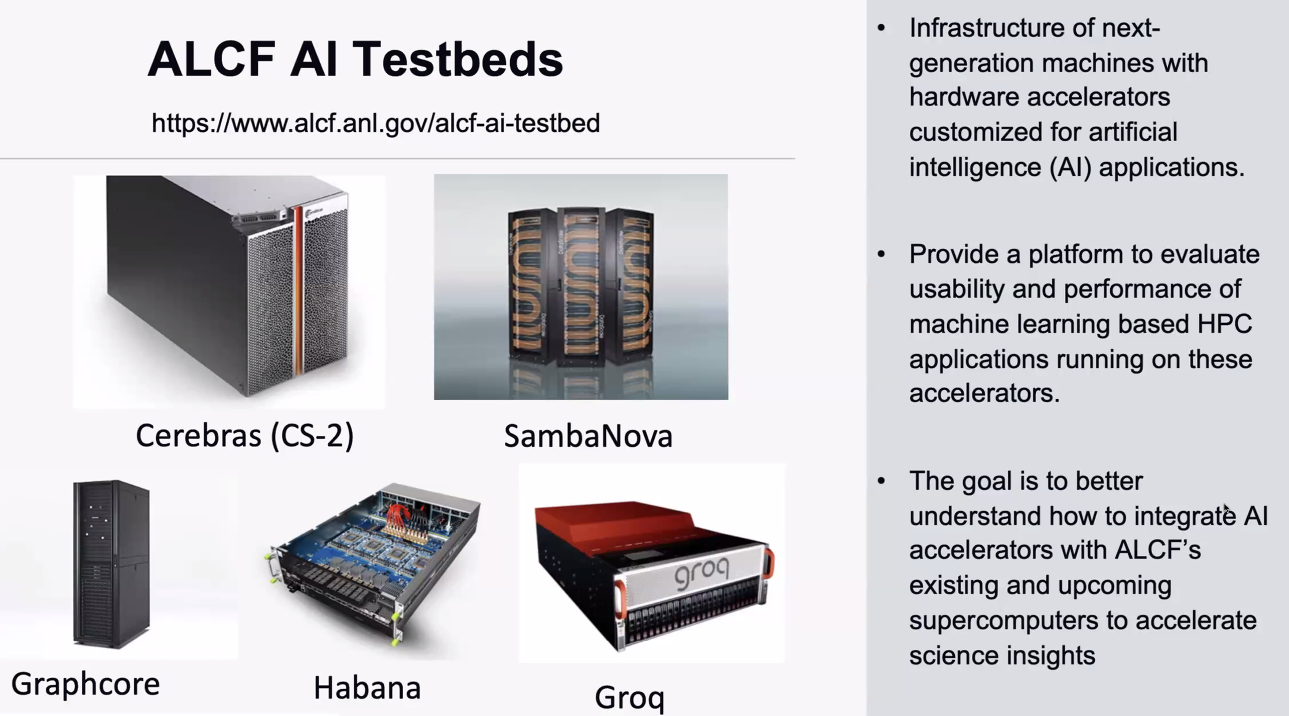

The ALCF has built AI Testbeds, using systems from Cerebras Systems, SambaNova Systems, GraphCore, the Habana Labs part of Intel, and Groq, that will include accelerators designed for AI workloads to see whether these technologies are maturing fast enough that they could be the basis of a large-scale system and effectively run HPC machine learning application.

“The question is, are general-purpose GPUs going to be fast enough in that scenario and tightly coupled enough to the CPUs that they’re still the right solution or is something else going to emerge in that timeframe?” he said, adding that the issue of multi-tenancy support will be key. “If you have an engine that’s using some subset of the node, how can you support some applications in a subset? How can you support multiple occupancy of that node with applications that complement the resources? There are lots of open questions on how to do this.”

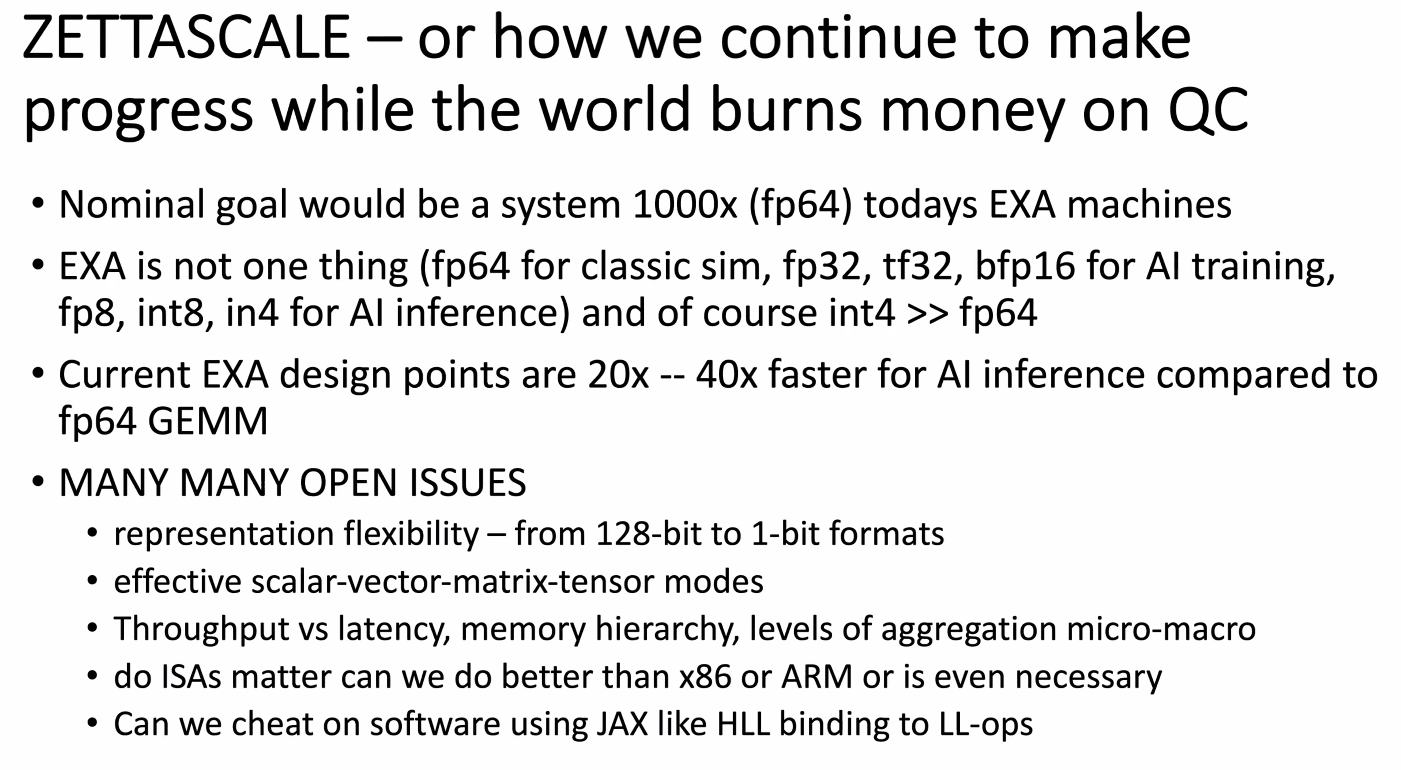

Some of those questions are outlined below:

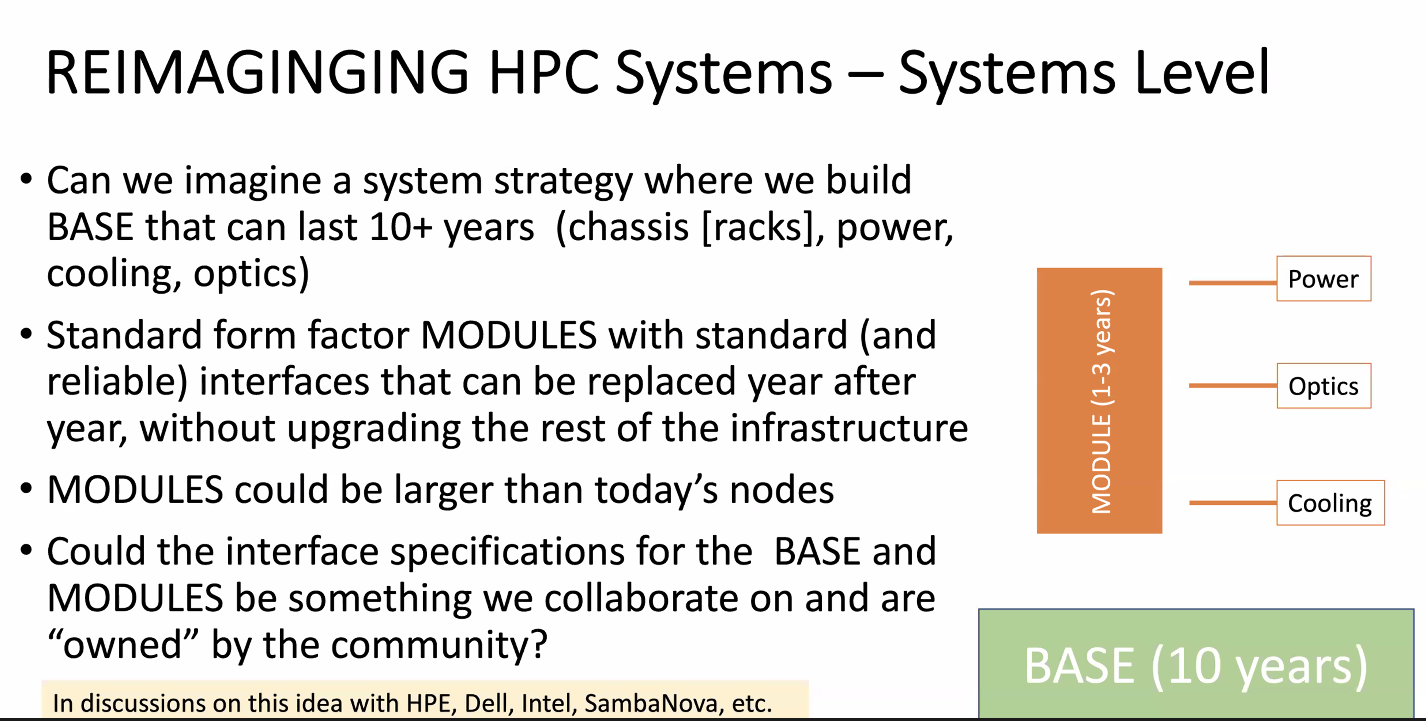

There also is the question of how these new big systems will be built. Typically new technology waves – changes in cooling or power systems, for example – mean major upgrades of the entire infrastructure. Stevens said the idea of a more modular design – where components are switched but the system itself remains – makes more sense. Modules within the systems, which may be larger than current nodes, can be replaced regularly without having to upgrade the entire infrastructure.

“Is there a base that might have power, cooling, and maybe passive optics infrastructure and then modules that are to be replaced on a much more frequent basis aligned with fab nodes that have really simple interfaces?” he said. “They have a power connector, they have an optics connector, and they have a cooling connector. This is something that we’re thinking about and talking to the vendors about: how packaging could evolve to make this much more modular and make it much easier for us to upgrade components in the system on a two-year time frame as opposed to a five-year timeframe.”

The ALCF is looking at these issues more aggressively now than in past several years, given the assets the Department of Energy’s Office of Science holds, such as exascale computing and data infrastructure, large-scale experimental facilities, and a large code base for scientific simulations. There also are a lot of interdisciplinary teams across domains and laboratories; the Exascale Compute Project comprised 1,000 people working together, according to Stevens.

Automation is another factor. Argonne and other labs have all these big machines and large numbers of applications, he said. Can they find ways to automate much of the work – such as creating and managing an AI surrogate – to make the process quicker, easier, and more efficient? That’s another area of research that’s underway.

While all this work is going on, churning in the at their own pace is the development of zettascale and quantum systems, neither of which Stevens expects to see in wide use for another 15 to 20 years. By the end of the decade, it will be possible to build at zettascale machine in low precision, but how useful such a system is will vary. Eventually it will be possible to build such a machine at 64 bits, but that’s probably not until at least 2035. (Not the 2027 that Intel was talking to The Next Platform about in October 2021.)

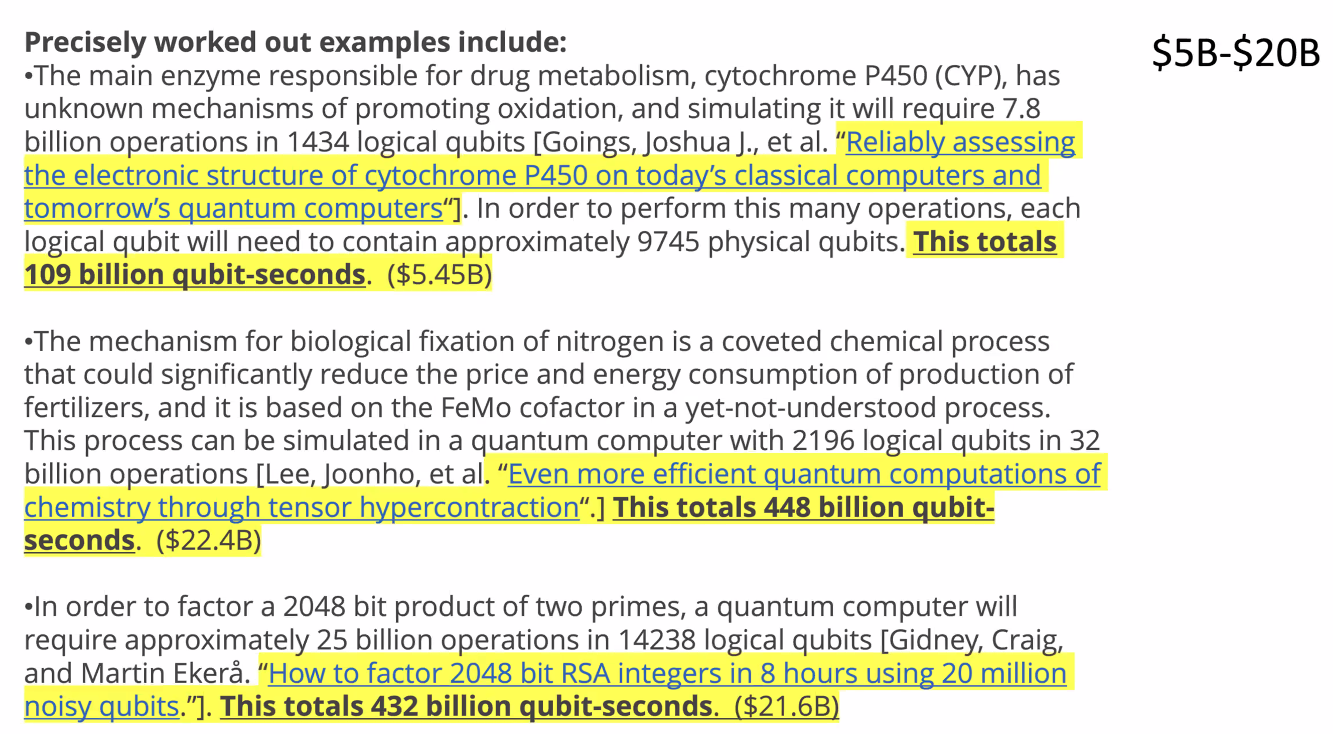

For quantum, the costs involved will be as important as the technology. Two weeks running an application on an exascale machine costs about $7 million of compute time. On a scaled-up quantum machine with as many as 10 million qubits – which doesn’t yet exist – running a problem could cost $5 billion to $20 billion, as shown below. That cost would have to come down in orders of magnitude to may it worth what people would pay to solve large-scale problems.

“What this is telling us is what we need to do is we need to keep making progress on classical computing while quantum is developing, because we can we know we can use classical computing to solve real problems,” he said. “This is really somewhat of an argument for that. We think that progress at zettascale is also going to take 15 to 20 years, but it’s a timeframe that we’re fairly confident in and we know we can actually use those machines.”

All of which plays back to the initial theme: innovation in HPC takes a long time. Quantum-classical hybrid systems may eventually be the way to go. The industry may have to switch computation substrates to something that is molecular, optical, or has yet to be invented. Engineers, scientists, and others will need to thing expansively.

“The thing that’s changing the landscape the fastest right now is AI and we’ve barely scratched the surface on how we might re-architect systems to really be the ideal platform for doing large-scale AI computation,” Stevens said. “That could be such a game changer that if we had this conversation 10 years from now, maybe something else happened. Or maybe we’re right on. I guess it’ll be somewhere in the middle. It’s going to be a long game and there will be many disruptions and the thing we have to get comfortable with is figuring out how to navigate the disruptions, not how to fight the disruptions, because disruptions are our friends. They’re actually what’s going to give us new capabilities and we have to be aggressively looking for them.”

Superluminal quantum teleportation, to/from orbiting geostationary datacenters, should solve the bulk of these AI challenges (a similar approach was demonstrated in the prescient 1990 documentary “Terminal City Ricochet”, where casting selected kitchen appliances to play the role of computational hardware — in those early, near-modern, but not quite, days). The drag that gravity is, along with reality, did bring them crashing down to earth, eventually, every now and then, much unlike AI hallucinations. But, as Rick James said, in Dave Chappelle’s True Hollywood Stories, starring Charles Murphy: “[AI]’s one hell of a [hallucinogenic]!” (or justabout). Which brings me to my main tangential point: if Mark Zuckerberg got gold medals, this Spring, in Brazilian Jiu-Jitsu (BJJ), as a white belt, which is the lowest level, starting belt, was he, as Rick James thought of Charles Murphy, “practicing karate with them little kids!”? Don’t drink the frog soup (in excess) … and … exhale …

It’s hard to argue against this plan for Argonne, given Rick Stevens’ superb credentials (automated reasoning and high-performance symbolic computation — Genesereth and Nilsson on steroids), and with JDACS4C and CANDLE (biomimetic AI) being developed for problems that are challenging to humans, and expected to run at Exascale on Aurora, and on Cerebras wafer-scale units. An underlying target (not at all apparent) may be the development of exocortices, that might do for our brains what exoskeleta do for our limbs (or not).

When it comes to locomotion, we found early on that the evolutive design of our limbs was not necessarily the best suited for the job, and developed a much more effective, non-biomimetic, alternative: the wheel. We also developed very efficient non-biomimetic washing machines (without arms), and plenty of other non-bio-inspired devices to free us from the limitations of our “unfortunate” limb+brain biodesign (rotary motors being an example of something seldom found in nature, except maybe in dinoflagellates).

Our brain is terrible at computations. It is excellent at recognizing hot-dogs in a crowd, great at speech-recognition in noisy-ish environments, and good at controlling our flailing limbs on uneven ground, but crap at logic and math — they require the longest training time to properly master, even approximately. Why on earth then, do we want to build bio-inspired machines, that mimic brain functioning (to some rough extent) to help us with math and logic? We’ve developed very successful (accurate, fast, and efficient) non-biobased tools to help us with this already (slide rules, electronic calculators, CPUs+RAM, predicate calculus, normal calculus, numerical algorithms, Fortran, LISP, Prolog, …) and it is not entirely clear (to me) what additional benefit a bio-inspired exocortical helmet could bring to this field, beyond fashionable headgear (with a huge powerpack?). It may, however, help deal with ALS, Alzheimer’s, Parkinson’s, … maybe.

That a biomimetic AI can be made so large, and trained so expensively, that it can correctly add two small integers, is an impressive feat, to be sure. But, as the grand voodoo priest of the horror-backpropagation plague nearly said, I doubt that an approach that makes this particular hammer ever bigger, represents the best use of potentially scarce resources, GPUs, and PhDs (but it is great fun, in a nerdy, mostly workaholic, kind of way!). Indoor decoration, EDA floorplanning, and the Argonne projects, are probably good application areas though, along with stockmarket crystal ball fortune telling (which remains the killer app).

Still, it’s best to keep an open mind on this long road to the Zettascale (just a Saturday opinion, between Pina Coladas … 8^b)!

Well said, and thank you.