Scientists at the Institute for Computational Cosmology (ICC) in England spend most of their time trying to unravel the secrets held in the deepest parts of space, from the Big Bang and the origins of the universe to mysteries like dark matter. Much of this work is done through simulations run on the COSMA lineup of supercomputers.

The newest system in the family, the COSMA8, has more than 50,000 compute cores and 360 TB of RAM. COSMA7 is a large experimental system, with more than 12,000 Xeon SP 5120 CPU cores and 250 TB of memory across 452 nodes. The massively parallel workloads that run on the systems – doing research into everything from black holes and planet formation to collisions – are extremely compute and data intensive.

The ICC researchers run huge simulations of the universe that start with the Big Bang and propagate forward over time and the data from the simulations is brought together with what they see through their telescopes for a more complete picture of the evolution of the universe, according to Alastair Basden, technical manager of COSMA HPC cluster at Durham University.

“We can put models into our simulations of things that we don’t understand, stuff like dark matter and dark energy,” Basden said during a recent virtual meeting with journalists and analysts. “By fine tuning our models and parameters that we input into the simulations, we try to match the output of the simulations to what we see in the sky with these telescopes. This takes quite some time, but the simulation needs lots of compute time on tens of thousands of compute cores. You need a lot of compute, but you also need a fast network to connect all these different compute nodes.”

In HPC, more compute and storage can always be added to a cluster. The network can be a particular challenge. With emerging workloads like artificial intelligence becoming more prominent, demand for compute resources from disparate parties is always an issue and “noisy neighbors” affecting the performance of other workloads on the same system, networks in the HPC space are often dealing with issues of bandwidth, performance degradation, and congestion.

“Whatever goes on in a node over here also affects what’s happening on the node over there, all the time with transferring data around the cluster,” Basden said. “Therefore we need a faster network interconnect.”

In the models of the universe the ICC creates, a change in a star in one section will impact other stars in far-flung parts of the simulation. It’s important that the messages make their way across the model as quickly as possible.

That’s where Rockport Networks comes in. We met the ten-year-old startup when it came out of stealth in November 2021 with a switchless network architecture designed to meet the rising performance needs of enterprises and HPC organizations running those AI workloads. The ICC, housed at Durham University, is working with Rockport to test the architecture on the COSMA7 supercomputer to see if it can reduce the impact of network congestion on the applications it runs, including modeling codes that will run on future exascale systems.

The Rockport installation is being funded by the Distributed Research using Advanced Computing (DiRAC), an integrated supercomputing facility that has four sites around the country, including at Durham, and the ExCalibur project, a five-year program that is part of the UK’s exascale efforts that focuses mostly on software but does have money for novel hardware architectures. The money for the Rockport project is aimed at testing unique network technologies.

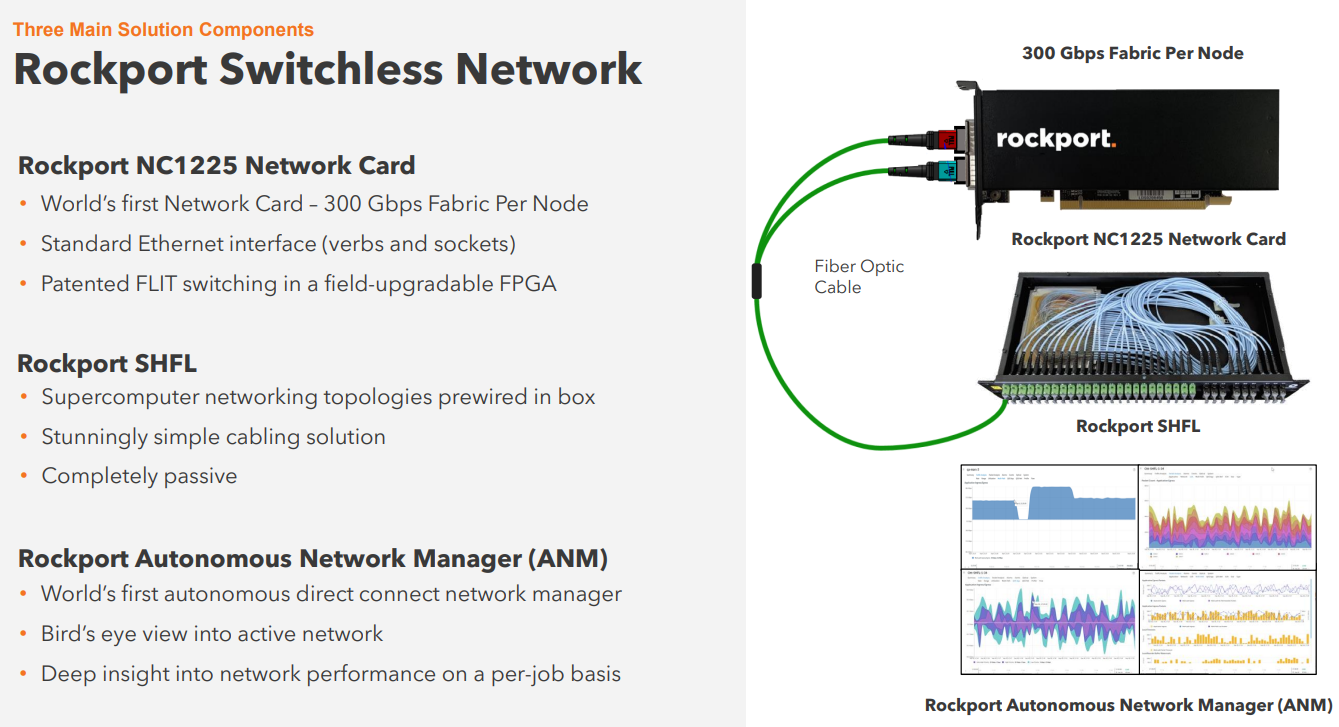

Rockport fits that definition of unique. As we reported earlier, the Rockport architecture does away with traditional switches to create a highly scalable and efficient network that addresses the cost, latency, bandwidth, and congestion issues in typical networks. The company does this by putting the switch functions in its endpoint nodes that include twelve network points per node.

“We’ve moved away from a centralized switch approach to distributed switching, where every single node in the network contains switching functionality,” Rockport chief technology officer Matt Williams said. “Instead of being connected to centralized switches, our loads are directly connected to each other through twelve dedicated links in each of our nodes. No external switches and nodes are directly connected, which gives us architecturally tremendous performance advantages when we’re talking about performance-intensive applications. Directly connected nodes, switching functionality distributed down to the end points. In our environment, nodes don’t connect to the network. The nodes are the network.”

Rockport’s Switchless Network includes the NC 1225 network card that creates a 300 GB/sec per-node fabric and includes the standard Ethernet interface and the passive SHFL device that houses the HPC-level networking. An FPGA implements all the technologies. The Autonomous Network Manager oversees the direct connect network and rNOS operating system automates the discovering, configuring and healing of the system and ensures the best path through the network is taken by each workload.

The ICC has been testing Rockport’s infrastructure for more than a year on the Durham Intelligent NIC Environment (DINE), an experimental system that includes 24 compute nodes and Rockport’s 6D torus switchless Ethernet fabric. The Rockport technology was put on 16 nodes and the ICC has been testing the technology and benchmarking the results.

The scientists ran code on the system and artificially increased the congestion in the network to simulate having other codes running at the same time. The ICC ran different scenarios – with Rockport’s technology running on varying numbers of nodes and changing numbers of noisy neighbors running on nodes increasing congestion. Scientists found that performance did not degrade as the network congestion increased, according to Basden.

They also found that it took workloads 28 percent more time to reach completion than those on the Rockport nodes.

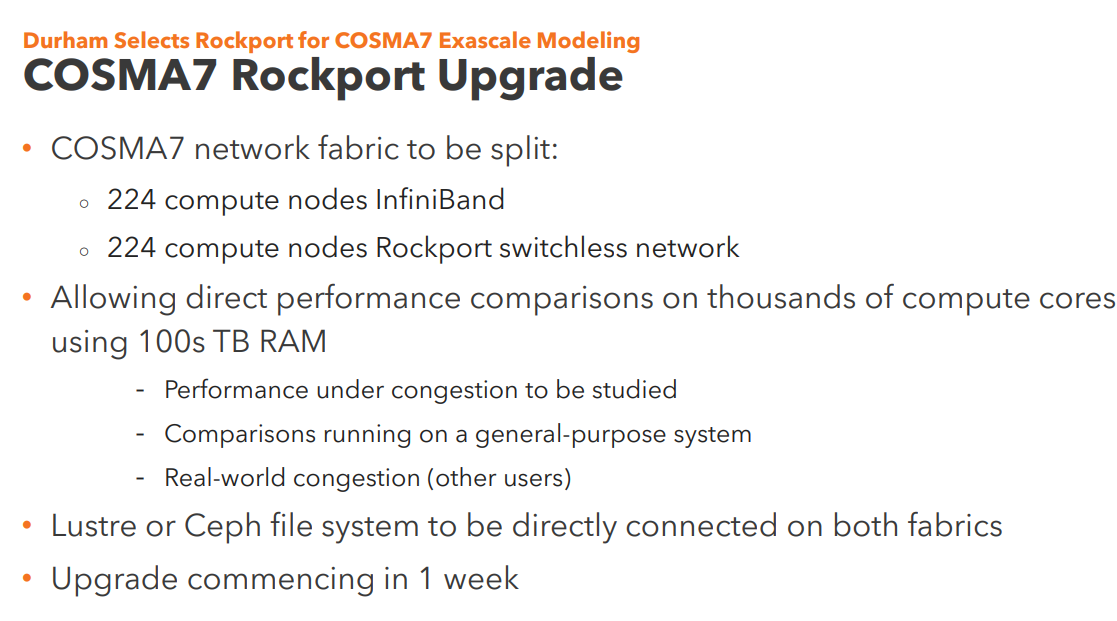

With COSMA7, Basden will split the supercomputer in half, with 224 nodes running Rockport’s Switchless Network architecture and the other 224 using InfiniBand. Lustre or Ceph files will be directly connected on both fabrics and scientists will be able to directly compare the two networks running on thousands of compute cores using 100 TB of RAM. The performance of workloads on congested networks will be studied, as will comparisons of them running on a general-purpose system.

“What’s important is that these nodes are going to be identical – identical RAM, identical processors, etc.,” Badsen said. “We’ll really be able to do direct comparisons of the codes in a quiet environment, so we can keep other users off and say, ‘Just run this code.’ We’ll artificially introduce congestion but also in unconstrained environments and then we’ll be able to get a feel for which network is generally doing better.”

They will also ramp up the number of cores and RAM and “run real scientific simulations and looking at the performance of these under the congestion,” he said.

Williams is confident Badsen and the ICC will see significant improvements when running Rockport.

“When we think about performance under load, which is really what matters, it’s not the data-sheet best-case scenario, it’s how well do you control performance under very heavy load,” he said. “Let’s break all that packets up into small pieces. Allow us to take these critical, latency-sensitive messages and move ahead in the queue, which allows us to ensure that those critical messages that drive workload location time always arrive first as they get through the network.”

This goes back to the importance of messages from one part of these large simulations are delivered to other parts as quickly as possible. In tests, messages in Rockport’s architecture take 25 nanoseconds to reach their targets. That compares with 15,000 nanoseconds with InfiniBand and 220,000 nanoseconds with Ethernet.

In institutional and enterprise HPC environments, “the network is the problem,” Williams said. “Compute and storage are getting fast. The network really hasn’t kept up. When you look at how a traditional spine-and-leaf network architecture provides access to the aggregate bandwidth, it is pretty inefficient in doing that and you can get up to 60 percent less bandwidth than you believe you should get. What we see is because of the congestion on that network, because of the challenge of effective use of that bandwidth, you can get a degradation of workload performance. In an idle network, you can get very good performance for your code. As other people start using it, you get that congestion and your workloads slow down. We’ve seen between 20 or 30 percent impact due to those noisy neighbors. We have a very different kind of architecture. We want to make sure our technology is easy to adopt, easy to deploy, easy for people to use.”

Be the first to comment