With enterprises increasingly embracing HPC environments to help manage the tidal wave of data that’s washing over them and the rise of AI and machine learning workloads, tech vendors selling into the market need to demonstrate both flexibility and adaptability.

Most of these organizations are going to start slowly with such emerging technologies, evaluating what they need and then trying out compute and storage products to see what works best for them. Things change after that, when they move from kicking the tires into full-blown adoption. That’s when vendors need to ensure they have the products on hand to meet the evolving demands.

“What we think is going to happen as a result of that is that for a while they’re all going to start experimenting,” Curtis Anderson, software architect at HPC storage vendor Panasas, tells The Next Platform. “They’re going to see if there’s enough value there for them, given their data, their needs, and for those that decide there is value, then they’re going to go into production. From our point, there’s a very distinct divide between somebody playing – they’ve got a terabyte or two of data and a couple of GPUs – vs. going into production. Some of those people, when they go to production, their data is still a couple of terabytes, maybe 100 TB. But we heard somebody recently who said, ‘I’ve got a lot of data. It’s like 300 or 400 gigabytes.’ That’s a lot of data – except not for us.”

Panasas knows something about adapting to the rapidly changing HPC environment. The company was founded in 1999 as a hardware company. However, over the past few years, it’s shifted its focus to software, to the point where it sells its software – with the PanFS file system at its foundation – running on systems from Supermicro.

At the same time, over the past ten years, the company has looked to the enterprise HPC space as its key growth market, a trend backed up by research. According to a 2020 study conducted by Hyperion Research and commissioned by Panasas, the worldwide market for HPC storage systems will grow an average of 7 percent a year, from $5.5 billion in 2018 to $7 billion in 2023, driven in large part by the ongoing digitization of businesses and the rise of AI in both established and newer HPC markets.

The study also found that HPC storage system requirements are becoming increasingly complex and diverse.

Anderson says the company is “focused on the intersection point between commercial enterprise customers and traditional HPC workloads. The central observation was an HPC storage system that is down has zero bandwidth. How can it be high-performance? So having the system up and stable and reliable became a central piece of our offering of the value proposition for the company. That has attracted Fortune 500 customers who have HPC practices in them. It is not as much value to the national labs. They value open source because they have a mandate of sorts from their charter to go and invest in the storage to make it faster. We’re a commercial product. The fact is that the enterprises are adopting AI. AI grew up in the HPC environment.”

The enterprise demand for HPC technologies to run their growing AI workloads will only increase, he says. A problem has been that while many organizations are starting AI projects, a high percentage stop before they go into production, in large part because they are unsure how to use it. They’re wrestling with different “species” of AI systems, from convolutional neural networks (CNNs) to generative adversarial networks (GANs). However, Anderson points to the growth of graph neural networks (GNNs) as a promising sign.

“That applies neural network technologies to more graph problems, like scheduling, truck driving or solving for the shortest path between here and there,” he says. “That kind of the development will open the door for these people who start a project, but they can’t figure out how to make value out of it. The new species [of neural networks] will help you get value out of that.”

Overall, Panasas isn’t overly concerned about its place in enterprise HPC. More than half of its customers have been running an AI project on its storage for several years. For those with HPC installations, it’s been easy to spin up such a project: buying a couple of GPUs, installing some nodes and trying it out. Those running the projects have not complained about the performance of Panasas’ technologies.

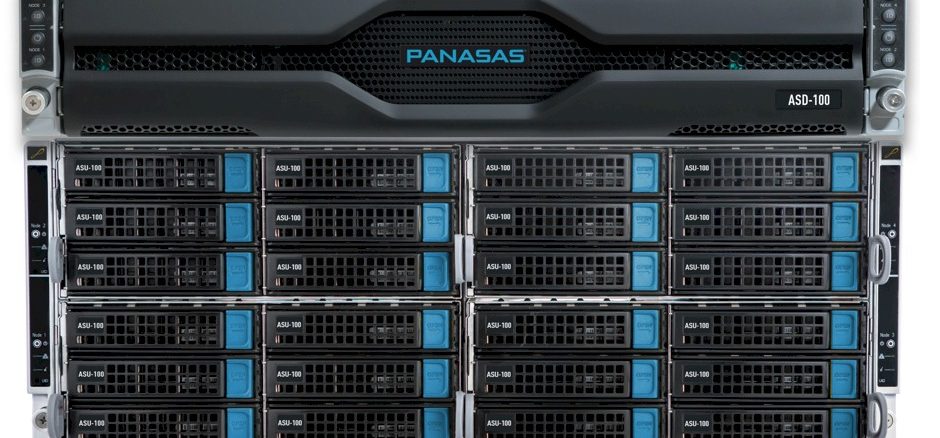

However, as more of these experimental projects turn into production deployments, Panasas did see a shortcoming in its portfolio. The vendor was essentially a one-product company, selling the ActiveStor Ultra, a midrange system that has gone through multiple iterations, runs the PanFS file system, and is aimed at companies running mixed workloads with large and small files and metadata operations. Yet more than half of AI projects start small – 100 TB to 200 TB, or less – and then grow.

To address these evolutions, Panasas earlier this month grew its portfolio to three products, adding the all-NVMe flash system ActiveStor Flash for smaller workloads like AI and machine learning training, life sciences and electronic design automation projects, and the high-end ActiveStor XL for large-scale environments that include workloads with cooler datasets and large file sizes. Those can include seismic exploration, scientific and government research, and manufacturing.

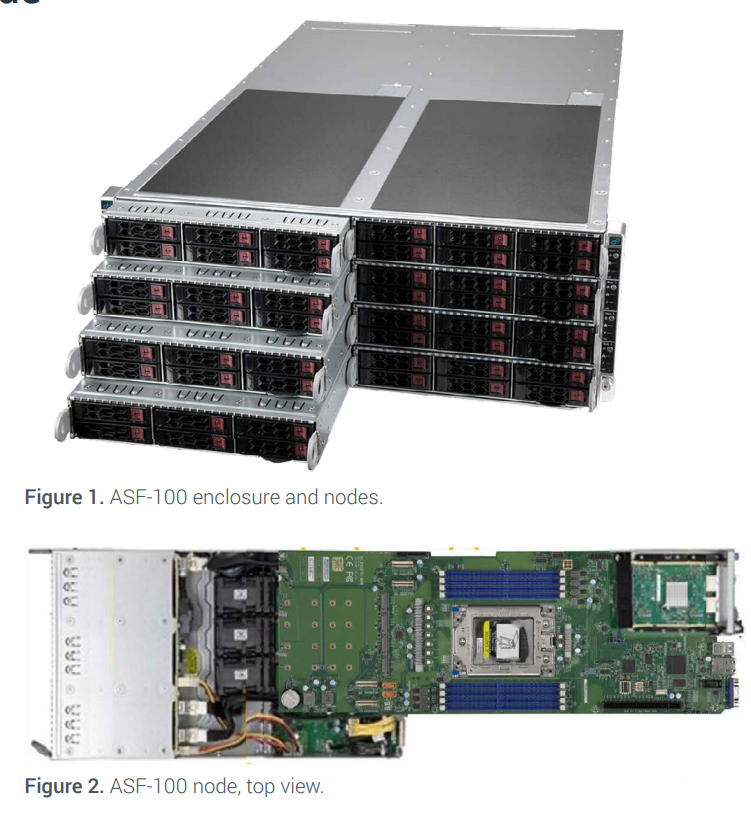

“We needed something that would come down in full capacity from our current three-quarters-of-a-petabyte offering,” Anderson says. “That’s the smallest you can get from the current offering, so we went with an all-flash product – again, on Supermicro hardware – with the same PanFS, the same dynamic data acceleration internals inside it. It starts at 200 TB and the rationale is two-fold. One is because we do scale-out, there’s a minimum of eight of these nodes for us, but you could string multiple chassis together and use it for a high-performance scratch base if you’re traditionally a HPC customer or you could just buy the 200 TB and create an AI project for your life sciences project. Both of those start much smaller.”

ActiveStor Flash comes in a 4U, eight-node, 19-inch rackmount chassis that holds four or eight storage nodes. Each node is managed by the PanFS software.

ActiveStor Ultra XL includes eight hard drives, rather than the six in the Ultra system. Another change is the use of larger NVMe SSDs, rather than SATA SSDs, for both small files and metadata. It delivers 5.76 PB in a single 42U rack.

“It’s a higher capacity, more capacity-optimized product that works great for oil and gas, which has pretty much only large file workloads, but it also works for a less frequently accessed, slightly cooler archives,” he says. “It’s still high performance, but it’s not a cold archive. It’s lower dollars per terabyte.”

With the new systems, “this is the first time in the company’s history that we’ve had an honest-to-goodness portfolio with a range of different capabilities for different customers as opposed to just that one,” Anderson says. “We’ve had several different products in the market at the same time, but they were all just a mixed-workload, midrange product.”

Panasas sees the expanded portfolio as a way to grow its presence in the dynamic enterprise HPC space. Some companies want only all-flash systems, while others are looking for more flexibility and options. The new systems, coupled with the ActiveStor Ultra stalwart, mean that Panasas can compete for more business than the single Ultra product allowed.

More changes could be on the way. Since shifting its focus to software, the vendor has put the ActiveStor Ultra software onto Supermicro systems. As the transition to a software company accelerates, Panasas is likely to port its software to more hardware platforms, Anderson says. The software will become more portable and the vendor can move it rapidly with the new class sizes.

“We’d like to be able to provide it on the hardware of their choice,” he says. “The reason we call it portable rather than software-defined is we look at a piece of hardware from an engineering perspective and look at the failure modes of that hardware. We change our software, if we need to, to include optimal recovery paths for each. That’s part of the appliance model. We want to stay with that appliance model, but we don’t want it to be just appliances from Supermicro hardware.”
