Renee James knows about processors and she knows about the cloud. In a career at Intel that spanned more than 28 years and saw her rise to become president of the chip giant, she also ran a range of divisions within the company, including HPC, software and the cloud.

She left Intel and founded Ampere Computing in 2017. Ampere is one of a number of chip makers building processors optimized for a rapidly changing IT environment, one that is stretching out from central datacenters to the cloud and the edge and that puts increasing emphasis on factors beyond chip performance, including latency, power efficiency and platform scalability.

The company acquired the assets of Applied Micro, one of the pioneers in developing processors based on the low-power Arm architecture with its X-Gene chips, and has since rolled out its 80-core Altra chip and, last year, its 128-core Altra Max, both based on Arm’s Neoverse design. This year, the company plans to unveil a 5 nanometer chip based on its own in-house Arm-compliant cores.

For decades, James was a vocal proponent of the Intel architecture and of the company’s Moore’s Law-driven improvements to its chips. However, the compute environment is changing. Not only is it increasingly distributed and cloud-based, but the development tools and software that enterprises are using also are changing. Developers are building with microservices, containers and Kubernetes controllers. Processor designs and architectures need to change as well to keep up with the evolving demands of organizations as they adopt the cloud and the edge.

“How do we know we’re in a new phase of compute?” James, now chief executive officer at Ampere Computing, asked during a talk at the recent virtual ISSCC 2022 event. “We know because the underlying software approaches changed, the tools have changed, the way we build software, the way software is distributed and how it’s consumed through the cloud is fundamentally different than in the era of server computing. One of the hallmarks of a new phase of compute is you use the technology that’s available to you to build a new operating environment. That’s exactly what we’ve been doing in the cloud today. The industry is taking the X86 architecture and the approach pioneered over 40 years ago for the building of the PC architecture and applied it to cloud computing and the modern datacenter.”

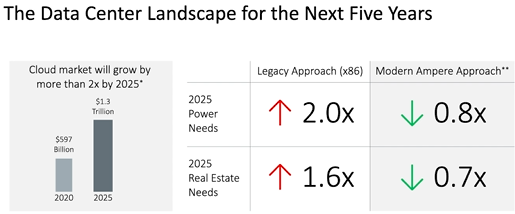

That’s not going to cut it anymore, she said. Continuing to use the same architectures, in this case those from Intel and AMD, will create further problems around power consumption and datacenter space, and the semiconductor sector needs to come up with sustainable products that reduce the carbon footprint. James pointed to the expected growth of the cloud datacenter market: Synergy Research Group said that global enterprise spending on cloud infrastructure services reached $178 billion in 2021, a 37 percent year-over-year increase.

“If the datacenter providers continue to deploy legacy solutions, it will consume two times the power and 60 percent more real estate than what’s being consumed today,” she said. “On the other hand, if they use cloud-native solutions like the ones designed by Ampere, they can deliver similar performance – if not even better performance – at a fraction of the power and real estate footprint.”

Ampere Computing is one of a number of vendors looking to leverage the Arm architecture for hyperscale, HPC and cloud datacenters, and, as we have reported, the companies running those datacenters have been Ampere Computing’s target customers since its founding. Some companies, like Amazon Web Services, are rolling out their own chips (in Amazon’s case, the Graviton line), and Nvidia also is looking to expand its portfolio, especially in the wake of the collapse of its ill-fated $40 billion bid for Arm. But no one is the clubhouse leader yet in the effort to bring Arm into the datacenter. It’s still a mashup of vendors.

There is also the expanding world of accelerators, from GPUs to FPGAs, and workload-optimized silicon like data processing units (DPUs). At the same time, Intel is looking to re-establish itself as the top chip maker after several years of stumbles, and its chips include GPUs, starting with Ponte Vecchio, and other silicon.

So it’s going to be an active and highly competitive semiconductor market. At ISSCC, James and several other Ampere Computing officials spoke about the need for processor architectures to change along with enterprise demands, which in turn is pressuring chip makers to change how processors are designed.

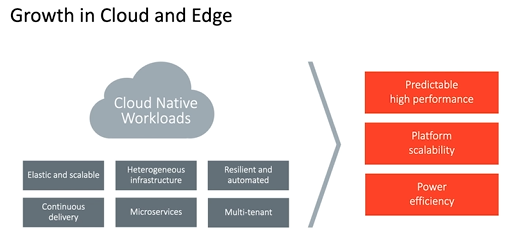

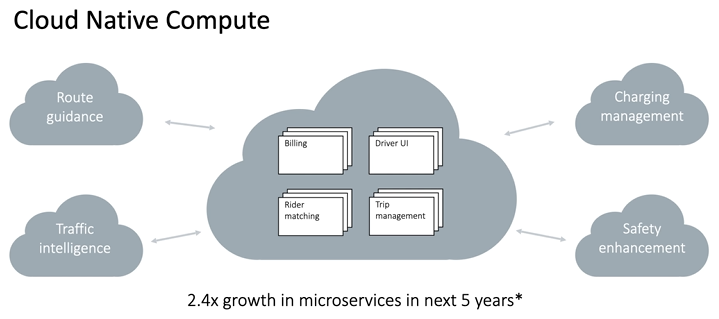

Ampere Computing chief product officer Jeff Wittich said organizations are moving to the cloud because services can be seamlessly deployed across vast hardware infrastructures. Developers are using microservices to build more innovative applications more quickly and to scale individual components more easily, which is important for companies in sectors such as ride hailing, where thousands of microservices can be scaled to handle everything from matching riders and drivers to mapping the rides to billing.
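To make "scaling individual components" concrete, here is a minimal sketch of how a Kubernetes Horizontal Pod Autoscaler sizes each microservice on its own, using the replica formula from the Kubernetes documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with a tolerance band that suppresses small adjustments. The service names, utilization figures and 60 percent CPU target are hypothetical illustrations, not data from Ampere or any ride-hailing operator.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count per the HPA formula; skip changes within the tolerance band."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave the service alone
    return math.ceil(current_replicas * ratio)


# Hypothetical microservices: (current replicas, average CPU utilization in percent)
services = {
    "rider-driver-matching": (10, 90.0),
    "mapping": (6, 45.0),
    "billing": (4, 60.0),
}
TARGET_CPU = 60.0  # hypothetical utilization target

for name, (replicas, cpu) in services.items():
    # Each service is scaled independently of the others.
    print(name, replicas, "->", desired_replicas(replicas, cpu, TARGET_CPU))
```

Here the busy matching service grows while mapping shrinks and billing stays put, which is the per-component elasticity that puts a premium on CPUs delivering predictable performance as load shifts.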

Artificial intelligence and machine learning further improve such services, and much of the inferencing is being done at the edge to drive down latency. High performance and low latency will continue to be important as technologies such as autonomous vehicles come into play.

“As cloud infrastructure becomes more distributed and less centralized to meet critical, low-latency requirements, having a power-efficient processor that can fit in the more constrained power and thermal envelopes at the edge while still delivering high compute performance is a top requirement,” Wittich said. “It is critical that the underlying hardware enables the desired elasticity, resiliency, automation and portability required by cloud service providers and cloud-native developers. For this, you need the right type of CPU, one that delivers predictable high performance.”

That predictability lets the cloud deliver the necessary performance at all times without degrading service or platform scalability, and lets it scale out to the edge, where space is even more constrained.

That comes with some key architectural requirements that differ from what was typically needed in the decades leading up to the cloud, according to Ampere Computing chief technology officer Atiq Bajwa. The focus traditionally was on peak performance for workloads that tended to be large, monolithic software applications, and density was a key consideration.

However, the push toward atomic-scale process technologies has slowed the cadence of, and the generation-to-generation gains in, performance, density and power, Bajwa said, adding that process design rules have become more complex.

“Meeting the performance and functionality needs of today’s cloud datacenters increasingly requires that we assemble multiple pieces of silicon together in a package,” he said. “Performance – and consistent performance – under load with multiple independent and potentially interfering workloads all running on a single server. Power efficiency is a first article, not just because of its impact on performance and total cost of ownership, but also because of its implications on sustainability. Finally, the software landscape is also quite different, with workloads increasingly composed of collections of software packages and an array of microservices deployed in [virtual machines] or containers with multi-tenancy the norm. With all that has changed, what does it mean to build cloud-native products?”

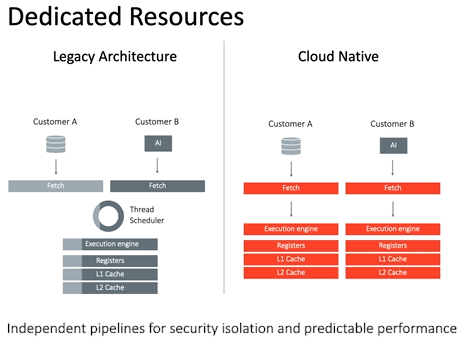

For Ampere Computing, that has meant taking a clean-slate approach to both what it built and how it built it. The architecture needs to provide dedicated hardware for performance, consistency and security isolation; clock frequency must be consistent; and delivering performance and consistency requires a cache hierarchy and memory subsystem with high, consistent bandwidth to feed the CPU cores at scale and under heavy load.

The new chips demand large-scale integration, high-volume manufacturing and higher bandwidth.

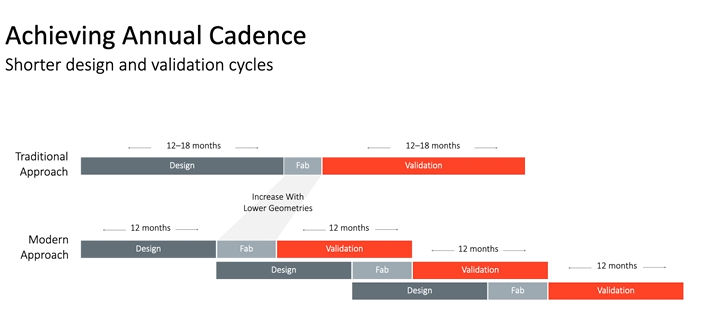

“With the increasing complexity, validation and emulation become critical pieces to ensure high quality of the design,” said Mitrajit Chatterjee, senior vice president of silicon engineering. “All these designs must converge faster and seamlessly to meet a regular and rapid cadence of cloud software designs.”

Yesh Kolla, vice president of engineering at Ampere Computing, noted that to keep pace with compute density needs, the company uses smaller disaggregated dies integrated at the package level.

“By disaggregating into chiplets, we can continue increasing the overall chip-level transistor count by increasing the number of die chiplets while the individual die areas can be optimized for yield and manufacturability,” Kolla said. “Disaggregation does push the integration challenges to other areas. Advanced packaging techniques must adapt to efficiently integrating multiple dies. Die-to-die link latency and bandwidth must continue improving, while also increasing signal density and reducing power. Manufacturing, testing, validation, and firmware has evolved to manage multiple dies in a package.”
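Why smaller dies help yield can be sketched with the textbook Poisson yield model, Y = exp(−A·D0), where A is die area and D0 is defect density. This is a simplified illustration we are swapping in, not Ampere’s own yield model; the defect density, the 600 mm² monolithic die and the four-way split are hypothetical numbers, and packaging yield and die-to-die interface overhead are ignored.

```python
import math


def poisson_yield(die_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a simple Poisson model."""
    return math.exp(-die_area_cm2 * defect_density_per_cm2)


D0 = 0.1                    # hypothetical defects per cm^2
MONOLITHIC_AREA = 6.0       # hypothetical 600 mm^2 single die, in cm^2
CHIPLETS = 4
CHIPLET_AREA = MONOLITHIC_AREA / CHIPLETS  # same total silicon, split four ways

mono = poisson_yield(MONOLITHIC_AREA, D0)
chiplet = poisson_yield(CHIPLET_AREA, D0)

# With chiplets tested before assembly ("known good die"), a defect scraps only
# the small die it lands on, so the usable fraction of wafer silicon rises.
print(f"monolithic die yield: {mono:.3f}")
print(f"per-chiplet yield:    {chiplet:.3f}")
```

The gain depends on testing each chiplet before packaging: the chance that four untested chiplets are all good is chiplet⁴, which equals the monolithic yield. That is one reason, as Kolla notes, testing and validation have had to evolve for multi-die packages.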

Ampere Computing also improves performance by choosing designs that minimize communication and routing, finding logical hierarchies efficiently, working through design partitioning and using selective, highly customized circuits to speed up critical paths, among other techniques, he said. The company also works with its foundry partner, Taiwan Semiconductor Manufacturing Co., to reduce the power consumption and footprint of the chips, and is developing advanced power management capabilities and innovative I/O designs.

The company also wants to keep a steady cadence of new chips every year, Chatterjee said.

“Design convergence and product design cycle is where everything comes together,” he said. “Keeping up with the server demands, the cloud server semiconductor is executing at a rapid pace. At Ampere, our infrastructure is now built to support multiple design stage overlaps, sharpening cycle times by planning for silicon success and developing all system collaterals. For post-silicon success, key is IP validation, emulation, firmware and platform readiness play key roles.”
