While there is a lot of hype, there is no question that quantum computers are going to revolutionize computing. But we are still in the early stages of exploring quantum development, and truly useful quantum systems are still years away. That does not mean that quantum lacks opportunities, however, and companies such as Dell and quantum startup IonQ are exploring the possibilities of hybrid systems that combine classical computer systems with quantum hardware.

IBM currently holds the record for the world’s largest superconducting quantum computer: its Eagle processor, announced last November, packs in 127 quantum bits (qubits). But many experts believe that machines with far more qubits will be needed to overcome the unreliability of current hardware.

“Superconducting gate speeds are very fast, but you’re going to need potentially 10,000 or 100,000 or even a million physical qubits to represent one logical qubit to do the necessary error correction because of low quality,” said Matt Keesan, IonQ’s vice president for product development.

Keesan, speaking at an HPC community event hosted by Dell, said that today’s quantum systems suffer badly from noise. We are therefore in the noisy intermediate-scale quantum (NISQ) era, unable yet to realize the full power of quantum computers, because fully fault-tolerant machines require far more qubits.

The NISQ era is projected to last at least another five years, until quantum systems mature enough to support thousands of qubits.

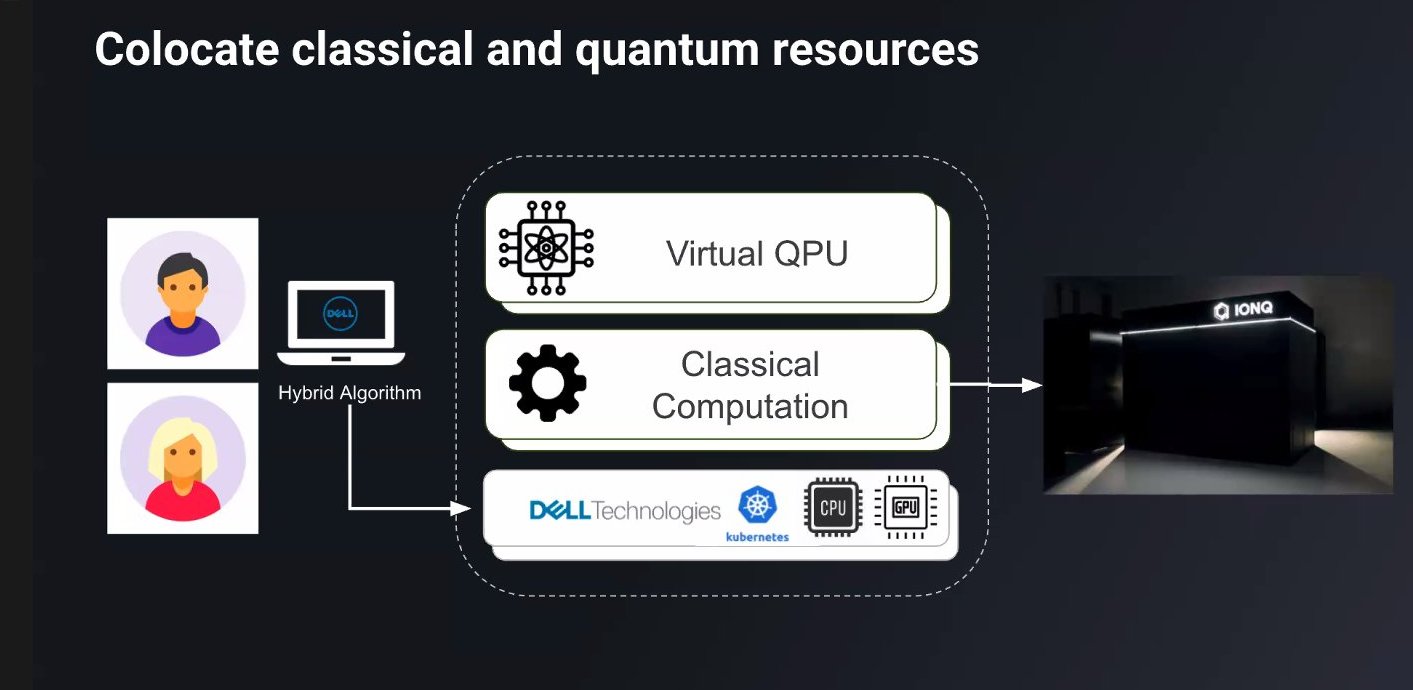

In the meantime, researchers can still make advances by pairing current quantum systems with traditional classical computers, in a way that Keesan compares with adding a GPU to a server.

“It turns out the quantum computer by itself isn’t enough,” he declared. “Just like a GPU is more useful when paired with a regular CPU, the quantum processing unit or QPU is more useful today when paired with a classical computer.”

Keesan cited some examples of problems amenable to this treatment. One, the Variational Quantum Eigensolver (VQE) algorithm, is used to estimate the ground-state energy of small molecules. Here, a classical optimiser proposes circuit parameters, the quantum computer evaluates the result, and the two work back and forth iteratively.

Another, the quantum approximate optimisation algorithm (QAOA), can find approximate solutions to combinatorial optimisation problems by pairing a classical optimiser, which tunes the circuit’s parameters, with a quantum computer that runs the circuit. Quantum circuits can also serve as machine learning models, with the circuit parameters updated by the classical system and evaluated on the quantum hardware.
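To make that division of labour concrete, here is a minimal sketch of depth-1 QAOA in plain Python. It assumes a toy problem (MaxCut on a four-node ring), and the quantum step is simulated classically with a small state vector where a real deployment would sample a QPU. A simple grid search stands in for the classical optimiser; the function names are illustrative and not taken from IonQ’s or Dell’s software.

```python
from __future__ import annotations

import cmath
import math
from itertools import product

# Toy problem (an assumption for illustration): MaxCut on a four-node ring.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N_QUBITS = 4


def cut_size(z: int) -> int:
    """Number of ring edges cut by the partition encoded in bitstring z."""
    return sum(((z >> u) & 1) != ((z >> v) & 1) for u, v in EDGES)


def qaoa_expectation(gamma: float, beta: float) -> float:
    """Simulated QPU: run depth-1 QAOA on a state vector, return the expected cut."""
    dim = 2 ** N_QUBITS
    # Start in the uniform superposition |+>^n.
    state = [complex(1 / math.sqrt(dim))] * dim
    # Cost layer exp(-i*gamma*C): diagonal, so it only multiplies in phases.
    state = [amp * cmath.exp(-1j * gamma * cut_size(z)) for z, amp in enumerate(state)]
    # Mixer layer exp(-i*beta*X) on every qubit, i.e. cos(beta)*I - i*sin(beta)*X.
    c, s = math.cos(beta), math.sin(beta)
    for q in range(N_QUBITS):
        bit = 1 << q
        for z in range(dim):
            if z & bit:
                continue  # visit each (bit=0, bit=1) amplitude pair once
            a0, a1 = state[z], state[z | bit]
            state[z] = c * a0 - 1j * s * a1
            state[z | bit] = -1j * s * a0 + c * a1
    return sum(abs(amp) ** 2 * cut_size(z) for z, amp in enumerate(state))


def classical_outer_loop(steps: int = 30) -> tuple[float, float, float]:
    """Classical optimiser (a plain grid search) over the two circuit angles."""
    best = (-1.0, 0.0, 0.0)
    for i, j in product(range(steps), repeat=2):
        gamma = 2 * math.pi * i / steps  # cut sizes are integers: 2*pi-periodic
        beta = math.pi * j / steps       # mixer repeats every pi up to a global phase
        value = qaoa_expectation(gamma, beta)
        if value > best[0]:
            best = (value, gamma, beta)
    return best


if __name__ == "__main__":
    value, gamma, beta = classical_outer_loop()
    print(f"expected cut {value:.3f} of a possible 4 (gamma={gamma:.3f}, beta={beta:.3f})")
```

The classical side only ever hands over two angles and gets back a single number, which is the kind of narrow interface that makes splitting the work between the two machines practical.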

IonQ’s blog explains these hybrid methods in more detail, but the trick with such applications apparently lies in finding the right control points that allow the quantum and classical portions of an algorithm to interact effectively. VQE does this by building a single quantum circuit with certain parameterised components, then using a classical optimisation algorithm to vary those parameters until the measured energy reaches a minimum.
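The VQE loop can be sketched in a few lines, assuming a one-qubit toy Hamiltonian (H = Z + 0.5X) in place of a real molecule and a single Ry rotation as the parameterised circuit. The simulated_qpu_energy function stands in for the quantum computer. The gradient comes from the parameter-shift rule, which is one common choice of classical update, not necessarily the one IonQ uses.

```python
from __future__ import annotations

import math

# Toy Hamiltonian H = A*Z + B*X on one qubit (an assumption for illustration;
# real VQE targets molecular Hamiltonians made of many Pauli terms).
A, B = 1.0, 0.5
H = [[A, B], [B, -A]]  # matrix of A*Z + B*X in the computational basis


def simulated_qpu_energy(theta: float) -> float:
    """Stand-in for the quantum step: prepare Ry(theta)|0> and return <psi|H|psi>.

    A real QPU would estimate this value from repeated measurements instead.
    """
    psi = [math.cos(theta / 2), math.sin(theta / 2)]  # Ry(theta)|0>, real amplitudes
    return sum(psi[i] * H[i][j] * psi[j] for i in range(2) for j in range(2))


def vqe(theta: float = 0.1, learning_rate: float = 0.2, steps: int = 200) -> tuple[float, float]:
    """Classical optimiser loop: query the 'QPU', update the parameter, repeat."""
    for _ in range(steps):
        # Parameter-shift rule: exact gradient from two shifted circuit evaluations.
        grad = (simulated_qpu_energy(theta + math.pi / 2)
                - simulated_qpu_energy(theta - math.pi / 2)) / 2
        theta -= learning_rate * grad
    return theta, simulated_qpu_energy(theta)


if __name__ == "__main__":
    theta, energy = vqe()
    exact = -math.hypot(A, B)  # lowest eigenvalue of A*Z + B*X
    print(f"VQE energy {energy:.6f}, exact ground-state energy {exact:.6f}")
```

Each optimiser step needs two circuit evaluations, so 200 steps means roughly 400 trips to the QPU, which is why the latency between the two systems matters.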

But this iterative process can be very slow. According to Keesan, a VQE run shuttling round robin between a classical computer and a quantum computer might take weeks unless the two systems are somehow co-located. That is what Dell and IonQ have demonstrated, integrating an IonQ quantum system with a Dell server cluster to run a hybrid workload.
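Why co-location matters comes down to simple arithmetic: the optimiser cannot take its next step until the QPU answers, so every per-circuit overhead is multiplied by the number of evaluations. The sketch below uses entirely hypothetical timings, not figures from Dell, IonQ or Keesan, and assumes a dedicated co-located system has no queue.

```python
from dataclasses import dataclass


@dataclass
class LoopCost:
    """Per-circuit costs of a hybrid loop, in seconds. All values are assumptions."""
    round_trip_s: float  # network hop between the classical host and the QPU
    queue_wait_s: float  # time spent waiting for a shared QPU
    execution_s: float   # time the QPU spends running the circuit's shots


def wall_clock_seconds(iterations: int, circuits_per_iteration: int, cost: LoopCost) -> float:
    """Total time when every optimiser step blocks on the QPU before continuing."""
    per_circuit = cost.round_trip_s + cost.queue_wait_s + cost.execution_s
    return iterations * circuits_per_iteration * per_circuit


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show the shape of the trade-off.
    remote = LoopCost(round_trip_s=0.5, queue_wait_s=300.0, execution_s=5.0)
    colocated = LoopCost(round_trip_s=0.001, queue_wait_s=0.0, execution_s=5.0)
    for name, cost in (("remote", remote), ("co-located", colocated)):
        days = wall_clock_seconds(2000, 2, cost) / 86_400
        print(f"{name}: {days:.1f} days")
```

With these made-up numbers the remote loop takes about two weeks and the co-located one under six hours. The exact figures matter less than the pattern: in the remote case the fixed overhead, not the quantum execution time, dominates.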

Co-locating the two may be easier with IonQ’s systems because of the path the company has taken in developing its quantum technology. Whereas some in the quantum industry use superconducting qubits that must be housed in a bulky, specialised refrigeration unit, IonQ’s approach works at room temperature. Its qubits are trapped ions, suspended in a vacuum and manipulated using a laser beam, which allows the system to be relatively compact.

“We have announced publicly, we’re driving towards fully rack-mounted systems. And it’s important to note that systems on the cloud today, at least in our case, are room temperature systems, where the isolation is happening in a vacuum chamber, about the size of a deck of cards,” Keesan explained.

Power requirements for IonQ’s quantum processors are also claimed to be relatively low, with total consumption measured in kilowatts. “So it’s very conceivable to put it into a commercial datacentre, with room temperature technology like we’re using now,” Keesan added.

For organisations that might be wondering how to even get started in their quantum journey, Ken Durazzo, Dell’s vice president of technology research and innovation, shared what the company had learned from its quantum exploration.

One of the key ways Dell found to get started with quantum is to use simulated quantum systems, which Durazzo calls virtual QPUs or vQPUs. These allow hands-on experimentation, so that developers and engineers can become familiar with using quantum systems.

“Some of the key learnings that we identified there were, how do we skill or reskill or upskill people to quickly bridge the gap between the known and the unknown in terms of quantum? Quantum computation is dramatically different than the classical computation, and getting people with hands-on experience there is a bit of a hurdle. And that hands on experimentation helps get people over the hurdle pretty quickly,” Durazzo explained.

Also vital is identifying potential use cases. Durazzo said that breaking these down into smaller, action-oriented activities is key to understanding where a user might benefit from quantum computation, and therefore where to place the biggest bets when tackling these problems.

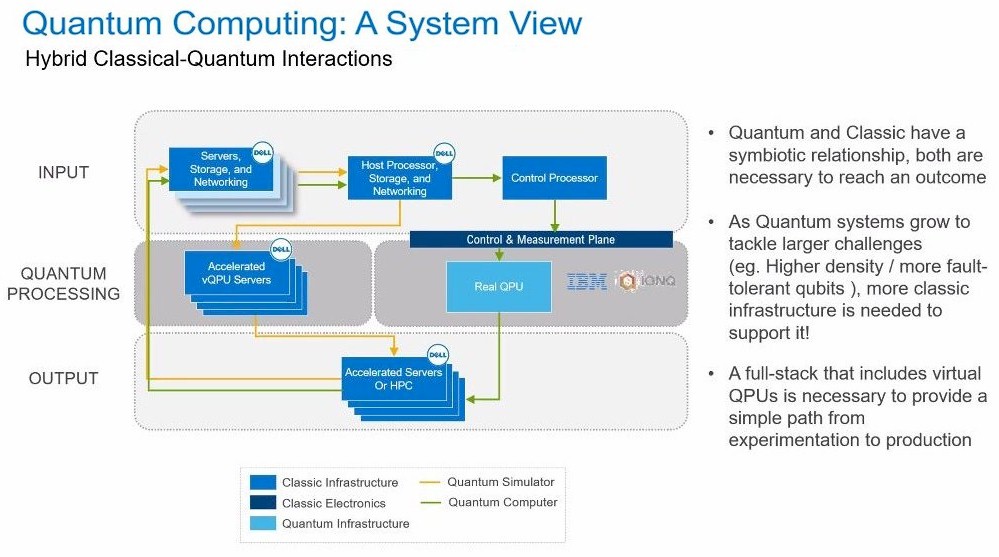

Dell also decided that a hybrid classical-quantum system would best suit its purposes: one in which workloads can move between virtual and physical QPUs, providing a simple path from experimentation to production.

“All of those learning activities enabled us to build a full stack suite of things that provided us the tools that allowed us to be able to integrate seamlessly with that hybrid classical quantum system,” Durazzo said.

In Dell’s view of a hybrid classical-quantum computer, processing capacity comes from both virtual QPU servers and real QPUs. This arrangement lets users simulate or run experiments on the virtual QPUs, which then helps them identify opportunities, or complex problems, worth tackling on the real QPUs.

“One area that we have focused on there is the ability to provide a seamless experience that allows you to develop an application inside of one framework, Qiskit for example, and run that in a virtual QPU or a real QPU just by modifying a flag, without having to modify the application, without having to change the parameters associated with the application,” Durazzo explained.
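Durazzo does not detail how Dell’s flag works, and the sketch below does not use Qiskit’s API. It is a framework-neutral illustration of the pattern, assuming a hypothetical QPU interface with a single sample_ry method: the application function estimate_p_one never changes, and only the target string passed to select_backend decides whether it runs on a simulator or on hardware. RealQPU is a placeholder stub.

```python
from __future__ import annotations

import math
import random
from typing import Protocol


class QPU(Protocol):
    """Hypothetical backend interface shared by virtual and real QPUs."""

    def sample_ry(self, theta: float, shots: int) -> list[int]:
        """Run Ry(theta)|0>, measure, and return one bit per shot."""
        ...


class VirtualQPU:
    """Simulator backend: samples from the exact Born-rule probabilities."""

    def __init__(self, seed: int | None = None) -> None:
        self._rng = random.Random(seed)

    def sample_ry(self, theta: float, shots: int) -> list[int]:
        p_one = math.sin(theta / 2) ** 2  # probability of measuring 1
        return [1 if self._rng.random() < p_one else 0 for _ in range(shots)]


class RealQPU:
    """Placeholder for a hardware backend; a vendor SDK call would go here."""

    def sample_ry(self, theta: float, shots: int) -> list[int]:
        raise NotImplementedError("hypothetical: connect to real QPU hardware here")


def select_backend(target: str, seed: int | None = None) -> QPU:
    """The single 'flag' that decides where the unchanged application runs."""
    if target == "virtual":
        return VirtualQPU(seed)
    if target == "real":
        return RealQPU()
    raise ValueError(f"unknown target {target!r}")


def estimate_p_one(backend: QPU, theta: float, shots: int = 4000) -> float:
    """Application code: identical regardless of which backend it receives."""
    bits = backend.sample_ry(theta, shots)
    return sum(bits) / len(bits)


if __name__ == "__main__":
    backend = select_backend("virtual", seed=7)
    print(estimate_p_one(backend, math.pi / 2))  # close to 0.5
```

Keeping the backend behind one narrow interface is what lets a team experiment on vQPUs and later switch to physical QPUs without touching application code.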

Sonika Johri, IonQ’s lead quantum applications researcher, gave a demonstration of a hybrid classical-quantum generative learning application. It was trained by sampling the output of a parameterised quantum circuit running on a quantum computer, and updating the circuit’s parameters using a classical optimisation technique. The application was run both on a quantum simulator (a virtual QPU) and on a real quantum computer.
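A toy version of this kind of training loop, sometimes called a quantum circuit Born machine, is sketched below. Everything here is an assumption for illustration rather than Johri’s actual demo: a two-qubit circuit (two Ry rotations and a CNOT), a target distribution of perfectly correlated bits, a squared-error loss, and exact simulated probabilities standing in for the shot statistics a real QPU would return. The classical optimiser uses parameter-shift gradients.

```python
from __future__ import annotations

import math
import random

# Target distribution the model should learn (an assumption for illustration):
# two perfectly correlated bits, as produced by a Bell state.
TARGET = {"00": 0.5, "01": 0.0, "10": 0.0, "11": 0.5}


def circuit_probs(theta0: float, theta1: float) -> dict[str, float]:
    """Simulated QPU: Ry(theta0) on q0, Ry(theta1) on q1, then CNOT(q0 -> q1).

    Keys are bitstrings written as q0 followed by q1.
    """
    p0 = (math.cos(theta0 / 2) ** 2, math.sin(theta0 / 2) ** 2)
    p1 = (math.cos(theta1 / 2) ** 2, math.sin(theta1 / 2) ** 2)
    probs = {}
    for b0 in (0, 1):
        for b1 in (0, 1):
            # CNOT only permutes basis states: |b0 b1> -> |b0, b1 XOR b0>.
            probs[f"{b0}{b1 ^ b0}"] = p0[b0] * p1[b1]
    return probs


def loss(params: list[float]) -> float:
    """Squared error between the circuit's distribution and the target."""
    probs = circuit_probs(*params)
    return sum((probs[k] - TARGET[k]) ** 2 for k in TARGET)


def train(params: list[float], learning_rate: float = 0.5, steps: int = 500) -> list[float]:
    """Classical optimiser: gradient descent using parameter-shift gradients."""
    params = list(params)
    for _ in range(steps):
        probs = circuit_probs(*params)
        grads = []
        for i in range(len(params)):
            plus, minus = list(params), list(params)
            plus[i] += math.pi / 2
            minus[i] -= math.pi / 2
            p_plus, p_minus = circuit_probs(*plus), circuit_probs(*minus)
            # d(loss)/d(theta_i) = sum_k 2 * (p_k - t_k) * dp_k/d(theta_i)
            grads.append(sum(2 * (probs[k] - TARGET[k]) * (p_plus[k] - p_minus[k]) / 2
                             for k in TARGET))
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params


def sample(params: list[float], shots: int, seed: int = 0) -> dict[str, int]:
    """Draw bitstrings from the trained circuit, as a generative model would."""
    probs = circuit_probs(*params)
    keys = list(probs)
    draws = random.Random(seed).choices(keys, weights=[probs[k] for k in keys], k=shots)
    return {k: draws.count(k) for k in keys}


if __name__ == "__main__":
    trained = train([0.5, 1.0])
    print(f"loss {loss(trained):.2e}, samples {sample(trained, 1000)}")
```

After training, sampling the circuit produces almost only 00 and 11: the model has learned the correlation in the target data.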

Johri’s demonstration used just four qubits, and she noted that at that scale the simulator is actually faster than the quantum computer.

“But when you go from 4 to 40 qubits, the amount of time and the amount of memory the simulator needs will increase exponentially with the number of qubits, but for the quantum computer, it is only going to increase linearly. So at four qubits the simulator is faster than the quantum computer, but if you scale up that same example to say, 30 to 40 qubits, the quantum computer is going to be exponentially faster,” she explained.
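The memory side of Johri’s point is easy to quantify for a dense state-vector simulator, which stores one complex amplitude per basis state, 2^n of them for n qubits. Assuming double-precision complex numbers (16 bytes each), the requirement doubles with every added qubit:

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2**n complex amplitudes (complex128 = 16 bytes)."""
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(n_bytes: int) -> str:
    """Format a byte count using binary units."""
    value = float(n_bytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if value < 1024:
            return f"{value:g} {unit}"
        value /= 1024
    return f"{value:g} PiB"


if __name__ == "__main__":
    for n in (4, 20, 30, 40):
        print(f"{n:>2} qubits: {human_readable(statevector_bytes(n))}")
```

Four qubits need 256 bytes, 30 qubits need 16 GiB and 40 qubits need 16 TiB. This assumes a full state-vector simulator; other simulation methods have different costs.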

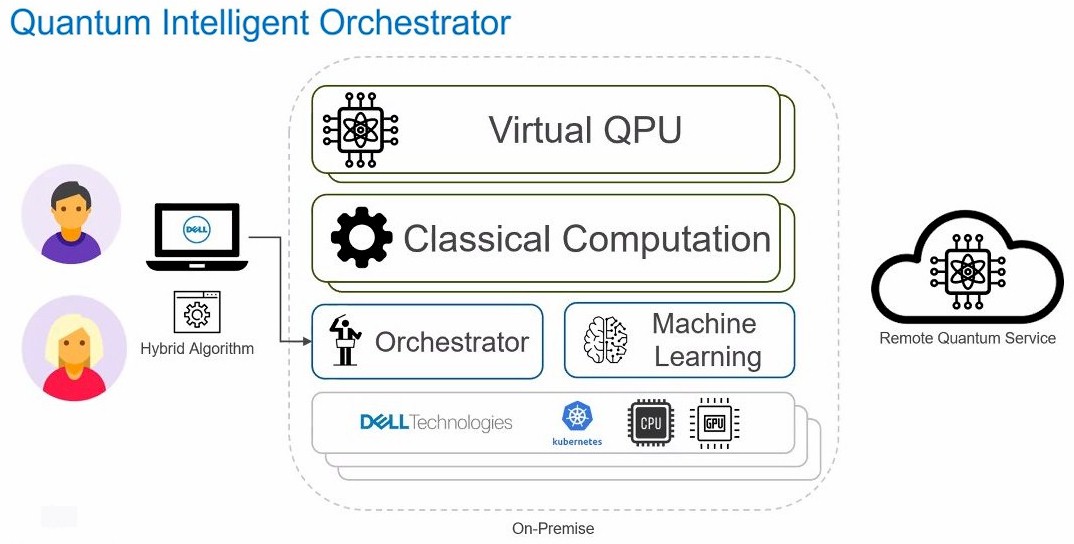

Dell has now also begun to extend its hybrid classical-quantum computer with intelligent orchestration, which automates some of the provisioning and management of the quantum hardware and further optimises operations.

“We have taken that two steps further by adding machine learning into an intelligent orchestration function. And what the machine learning algorithms do is to identify the characteristics associated with the workload and then match the correct number of QPUs and the correct system, either virtual or real QPU, in order to get to the outcomes that you’re looking to get to a very specific point in time,” Durazzo said.
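Dell describes using machine learning to make this match. As a much simpler stand-in, the sketch below uses a fixed rule rather than a learned model: send a job to the virtual QPU when its dense state vector fits in the simulator’s memory (small circuits run faster there, per Johri), and to real hardware otherwise. The Workload type, the route function and the thresholds in the example are hypothetical, not Dell’s orchestrator.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a quantum job."""
    name: str
    qubits: int


def route(workload: Workload, simulator_memory_bytes: int, real_qpu_qubits: int) -> str:
    """Rule-based stand-in for an orchestrator: return 'virtual' or 'real'.

    Prefer the simulator whenever the dense state vector fits in memory, since
    small circuits run faster there; otherwise send the job to hardware.
    """
    needed = (2 ** workload.qubits) * 16  # complex128 state vector
    if needed <= simulator_memory_bytes:
        return "virtual"
    if workload.qubits <= real_qpu_qubits:
        return "real"
    raise ValueError(f"{workload.name} needs {workload.qubits} qubits; no target fits")


if __name__ == "__main__":
    memory = 512 * 2**30  # hypothetical 512 GiB simulator node
    for job in (Workload("demo", 4), Workload("mid", 35), Workload("big", 36)):
        print(job.name, route(job, memory, real_qpu_qubits=64))
```

With a hypothetical 512 GiB simulator node, jobs up to 35 qubits stay virtual and larger ones go to hardware.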

Quantum computer hardware will continue to evolve, and may even pick up pace as interest in the field (and investment) grows, but Dell’s Durazzo believes that the classical-quantum hybrid model it has developed is good for a few years yet.

“I think that diagram actually shows the future state for a very long time for quantum of a hybrid classical-quantum system, where the interactions are very tight, the interactions are very prescriptive in the world of quantum and classical for growth together into the future,” he said. “As we further grow those numbers of qubits, the classical infrastructure necessary to support this quantum computation will grow as well. So, there should be a very large increase overall in the system as we start becoming more capable of solving more complex problems inside the quantum space.”
