It is not news that China wants a rich, native, diverse semiconductor ecosystem to feed its largest consumers of compute. From supercomputing systems to the machines that power the country’s largest online social and retail platforms, a reckoning is approaching for US-based chipmakers.

China’s top supercomputers, including the Sunway TaihuLight machine and the mighty Tianhe-2A, are packed with native technologies, from chips to interconnects. And its internet giants, including Alibaba and Baidu, are already in production with their own devices for AI training and inference at massive scale.

One of China’s hyperscalers, Tencent, has yet to roll out its own chips. But it’s worth noting it has invested mightily in Shanghai-based Enflame, which will soon be releasing its first-generation AI training devices — DTU 1.0 — which have been in development since 2018. Over the last three years, Enflame has raised close to $500 million, with Tencent leading the charge.

What is interesting about the DTU 1.0 device is that there is nothing particularly interesting about it at all. In other words, it isn’t trying to do anything outlandish. That’s not to say it is a simple device, as there are some unique features, but Enflame is not pitching mind-boggling core counts or non-mainstream precision or model types, nor is it taking chances with packaging.

The question in our minds is what this device can do that a GPU can’t for large-scale training. The answer might simply be that it can be a Chinese native technology for Enflame’s most enthusiastic backer, Tencent — the company that needs to follow its Chinese hyperscale brethren by building (or buying its way into) homegrown AI hardware.

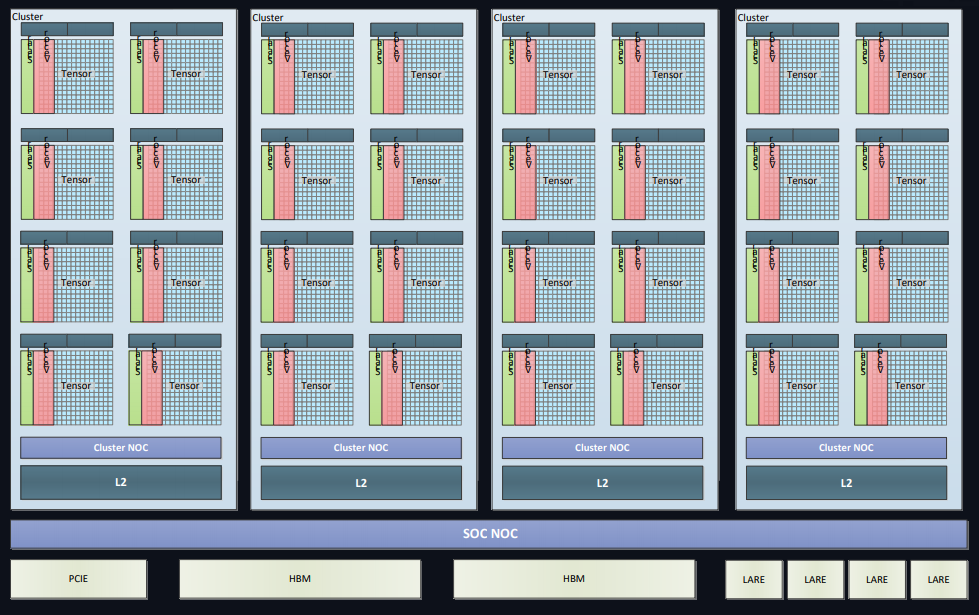

We finally got a look at Enflame’s 12nm FinFET training SoC at Hot Chips this week. The neat package below shows 32 “AI compute cores” separated into four clusters. Forty additional host processing modules push the data around along four of Enflame’s own interconnects. Each device has two HBM2 modules for 512GB/sec of bandwidth.

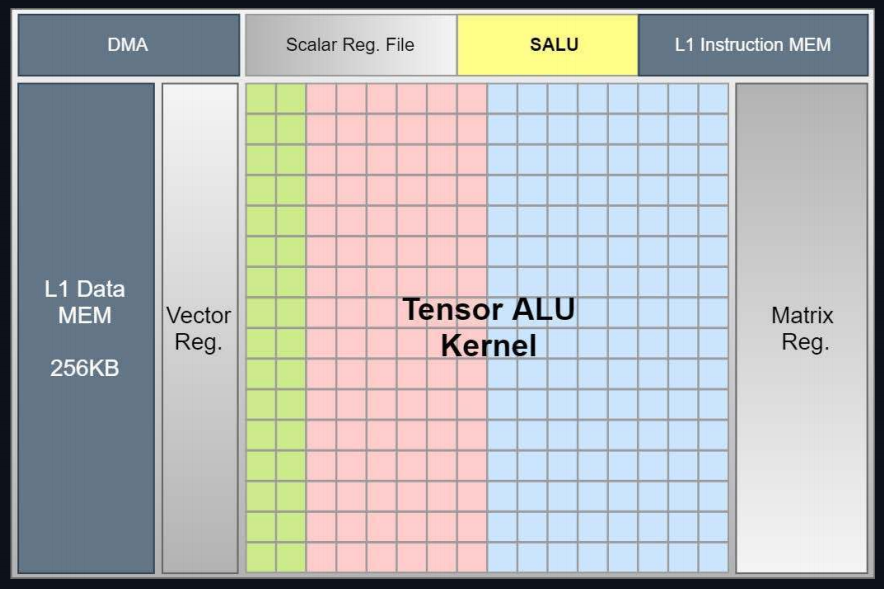

The AI portion has much in common with the Tensor Core concept we saw first from Nvidia, which is now being added into designs for several CPUs. Enflame says it can reach 20 teraflops at FP32. The device also supports FP16 and bfloat16 (both reaching a peak of 80 teraflops) and can support mixed precision workloads with Int-32, Int-16 and Int-8. Each of these is based on a 256 tensor compute kernel.

Here is a closer look at the tensor units:

The chip is designed with GEMM operations and CNNs in mind, which is right up Tencent’s alley as it is largely driven by visual media (video, photos, ecommerce).

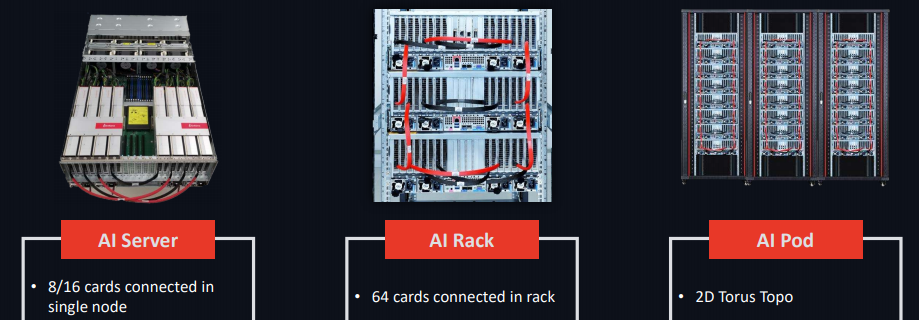
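To put the GEMM focus in context, here is a rough roofline-style estimate built only from the peak figures quoted above (20 teraflops at FP32, 80 teraflops at FP16, 512GB/sec of HBM2 bandwidth). It is a sketch, not a model of the DTU itself: the function names and the square matrix sizes are illustrative, and it assumes ideal data reuse, meaning each matrix crosses the memory interface exactly once.

```python
# Back-of-the-envelope roofline estimate using only the quoted peak figures.
# Assumptions (not from Enflame): ideal reuse, so each matrix moves over HBM
# once; square GEMM sizes are picked purely for illustration.

PEAK_FLOPS = {"fp32": 20e12, "fp16": 80e12}   # vendor peak, FLOP/s
HBM_BYTES_PER_SEC = 512e9                      # two HBM2 modules, combined
BYTES_PER_ELEMENT = {"fp32": 4, "fp16": 2}


def ridge_point(dtype: str) -> float:
    """FLOPs per byte needed before the chip stops being bandwidth-bound."""
    return PEAK_FLOPS[dtype] / HBM_BYTES_PER_SEC


def gemm_intensity(m: int, n: int, k: int, dtype: str) -> float:
    """Arithmetic intensity of C[m,n] = A[m,k] @ B[k,n] with ideal reuse."""
    flops = 2 * m * n * k                      # one multiply and one add per term
    elements_moved = m * k + k * n + m * n     # read A and B, write C
    return flops / (elements_moved * BYTES_PER_ELEMENT[dtype])


def attainable_flops(m: int, n: int, k: int, dtype: str) -> float:
    """Roofline bound: min(peak compute, intensity * memory bandwidth)."""
    bandwidth_bound = gemm_intensity(m, n, k, dtype) * HBM_BYTES_PER_SEC
    return min(PEAK_FLOPS[dtype], bandwidth_bound)


if __name__ == "__main__":
    for dtype in ("fp32", "fp16"):
        print(f"{dtype}: ridge point = {ridge_point(dtype):.1f} FLOP/byte")
        for size in (64, 256, 1024):
            tf = attainable_flops(size, size, size, dtype) / 1e12
            print(f"  {size}^3 GEMM: up to {tf:.1f} TFLOPS")
```

Under these assumptions the ridge point sits at roughly 39 FLOP/byte for FP32 and 156 FLOP/byte for FP16, so small matrix multiplies would be limited by memory bandwidth rather than by the tensor units. Real kernels depend on on-chip buffering and tiling, which this estimate ignores.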

The startup is providing a PCIe Gen4 accelerator card called “CloudBlazer” that consumes between 225W and 300W depending on configuration, the highest consumer being the CloudBlazer T21, which is based on the Open Compute Project’s OAM (OCP Accelerator Module) design. In addition to the PCIe-only device, Enflame has packaged together systems ranging from a single node to a rack to a “pod” featuring its 2D torus interconnect.

Enflame shared scaling results for the various configurations, reporting 81.6 percent scaling efficiency when going from a single card to 160 cards and 87.8 percent when cards are packaged into a node. This is roughly on par with what we’ve seen with GPU scalability, although it’s not an apples-to-apples comparison.
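If those percentages are read as scaling efficiency in the usual sense (measured speedup divided by the number of cards), which is an assumption since Enflame's measurement method is not described here, the 160-card figure converts to an effective speedup as follows. The helper names are illustrative.

```python
# Convert a reported scaling efficiency into an effective speedup.
# Assumption: efficiency = speedup / number_of_cards (the common definition);
# how Enflame actually measured its figures is not stated in the article.


def effective_speedup(num_cards: int, efficiency: float) -> float:
    """Speedup over a single card implied by a given scaling efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return num_cards * efficiency


def cards_lost_to_overhead(num_cards: int, efficiency: float) -> float:
    """Card-equivalents of throughput lost to communication and other overhead."""
    return num_cards - effective_speedup(num_cards, efficiency)


if __name__ == "__main__":
    speedup = effective_speedup(160, 0.816)
    lost = cards_lost_to_overhead(160, 0.816)
    print(f"160 cards at 81.6% efficiency: about {speedup:.1f}x one card")
    print(f"Overhead costs about {lost:.1f} card-equivalents")
```

On that reading, 160 cards deliver the throughput of about 130 ideal cards. The 87.8 percent node figure cannot be converted the same way because the number of cards per node is not given.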

The startup has a shot at providing AI training acceleration for China’s hyperscalers but it also has some roots in the US. The CEO and co-founder, Zhao Lidong, spent 20 years in the Bay Area in both R&D and product roles with GPUs, although not at Nvidia. He spent seven of those years at AMD running product for its CPU/APU division before helping AMD establish an R&D center in China. Before that he was developing network security devices and also spent time at S3 Inc. working on GPU development.

Co-founder and Enflame COO, Zhang Yalin, was a colleague at AMD, serving as senior chip manager and technical manager for global device R&D with work on AMD’s early GPUs as well.

“Artificial intelligence is at the heart of the digital economy infrastructure of the future and a battleground for hard technology,” Enflame founder and CEO Zhao Lidong says.

“As a technology-driven company, we have planned and are fully implementing the product technology roadmap for the next three years, with joint development of hardware and software systems as the core for product iterations to establish the competitive advantage of Enflame technology in the market. At the same time, we will also increase the exploration of cutting-edge technologies in the field of artificial intelligence so that future innovation enables greater commercial value.”
