Imagine a platform that hits a database with over a trillion online signals in a 100ms window and scores ML models over one million times per second to deliver near-real-time results across over 100 million destinations. Now imagine the daily cost of doing all this, including retraining large models, on-prem versus in the cloud.

Given the high costs of moving data, training massive language models, and delivering a fast inference product, one might assume all of this runs on-prem. For adtech giant Quantcast, everything from model training and scoring to storage and compute is very expensive in the cloud, yet the nuanced TCO/ROI picture still makes a huge shift to AWS worth the effort. So at what point does a company decide to accept far higher costs (especially at the unoptimized outset) by making a big, scary shift?

In 2016, when VP of Engineering Chris Zimmerman and his team looked at what a move from 18 global datacenters to AWS would take, estimates for a pure lift-and-shift pointed to 8-10X higher operational costs. What they left behind helps explain why that leap was palatable. “We were running datacenters around the world and it’s still the cheapest way to do this but it became a distraction as a company. Hardware was improving so fast and we were spending a lot of time just upgrading.”

He adds that finding people to manage the on-prem infrastructure was an ever-present challenge, as was running seven-year-old hardware alongside newer systems. A great deal of software effort went into working around failures, and dedicating engineers to new concepts meant carving out chunks of the large distributed infrastructure.

“We ran on crappy old hardware and we ran it into the dirt. That’s why the costs were so low,” Zimmerman says. “From an accounting perspective, everything was fully depreciated in the first three years. We’d just keep adding capacity and ended up with a very heterogeneous environment. We modified the software to efficiently use the old hardware or a brand-new 36-core system. But there was no hardware RAID, very little redundancy at the node level, not a lot of dual power supplies. Where we spent our time was on the software becoming resilient to failures.”

Agility has its returns but comes at a price. “The big push was around changing how our engineering teams operated. We had to figure out how to move to AWS cost-effectively because the numbers were horrendous. Just transferring data between locations was astronomical compared to what we did previously,” Zimmerman says. Now, in 2021, he says that on pure hardware cost they are probably paying only about double what they did on-prem, thanks to the efficiencies they have extracted since the 2017 move. “And we don’t have teams dealing with hardware, teams can programmatically allocate hardware and we can auto-scale as well, so the TCO after all these years of work may show we’re running cheaper now.”
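One simplified way to read Zimmerman's TCO argument, offered only as an illustrative model and not as Quantcast's actual accounting: let $H$ be annual on-prem hardware spend, $O_{\text{prem}}$ the people and operational overhead of running datacenters, and $O_{\text{cloud}}$ the residual operational overhead on AWS (all three symbols are assumptions introduced here). If cloud hardware costs roughly double, about $2H$, the cloud comes out cheaper overall when

$$
2H + O_{\text{cloud}} < H + O_{\text{prem}} \quad\Longleftrightarrow\quad O_{\text{prem}} - O_{\text{cloud}} > H .
$$

Under this model, the move pays for itself once the hardware staffing and upgrade work it eliminates is worth more than the entire on-prem hardware bill $H$. If the 2016 lift-and-shift multiple of 8-10X were applied to $H$ in the same way, the required savings would have been $7H$ to $9H$, which suggests why years of optimization work mattered.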

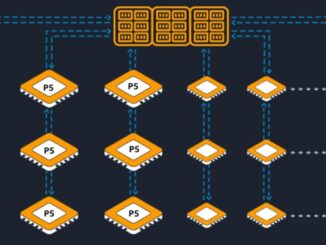

Of course, all of this took serious collaboration with AWS, including several customizations that let Quantcast carry over everything from its own QFS file system to its edge infrastructure (at publisher and exchange sites), and that addressed its networking challenges.

For instance, AWS worked with Quantcast on a new product called “Bring Your Own IP,” which lets the adtech company bring its own IP address ranges to Amazon’s network. This meant Quantcast no longer had to build its own fault-tolerant backbone and could instead fail over seamlessly to Amazon’s backbone, incurring extra costs only temporarily during outages. They also worked with AWS on using S3 as a backing store, porting QFS to run on S3, because relying on storage aimed at “normal” users, such as EBS or other attached storage, is where costs would certainly have jumped relative to on-prem. They collaborated to optimize S3 buckets for the 400Gb/s throughput they needed.

Quantcast engineering teams picked an interesting time to migrate. In 2017, machine learning, especially in adtech, was taking off, and so were the costs of building and deploying increasingly large, complex models. Doing all of this via the freshly minted AWS partnership wasn’t cheap.

As Quantcast CTO Peter Day tells us, the first rudimentary language model they trained on AWS cost around $225,000. “We were using a fairly naïve model then, but we have that down now an order of magnitude,” Day says. Quantcast retrains its core language model, whose input is text extracted from the internet and processed with NLP before it runs through the model. Inference costs are not high in the grand scheme; working around bottlenecks such as getting data into the model is a bigger challenge than cost.

We asked Day if there is a foreseeable future in which Quantcast might be pushed back into the datacenter by the growing prominence of AI training and its rising costs, which are likely to climb along with model complexity. “It’s possible,” he says, but not in the near term. “We always have to balance what’s economically feasible with what’s technically possible.”

Zimmerman was less certain about any return to the datacenter, even if models grow too large or too expensive to train and run on AWS. “Year over year, our costs to operate are going down so we can re-invest that into other areas, including doing more sophisticated models. Long-term that might counter-balance the need to go back to our own hardware and even if that happened, it would likely be a hybrid model.” A hybrid model could work well for Quantcast since it has already co-located some of its edge infrastructure with AWS. Inside those locations it could do some in-house, near-site processing and ship data back and forth.

“The cloud providers are getting so efficient at onboarding new hardware and giving access to it but there is a price point at which the economics won’t make sense, it won’t do to give an extra 40% to the cloud provider when you have a specialized use case. Machine learning algorithms are also getting more efficient with more chipsets and specialized chips via cloud providers that wouldn’t be available for our datacenters,” Day concludes.

Quantcast’s use case and pricing tale is not necessarily generalizable. After all, it has a lot of edge infrastructure located next to publishers and exchanges, plus bidding deadlines and processes that most industries don’t need to meet. Still, it’s interesting that lift-and-shift operational costs aren’t cheaper out of the gate, and for some users they may never be. But given the staffing problems in on-prem datacenters, the lack of agility, and the need to keep developers focused on the core product, the cloud works wonders, even if it isn’t the cheapest option.

Still, for an industry that has more in common with high-frequency trading than with most other workloads, the ROI math will remain a moving target. Balancing advertiser and publisher demand in real time, serving the right ad at the right time, and doing it all for less than the compute demands? That will continue to be a grand challenge.
