Intel in October clarified its memory plans moving forward when it announced it is selling its NAND memory business to SK Hynix in a two-step acquisition that is worth $9 billion and will take until 2025 to be completed.

However, while the deal itself is unusual – the deal is expected to close in the second half of 2021 and the first step will include Hynix paying $7 billion for Intel’s SSD business and NAND fab in China, while Hynix will pay the remaining $2 billion four years later for the rest of Intel’s NAND operations – the message from Intel was clear: that at a time when the amount of data and demand for compute are both skyrocketing, a key growth area in the memory market will be in persistent memory. For Intel, that means its Optane business.

The growth in data – driven by the cloud, the Internet of Things (IoT), emerging technologies like artificial intelligence (AI) and data analytics – is accelerating rapidly, with IDC analysts predicting the amount of data being generated will grow an average of 26 percent a year, reaching 175 zettabytes in 2025. It presents a challenge to computing companies like Intel, which are trying to keep up with demand through more cores and accelerators along with shrinking dies as they look to offset the slowdown in Moore’s Law.

“This exponential growth of data combined with the new analytics capabilities that businesses are using to gain insights out of the data, such as AI, machine learning [and] big data analytics, that work on ever larger datasets are doing two critical things,” Alper Ilkbahar, vice president of Intel’s Data Platforms Group and general manager of its Intel Optane Group, said in a conference call with journalists. “The first one is to fuel the insatiable demand for computing. This is obviously great for a company like ours, which is in the business of computing. We get that huge demand for compute to process all this data. But the same forces also drive the need to bring more and more data close to the CPU and this is driving the demand for memory. The incredible growth of this data essentially is putting … some stress and strain on the datacenter architectures and creating new stress points for us to address.”

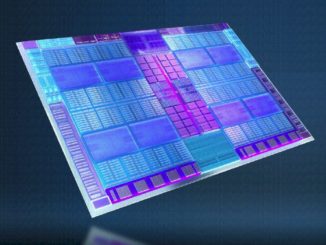

Transistor scaling and process shrinks have slowed relative to Moore’s Law, though the introduction of chiplet-based, disaggregated architectures is enabling continued growth in core counts. However, the industry is facing a growing gap between the demand for more memory capacity being driven by the rapid data growth and the slowing capability for DRAM to scale to meet that demand, Ilkbahar said. Memory needs to keep pace.

Persistent memory – also known as storage-class memory – is a bridge between DRAM and storage designed to do just that. Like DRAM, it’s directly accessible and it’s faster than hard disk drives (HDDs) and solid-state drives (SSDs). In addition, persistent memory can keep stored data even if the power has been turned off and sits on the DRAM bus, closer to the processor, an ideal spot to handle the large and complex datasets that are part of the modern datacenter.

Given that, it’s no surprise that Optane was front and center of this week’s virtual Memory and Storage Moment event by Intel. The chip maker, despite its deal to sell the NAND business to Hynix, did introduce new additions to its NAND SSD portfolio. There was the D7-P5510, a 144-layer TLC 3D NAND appliance aimed at cloud datacenter workloads that offers capacities of 3.84TB and 7.68TB and will be available this month. There was also the D5-P5316, a highly dense and high-performing 144-layer QLC NAND device with up to 30.72TB of capacity that will be available in the first half of 2021.

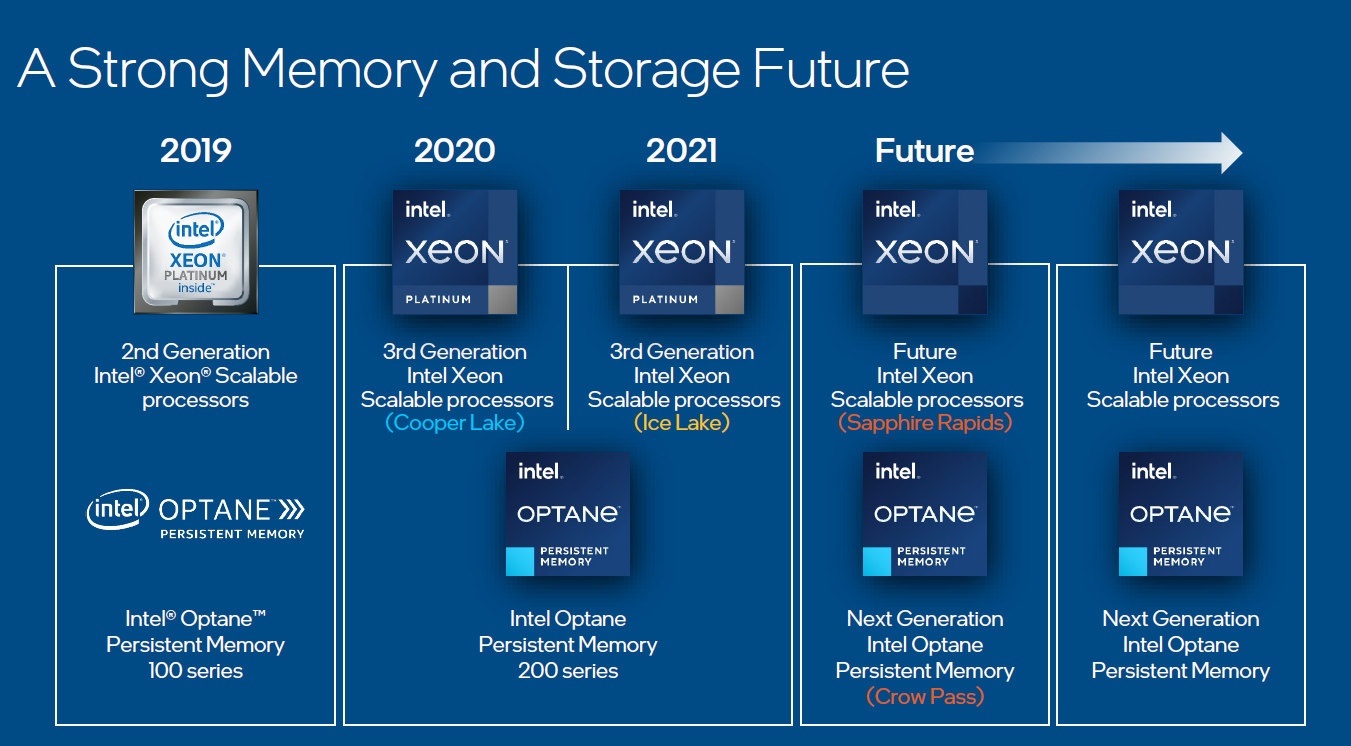

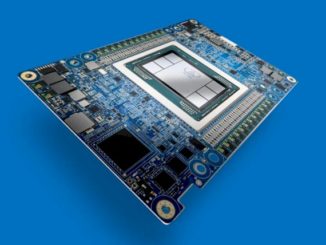

But much of the focus was on Optane, which first started coming to market in 2017. Last year the chip maker introduced the Optane 100 series in conjunction with the release of 2nd Generation Xeon Scalable processors. This week the company introduced three new Optane products, all of which are faster than their predecessors. One of them – the P5800X, codenamed “Alder Stream” – is a datacenter drive that offers capacities of 400GB to 3.2TB, supports PCIe 4.0 and can reach 1.8 million IOPS in a 70/30 read/write workload. David Tuhy, vice president and general manager of Intel’s Data Center Optane Storage Division, called it the world’s fastest datacenter SSD. It delivers three times the random 4k mixed read/write IOPS of its predecessor, the P4800X, and 67 percent higher endurance, at 100 drive writes per day.
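To put the endurance figure in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: it assumes the usual reading of drive writes per day (DWPD) as the number of times the drive's full capacity can be written daily, and the function names are made up for this example. The predecessor figure it produces is what the quoted 67 percent gain implies, not a number Intel stated at the event.

```python
def daily_write_volume_tb(capacity_tb, dwpd):
    """Terabytes that can be written per day at a given DWPD rating.

    Assumes DWPD means full-capacity writes per day.
    """
    return capacity_tb * dwpd


def implied_predecessor_dwpd(new_dwpd, gain_percent):
    """Endurance the older drive would need for the quoted gain to hold."""
    return new_dwpd / (1 + gain_percent / 100)


if __name__ == "__main__":
    # Largest and smallest P5800X capacities quoted in the article.
    for capacity_tb in (0.4, 3.2):
        volume = daily_write_volume_tb(capacity_tb, 100)
        print(f"{capacity_tb} TB drive at 100 DWPD: about {volume:.0f} TB written per day")

    # What "67 percent higher endurance" implies for the previous generation.
    print(f"Implied predecessor rating: about {implied_predecessor_dwpd(100, 67):.0f} DWPD")
```

On those assumptions, the top-capacity drive would be rated for hundreds of terabytes of writes every day, which is the kind of workload that would wear out NAND-based drives far sooner.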

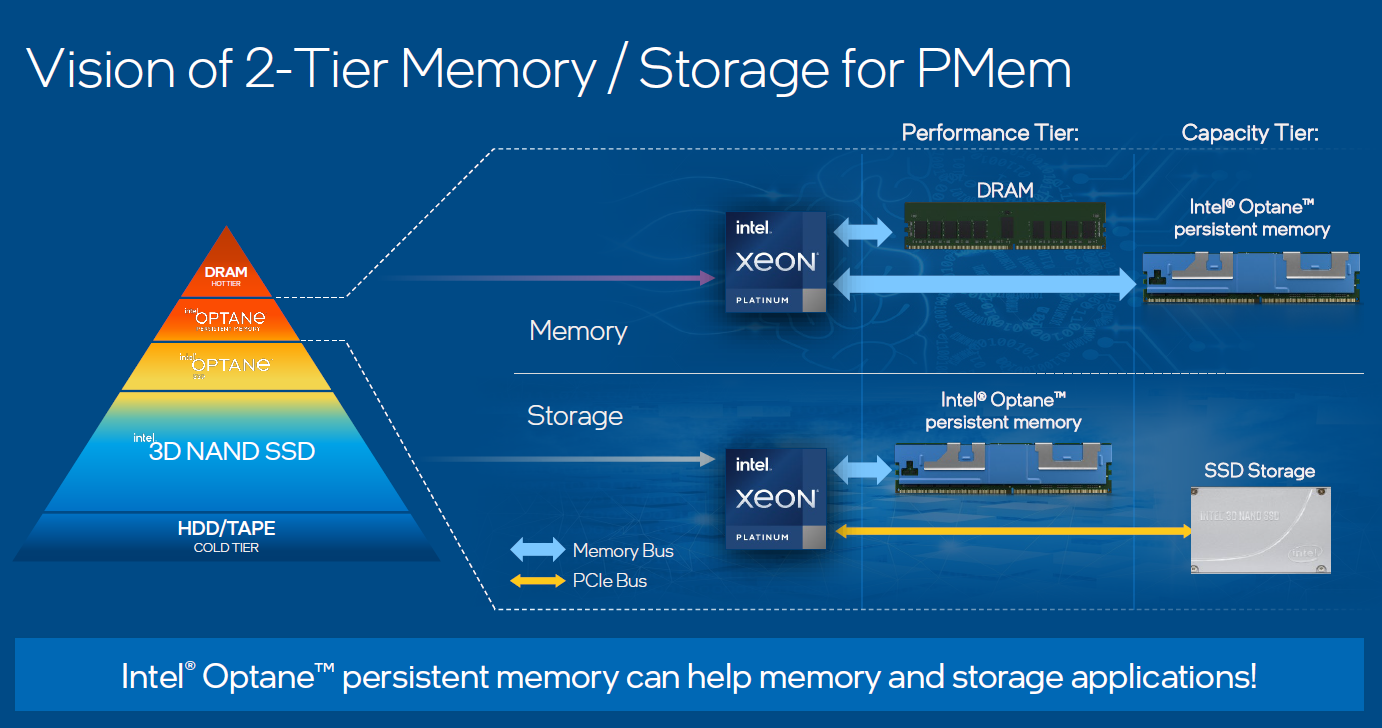

Intel isn’t the only memory maker looking at persistent memory. Other vendors, including Hynix as well as Samsung and Toshiba America Memory, also are making inroads. Intel is expecting that its head start with Optane, which was developed with Micron, will give it an advantage in what is expected to be a fast-growing market. Ilkbahar said the company is working to create a two-tier persistent memory and storage environment with Optane. In memory, Optane will work in the capacity tier, with DRAM in the performance tier. On the storage side, Optane will be used for performance and SSDs for capacity.

<<Intel two tier>>

“We’re definitely seeing now, step by step, a two-tiered memory and storage architecture vision becoming a reality,” he said. “You may wonder how persistent memory is able to remove some of these memory and storage bottlenecks in the server, and part of the answer is that we have a revolutionary media, we have a byte addressable [memory] and direct load store access and memory sitting on the DDR bus and a CPU really no longer waiting for data that’s normally in storage. And we also eliminate the entire storage stack software as well. It’s an entirely hardware interaction transaction system.”

Intel also has a roadmap that looks well beyond 2021. When it introduced the 100 Series, the company also said it was planning the second generation – the 200 Series, called “Barlow Pass” – that was launched in June with the “Cooper Lake” Xeon SP processors and also is compatible with the “Ice Lake” Xeon SPs. The plan is to release the Ice Lake Xeons and 200 Series together as a platform, he said.

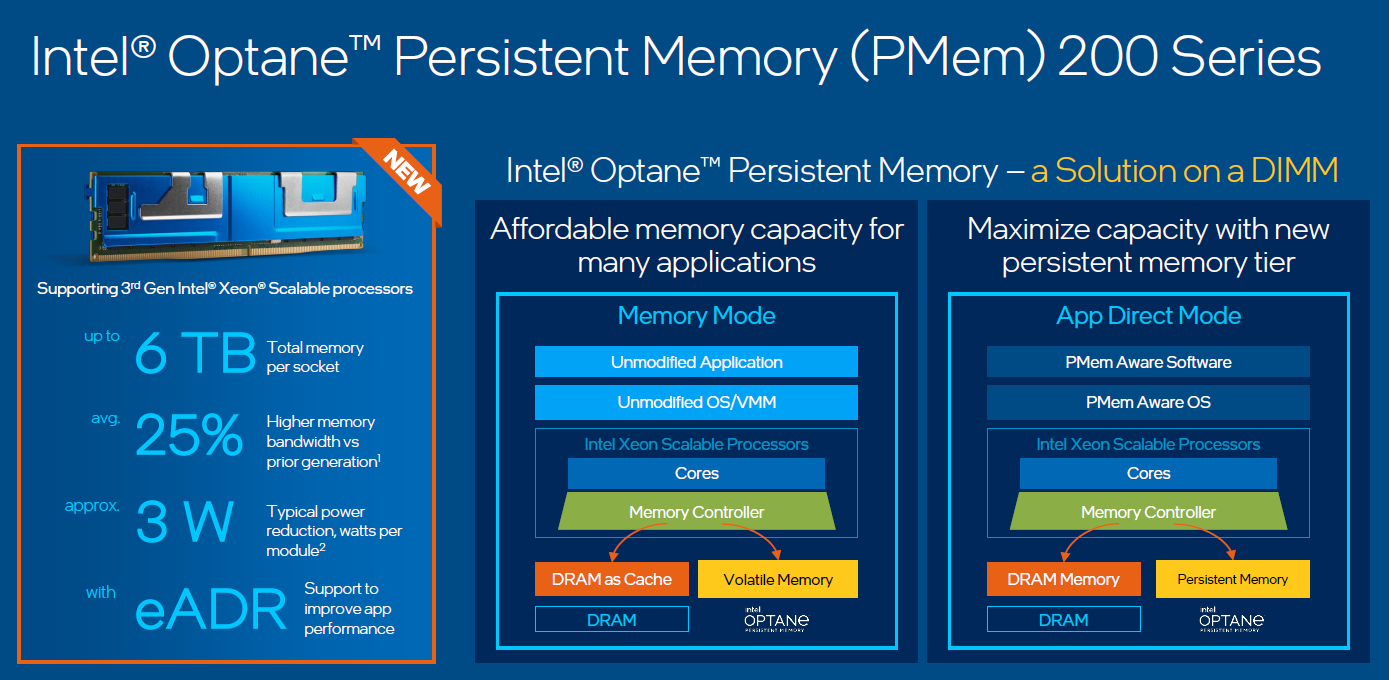

The 200 Series delivers up to 25 percent performance gains over the first generation at a lower power consumption point. It comes in two flavors: Memory Mode, which delivers large memory capacity without application changes and performance near that of DRAM, and App Direct Mode, which also enables large memory capacity and adds data persistence, with software accessing DRAM and persistent memory as two tiers of memory. About 60 percent of organizations currently opt for Memory Mode, though as more software becomes available on App Direct, the mix is expected to shift toward App Direct, Ilkbahar said.

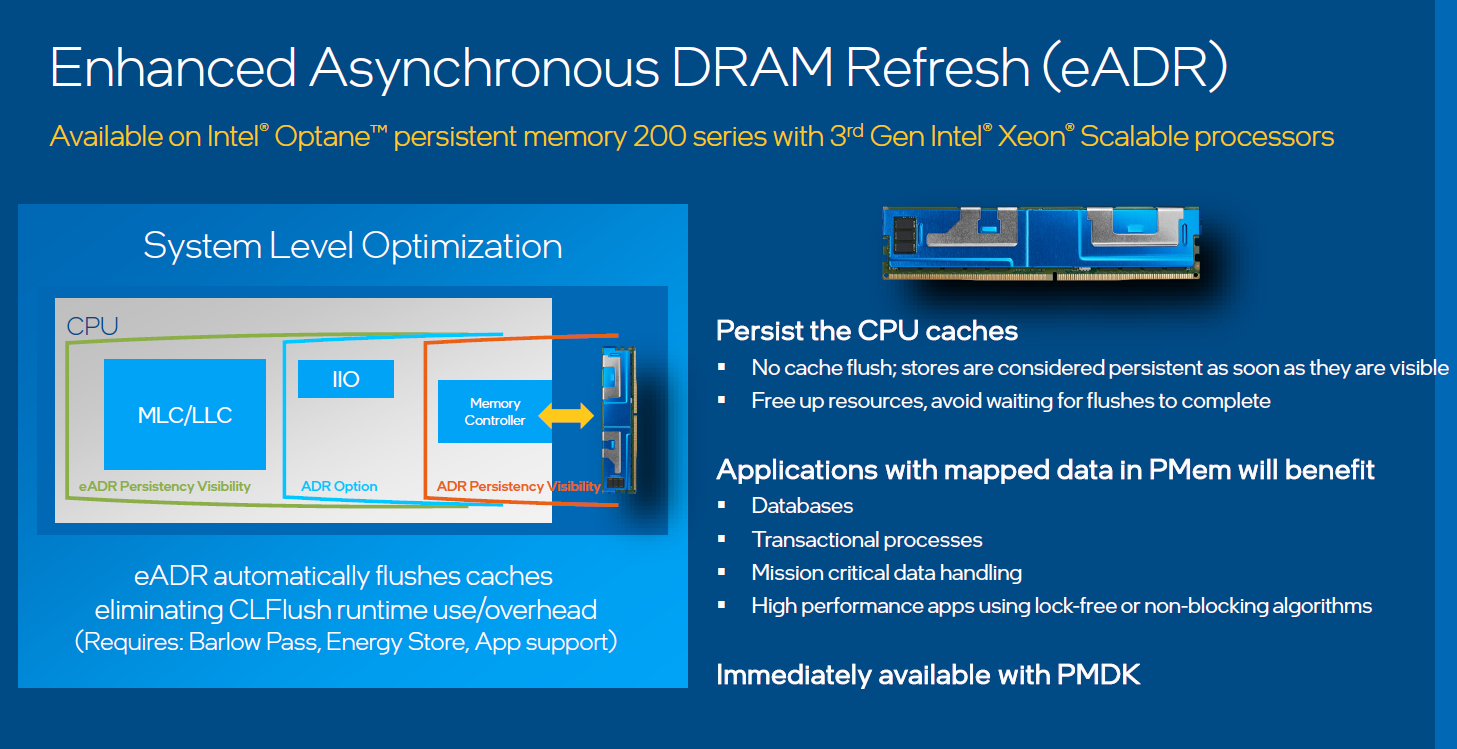

The 200 Series also comes with a feature called enhanced Asynchronous DRAM Refresh (eADR) that is unique to persistent memory. It enables contents of the cache to be flushed automatically into the persistent memory, which improves application performance and protects against disruptions if power is lost.

“When you look at certain applications like databases or transactional processes, mission-critical data-handling applications or high-performance applications that are using non-blocking algorithms, one thing they have to worry about in every data access or data uptake is … ‘Do I care about this data in case I lose the power?’” he said, adding that with these applications, data integrity is hurt if power goes out. “As a result, when they uptake this critical data, they flush the entire cache into persistent memory and they have to restart the cache. This is a significant performance impact. With this new feature – and this is essentially something that’s enabled already in the standard programming model – the application will simply check if the system is enabled with the eADR and, if so, you don’t need to flush the cache and lose the performance. You know that if the power were to go away, the system will automatically take care of that, so you don’t run into the danger of losing your critical data.”
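The check-then-skip pattern Ilkbahar describes can be illustrated with a toy model in Python. This is a simplified simulation, not Intel's implementation or any real persistent memory API: the class `ToyPersistentMemory`, its methods and the `eadr_enabled` flag are all hypothetical names invented for this sketch, and the "cache" and "media" are plain dictionaries standing in for the CPU cache and the Optane modules.

```python
class ToyPersistentMemory:
    """Toy model of a volatile CPU cache sitting in front of persistent media."""

    def __init__(self, eadr_enabled):
        self.eadr_enabled = eadr_enabled  # hypothetical platform capability flag
        self.cache = {}                   # lost on power failure unless eADR drains it
        self.media = {}                   # survives power failure
        self.explicit_flushes = 0         # counts costly software-initiated flushes

    def store(self, key, value):
        """An ordinary store lands in the cache first."""
        self.cache[key] = value

    def flush(self):
        """Software-initiated flush: push cached data to the media."""
        self.media.update(self.cache)
        self.cache.clear()
        self.explicit_flushes += 1

    def persist(self, key, value):
        """Write critical data, flushing only if the platform lacks eADR."""
        self.store(key, value)
        if not self.eadr_enabled:
            self.flush()

    def power_loss(self):
        """Simulate a power failure and return what survived."""
        if self.eadr_enabled:
            # With eADR, the platform drains the cache on its own.
            self.media.update(self.cache)
        self.cache.clear()
        return dict(self.media)


def run_demo(eadr_enabled):
    """Persist one record, cut power, and report flush count and survivors."""
    pm = ToyPersistentMemory(eadr_enabled)
    pm.persist("txn-1", "committed")
    survived = pm.power_loss()
    return pm.explicit_flushes, survived


if __name__ == "__main__":
    for eadr in (False, True):
        flushes, survived = run_demo(eadr)
        print(f"eADR={eadr}: explicit flushes={flushes}, survived={survived}")
```

In both runs the record survives the simulated power loss, but only the run without eADR pays for an explicit flush, which is the performance cost the feature is meant to remove.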

Ilkbahar also announced the 300 Series “Crow Pass” Optane product, which will be available on the future “Sapphire Rapids” Xeon SP chips, which are expected to be released by late 2021 or early 2022. He declined to talk about specs or other architectural details of Crow Pass.
