In a data-centric tech field that is shaped by emerging trends and technologies like artificial intelligence, analytics, greater mobility, and the Internet of Things (IoT), “optimization” has become a marching order for hardware and software makers.

As we saw at Nvidia’s recent virtual GTC 2020 conference, CPUs and GPUs are not going to be enough for workloads that generate massive amounts of data that need to be collected, stored and analyzed. As we’ve noted, Nvidia’s data processing units (DPUs) will be more important for the GPU maker than the CPU technology it’s planning to pick up in its $40 billion bid for Arm.

Applications and data are being created, stored, and accessed in myriad locations: not only traditional central datacenters but also the cloud and the edge. Enterprises are under increasing pressure to innovate faster, which requires greater automation, which in turn relies on technologies like AI and machine learning. That pressure is shared with OEMs, which are looking to roll out servers, storage systems and other appliances that are optimized for particular workloads and environments. Dell Technologies for years has worked with some customers to offer servers that fit their particular needs. The vendor has worked with large enterprises and hyperscalers as part of its Extreme Scale Infrastructure business, developing specialized servers, proofs-of-concept, and similar offerings.

Dell has taken what it’s learned from such work to enable it to develop optimized systems for the mass market. Earlier this year, the company introduced the first of its PowerEdge XE systems aimed at offering the market nonstandard form factors for nonstandard use cases.

The XE2420, rolled out in February, is a compact “short-depth” two-socket system made for edge deployments like 5G networks, where space is tight and conditions can be harsh. It includes four Nvidia GPUs and offers 92 TB of storage to enable it to handle workloads like data analytics. The XE2420 also fits in with Dell’s view of the expanding IT environment of datacenters, clouds and edges as a continuum rather than separate areas, which drives the need for common components and software to ensure ease of use and easy movement of applications and data from one place to another.

“It is about taking something that is familiar to IT and putting it in a form factor that makes it more relevant to each case that they’re in,” Jonathan Seckler, senior director of product marketing at Dell’s Server and Infrastructure Solutions unit, tells The Next Platform. “In this particular case, we took a PowerEdge R440 motherboard and built it into an edge-specific use form factor, with short depth, improved thermal capabilities [and] very high-density flash storage to fit in that smaller, short form factor. … That was an easy win because edge computing is a huge growth factor. Everyone is talking about how data is exploding outside of the datacenter and we need to bring something to market in that space.”

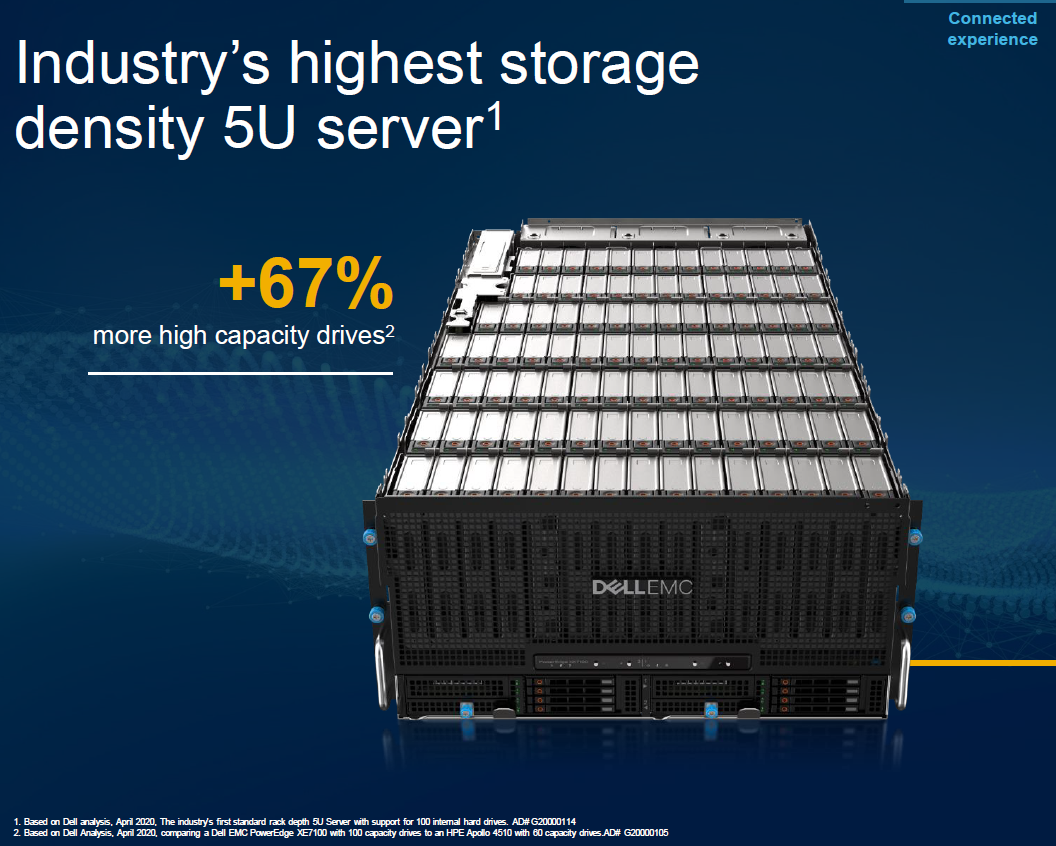

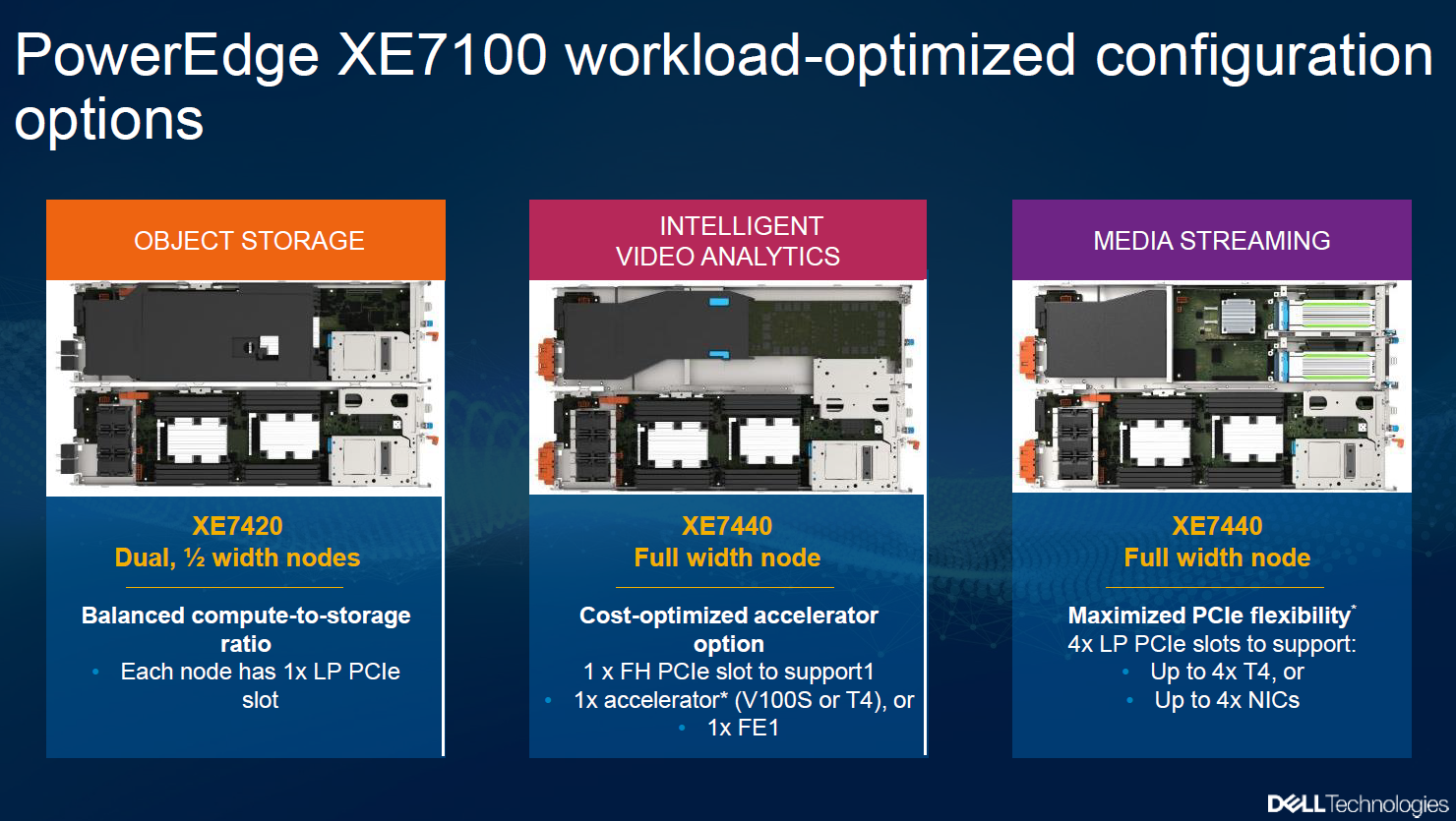

Eight months later, the tech giant is coming out with its latest PowerEdge XE system, this one aimed at the challenges presented by the world of big data. The XE7100 is a 5U system with the ability to hold up to 100 hard drives of 16 TB or more, giving organizations almost 1.5 PB of storage in a single chassis. It offers up to two dual-socket nodes, with up to 52 cores per node, and later this year will include up to four GPU accelerators and multiple memory options. It’s aimed at object storage, intelligent video analytics and media streaming, according to Seckler.
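The capacity figure can be sanity-checked with some quick arithmetic. The sketch below uses only the drive count and drive size quoted above; the comparison of decimal petabytes with binary pebibytes is our own illustration of why a raw total might be quoted as somewhat less than 1.6 PB, not a statement of how Dell arrived at its number.

```python
# Illustrative arithmetic only. Drive count and size are taken from the article;
# the decimal vs. binary comparison is an assumption about how totals get quoted.
DRIVES = 100          # maximum drives per chassis
DRIVE_TB = 16         # decimal terabytes per drive ("16 TB or more")


def raw_capacity_bytes(drives: int, tb_per_drive: int) -> int:
    """Raw capacity in bytes, before any RAID, erasure coding, or filesystem overhead."""
    return drives * tb_per_drive * 10**12


def to_pb(n_bytes: int) -> float:
    """Decimal petabytes (10**15 bytes)."""
    return n_bytes / 10**15


def to_pib(n_bytes: int) -> float:
    """Binary pebibytes (2**50 bytes)."""
    return n_bytes / 2**50


raw = raw_capacity_bytes(DRIVES, DRIVE_TB)
print(f"{to_pb(raw):.2f} PB decimal, {to_pib(raw):.2f} PiB binary")
```

Usable capacity in a real object store would be lower again once replication or erasure coding is applied.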

Dell leveraged what it had developed for the Datacenter Scalable Solutions (DSS) 7000, a dense hyperscale storage server. Engineers took capabilities from that system and put them into a form factor that could fit a standard 19-inch rack, he says.

“We redesigned that whole chassis around fitting into a standard 19-inch rack with the standard depth,” Seckler says. “They did a lot of work to support it with our OpenManage and iDRAC management suites. Now we’re able to leverage that knowledge and bring it to market for customers. And we target object storage for large CEPH-type storage clusters, video analytics, that kind of thing. With video storage, we’ve got some customers who have legal requirements to hold so many days of video surveillance or records on-premises in case of a crime or something like that. With PowerEdge XE7100 and the amount of storage it can hold, we can hold almost 60 to 90 days of high-definition video in a single box. That’s a huge advantage.”
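To get a rough feel for what 60 to 90 days of retention means, the sketch below estimates how many continuously recording camera streams would fit in one chassis. The 4 Mbit/s per-stream bitrate is purely a hypothetical value chosen for illustration, and the calculation uses raw capacity with no allowance for redundancy, so treat the results as an upper bound under those assumptions rather than a Dell sizing figure.

```python
# Back-of-the-envelope retention estimate. ASSUMPTIONS (not from the article):
# a constant 4 Mbit/s per HD stream and 100% of raw capacity available for video.
RAW_BYTES = 100 * 16 * 10**12      # 100 drives x 16 TB, from the article
STREAM_BITRATE_BPS = 4_000_000     # hypothetical HD stream bitrate
SECONDS_PER_DAY = 86_400


def bytes_per_stream(days: int, bitrate_bps: int = STREAM_BITRATE_BPS) -> int:
    """Bytes written by one continuously recording stream over the given days."""
    return bitrate_bps * SECONDS_PER_DAY * days // 8


def max_streams(days: int, capacity_bytes: int = RAW_BYTES) -> int:
    """Whole number of streams whose footage fits for the full retention period."""
    return capacity_bytes // bytes_per_stream(days)


for days in (60, 90):
    print(f"{days} days: up to {max_streams(days)} streams")
```

Changing the assumed bitrate scales the result inversely: doubling it halves the number of streams that fit.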

The Flatiron Institute, a New York City-based research organization that runs a collaborative environment for researchers, data scientists, and programmers who run such workloads as data analysis, theory, modeling and simulation, has been a DSS 7000 customer and is now adopting the XE7100, he says. The new systems can add to the collaborative nature of the work by enabling the organization to store large amounts of complex data that the researchers can access.

Dell also leveraged the multi-node design of the PowerEdge C6420 server, which is based on a sled architecture, to offer enterprises flexibility with configurations. The XE7100 takes the modular system’s design, which allows organizations to “either do a configuration of 100 drives to a single sled with accelerators or 50 drives connected to two different servers or sleds in any configuration. That way you can balance the latency vs. sheer storage amount vs. compute capacity, etc. It gives customers a lot of flexibility,” he says.

Dell plans to continue growing the PowerEdge XE family with more optimized systems. Seckler wouldn’t give details but said AI workloads will likely be addressed.

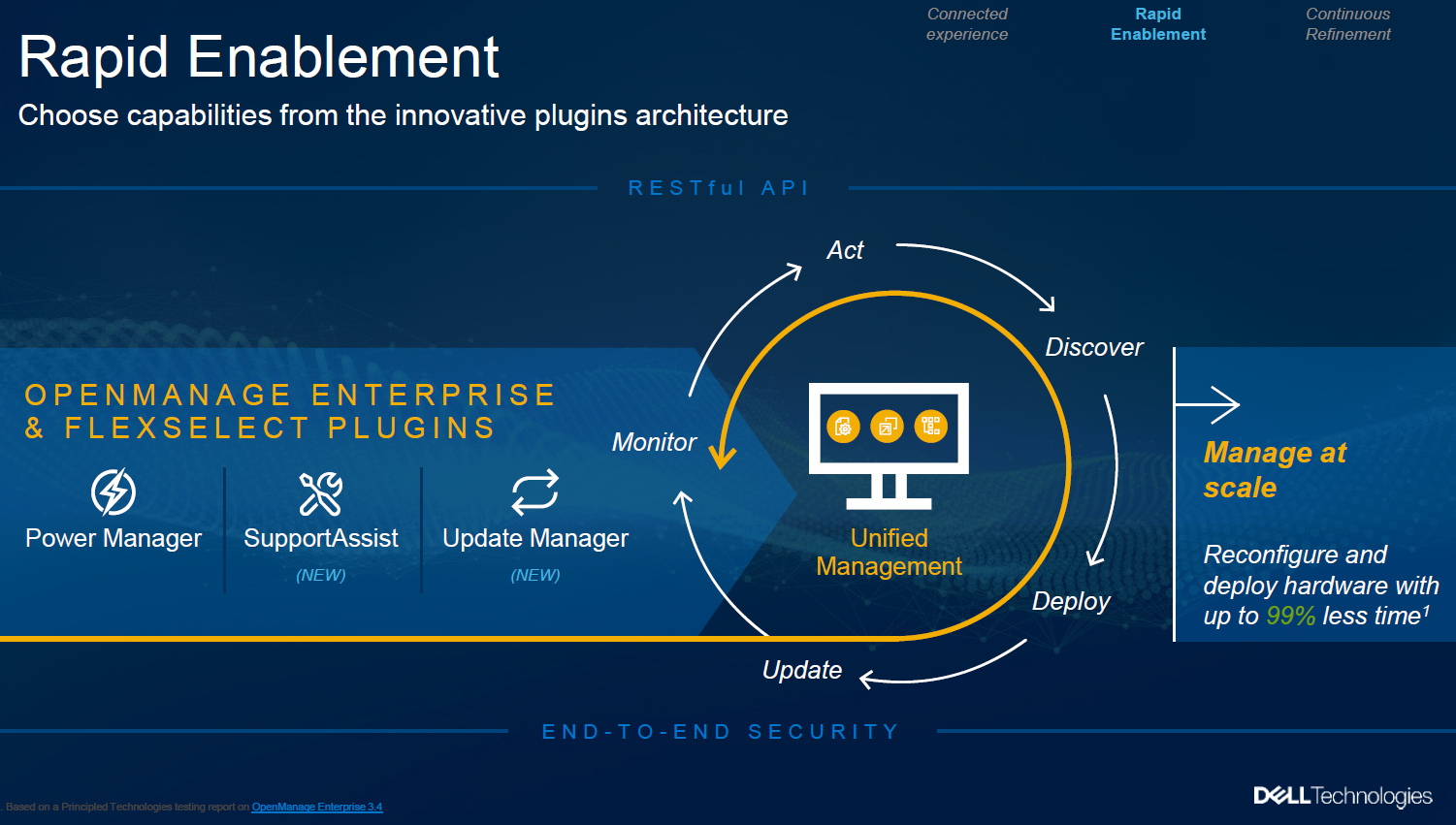

As it did with the XE2420, Dell also is supporting the XE7100 with enhancements to the OpenManage and iDRAC management software. Key to what Dell is looking to do is to add more automation and intelligence that can be spread across the vendor’s portfolio, according to Kevin Noreen, senior director of product management for systems management for Dell’s Server and Infrastructure Solutions unit.

“How do [the servers] give you the productivity as quickly as possible?” Noreen tells The Next Platform. “They don’t want to spend a lot of time on that. They want to automate the onboarding process. They want to be able to make sure that from a complete management perspective, they’re automating as much as possible. There’s a couple of different things that we’ve done. We’ve always offered for the past five [or] six years profile-based provisioning through either APIs or direct communications through iDRAC or through OpenManage Enterprise, which is our flagship console that would make it simple and easy for people to onboard.”

With OpenManage Enterprise and iDRAC, within 60 seconds the server automatically announces itself, is discovered on the network, and onboarding starts as part of OpenManage Enterprise, he says. Once the server is identified, the profiles are automatically pushed out to the systems. It’s a faster process than the usual approach, in which the software does an occasional “ping sweep” of IP addresses to find what has connected to the network, according to Noreen.
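The difference between the two discovery styles can be sketched in a few lines. This is a conceptual illustration only: `sweep_targets` and `AnnounceRegistry` are hypothetical names, the subnet is a documentation address range, and nothing here reflects the actual OpenManage Enterprise or iDRAC interfaces. A sweep has to consider every address in a range on each pass, while an announcement hands the console exactly one address to act on.

```python
import ipaddress


def sweep_targets(cidr: str) -> list[str]:
    """Every usable host address a ping sweep of this subnet would have to probe."""
    return [str(ip) for ip in ipaddress.ip_network(cidr).hosts()]


class AnnounceRegistry:
    """Hypothetical stand-in for a console that listens for server announcements."""

    def __init__(self) -> None:
        self.pending: list[str] = []

    def on_announce(self, address: str) -> None:
        # One announcement queues one onboarding task; no scan is required.
        self.pending.append(address)


# Polling model: the work grows with the size of the address range.
print(len(sweep_targets("192.0.2.0/24")), "addresses to probe per sweep")

# Announcement model: the work grows with the number of new servers.
registry = AnnounceRegistry()
registry.on_announce("192.0.2.17")
print(registry.pending)
```

On top of the per-sweep cost, a newly connected server in the polling model also has to wait for the next scheduled sweep before it is noticed at all.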

In addition, Dell is adding new modules to the management software to build off what it rolled out last year. OpenManage Enterprise FlexSelect was introduced as an architecture that offers an interface for managing parts of the server and that can be integrated with third-party management consoles.

Power Manager was rolled out last year to find and control underutilized resources and power consumption. The company now is unveiling SupportAssist to help prevent downtime by leveraging analytics, predictive alerts and automatically created support tickets. In addition, Update Manager automates update tasks.
