It is no surprise that the key criterion organizations look for when shopping for high performance computing storage is performance. It's right there in the name. That will remain true as workloads evolve and as the need for HPC capabilities – storage and otherwise – grows among enterprises swamped by waves of data that they must somehow capture, manage, and analyze to extract the information that will enable them to make informed business decisions and roll out products and services to their customers more quickly.

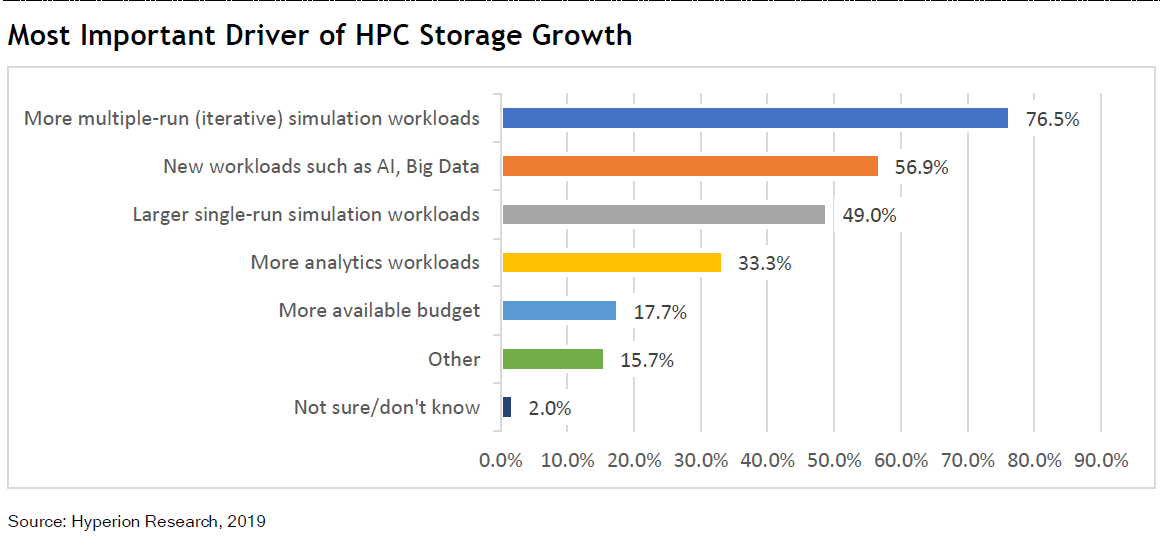

There is a growing need for HPC capabilities in the enterprise, not just for traditional simulation and modeling but for data analytics and artificial intelligence workloads, too. A study released this week by Hyperion Research and commissioned by HPC storage vendor Panasas reiterated that performance was at the top of the list for HPC storage shoppers, and that iterative simulation workloads and newer jobs around technologies like AI and big data were driving the growth in HPC storage capacity. Indeed, in the global survey of a broad array of datacenter and storage professionals, managers, and users, performance topped the list of the most important criteria when buying HPC storage, at 57 percent. Price was second, at 37 percent.

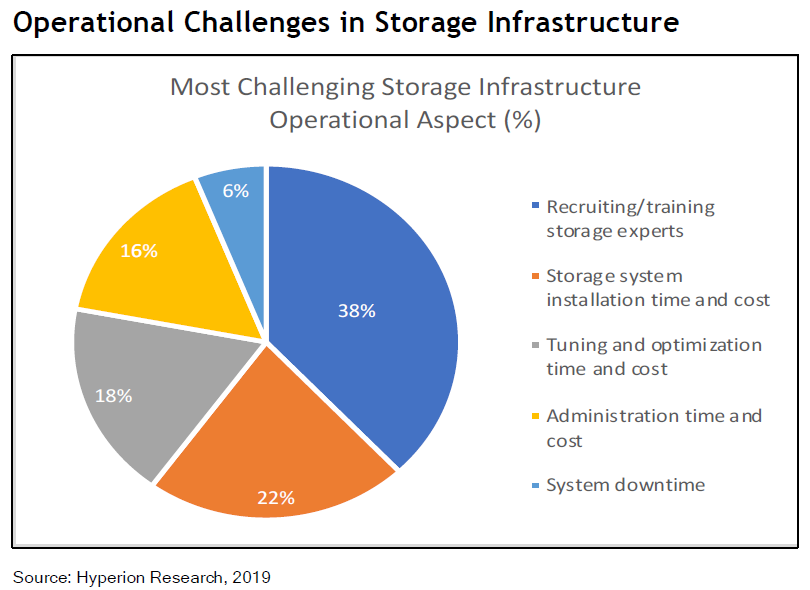

However, tied with price for second was total cost of ownership (TCO), which the Hyperion researchers purposely didn't define because they found that TCO often meant different things to different organizations. That said, Hyperion Research authors Steve Conway and Earl Joseph wrote in the study that the survey results "confirm the importance of what happens during the period of ownership, after the storage system has been purchased. The findings show that for many HPC storage buyers, operating expenses – ranging from staffing needs and power to the cost of unscheduled downtimes – are just as important considerations in upfront buying decisions as the initial acquisition cost of the storage system."

According to Panasas, the high importance placed on TCO in the study indicates that users are putting greater emphasis on it. The survey also found that organizations have historically been willing to put up with high rates of downtime, which can cost them millions of dollars over a matter of days, in order to get the performance they need. That drive for cost-effective performance has come with complexity and unreliability that resulted in lost productivity, long recovery times from storage failures, and the challenge of finding skilled staff to run and manage the environment, Panasas says.

"Storage system failures and lost productivity are the norm in HPC storage," Jim Donovan, chief sales and marketing officer for Panasas, tells The Next Platform. "If you think about any other enterprise technology, this would not be acceptable, but in the case of HPC infrastructure, it was sort of the medicine you had to take to get performance at any cost. That was so important that putting together the capability to support this became paramount and why that's the biggest operational challenge."

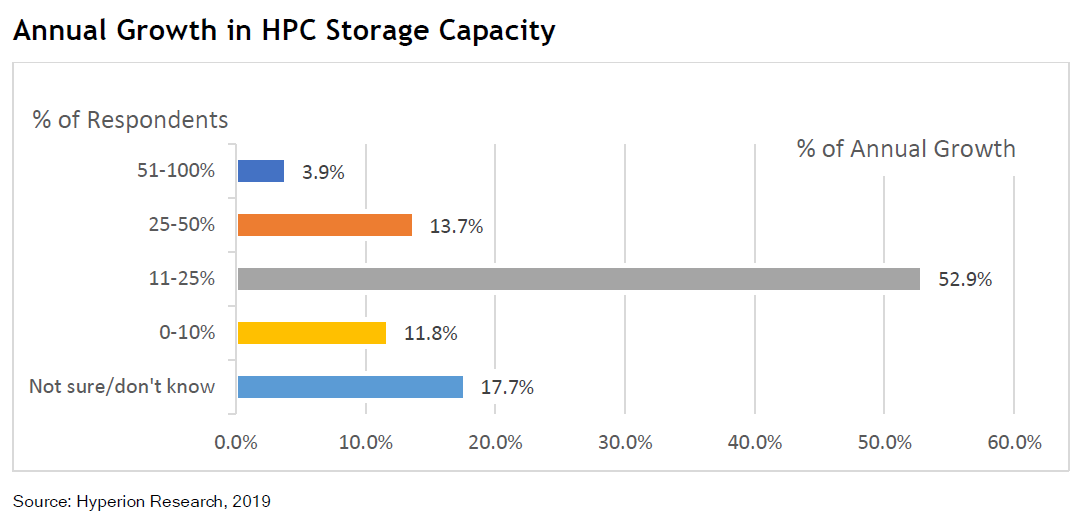

The organizations surveyed differed widely in the amount of HPC storage capacity they had, from less than 100TB to more than 50PB, and more than half expected their HPC storage needs to grow between 11 percent and 25 percent annually. In addition, 43 percent were managing all of this with one to three full-time equivalent (FTE) staff. Almost half of the organizations said they experience storage system failures at least once a month, with downtimes ranging from less than a day to more than a week. Each day of downtime costs the companies anywhere from less than $100,000 to more than $1 million.

One point the study made was that while 82 percent of organizations said they were relatively satisfied with their current HPC storage vendor, a "substantial minority" said they were likely to switch vendors the next time they upgrade their primary HPC systems. The "implication here," the authors wrote, "is that a fair number of HPC storage buyers are scrutinizing vendors for competencies as well as price."

Findings like that were among the reasons Panasas – which caters to businesses a step below the major national laboratories and similar institutions in size and needs – commissioned the study, which surveyed organizations with annual revenues ranging from less than $5 million to more than $10 billion. The company wanted to help quantify the operational cost challenges companies face with HPC storage. At the same time, it wanted to present itself as a more reliable alternative that can also deliver high performance at reasonable cost through its PanFS parallel file system, which is delivered on the vendor's ActiveStor Ultra appliance, introduced in November 2019.

Robert Murphy, director of product marketing for Panasas, tells The Next Platform that when the company spoke with a focus group about the study, some members said that during the procurement process they would forget about the operational issues they had with their current storage environments. In addition, they often didn't realize there were alternatives to consider, Murphy says, adding that "they'll run the tables from GPFS to Lustre to BeeGFS and back again because they really don't think they have an option and the whole point of this exercise is to show them that there is a new option now."

Panasas also has to overcome what Donovan calls an "inertia" among organizations when buying HPC storage.

“People buy what they know and are familiar with, particularly at the largest labs,” he says. “That’s not really our sweet spot. Our sweet spot is not the very largest 300 or 400 petabyte system because they can have an open-source product and they can bend it to their needs, and they have the skillsets and the processes, the people to manage it. Right underneath the most advanced sites, everybody else relies on the people to make it do what it does. We have so many anecdotal stories of failures. These are significant institutions that have had failures, data loss, these challenges of downtime and they’ve had to live with them because there wasn’t a story before, which now there is. The timing is that most of the market doesn’t know this, that they can have it all.”

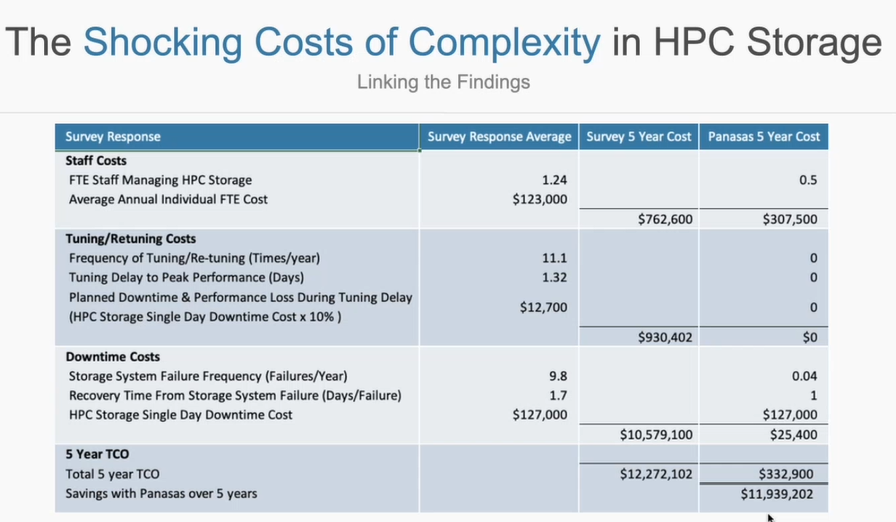

In conjunction with the study, Panasas has put a new TCO calculator on its site to help organizations estimate their own operational costs. Using the calculator, Panasas averaged the various operational costs reported in the survey responses, projected them over five years, and compared them with the costs associated with its own technology, arriving at almost $12 million in savings over the five-year period.
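Panasas does not publish the internals of its calculator here, so the following is only a minimal sketch of how a five-year TCO comparison of this kind might be structured, assuming TCO is modeled as acquisition cost plus yearly staffing, power, and downtime costs. The class, field, and function names are hypothetical, and the input figures in the usage example are made up for illustration; they are not survey data or Panasas numbers.

```python
from dataclasses import dataclass


@dataclass
class StorageCosts:
    """Hypothetical cost inputs for one HPC storage system, in US dollars."""
    acquisition: float        # upfront purchase price
    staff_fte: float          # full-time-equivalent staff needed to run it
    cost_per_fte: float       # fully loaded annual cost of one FTE
    annual_power: float       # yearly power and cooling cost
    failures_per_year: float  # unplanned outages per year
    days_per_failure: float   # average downtime per outage, in days
    cost_per_down_day: float  # lost productivity per day of downtime

    def annual_opex(self) -> float:
        """Yearly operating cost: staffing + power + expected downtime cost."""
        staffing = self.staff_fte * self.cost_per_fte
        downtime = (self.failures_per_year * self.days_per_failure
                    * self.cost_per_down_day)
        return staffing + self.annual_power + downtime


def tco(costs: StorageCosts, years: int = 5) -> float:
    """Assumed TCO model: acquisition plus flat yearly opex over the period."""
    return costs.acquisition + years * costs.annual_opex()


def savings(incumbent: StorageCosts, alternative: StorageCosts,
            years: int = 5) -> float:
    """How much less the alternative costs than the incumbent over `years`."""
    return tco(incumbent, years) - tco(alternative, years)


# Illustrative, made-up inputs: monthly one-day outages at $100,000 per day
# with three admins, versus a pricier system with no outages and half an FTE.
incumbent = StorageCosts(acquisition=2_000_000, staff_fte=3,
                         cost_per_fte=150_000, annual_power=100_000,
                         failures_per_year=12, days_per_failure=1,
                         cost_per_down_day=100_000)
alternative = StorageCosts(acquisition=2_500_000, staff_fte=0.5,
                           cost_per_fte=150_000, annual_power=100_000,
                           failures_per_year=0, days_per_failure=0,
                           cost_per_down_day=100_000)

if __name__ == "__main__":
    # Prints "Five-year savings: $7,375,000" for the inputs above.
    print(f"Five-year savings: ${savings(incumbent, alternative):,.0f}")
```

Even with a higher purchase price, the alternative in this toy example comes out ahead because downtime dominates the incumbent's operating costs, which is the kind of effect the survey's emphasis on operating expenses points to. A real calculator would likely add factors this sketch ignores, such as capacity growth and discounting.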

The company also points to statements from some current customers who report no unplanned downtime over eight years of using Panasas products. According to Panasas, PanFS on the ActiveStor Ultra appliance is low-touch and doesn't need highly skilled managers, so companies can run a Panasas environment with part-time employees, which is important for its customers. When buying HPC storage, mid-size research firms often look at the systems their larger brethren are buying and follow suit, assuming the larger research institutions know what they are doing. The difference is that those organizations have the skilled people to deal with the various challenges. The smaller companies don't and, as a result, can rack up significant operational costs, Donovan says.
