Enterprise IT continues to cast its attentions – and sometimes its aspersions – out to the edge, that place outside of traditional datacenters and beyond the cloud where data is increasingly being generated and processed.

At The Next Platform, we have written about the systems and platforms that hardware and component makers like Hewlett Packard Enterprise, Lenovo, Cisco Systems, Intel and Nvidia are building for the edge, and the drivers behind the expanding edge computing environment are well known.

The massive amounts of data created by organizations are increasingly being generated at the edge, thanks to such trends as the proliferation of mobile devices, the cloud and the Internet of Things (IoT), all of which are helping to create highly decentralized IT environments. Dell EMC points to Gartner’s prediction that by 2022, 75 percent of the data that’s created will come from outside of core datacenters. There also is a growing need to more quickly collect, store, analyze and act on this data, which is fueling the need to get that work done at the edge, closer to where the applications run and the data is created. That means pushing not only compute and storage out there, but also modern tools like analytics, artificial intelligence (AI) and machine learning. The oncoming jump to 5G networking will only add fuel to all this.

Despite all the focus on the edge in recent years, in many ways it’s an environment that’s still taking shape. That shows in how people expect it to roll out over the next several years. Keerti Melkote, founder of Aruba Networks, the company that is now the tip of the spear for HPE’s edge efforts, told us last year that while hardware is important, it will be applications that drive the edge infrastructure. There has been talk of micro-datacenters, specially designed hardware and emerging technologies like Gen-Z networking and storage-class memory, or SCM.

Last year, John Roese, global chief technology officer at Dell EMC, spoke with The Next Platform about the different types of edge environments that will arise and the need for the infrastructure underneath all of them to share common components. In keeping with that vision, the company this week is unveiling a range of new offerings that have their places along the continuum that Dell EMC describes as going from the edge to the datacenter core and out to the cloud. All are designed with the understanding that data is now central for enterprise IT and that it’s coming from myriad sources, is increasingly unstructured and often has to be analyzed in real time in untraditional environments.

“We see the edge as really being defined not necessarily by a specific place or a specific technology, but instead it’s a complication to the existing deployment of IT in that because we are increasingly decentralized,” Matt Baker, senior vice president for strategy and planning at Dell EMC, said during a webcast. “As we’re using our IT environments, we’re finding that we’re putting IT infrastructure, solutions, software, etc., into increasingly constrained environments. When I say constrained, I’m saying that a datacenter is a largely unconstrained environment. You build it to the specification that you like, you can cool it adequately, there’s plenty of space, so on and so forth. But as we’ve placed more and more technology out into the world around us to facilitate the delivery of these real-time digital experiences, we find ourselves in locations that are challenged in some way. They can be challenged by bandwidth and network connectivity while needing to process a significant amount of data. They can be environmentally constrained. They could be in dusty, dirty environments. In many of the telco environments, you find dimensional constraints — short-depth racks that require different sizes and form factors. There are many electrical issues. One of the bigger ones is that you have operational constraints. It’s in many of these cases we are now deploying infrastructure and solutions and software out into the world, far away from skilled IT staff, which puts greater pressure on the ability to manage highly distributed environments in a hands-off, unmanned environment.”

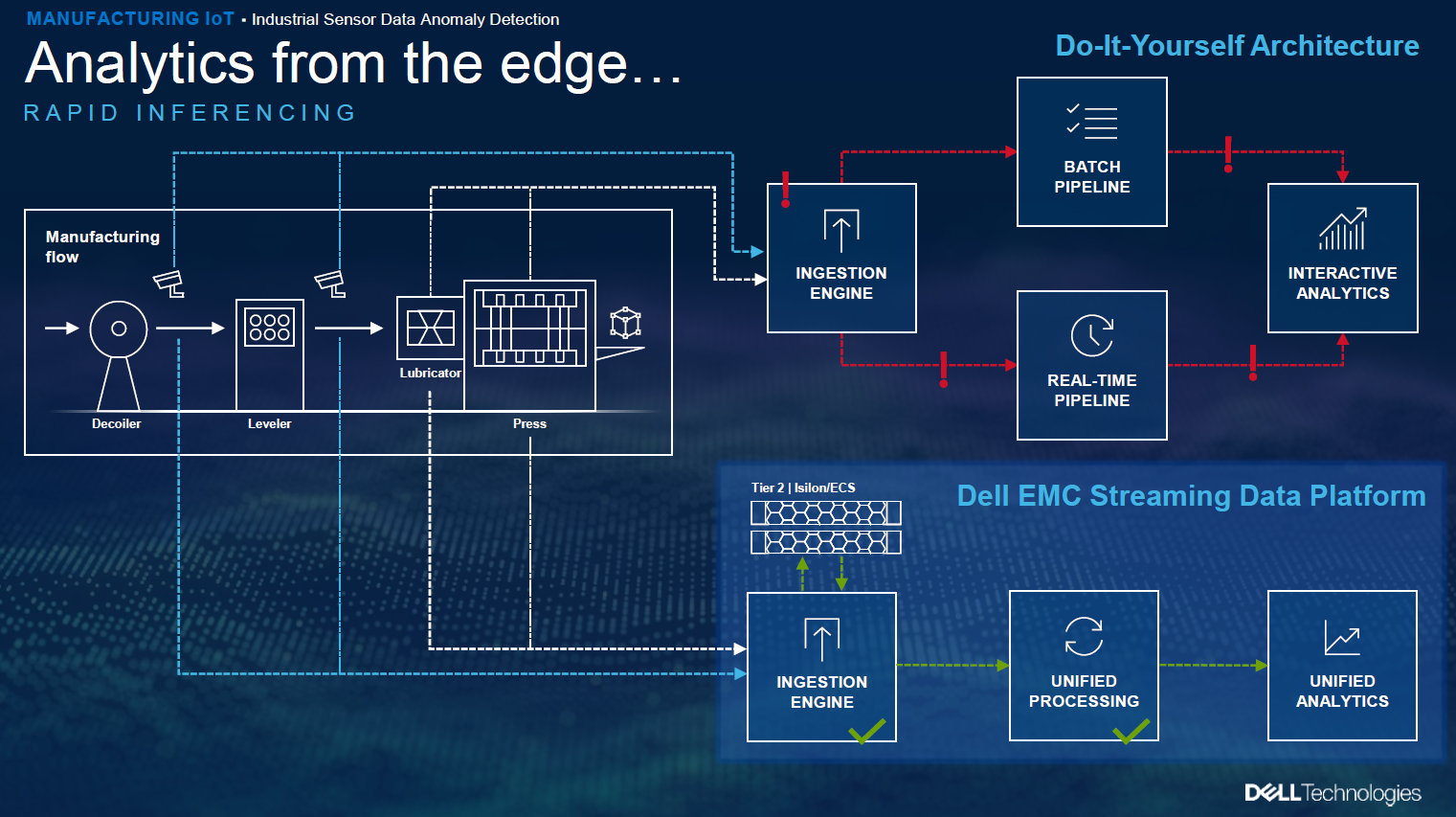

The edge is not an island unto itself, but part of a larger IT environment that also will touch the data, Baker said. Data may need to be analyzed at the edge, but machine learning and AI are also being used for inferencing and for real-time analytics that find patterns and trends in the data, which can then be sent back to the datacenter for training new models and optimizing existing ones.

With that in mind, Dell EMC is introducing the PowerEdge XE2420, a compact “short-depth” two-socket system for space-constrained and harsh environments that can be used for such jobs as building out networks for 5G. It can run up to four Nvidia GPU accelerators and offers 92 TB of storage for modern workloads like data analytics. The company also is rolling out the Modular Data Center Micro 415, a pre-integrated offering that includes power, cooling and remote management in a size that is shorter and narrower than a parking spot. It’s a way to bring datacenter capabilities out of the datacenter to run such jobs as analytics at the edge when there’s not enough time or bandwidth to send the data to the cloud or datacenter. There also is the updated XR2 ruggedized system that allows for AIOps management and more GPU accelerators.

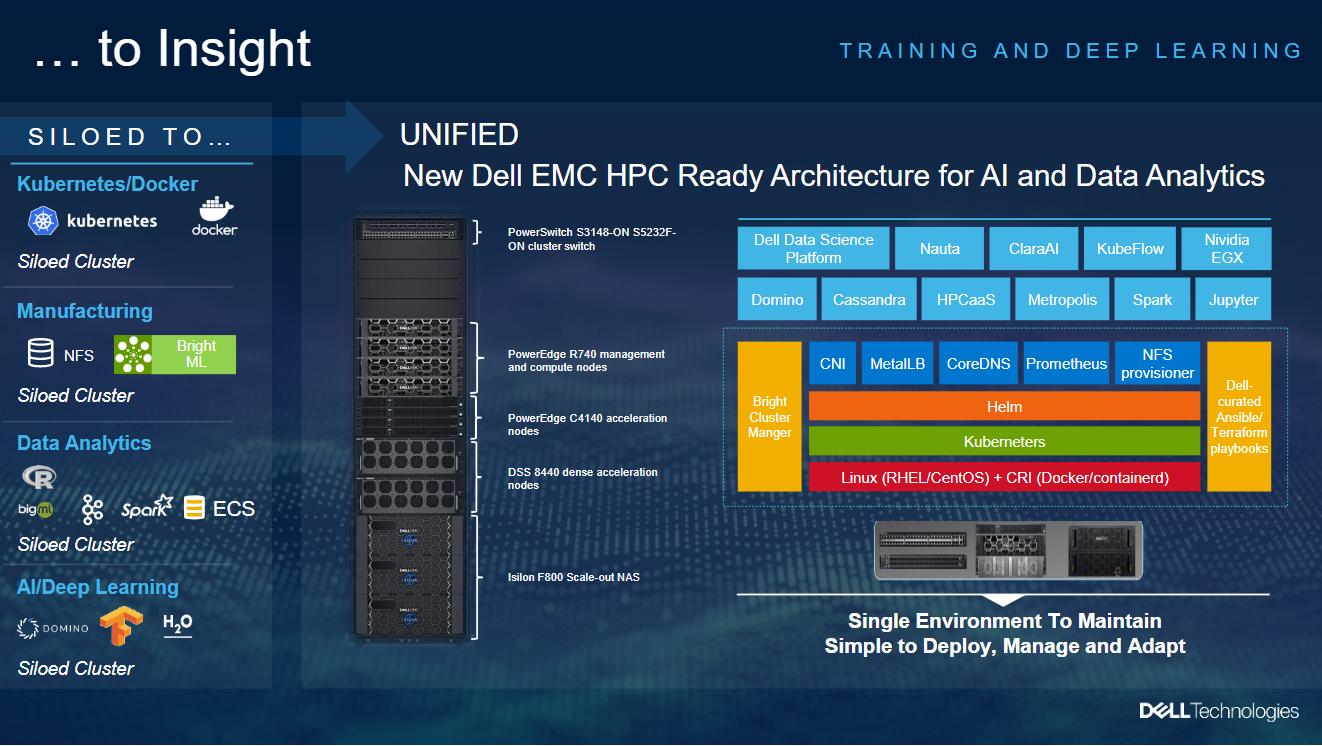

The vendor’s new Streaming Data Platform, which leverages its Isilon and ECS tiered storage, allows for analysis and inferencing of streaming data at the edge. It is designed to work with Dell EMC’s HPC Ready Architecture for AI and Data Analytics, an integrated system that includes PowerEdge R740 compute nodes, C4140 and DSS 8440 accelerator nodes, Isilon F800 scale-out network-attached storage (NAS) and a PowerSwitch S31480-ON or S5232F-ON cluster switch. The architecture uses Bright Cluster Manager, runs Linux (Red Hat Enterprise Linux or CentOS), Kubernetes and Docker containers, and leverages such software as Nauta, ClaraAI, Kubeflow and Cassandra.

The goal is to give enterprises a single platform for their workloads, according to Baker.

“A lot of folks are looking to develop new AI- and [machine learning]-like workloads, and that requires that they learn a number of different things,” he said. “Today, that world is pretty siloed. If you want to utilize something like Bright machine learning with NFS in a manufacturing environment, that’s one build. If you want to use Spark, ECS and big ML in other areas, that’s another platform. We find that this creates a lot of complexity for our customers and really slows them down, slows down their innovation. What we wanted to do is build a Ready Architecture for many AI and data analytics frameworks so that it’s just a whole lot easier for our customers to approach, deploy and leverage all of these new great technologies like Cassandra, Domino Data, and Spark. You might ask why I am talking about a very large rack of systems in an edge presentation? This is an ecosystem, an end-to-end system, and in order to develop a real-time inferencing application, it typically requires that you train it against a large set of data. This is designed to complement and be deployed not physically alongside, but logically alongside the Streaming Data Platform. This is where one can train those new applications, train those new algorithms to be then deployed out to the edge and to fuel those real-time digital experiences.”

Dell EMC also is rolling out the iDRAC 9 Datacenter embedded remote management tool that enables enterprises to use streaming data analytics capabilities to see and understand what is happening with the PowerEdge systems deployed at the edge. The streaming telemetry in the solution lets users track trends, tweak operations and use predictive analytics to ensure high performance and reduce downtime. It also comes with zero-touch security, using metric reports from almost 2.9 million data points on each server and AI techniques to create custom routines on specific data for their systems.

It’s about “the ability to have comprehensive control,” Baker said. “Again, we’re talking about operating in environments with little to no IT staff and the ability to stream in telemetry from your infrastructure is a huge opportunity to increase the availability and to reduce the cost of supporting these increasingly distributed environments. We’re adding a number of new monitoring metrics around GPUs, serial data logs, optical interfaces, and so forth to ensure that you can examine and look at every aspect of your PowerEdge platform deployed out at the edge.”
