Storage systems are inherently data intensive. But the rapid emergence of artificial intelligence as a standard datacenter workload has storage vendors scrambling to design platforms that better meet the more stringent performance needs of these applications.

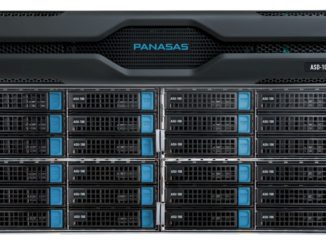

Panasas, one of the few HPC storage specialists that haven’t been swallowed by a larger IT company, knows a thing or two about how to design for performance. After all, it’s been churning out HPC storage appliances since 1999. But AI and similarly data-intensive codes have thrust the issue of I/O latency to the forefront. Minimizing latency ensures that the system’s most expensive components, namely CPUs and GPUs, are kept busy rather than sitting idle waiting for data to arrive.

Our recent conversation with Panasas storage architect Curtis Anderson shows how AI is driving these designs in new directions. The full interview, filmed live at The Next HPC Platform, can be found below.

“The workload HPC has provided to storage for the last couple of decades has been pretty consistent,” explains Anderson. “It has been growing in bandwidth, but not particularly in any other characteristic. AI is the first case where we are really seeing latency become an issue for us.”

To be fair, latency has been an issue for top-tier supercomputing applications for a while, but not nearly as much in the broader commercial HPC domain, which is the principal market that Panasas plays in. But AI is now becoming so broadly adopted that latency will become a limiting factor everywhere in the HPC user community.

The other aspect to this, says Anderson, is the growing amount of metadata, which is mainly being driven by the tremendous number of files used in training AI models. The metadata problem is actually a subset of the latency issue, since with larger numbers of files to process, it becomes more important to minimize the time required to open a file, read something from it, and close it.
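The open, read, close cycle described above can be timed directly. The following is a minimal sketch, not anything Panasas has published: the function name `time_small_file_reads` and its parameters are hypothetical, and it runs against a local temporary directory, where the freshly written files are likely still cached in memory. On a shared network file system the per-file cost would typically be far higher, which is the point Anderson is making.

```python
import os
import tempfile
import time


def time_small_file_reads(num_files=200, file_size=4096):
    """Create many small files, then time open/read/close on each one.

    Returns (total_bytes_read, mean_seconds_per_file).
    Hypothetical helper for illustration only.
    """
    with tempfile.TemporaryDirectory() as tmp:
        # Write the small files that stand in for a training data set.
        paths = []
        for i in range(num_files):
            path = os.path.join(tmp, f"sample_{i}.bin")
            with open(path, "wb") as f:
                f.write(os.urandom(file_size))
            paths.append(path)

        # Time only the open/read/close cycle, not file creation.
        total_bytes = 0
        start = time.perf_counter()
        for path in paths:
            with open(path, "rb") as f:       # open
                total_bytes += len(f.read())  # read
            # the file is closed on leaving the with block
        elapsed = time.perf_counter() - start

    return total_bytes, elapsed / num_files


if __name__ == "__main__":
    total, per_file = time_small_file_reads()
    print(f"read {total} bytes, {per_file * 1e6:.1f} microseconds per file")
```

Because each file holds only a few kilobytes, the time per file is dominated by the fixed cost of opening and closing it rather than by data transfer, so multiplying the file count multiplies that fixed cost.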

According to Anderson, building systems for maximum bandwidth has been relatively straightforward, enabled by a combination of fast disks and SSDs and the parallel data access provided by file management software like PanFS. But incorporating low-latency features into those same high-bandwidth platforms requires some heavy lifting. And since Panasas expects that customers want to share their storage across a mix of HPC workloads – scientific simulations, analytics, and now AI – the system has to deliver on both aspects.

Panasas is in the process of developing a new storage acceleration technology that promises to do just that. The work is being accomplished under the company’s Ludicrous Mode project, an apparent reference to the “Ludicrous Speed” setting of the spaceship in the movie comedy Spaceballs.

Part of the Ludicrous Mode technology will involve NVM-Express-over-Fabrics (NVMe-oF), a storage disaggregation technology that is fast becoming standard fare for content streaming, real-time data analytics, and machine learning. Storage-class memory also looks to be a feature of the Panasas effort. Unlike burst buffers, which were mainly featured in storage setups for high-end HPC, Anderson believes NVMe-oF will be adopted across HPC environments, from elite supercomputers down to enterprise clusters.

As a result, Panasas wants to make sure the Ludicrous Mode technology is accessible to its commercial customers without them having to be concerned with the low-level details of the underlying hardware. As Anderson observed, life scientists writing genomics analysis codes aren’t going to be interested in learning how to optimize I/O patterns across an NVMe storage network to maximize performance. For this to work, he says, “a storage vendor is going to have to step [up] and say ‘we’re going to manage this for you.’”
