The consensus is growing among the big datacenter operators of the world that CPU cores are such a precious commodity that they should never do network, storage, or hypervisor housekeeping work but rather focus on the core computation that they are really acquired to do. And that means that SmartNICs might go mainstream in the coming years, creating an even more asymmetric and hybrid processing environment than many are envisioning down the road.

Strictly speaking, the SmartNIC is not a new idea. There are relatively few new ideas in computing, just new twists on old stuff or things reinvented by those who did – or did not – study history.

If you really want to get technical, IBM has had asymmetric processing with lots of distributed intelligence in all manner of offload engines in its proprietary mainframe and minicomputer systems for many decades. And for just the reasons outlined above.

The RISC processor, invented by John Cocke at IBM Research in 1974, was originally intended to be put into a telephone switch that could handle the then-huge workload of 1 million calls per hour. But this 801 processor, as IBM called its first RISC chip, ended up as an intelligent controller in mainframe disk drives and eventually migrated down into the RT PC as the Unix workstation market was coalescing in the mid-1980s. With the rise of Hewlett-Packard, Sun Microsystems, and Data General in the Unix workstation and then server businesses, IBM launched project “America,” which put a revamped RISC architecture, known as Power, with lots of oomph at the center of a new line of systems, called the RS/6000.

Ironically, IBM’s System/38 and AS/400 minicomputers, launched in 1978 and 1988, respectively, had a relatively modest CISC engine – we always thought this CISC processor was a licensed Motorola 68K processor with its memory addressing and processing extended from 32 bits to 48 bits – and that was because these systems had what IBM called “intelligent I/O processors” that ran chunks of the operating system microcode remotely from the CPUs. And these IOPs were all based on Motorola 68K chips. So why not keep the architecture all similar and make a funky 48-bit 68K? In any event, IBM eventually consolidated the AS/400 and RS/6000 designs, and one of the things that got dropped into the bucket of history was the IOP; all of the I/O processing was brought back into the processor. And here we are, now two decades later, and it looks like the industry is getting ready to offload it back onto SmartNICs because, once again, CPU processing is to be cherished and optimized.

More recently, bump-in-the-wire processing on the network interface card has been used by the financial services industry for more than a decade, usually with FPGA engines doing the work of manipulating data streams before they even hit the systems running transactions. These days, you can offload all or most of a virtual switching stack or a big chunk of a distributed storage stack onto SmartNICs, which tend to have real CPUs embedded in them. Microsoft has FPGA SmartNICs that run a variety of network jobs and can change personality to accelerate its Bing search engine. Amazon Web Services has its “Nitro” SmartNIC, which started off with network virtualization, added storage virtualization, and now can run just about all of a KVM hypervisor on the Arm-based SmartNIC. In all of these cases, the idea is to either accelerate certain functions or to free up CPU cores to do other work – usually both.

We sat down and had a chat about the evolution of the SmartNIC and its prospects in the near and far term at our recent The Next I/O Platform event in San Jose, and specifically we had a conversation with Donna Yasay, vice president of marketing for the Data Center Group at Xilinx (formerly of Marvell); John Kim, director of storage marketing at Mellanox Technologies; and Jim Dworkin, senior director of business development at Intel.

“Microsoft and Amazon have been deploying SmartNICs for a long time, and it is almost inevitable that it should come to this point,” says Yasay, speaking of server architecture. “You can’t just keep throwing compute cores on it, and it gets worse and worse. The Netflix speaker did the exact pitch for SmartNICs: He wants to iterate fast, the I/O is running out, and he wants flexibility. The key thing for Xilinx is that we see SmartNICs not only offloading and accelerating, but they are going to that next level that compute is going to.”

We are only half joking here, but it will be hard to tell if the CPU is a compute accelerator for the SmartNIC or the SmartNIC is the network, storage, and hypervisor accelerator for the CPU.

Mellanox has been providing SmartNICs for nearly five years by some definitions, longer than that using others, and only for a few years with the most aggressive definition.

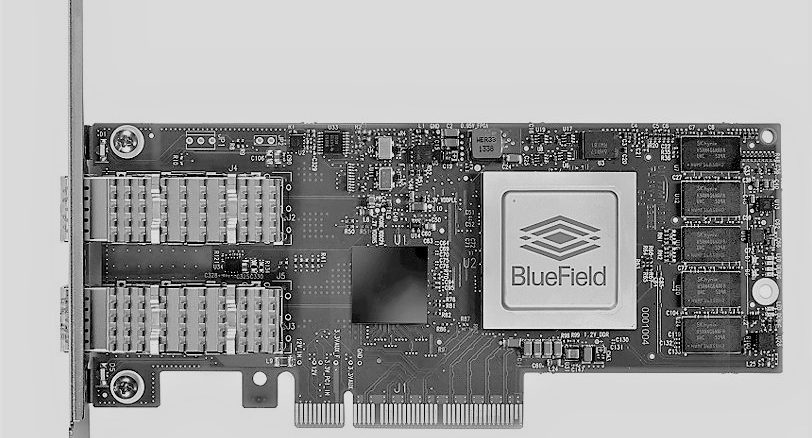

“If you say that a SmartNIC is a programmable NIC with a programmable data plane, Mellanox would say that we have been deploying these since 2015,” explains Kim. “If you say that a SmartNIC has to have a programmable processor and a programmable control plane, then part of the reason that it has taken so long, aside from Moore’s Law slowing down, is that a lot of the customers were waiting for a NIC that had advanced networking functionality to go with the “smart” part – programmable cores or FPGAs.”

Other than hardware, software, systems, and business reasons, there was nothing holding the SmartNIC back until recently, says Dworkin – and yes, he was being funny as well as serious. “The NIC is in the data plane and it can’t go down, and it naturally causes risk aversion for the entire user base, no matter who you are,” Dworkin says. “There were switching costs associated with SmartNICs. At the software level, there are complexities with user space stacks and the division of workloads on some of the networking functions, and there was the need for a vSwitch, etc. And then there were going to be storage offloads and other types of acceleration.” And then, there was the complexity and distinctiveness of the initial SmartNICs. Some were based on custom network processors (NPUs), some were based on FPGAs, some on CPUs in SoC format, and some a mix of these technologies, all with their different software stacks. Lastly, the server OEMs and ODMs had to figure out how to integrate these SmartNICs into their boxes and have the right thermals for them and the right software to support them.

The hyperscalers and cloud builders that have deployed SmartNICs could control a lot of these factors – Microsoft and AWS are on the record as using them, and Google is widely believed to be working on its own design. And now the rest of the world can catch up and commercialize the idea.

If you want to find out more about what these SmartNIC suppliers think about the prospects for this approach to hybrid compute, then you need to watch the rest of the interview.

You could reasonably mention the Meiko “Elan” NICs used in the CS-2 (https://en.wikipedia.org/wiki/Meiko_Scientific#CS-2) from the early 90s here in your “History of smart NICs” too; they had these properties:

1) A SPARC processor in the NIC, which could run code with the same memory protections as the invoking process on the main CPU

2) The NIC was on the SPARC MBus, so cache-coherent with the CPUs

3) Direct remote user-space to user-space memory access with no need for page lockdown

4) No need to enter the kernel to initiate transfers.

Much of this was carried over into Quadrics’ (https://en.wikipedia.org/wiki/Quadrics) and then Gnodal’s (https://en.wikipedia.org/wiki/Gnodal) products.

It seems it takes 25 years for good ideas to be reinvented enough times that people accept them!

(FWIW, I am one of the founders of Meiko :-))

Interesting to hear the different takes on the movement around smartNICs and that there is a trend to move into more markets, as is evident in the discussions from Netflix and others. We already see it with the hyperscalers, which is the premise of this post. However, there are a number of companies like CSPi that have been driving the smartNIC through its evolution of network packet capture and HFT special applications. But we now believe that the smartNIC will evolve well beyond that and become more easily available as part of your server purchase. We are now seeing truly flexible and programmable smartNICs, like the ones we produce, that are only 2x to 3x the cost of typical NICs. But the value is the flexibility of a platform that is close to the data and now allows us to do more than packet capture: we can now provide security isolation beyond VPN services, and compute offload as simple as firing up a container or affinitizing a thread to a core on the smartNIC, as the Linux scheduler does with multiple sockets on a motherboard. We hope there are more articles and sessions to help educate the markets; there is so much further to go and so much for the markets to benefit from. Thanks for the nice work here.