Qumulo was born at a time of change. When the company was founded seven years ago, enterprises were still running most of their business on premises, but the cloud had arrived and software-defined storage was gaining steam. Some of the company’s founders, veterans of EMC’s Isilon scale-out network-attached storage business, thought that whatever the new company developed should be built to work both behind the firewall and in the cloud.

“Back in 2012, when the company was founded and the cloud was at that point just becoming kind of a real thing, one of the core tenets we have is anything we can do on prem, on local hardware, we can do in the cloud,” Molly Presley, global product marketing manager at Qumulo, tells The Next Platform. “Architecturally, it runs in the exact same way, [has] all the same features, our software licensing is completely portable, so if a customer starts with whatever [number of] petabytes on-prem and wants to move part of that to the cloud, there’s no new licenses they need to get. Everything we’ve built is designed for hybrid environments. Cloud [and] on-premises all kind of work equivalently, and that’s why the company built a new file system.”

Qumulo came out of stealth mode three years ago with its cloud-enabled hybrid distributed file system, giving enterprises what the company says is an easy on-ramp into the cloud when they’re ready to make the move.

“We’re definitely seeing that most of our customers are still on-prem and they lean to Qumulo for a handful of reasons,” Presley says. “They like our business model of being cloud-ready, so even if they’re not doing a lot in the cloud today, knowing that they can without a big architectural uplift is really important to them. It’s kind of a future-proofing decision, but they choose us instead of maybe some of the other startups that are out there because we have such deep enterprise expertise.”

As we’ve noted here at The Next Platform, Qumulo’s file system aims to give organizations the range of capabilities they’ll need at a time of rapid change in the IT environment, from big data and the cloud to the Internet of Things (IoT) and software-as-a-service (SaaS), and in the continuing shift from analog to digital. The file system offers cross-protocol access to files and integration with LDAP and Active Directory, so when a large enterprise needs to bring new technology in, that support is already built into Qumulo’s protocols and file system capabilities, she says. The company’s products are designed for the hybrid cloud, whereas other startups went straight to the cloud, eschewing those enterprise capabilities.

The result is that Qumulo is pushing to become the leader in such emerging industries as autonomous vehicle research and workloads that leverage technologies like artificial intelligence (AI), machine learning and analytics. At the same time, the company is displacing GPFS in HPC as well as Dell EMC’s Isilon and NetApp, Presley says.

“The key reason is simplicity,” she says. “Not just simplicity of deploying and managing, though we are much, much easier. The model of how you get a Qumulo system in there tuned and deployed vs. GPFS. It’s hours vs. days. It’s much easier to deploy, especially when you don’t have real technical IT staff that understands file systems. But the bigger thing is the visibility and the real-time ongoing management and capabilities we have. We have … real-time analytics that essentially [eliminate the need for] a file system scan to do maintenance, to figure out where your capacity is being used, to bill back to users.”

With NetApp, Isilon and GPFS, Presley says, gathering that information “requires scanning the entire directory tree, looking at whatever it is you want to know or whatever you want to do, and in these really big unstructured data environments, that can take literally days, and most of those processes have to happen, especially in a NetApp or Isilon, in a serial manner so only one can run at a time and once it finishes, the next job can run. So users often just don’t have the ability to know who is using their data, which users are using it for what, why they’re running out of capacity [or] how much capacity they have. Because of all those things, they’re really blind into their system and unstructured data is growing really quickly, it’s very strategic in businesses now and that really leaves IT administrators blind to what’s going on in their system.”
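The contrast Presley draws is between answering capacity questions by walking the whole directory tree and keeping those answers current as data changes. The Python sketch below illustrates that general idea under our own assumptions: each directory caches the total bytes stored beneath it, and every write pushes its size change up to the root, so a capacity query becomes a single lookup instead of a scan. `DirNode`, `write_file` and `scan_bytes` are hypothetical names chosen for illustration; this is not Qumulo’s implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DirNode:
    """Hypothetical directory that caches the total size of everything beneath it."""

    name: str
    parent: DirNode | None = field(default=None, repr=False, compare=False)
    children: dict[str, DirNode] = field(default_factory=dict)
    files: dict[str, int] = field(default_factory=dict)  # file name -> bytes
    total_bytes: int = 0  # aggregate for this directory's whole subtree

    def subdir(self, name: str) -> DirNode:
        """Return the named child directory, creating it if needed."""
        if name not in self.children:
            self.children[name] = DirNode(name, parent=self)
        return self.children[name]

    def _propagate(self, delta: int) -> None:
        """Apply a size change to this directory and every ancestor: O(depth)."""
        node: DirNode | None = self
        while node is not None:
            node.total_bytes += delta
            node = node.parent

    def write_file(self, name: str, size: int) -> None:
        """Create or resize a file, updating aggregates along the path to the root."""
        self._propagate(size - self.files.get(name, 0))
        self.files[name] = size

    def delete_file(self, name: str) -> None:
        """Remove a file and subtract its size from every ancestor."""
        self._propagate(-self.files.pop(name))


def scan_bytes(node: DirNode) -> int:
    """The scan-based alternative: visit every file in the subtree, O(files)."""
    return sum(node.files.values()) + sum(
        scan_bytes(child) for child in node.children.values()
    )


root = DirNode("/")
projects = root.subdir("projects")
projects.subdir("lidar").write_file("run1.bin", 4_000)
projects.write_file("notes.txt", 100)
# Capacity queries read the cached aggregate instead of walking the tree.
print(root.total_bytes, projects.total_bytes, scan_bytes(root))
```

The trade-off is that each write touches one counter per ancestor directory, which is cheap compared with a days-long walk over billions of files when someone asks where the capacity went.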

Qumulo is again looking to adapt to changing conditions in the IT environment, this time around NVM-Express (NVMe), the storage protocol aimed at improving the performance of flash and other non-volatile memory to help address the increasing demand for more performance and throughput and lower latency as data continues to pile up. System OEMs like IBM, NetApp, Cisco Systems and Dell EMC are all growing the NVMe capabilities in their portfolios, and other tech vendors are following suit.

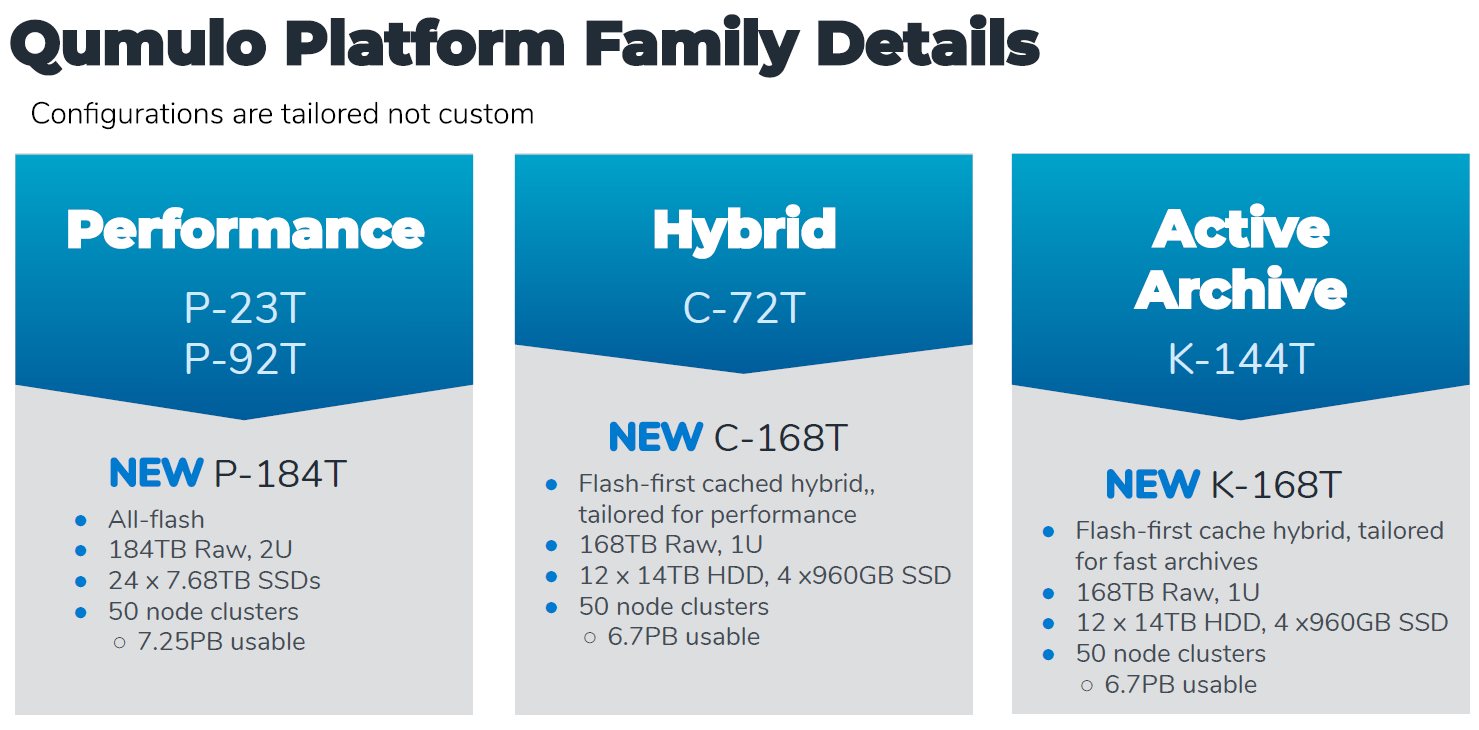

Qumulo this week is rolling out new flash-based storage hardware platforms that can take greater advantage of NVMe, improving not only performance but also cost per workload. The new platforms, the P-184T, C-168T and K-144T, are either all-flash or flash-first offerings, as seen below:

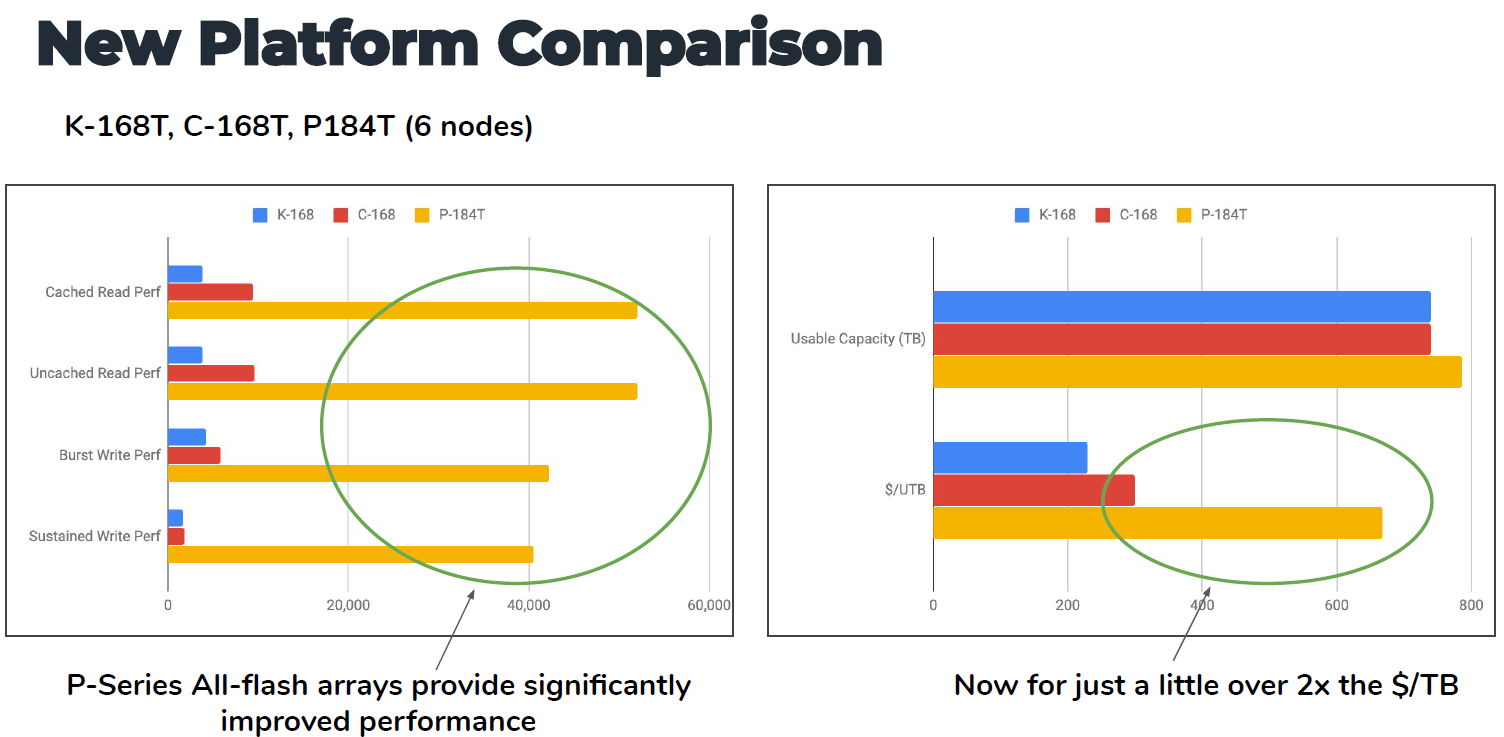

NVMe is at an inflection point, where prices have come down enough that it makes economic sense, Presley says, noting a 30 percent drop over the last quarter, driven by oversupply, suppliers pushing to get their inventory into the market and organizations’ desire to use fast SSDs. NVMe still costs about twice as much per terabyte as hard drives, but the new platforms deliver almost a 10-fold performance increase, which she describes as “a shift here where we really think that NVMe is going to continue to become the storage media of choice. For a lot of users, that would be worth the extra capacity cost, especially in a high-performance workload.”
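Taking Presley’s round figures at face value, and assuming the 10-fold figure is measured against a hard-drive system of the same capacity, the relative performance per dollar works out roughly as:

$$
\frac{\text{performance}_{\text{NVMe}} / \text{cost}_{\text{NVMe}}}{\text{performance}_{\text{HDD}} / \text{cost}_{\text{HDD}}} \approx \frac{10}{2} = 5
$$

This back-of-the-envelope estimate only holds for performance-bound workloads; a workload that is purely capacity-bound would see the roughly 2x cost per terabyte with little offsetting benefit.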

The new platforms are Qumulo on-premises clusters that enterprises can use to build out their storage systems. The company doesn’t make money on hardware (revenue and profits are driven by software), so it can pass savings from commodity pricing on to customers, she says.

Qumulo’s predictive caching also helps drive down costs by using machine learning built into the file system to move files and workloads to the fastest storage or memory available in the system. Organizations pay for the lowest-cost capacity while Qumulo moves active workloads to the faster tiers. Other vendors are trying to do the same but are hampered by the architecture of parallel file systems, in which the metadata is separate from the storage and different code bases run different types of storage. Placing data on the right tier in real time is difficult to do across different code bases. Qumulo has a single code base, which lets it sidestep the problems other vendors are having, she says.
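The article does not detail how Qumulo’s predictive caching decides what to promote, so the Python sketch below uses a deliberately simple stand-in to show the basic mechanism: a small least-recently-used fast tier plus a heuristic that, once it sees sequential block reads, promotes the next few blocks before they are requested. `PrefetchingCache`, `capacity` and `prefetch_depth` are hypothetical, and this fixed rule is not machine learning; it only illustrates why reads can hit the fast tier even though most data lives on cheap capacity.

```python
from __future__ import annotations

from collections import OrderedDict


class PrefetchingCache:
    """Hypothetical fast tier: an LRU set of block IDs plus a sequential prefetcher."""

    def __init__(self, capacity: int, prefetch_depth: int = 4) -> None:
        self.capacity = capacity
        self.prefetch_depth = prefetch_depth
        self.fast_tier: OrderedDict[int, None] = OrderedDict()
        self.last_block: int | None = None
        self.hits = 0
        self.misses = 0

    def _admit(self, block: int) -> None:
        """Insert or refresh a block, evicting the least recently used if full."""
        self.fast_tier[block] = None
        self.fast_tier.move_to_end(block)
        if len(self.fast_tier) > self.capacity:
            self.fast_tier.popitem(last=False)

    def read(self, block: int) -> bool:
        """Serve one block read; return True if it was found in the fast tier."""
        hit = block in self.fast_tier
        if hit:
            self.hits += 1
        else:
            self.misses += 1  # in a real system this read would go to the slow tier
        self._admit(block)
        # If this read continues a sequential run, promote the next blocks early.
        if self.last_block is not None and block == self.last_block + 1:
            for nxt in range(block + 1, block + 1 + self.prefetch_depth):
                self._admit(nxt)
        self.last_block = block
        return hit


cache = PrefetchingCache(capacity=8, prefetch_depth=4)
for b in range(6):  # a sequential scan, e.g. streaming one large file
    cache.read(b)
print(cache.hits, cache.misses)
```

After the first two reads establish a sequential pattern, the remaining reads are served from the fast tier; random access gets no such benefit from this rule, which is where a learned model of access patterns would have to do better.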

To eke out more performance, Qumulo engineers developed a new kernel that uses memory more efficiently and rewrote how the system writes pages to memory and how memory is used in the software. The result is up to a 40 percent increase in read performance on the all-flash systems, which will play well in the HPC field, Presley says.

“If you think about the HPC market and how much is going on with analytics, machine learning [and] things like that, everything we can do on the read side has become a lot more important, where five, 10 years ago it was more about streaming writes off the computing cluster,” she says. “This data is becoming more and more active all the time and we’re really having to focus on how we can keep pace with the amount of activity on the read side as well.”

On the software side, Qumulo is introducing I/O Assurance, which uses some of the new memory and processors in the new platforms to ensure that enterprises don’t have to over-allocate their storage and other resources to avoid I/O problems during a system rebuild, which will save money and time, Presley says. With Qumulo Audit, the vendor is addressing security by enabling enterprises to “see who’s logged into the system, what they did when they’re there, who’s changed permissions [or] deleted files, all those different things. We have a very extensive log that we in real time are constantly updating that people can take a look at what’s going on within the system from a usage perspective or a user behavior perspective.”
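One common way to keep a rebuild from starving client I/O, offered here only as an illustration and not as a description of how I/O Assurance works, is to reserve a share of device bandwidth for clients and let the rebuild use whatever clients leave idle. In the hypothetical `allocate_bandwidth` function below, `client_reserve` is an assumed tuning parameter, and bandwidth is in arbitrary units such as MB/s.

```python
from __future__ import annotations


def allocate_bandwidth(
    total: float,
    client_demand: float,
    rebuild_demand: float,
    client_reserve: float = 0.7,
) -> tuple[float, float]:
    """Split one scheduling interval of bandwidth; returns (client, rebuild).

    Hypothetical policy: clients are guaranteed `client_reserve` of the total,
    the rebuild is guaranteed the rest, and either side may borrow whatever
    the other leaves unused.
    """
    if not 0.0 <= client_reserve <= 1.0:
        raise ValueError("client_reserve must be between 0 and 1")
    rebuild_floor = total - total * client_reserve
    # Clients get what they ask for, minus the part of the rebuild's
    # guaranteed floor that the rebuild actually needs right now.
    client = min(client_demand, total - min(rebuild_demand, rebuild_floor))
    # The rebuild takes whatever remains, up to its own demand.
    rebuild = min(rebuild_demand, total - client)
    return client, rebuild


# Busy cluster: clients keep their reserved share and the rebuild still progresses.
print(allocate_bandwidth(1000, client_demand=900, rebuild_demand=1000))
# Quiet cluster: the rebuild borrows the idle client bandwidth and finishes sooner.
print(allocate_bandwidth(1000, client_demand=200, rebuild_demand=1000))
```

Because the reservation adapts to actual demand rather than being carved out permanently, a policy like this avoids sizing the whole system for the worst case of full client load during a rebuild.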

In addition, enhanced real-time analytics give organizations greater visibility into their storage, including capacity, snapshot and metadata usage.
