More and more datacenter networking runs on software-defined networking (SDN) white boxes rather than on proprietary black-box gear controlled by a single supplier. Separating the hardware from the software lets each evolve independently, and lets network devices be tuned to do just the jobs that are necessary and no more.

SDN works by separating the control plane from the data plane: a centralized, software-based control plane directs the data plane in the networking hardware and manages the network as a whole. Centralized control software makes it easy to provision, manage, and update many physical and virtual switches, instead of embedding a controller in every switch. The trend has prompted many of the big names in networking to build platforms that layer SDN on top of their existing hardware, integrating that hardware with software layers through proprietary network controllers. Their marketing message is that they deliver a turnkey package that centralizes management and security.

Such a setup may look attractive, and may seem low risk if you already run that vendor's networking kit and your engineers know its platforms, but it locks you in to that vendor. That lock-in comes at a substantial price: proprietary controllers start in the tens of thousands of dollars and quickly reach hundreds of thousands at any kind of scale.

Fortunately, there is a move towards a more open form of SDN that employs standard protocols and doesn’t lock you into a single vendor.

The Future Of SDN Is Open

Two protocols underpin this approach: Virtual Extensible LAN (VXLAN), which creates network overlays, and Ethernet Virtual Private Network (EVPN), which provides the controller function. Both are documented by the Internet Engineering Task Force (IETF): VXLAN in RFC 7348, and EVPN in RFC 7432, with RFC 8365 describing how EVPN serves as the control plane for overlays such as VXLAN.

How do they work? VXLAN encapsulates Layer 2 Ethernet frames in Layer 4 UDP datagrams to create an overlay: a virtual network atop the physical infrastructure that carries traffic for a specific function or service. Deploying a new service therefore does not require reconfiguring the physical network, and multiple Layer 2 networks can coexist on top of one physical network.
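To make the encapsulation step concrete, here is a minimal Python sketch of the 8-byte VXLAN header that RFC 7348 places in front of the original Ethernet frame: a flags byte with the "I" bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. The function names are illustrative only, and the sketch assumes the outer IP and UDP headers (UDP destination port 4789) are added by the sending host's normal network stack.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (outer header, not built here)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid

def vxlan_header(vni: int) -> bytes:
    """Pack flags(8) | reserved(24) | VNI(24) | reserved(8) in network byte order."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, the remaining 24 bits reserved (zero).
    # Second 32-bit word: VNI in the top 24 bits, the low 8 bits reserved (zero).
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Return the UDP payload a VTEP sends: the VXLAN header followed by the untouched L2 frame."""
    return vxlan_header(vni) + inner_frame

# Usage: wrap a toy 14-byte Ethernet header (broadcast dst, made-up src, ARP EtherType).
inner = bytes.fromhex("ffffffffffff" "020000000001" "0806")
payload = encapsulate(inner, vni=5001)
print(payload[:8].hex())  # 0800000000138900
print(len(payload))       # 22
```

The inner frame is carried unchanged, which is why the physical network only ever sees ordinary UDP traffic between tunnel endpoints.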

VXLAN is popular because it scales: its 24-bit VXLAN Network Identifier (VNI) supports more than 16 million virtual networks, versus just 4,094 for the standard Layer 2 VLAN protocol (IEEE 802.1Q) with its 12-bit VLAN ID. Each VXLAN segment can also span an intervening Layer 3 (routed) network, whereas standard VLANs are typically confined to a single Layer 2 domain. Both properties make VXLAN particularly suitable for running services in complex and expanding datacenter environments.
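The gap between the two figures comes straight from the width of each identifier field: 802.1Q carries a 12-bit VLAN ID (with values 0 and 4095 reserved), while VXLAN carries a 24-bit VNI. A quick Python check of the arithmetic:

```python
VLAN_ID_BITS = 12  # IEEE 802.1Q VLAN identifier field
VNI_BITS = 24      # VXLAN Network Identifier field (RFC 7348)

usable_vlans = 2**VLAN_ID_BITS - 2  # IDs 0 and 4095 are reserved
vxlan_segments = 2**VNI_BITS        # size of the raw 24-bit identifier space

print(usable_vlans)                    # 4094
print(vxlan_segments)                  # 16777216
print(vxlan_segments // usable_vlans)  # 4098
```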

EVPN is technically an extension of the Border Gateway Protocol (BGP), the protocol used to route traffic between networks across the internet. EVPN was originally designed for use with Multiprotocol Label Switching (MPLS), to replace Virtual Private LAN Service (VPLS) in service provider networks, but the IETF has since adapted EVPN as the control plane for network overlays inside datacenters.

Traditionally, BGP carried Layer 3 (IP prefix) reachability, while EVPN also works at Layer 2 (MAC addresses). EVPN uses BGP's route-advertisement mechanism to implement MAC address learning, so that the VXLAN Tunnel Endpoints (VTEPs) can discover where to forward overlay packets so they reach the correct VTEP for their ultimate destination. A VTEP is typically the virtual switch in a server hosting virtual machines, but it can also be a physical Ethernet switch capable of handling VXLANs and terminating VTEPs. Such a switch is critical when bridging between the virtualized and non-virtualized parts of the datacenter.
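The MAC learning described above can be pictured as a per-VTEP table that is filled from BGP EVPN advertisements instead of from flooded data-plane traffic. The Python sketch below is a simplified model, not a BGP implementation: it assumes the VTEP has already received decoded EVPN routes, using RFC 7432's Type 2 (MAC/IP Advertisement) routes to map a MAC in a given VNI to the advertising VTEP, and Type 3 (Inclusive Multicast Ethernet Tag) routes to build the list of VTEPs that receive broadcast and unknown-destination traffic. All class and method names are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass(frozen=True)
class MacIpRoute:
    """Simplified EVPN Type 2 route: MAC `mac` in VNI `vni` is reachable via VTEP `vtep_ip`."""
    vni: int
    mac: str
    vtep_ip: str

@dataclass
class VtepTable:
    local_vtep: str
    macs: dict[tuple[int, str], str] = field(default_factory=dict)  # (vni, mac) -> remote VTEP
    flood: dict[int, set[str]] = field(default_factory=dict)         # vni -> VTEPs for BUM traffic

    def on_type2(self, route: MacIpRoute, withdrawn: bool = False) -> None:
        key = (route.vni, route.mac.lower())
        if withdrawn:
            self.macs.pop(key, None)
        elif route.vtep_ip != self.local_vtep:  # ignore our own advertisements
            self.macs[key] = route.vtep_ip

    def on_type3(self, vni: int, vtep_ip: str) -> None:
        if vtep_ip != self.local_vtep:
            self.flood.setdefault(vni, set()).add(vtep_ip)

    def next_hops(self, vni: int, dst_mac: str) -> list[str]:
        """VTEP(s) to tunnel a frame to: one for a known MAC, else every VTEP in the VNI."""
        vtep = self.macs.get((vni, dst_mac.lower()))
        if vtep is not None:
            return [vtep]
        return sorted(self.flood.get(vni, set()))

# Usage with made-up addresses: two remote VTEPs join VNI 5001, one advertises a MAC.
table = VtepTable(local_vtep="10.0.0.1")
table.on_type3(5001, "10.0.0.2")
table.on_type3(5001, "10.0.0.3")
table.on_type2(MacIpRoute(5001, "02:00:00:00:00:0A", "10.0.0.2"))
print(table.next_hops(5001, "02:00:00:00:00:0a"))  # ['10.0.0.2']
print(table.next_hops(5001, "02:00:00:00:00:ff"))  # ['10.0.0.2', '10.0.0.3']
```

Because every VTEP builds this table from the same BGP updates, no central controller has to push forwarding state to each endpoint.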

BGP has a proven record of scaling, and extending it with EVPN allows IP and MAC addresses to be propagated without a centralized (and often expensive) controller. Hence this architecture is sometimes known as “controllerless EVPN.”

Enter Controllerless Cloud Fabric Switches

This all sounds great, but there is a catch. Overlays are simple in the sense that network nodes only need VTEP support to forward packets, yet they add complexity when it comes to managing the overlays themselves. VXLAN and EVPN also come with certain trade-offs.

Running VTEPs in the network obviously requires switches that support VXLAN, but many switches that support VXLAN and EVPN do so for only a small number of ports and server racks. On many switches, enabling these capabilities also requires a high-priced software license.

One way around this is to deploy enhanced Ethernet switches, designed for Ethernet Cloud Fabrics (ECF), that handle EVPN and VXLAN with BGP as the control plane. Properly designed ECF switches offer scalable support for many thousands of virtual endpoints and hundreds of racks, all running at line rate at 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec on any and all of their ports. And cloud fabric switches should include these features in the base software package, without requiring additional expensive licenses.

These ECF switches provide a number of advantages:

- Switches maintain low latency and minimize both the packet loss caused by microbursts and the uneven performance that results from a poorly designed buffer architecture.

- These switches are “controllerless,” so you remove the cost and possible lock-in associated with a proprietary network controller.

- Switches operate in tandem with SmartNICs or Intelligent NICs for extra VXLAN performance and scalability.

- Implementing the VTEP is simpler and more flexible because it can be done in the server NICs or in the switch hardware – whichever makes sense for the type of server and the network architecture.

- They allow for the possibility of a single dashboard view of your full complement of virtualization, compute and network infrastructure elements.

Software-Defined With Hardware Acceleration

But there is another conundrum: even if the switches support VXLAN and VTEPs, the network interface controller (NIC) in each server that uses a tunneling overlay such as VXLAN must be able to handle the new packet header formats in its silicon. If it can't, the host CPU ends up doing all the overlay network processing, soaking up CPU cores and hurting application performance.
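To give a sense of the per-packet work involved, the Python sketch below shows roughly the checks a host CPU has to perform on every received frame when the NIC cannot parse VXLAN itself: confirm an IPv4 outer header, confirm UDP with destination port 4789, validate the VXLAN flags, then strip the outer headers (50 bytes for an untagged IPv4 outer frame without IP options). It is deliberately simplified (no VLAN tags, no IPv6 outer headers, no checksum validation), and the function and helper names are hypothetical.

```python
import struct
from typing import Optional, Tuple

ETH_HDR, UDP_HDR, VXLAN_HDR = 14, 8, 8  # untagged outer Ethernet, UDP and VXLAN header sizes
VXLAN_PORT = 4789

def software_decap(frame: bytes) -> Optional[Tuple[int, bytes]]:
    """Return (vni, inner_frame) for an IPv4/UDP/VXLAN frame, else None."""
    if len(frame) < ETH_HDR + 20:
        return None
    if struct.unpack_from("!H", frame, 12)[0] != 0x0800:  # outer EtherType must be IPv4
        return None
    ihl = (frame[ETH_HDR] & 0x0F) * 4                     # IPv4 header length in bytes
    if ihl < 20 or frame[ETH_HDR + 9] != 17:              # protocol 17 = UDP
        return None
    udp = ETH_HDR + ihl
    if len(frame) < udp + UDP_HDR + VXLAN_HDR:
        return None
    if struct.unpack_from("!H", frame, udp + 2)[0] != VXLAN_PORT:  # UDP destination port
        return None
    flags, vni_word = struct.unpack_from("!II", frame, udp + UDP_HDR)
    if not (flags >> 24) & 0x08:                          # "I" flag must be set
        return None
    return vni_word >> 8, frame[udp + UDP_HDR + VXLAN_HDR:]

def build_test_frame(vni: int, inner: bytes) -> bytes:
    """Hypothetical helper: minimal outer Ethernet/IPv4/UDP/VXLAN frame (lengths, checksums zero)."""
    eth = b"\x02" * 6 + b"\x04" * 6 + struct.pack("!H", 0x0800)
    ipv4 = bytes([0x45, 0]) + bytes(7) + bytes([17]) + bytes(10)  # version 4, IHL 5, protocol UDP
    udp = struct.pack("!HHHH", 49152, VXLAN_PORT, 0, 0)
    vxlan = struct.pack("!II", 0x08 << 24, vni << 8)
    return eth + ipv4 + udp + vxlan + inner

inner = bytes.fromhex("ffffffffffff" "020000000001" "0806")
frame = build_test_frame(5001, inner)
print(len(frame) - len(inner))  # 50
print(software_decap(frame)[0])  # 5001
```

A NIC that understands the VXLAN header can do this classification and stripping in silicon, so the host only ever sees the inner frame.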

This has led many hyperscale internet companies to integrate on-board hardware accelerators into their servers' NICs, offloading SDN functions such as VXLAN encapsulation/decapsulation, RDMA, and Open vSwitch (OVS) processing from the hosts to free up CPU cores.

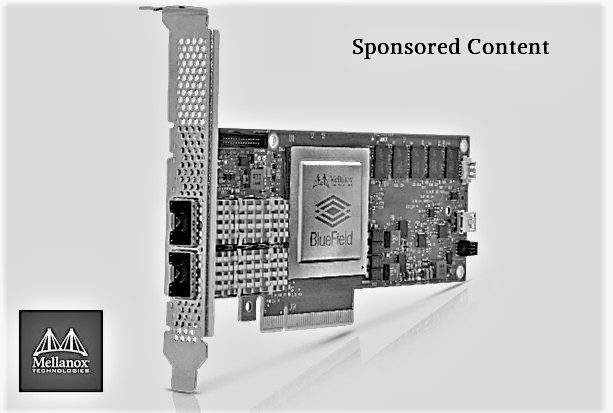

These so-called SmartNICs are now appearing as products for the datacenter, but vendors tout different ways of implementing them. These typically break down into three approaches: the SmartNIC uses a custom-designed Application Specific Integrated Circuit (ASIC), uses an FPGA, or adds CPU cores (typically Arm-based) to the NIC to perform the acceleration.

The ASIC and FPGA approaches are the most common today, and both are said to offer good performance for network offloads. FPGAs, however, are typically costly and relatively difficult to program, as they generally rely on complex, low-level hardware description languages such as Verilog. As a result, they are often used to optimize specific functions that are unlikely to change often.

Employing an ASIC offers a great balance of price and performance, along with data plane programmability through open APIs (for example, Linux TC/Flower). The core ASIC functionality is not reprogrammable, but in properly designed ASIC-based intelligent NICs the data pipelines can be modified quickly by an external network controller, and new functionality and accelerations can often be added through firmware updates. With the ASIC approach, control plane processing still resides on the host CPU.
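The idea of a fixed pipeline whose rules are reprogrammed from outside can be modeled as a priority-ordered match-action table: each rule matches on a few header fields and names an action, and anything that matches no rule falls back to the host CPU. The Python sketch below illustrates that general model only; the field names, action names, and priority convention are hypothetical and are not the Linux TC/Flower API or any vendor's firmware interface.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Rule:
    priority: int             # lower number is evaluated first (a convention of this sketch)
    match: dict[str, object]  # header field -> required value; absent fields are wildcards
    action: str               # hypothetical action names, e.g. "decap_vxlan", "drop"

class MatchActionTable:
    """Toy model of an offload pipeline whose rules are installed by an external controller."""

    def __init__(self, miss_action: str = "to_host") -> None:
        self.rules: list[Rule] = []
        self.miss_action = miss_action  # unmatched packets take the slow path to the host CPU

    def install(self, rule: Rule) -> None:
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority)

    def remove(self, priority: int) -> None:
        self.rules = [r for r in self.rules if r.priority != priority]

    def classify(self, pkt: dict[str, object]) -> str:
        for rule in self.rules:
            if all(pkt.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return self.miss_action

nic = MatchActionTable()
nic.install(Rule(10, {"ip_proto": 17, "udp_dst": 4789, "vni": 5001}, "decap_vxlan"))
nic.install(Rule(20, {"ip_proto": 17, "udp_dst": 4789}, "drop"))
print(nic.classify({"ip_proto": 17, "udp_dst": 4789, "vni": 5001}))  # decap_vxlan
print(nic.classify({"ip_proto": 17, "udp_dst": 4789, "vni": 7}))     # drop
print(nic.classify({"ip_proto": 6, "tcp_dst": 443}))                 # to_host
```

The pipeline logic in `classify` never changes; only the rule list does, which mirrors the split between fixed ASIC functionality and controller-programmable data paths.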

The third method is to turn the NIC into a system-on-chip (SoC) with one or more CPUs integrated alongside the standard network interface logic. This is by far the most flexible and open approach, as it allows the use of standard operating systems such as Linux and lets functions be implemented in familiar programming languages, so the code can be updated as often as required. A fundamental advantage of these SoC-based SmartNICs is that the control plane can also run on the integrated processors, in an entirely different security domain from the applications running on the host CPU. This isolation from untrusted host applications is a key capability for delivering a trusted bare-metal cloud.

In fact, some go as far as to claim that the programmable, CPU-augmented NIC is the only “true” SmartNIC, relegating those built on FPGAs or ASICs to being only slightly more intelligent than standard NICs. Purpose-built, programmable packet processing is central to the flexibility of SmartNICs and Intelligent NICs, allowing them to offer more versatile acceleration of SDN and other network-centric applications, and to take on other tasks such as security filtering or functions that drive software-defined storage.

Walk Softly And Carry A SmartNIC

SDNs are growing in popularity, but don’t take that to mean they must be simple. SDNs are far from trivial, and you should plan carefully, because the technology choices you make at the start of your journey will have profound implications down the road.

Proprietary systems may seem like a safe building block, but they are typically costly and, with a centralized SDN controller tightly coupled to the switching and routing elements, do not mesh well with products from other suppliers. You will have limited your future options.

Choosing an open architecture for SDN using tools such as VXLAN, EVPN, SmartNICs, and Intelligent NICs means you can keep your network options open without taking that hit to your pocketbook or your flexibility.

The one caveat? Consider carefully where the processing burden of those overlay networks will fall. Hardware acceleration in appropriate switches and in SmartNICs or intelligent NICs can ensure your servers are left doing what they are meant to do: run the workloads.
