The commercial realization of artificial intelligence has companies scrambling to develop the next big hardware breakthrough for this multibillion-dollar market. While research into areas like neuromorphic and quantum computing has captured most of the attention for future AI systems, the idea of using optical processors has slowly been gaining traction.

Compared to electronics, optical technology offers two distinct advantages, lower power and lower latency, both of particular significance to AI. Those advantages stem from the fact that photons are inherently faster – as in speed-of-light faster – and more energy-efficient than electrons.

In fact, the technology holds enough promise that Intel is underwriting a research effort that could bring optical AI computing to market, taking advantage of the chipmaker’s expertise in silicon photonics, semiconductor manufacturing and deep learning hardware design. The research is being led by Casimir Wierzynski, Senior Director, Office of the CTO, in Intel’s Artificial Intelligence Product Group. While the effort is still in its early stages, Wierzynski and collaborators at the University of California, Berkeley, have published a paper detailing some of their recent work.

In a nutshell, the research focuses on optical neural networks (ONNs) and on how different circuit designs implemented in silicon photonics can minimize the computational imprecision caused by fabrication variations. (Computational photonics is analog in nature and is therefore sensitive to imperfections in the circuitry.) The researchers tested two ONN designs on a standard deep learning benchmark: handwritten digit recognition using the MNIST dataset.

“You can think of a device like this as an optical TPU,” Wierzynski told us, referring to the Tensor Processing Unit that Google custom-built for deep learning. While the TPU is all digital, the ONNs perform the underlying matrix operations of neural network processing with photonics, leaving the rest of the computation to electronics.
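To make that division of labor concrete, here is a minimal Python sketch of one hybrid layer. It assumes the optical part can be idealized as a plain matrix-vector product, with optional Gaussian noise standing in for analog imprecision, and that the nonlinearity (here a ReLU) is applied electronically after detection. The names `optical_matvec` and `hybrid_layer` and the noise model are hypothetical illustrations, not Intel’s design.

```python
import random

def optical_matvec(weights, x, noise_std=0.0, rng=None):
    """Hypothetical stand-in for the photonic part: an analog matrix-vector product.

    noise_std adds Gaussian noise to each output, a simplified (assumed)
    model of analog imprecision.
    """
    rng = rng or random.Random(0)
    out = []
    for row in weights:
        acc = sum(w * xi for w, xi in zip(row, x))
        if noise_std > 0:
            acc += rng.gauss(0.0, noise_std)
        out.append(acc)
    return out

def relu(values):
    """Electronic part: the nonlinearity applied after photodetection."""
    return [max(0.0, v) for v in values]

def hybrid_layer(weights, x, noise_std=0.0, rng=None):
    """One layer: optical linear algebra followed by an electronic nonlinearity."""
    return relu(optical_matvec(weights, x, noise_std, rng))

W = [[1.0, -2.0], [0.5, 0.5]]
x = [3.0, 1.0]
print(hybrid_layer(W, x))                  # ideal optics
print(hybrid_layer(W, x, noise_std=0.05))  # optics with analog noise
```

Only the matrix-vector product is a candidate for photonics in this picture; everything after detection stays digital.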

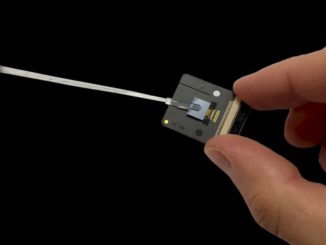

Unlike free-space optical computing, where lasers are shot through diffractive optics to compute Fourier transforms, silicon photonics works at the level of optical circuitry. That means it can exploit the kind of scalability inherent in modern semiconductor manufacturing. Intel has expertise in both silicon photonics and semiconductor fabrication, which makes optical neural networks an attractive bet for the chipmaker. “We know how to miniaturize optical circuits,” explained Wierzynski. “We’re very good at that.”

The research described in the paper is based on simulations of ONNs rather than on any hardware implementation. With that caveat, one ONN design achieved accuracies of around 95 percent on the handwriting recognition test under ideal conditions, and it maintained a high level of accuracy even as noise was added to the simulation, with the noise representing the imprecision present in circuit fabrication and calibration. Another ONN design achieved an even higher accuracy of 98 percent, but its performance degraded rapidly as noise was introduced.
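A simplified way to picture this kind of noise experiment is to perturb every weight of a linear classifier layer by a random relative error and count how often the predicted class survives. The Python sketch below uses random weights and inputs rather than MNIST, and its multiplicative Gaussian weight noise is an assumed stand-in for the fabrication and calibration imprecision described above; the function names are hypothetical.

```python
import random

def matvec(weights, x):
    """Plain matrix-vector product (the part an ONN would compute optically)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def decision_agreement(sigma, n_out=10, n_in=16, trials=200, seed=0):
    """Fraction of random inputs whose predicted class survives weight noise.

    sigma is the relative standard deviation of the (assumed) weight error.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    agree = 0
    for _ in range(trials):
        x = [rng.random() for _ in range(n_in)]
        # Each trial draws a freshly "fabricated" layer with independent errors.
        noisy = [[w * (1.0 + sigma * rng.gauss(0.0, 1.0)) for w in row] for row in weights]
        if argmax(matvec(weights, x)) == argmax(matvec(noisy, x)):
            agree += 1
    return agree / trials

for sigma in (0.0, 0.01, 0.05, 0.2, 1.0):
    print(f"sigma={sigma:.2f}  agreement={decision_agreement(sigma):.3f}")
```

A design that is “robust to noise” in this sense keeps the agreement near 1.0 as sigma grows; one that degrades rapidly sees it fall off quickly.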

Based on previous research with ONNs, Wierzynski noted that these devices can achieve inference latencies in the picosecond range, far lower than anything you can squeeze out of a GPU, CPU or even an FPGA, whose latencies are typically in millisecond territory.
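A back-of-the-envelope check shows why picoseconds are plausible for the optical portion. The sketch assumes a 1 cm optical path and a group index of about 4, roughly typical of silicon waveguides; both values are illustrative assumptions, and the estimate ignores modulators, photodetectors and data conversion.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum

def propagation_time_s(path_length_m, group_index):
    """Time for light to traverse a waveguide of the given length."""
    return path_length_m * group_index / SPEED_OF_LIGHT_M_S

# Assumed values for illustration only.
t_optical = propagation_time_s(path_length_m=0.01, group_index=4.0)
t_digital = 1e-3  # a millisecond-class digital inference latency

print(f"optical pass: {t_optical * 1e12:.0f} ps")
print(f"digital / optical latency ratio: {t_digital / t_optical:.1e}")
```

Under these assumptions the light crosses the chip in about 133 ps, several million times faster than a 1 ms digital inference; a real end-to-end figure would be dominated by the surrounding electronics.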

Likewise, silicon photonics-based devices promise significantly better energy efficiency than their electronic counterparts. “In terms of energetics, we’re looking for something like one or two orders of magnitude better at compute-per-watt,” Wierzynski said.

Better yet, the energy efficiency of ONNs improves as the number of circuits increases, since some of the power overhead can be amortized across the whole device. That is because the lasers that drive the photons through the circuits carry a fixed power cost, while the optical computation itself is essentially free regardless of how many circuits those photons must traverse.
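The amortization argument can be sketched with an idealized model: a fixed laser power drives an N×N optical matrix, and each pass through it performs N² multiply-accumulates (MACs). The operating point below (100 mW of laser power, one billion input vectors per second) is hypothetical, and the model deliberately ignores modulators, detectors and converters.

```python
def energy_per_mac_j(laser_power_w, n, rate_hz):
    """Laser energy per multiply-accumulate for an n x n optical matrix.

    Idealized: the laser power is fixed, each pass performs n*n MACs, and
    all other power (modulators, detectors, converters) is ignored.
    """
    macs_per_second = n * n * rate_hz
    return laser_power_w / macs_per_second

# Hypothetical operating point: 100 mW of laser power, 1e9 vectors per second.
for n in (16, 64, 256):
    e = energy_per_mac_j(laser_power_w=0.1, n=n, rate_hz=1e9)
    print(f"N={n:4d}: {e * 1e15:.2f} fJ per MAC")
```

In this model every 4× increase in N cuts the laser energy per MAC by 16×. In practice the laser power likely has to grow with N to keep enough light reaching each detector, so real-world gains would be smaller.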

The building block of the device is the Mach-Zehnder interferometer (MZI), which is built from waveguides on the silicon substrate. Where two waveguides are brought close together, the light in them couples and interferes in a way that produces the analog equivalent of a 2×2 matrix multiplication, with tunable phase shifters setting the values of that matrix. The trick is to arrange a number of these MZIs so that a much larger matrix multiplication can be performed. The Intel research focused on arrangements that minimize the effects of fabrication variations, thereby improving the precision of the optical computations.
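The textbook model of an MZI can be written in a few lines of Python. This sketch assumes ideal 50:50 directional couplers and a single phase shifter on the upper arm before and between them, then chains MZIs on neighboring waveguides into a generic rectangular mesh of N(N−1)/2 devices. That layout is an illustrative assumption, not necessarily one of the arrangements Intel studied. A mesh like this implements a unitary matrix; a general weight matrix is commonly factored into unitaries plus a diagonal scaling (for example via SVD). The `phase_noise` parameter is a crude, hypothetical stand-in for fabrication and calibration error.

```python
import cmath
import math
import random

def matmul2(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[a[i][0] * b[0][j] + a[i][1] * b[1][j] for j in range(2)] for i in range(2)]

S = 1 / math.sqrt(2)
COUPLER = [[S, 1j * S], [1j * S, S]]  # ideal 50:50 directional coupler

def phase(theta):
    """Phase shifter acting on the upper waveguide only."""
    return [[cmath.exp(1j * theta), 0], [0, 1]]

def mzi(theta, phi):
    """Transfer matrix: phase phi, coupler, internal phase theta, coupler.

    Matrices are multiplied right to left, the order in which light meets them.
    """
    return matmul2(COUPLER, matmul2(phase(theta), matmul2(COUPLER, phase(phi))))

def apply_mzi(field, k, u):
    """Apply a 2x2 transfer matrix u to waveguides k and k+1 of an N-mode field."""
    a, b = field[k], field[k + 1]
    field[k] = u[0][0] * a + u[0][1] * b
    field[k + 1] = u[1][0] * a + u[1][1] * b

def mesh(field, phases, phase_noise=0.0, seed=0):
    """Propagate a field through a rectangular mesh of N(N-1)/2 MZIs.

    phases: one (theta, phi) pair per MZI, consumed in order.
    phase_noise: std. dev. in radians of Gaussian error added to every phase.
    """
    rng = random.Random(seed)
    field = list(field)
    n = len(field)
    settings = iter(phases)
    for layer in range(n):
        for k in range(layer % 2, n - 1, 2):
            theta, phi = next(settings)
            u = mzi(theta + rng.gauss(0, phase_noise), phi + rng.gauss(0, phase_noise))
            apply_mzi(field, k, u)
    return field

def power(field):
    """Total optical power across all waveguides."""
    return sum(abs(c) ** 2 for c in field)

n = 4
rng = random.Random(1)
phases = [(rng.uniform(0, 2 * math.pi), rng.uniform(0, 2 * math.pi))
          for _ in range(n * (n - 1) // 2)]
x = [1.0, 0.5, 0.0, 0.25]
ideal = mesh(x, phases)
noisy = mesh(x, phases, phase_noise=0.05)
print(power(x), power(ideal), power(noisy))  # equal up to rounding: the mesh is lossless
print(max(abs(a - b) for a, b in zip(ideal, noisy)))  # output error from phase errors
```

With both phases at zero an MZI routes all light to the opposite waveguide, and with θ = π it passes light straight through; intermediate settings split it, which is what makes the device a programmable 2×2 matrix.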

Precision is the sticking point for these devices, which makes AI inference, rather than training, the logical target for initial work. But if fabrication imperfections could be circumvented to a greater degree, AI training, or even higher-precision applications like HPC simulations, could be tackled as well.
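One rough way to reason about the precision problem is to translate an analog error level into an equivalent number of bits. The sketch below uses the rule of thumb that a quantity with relative RMS error σ carries about log₂(1/σ) bits of resolution; this is an approximation chosen for illustration, not a formal effective-number-of-bits definition. For comparison, inference is commonly run at 8-bit integer precision, while training typically relies on 16- or 32-bit floating point.

```python
import math

def effective_bits(relative_rms_error):
    """Rule of thumb: bits of resolution ~ log2(1 / relative error)."""
    return math.log2(1.0 / relative_rms_error)

def max_error_for_bits(bits):
    """Largest relative error compatible with the given resolution."""
    return 2.0 ** -bits

for err in (0.05, 0.01, 0.001):
    print(f"{err:.3f} relative error ~ {effective_bits(err):.1f} bits")

print(f"8-bit inference needs roughly <= {max_error_for_bits(8):.4f} relative error")
```

Even a 1 percent analog error leaves fewer than 7 effective bits by this measure, which illustrates why inference is the more realistic first target.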

But we’re getting ahead of ourselves. At this point, only simulated ONN designs have been revealed. Any hardware implementations, if they exist, remain behind closed doors at Intel. But given the chipmaker’s expertise and interest in silicon photonics for data communications, that is almost certainly where this research is headed. In fact, we wouldn’t be surprised if prototype ONN hardware is already sitting in an Intel lab somewhere.

The combination of energy efficiency, near real-time latencies and scalability could make this technology suitable for a variety of AI environments, from hyperscale clouds to IoT edge devices. But first the technology will have to prove itself generally viable, and, if it does, show which application domains make the most sense for it. “Whether it’s in a car or a datacenter or a phone, we have to see where the technology goes,” said Wierzynski.
