The diverse set of applications and algorithms that make up AI in its many guises has created a need for an equally diverse set of hardware to run it. At the 2019 Stanford HPC Conference, Microway’s Brett Newman offered some general guidelines on how to co-design such systems based on the nature of the software stack.

Although Newman’s formal title is vice president of marketing and customer engagement at Microway, a supplier of HPC and now AI iron, he is part of a technology team that specs out high performance iron for buyers. The team, says Newman, is full of people that “spend all day designing and delivering a huge quantity, and more importantly, a huge diversity of HPC and AI systems.” Over the years, Newman has been involved in hundreds of deployments of these systems, working with the customers to figure out what kind of hardware they need to run their application workloads. He also did a stint at IBM, where he worked in the Power Systems division, which developed Big Blue’s PowerAI machine learning stack and which obviously sells GPU-accelerated Power9 systems like those used in the “Summit” supercomputer at Oak Ridge National Laboratory.

Given his affiliation, Newman’s Stanford presentation was surprisingly light on promoting Microway, an HPC system integrator that’s been around for 35 years. The company actually jumped on the GPU bandwagon early, starting in 2007, which was the same year Nvidia released its first version of the CUDA runtime and software development toolkit.

The AI market came along much later, of course, but Microway was quick to recognize that GPU-based systems would soon have the benefit of a much larger market. “We’re hardly the only vendor out there,” Newman admits. “But we’re one of the more interesting shops when it comes to AI hardware solutions.”

In his presentation at the Stanford conference, Newman talked about the basic criteria that novice AI customers need to consider when selecting a system to run their workloads, and how these criteria map to the hardware. In doing this, he provides something of a procedural recipe for users to follow as they consider their various choices. Note that the choices described here all revolve around GPUs supplied by Nvidia, which, as we all know by now, has become the dominant player in machine learning training.

Putting vendor selection aside for the moment, Newman thinks there are two overarching questions that users first have to ask when considering an AI system installation: What is your AI dataset like, and what are your workloads and algorithms like?

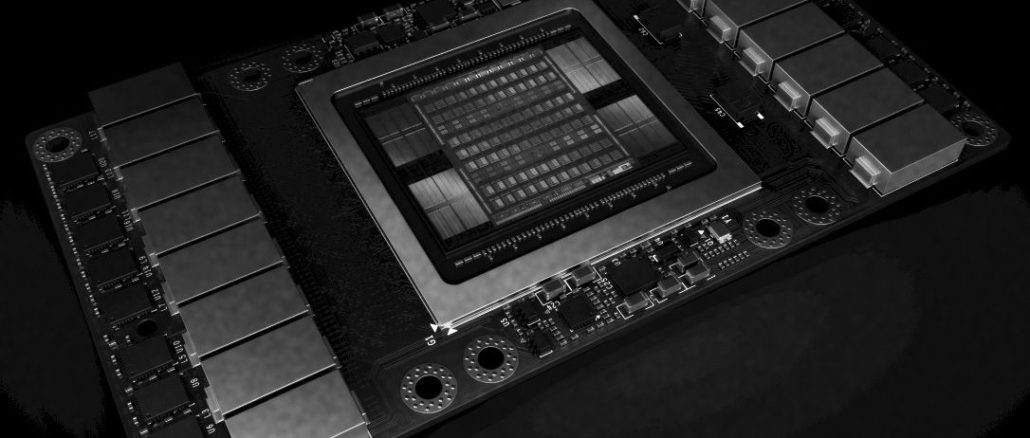

The nature of the dataset becomes key to the selection of GPUs, especially with regard to memory capacity. Will the data fit entirely into GPU memory, and if so, which GPU or GPUs will it fit into? (The top-of-the-line Nvidia V100 GPU is offered with either 16 GB or 32 GB of local HBM2 memory, so the choice there is pretty simple.) In most cases, the dataset will be larger than 32 GB, so it will have to be distributed across multiple GPUs in a server or even across a cluster of such servers.

That leads into a secondary consideration of how the data can be split into logical chunks that can fit into a GPU or portion of a GPU, which ends up determining your batch size for training an AI model. You also have to consider the size of your individual data items (assuming you have a more-or-less homogeneous dataset) and how many of these items will fit into a GPU with a given memory capacity.

For example, if you have a 128 GB dataset of 8 GB images, you can use a four-GPU server, with each GPU loaded with four images. That’s a pretty small dataset, but you get the general idea. For training AI models, Newman recommends using the NVLink-enabled Nvidia GPUs. NVLink connects GPUs to each other at speeds of up to 300 GB/sec (if you are using Nvidia’s NVSwitch interconnect) and offers cache coherency and memory atomics, too. So NVLink provides better price/performance than the basic 32 GB/sec PCI-Express 3.0 hookup for GPUs for this kind of work.
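The sizing arithmetic in that example can be written out as a minimal Python sketch. The function name `gpus_needed` and its parameters are hypothetical, and the model is deliberately crude: it assumes a homogeneous dataset and that all GPU memory is available for data, ignoring the space that model weights and activations need in practice.

```python
import math
from typing import Tuple

def gpus_needed(dataset_gb: float, item_gb: float, gpu_mem_gb: float) -> Tuple[int, int]:
    """Return (items_per_gpu, gpu_count) for a dataset of equally sized items.

    Assumption: every byte of GPU memory can hold data, which overstates
    real capacity because weights and activations also live there.
    """
    items_per_gpu = int(gpu_mem_gb // item_gb)
    if items_per_gpu == 0:
        raise ValueError("a single item does not fit in GPU memory")
    total_items = math.ceil(dataset_gb / item_gb)
    gpu_count = math.ceil(total_items / items_per_gpu)
    return items_per_gpu, gpu_count

# The article's example: 128 GB of 8 GB images on 32 GB V100s.
print(gpus_needed(128, 8, 32))  # (4, 4)
# The same dataset on the 16 GB V100 variant.
print(gpus_needed(128, 8, 16))  # (2, 8)
```

With 16 GB cards the same dataset needs eight GPUs holding two images each, which is the kind of trade-off that drives the choice of memory capacity.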

Newman says that on multi-GPU servers with NVLink, you can realize a 10 percent to 20 percent performance dividend on training workloads compared to the same GPUs connected via the slower PCI-Express. Note that NVLink-based V100 GPUs provide about 12 percent more computational capacity than their PCI-Express counterparts (125 versus 112 peak tensor teraflops, respectively), so it’s hard to say how much of the observed performance boost is attributable solely to the higher bandwidth and how much is coming from Volta GPUs with more inherent oomph.
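A back-of-the-envelope calculation shows why the attribution is murky. The sketch below assumes, very crudely, that training throughput scales linearly with peak tensor throughput, which real workloads rarely do. The helper `residual_speedup` is a hypothetical name, not something from Newman's talk.

```python
def residual_speedup(observed: float, compute_ratio: float) -> float:
    """Speedup left over after dividing out the raw compute advantage.

    Assumption: performance scales linearly with peak tensor throughput.
    """
    return observed / compute_ratio

# Peak tensor throughput of the NVLink V100 relative to the PCI-Express V100.
compute_ratio = 125 / 112  # roughly 1.116

for observed in (1.10, 1.20):
    leftover = residual_speedup(observed, compute_ratio)
    print(f"observed {observed:.2f}x -> interconnect share {leftover:.3f}x")
```

Under that assumption, a 10 percent observed gain is fully explained by the faster silicon (the leftover factor falls just below 1.0), while a 20 percent gain leaves roughly 7.5 percent that could be credited to NVLink itself.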

What about those cases where the dataset is too large for any GPU and cannot be logically divided into smaller pieces? One solution, Newman says, is to opt for an IBM Power9/Nvidia Tesla V100 GPU system where the NVLink extends to the Power9 host. That enables the processor’s more capacious primary memory to effectively be used as an extension of the GPU’s memory, thus making room for much larger datasets. Although Newman didn’t mention it, users need to take into account that primary memory is a good deal slower than the HBM2 memory in the GPU package, so there could be a performance hit if the particular algorithm being used is severely memory-bound.

The kind of algorithm or algorithms you are running on the system will also factor into the NVLink versus PCI-Express choice, as well as help determine the ratio of GPUs to CPUs. Newman says if your system is dedicated to training AI models, you should probably select a dense GPU configuration and opt for the NVLink version, given that most of the algorithm is going to run on the coprocessor side and benefit from the cache coherency and speedy inter-GPU communication.

If you want to give special consideration to ease of use for this kind of dedicated training work, Newman says you should consider Nvidia’s shrink-wrapped DGX systems. Not only are the GPUs NVLink-enabled, but Nvidia has already optimized all the common AI frameworks, drivers, and libraries for the DGX hardware. At that point, your main consideration is selecting the platform with the appropriate scale, namely a four-GPU DGX Station, an eight-GPU DGX-1, or a sixteen-GPU DGX-2 (this one has NVSwitch).

On the other hand, if you’re mixing AI with more traditional HPC science or engineering simulations, it makes sense to raise the CPU:GPU ratio for a more balanced system. For this scenario, Newman recommends a dual-socket server with two to four GPU accelerators. Sometimes people knock these systems as lacking performance, but they tend to be more common than dedicated AI machines. “In our experience,” says Newman, “the majority of AI systems that we sell are delivered with this kind of balanced hardware configuration because most people are actually running mixed workloads.”

In the case of mixed workloads where the AI dataset is still too large to fit into a GPU, Newman says you’re going to want a PCI-Express switched architecture with lots of GPUs dangling off a single CPU. These PCI-Express switches provide plenty of bandwidth by virtue of the large number of links they support. Such a solution solves the communication problem to a large extent, but it’s still missing the cache coherency advantage of NVLink.

To optimize application performance for this sort of setup, the user should have the application transfer all the data to the GPU memories first, and then let the number-crunching proceed with the faster memory on the GPU package. That way, you only pay for the PCI-Express performance penalty at application startup, not throughout the computation.
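A simple cost model illustrates the point. It is only a sketch: the function name `transfer_seconds` is hypothetical, the 32 GB/sec figure is the PCI-Express 3.0 number quoted earlier, and the model assumes the data fits in aggregate GPU memory and that transfers do not overlap with computation.

```python
PCIE3_GB_PER_SEC = 32.0  # PCI-Express 3.0 figure quoted in the article

def transfer_seconds(dataset_gb: float, passes: int,
                     bandwidth_gb_per_sec: float = PCIE3_GB_PER_SEC) -> float:
    """Time spent moving the dataset over the bus `passes` times.

    Assumption: transfers run at full link bandwidth and are not hidden
    behind computation.
    """
    return passes * dataset_gb / bandwidth_gb_per_sec

dataset_gb = 128
epochs = 50  # hypothetical number of passes over the data

preload_once = transfer_seconds(dataset_gb, passes=1)
restream_each_epoch = transfer_seconds(dataset_gb, passes=epochs)

print(f"preload once:        {preload_once:.1f} s")         # 4.0 s
print(f"re-stream per epoch: {restream_each_epoch:.1f} s")  # 200.0 s
```

Loading the data once costs a fixed few seconds, whereas streaming it over the bus on every pass multiplies that cost by the number of passes.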

A more challenging situation is when the user doesn’t know much about the dataset or algorithms they’re intending to use. In this case, Newman recommends testing what you have with the resources at hand, even if it’s just a CPU system or a minimally configured GPU one. That enables you to start gathering the kind of information you’ll need to design your final system. Even if you only end up moving to a newer GPU architecture, you’ll be able to realize a fairly significant performance boost.
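The guidance up to this point can be condensed into a small decision function. This is an illustrative simplification rather than anything Newman presented: the function `suggest_platform`, its three yes/no inputs, and the order of the checks are all assumptions made for the sketch. Passing `None` for any input stands for "don't know yet."

```python
from typing import Optional

def suggest_platform(dedicated_training: Optional[bool],
                     fits_in_gpu: Optional[bool],
                     divisible: Optional[bool]) -> str:
    """Map three coarse answers to one of the configurations in the text.

    dedicated_training: True if the system only trains AI models.
    fits_in_gpu:        True if the dataset fits in a single GPU's memory.
    divisible:          True if the dataset can be split into chunks.
    """
    if None in (dedicated_training, fits_in_gpu, divisible):
        return "benchmark on existing hardware first"
    if not fits_in_gpu and not divisible:
        return "Power9 host with NVLink to V100 GPUs"
    if dedicated_training:
        return "dense NVLink GPU server (or DGX)"
    if not fits_in_gpu:
        return "PCI-Express switched server with many GPUs"
    return "dual-socket server with two to four GPUs"

print(suggest_platform(True, False, True))
print(suggest_platform(False, True, True))
print(suggest_platform(None, None, None))
```

A real selection would weigh budget, software stack, and scale as well, so treat the output as a starting point for a conversation with a vendor.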

Newman thinks one of the biggest challenges for novice AI users to overcome, and one that is underappreciated, is finding the relevant expertise on the vendor side. According to him, the key to this is locating someone in the organization who proves they know something about these types of problems and is willing to spend the time with you to help you overcome the hurdles. Often it just amounts to finding the people with the right title, such as technology salesperson or solution engineer.

According to Newman, there are also different strategies when you’re dealing with tier one and tier two vendors. In the former case, you’ll need to find the HPC or AI group inside the organization. “Having worked in a big tier one organization, I can tell you that it is very hard sometimes to find these people,” he says. “However, they do exist, and when you find them, they are very, very good.”

In the tier two space, you want to find companies specializing in AI and HPC that have been around for a while and know the ropes. He says to avoid parts resellers that only offer limited integration and testing. “The worst experience you can have as an end user is sitting there fighting with hardware for six months that was poorly tested or wasn’t integrated at all – and you get no use out of your new shiny AI system.”
